- Creating a 3 node NiFi cluster using Vagrant and V...

Created on 10-03-2016 10:19 PM - edited 08-17-2019 09:24 AM

Objectives

Upon completion of this tutorial, you should have a 3 node cluster of NiFi running on CentOS 7.2 using Vagrant and VirtualBox.

Prerequisites

- You should already have installed VirtualBox 5.1.x. Read more here: VirtualBox

- You should already have installed Vagrant 1.8.6. Read more here: Vagrant

NOTE: Version 1.8.6 fixes an annoying bug with permissions of the authorized_keys file and SSH. I highly recommend you upgrade to 1.8.6.

- You should already have installed the vagrant-vbguest plugin. This plugin will keep the VirtualBox Guest Additions software current as you upgrade your kernel and/or VirtualBox versions. Read more here: Vagrant vbguest plugin

- You should already have installed the vagrant-hostmanager plugin. This plugin will automatically manage the /etc/hosts file on your local Mac and in your virtual machines. Read more here: Vagrant hostmanager plugin

Scope

This tutorial was tested in the following environment:

- Mac OS X 10.11.6 (El Capitan)

- VirtualBox 5.1.6

- Vagrant 1.8.6

- vagrant-vbguest plugin 0.13.0

- vagrant-hostmanager plugin 1.8.5

- Apache NiFi 1.0.0

Steps

Create Vagrant project directory

Before we get started, determine where you want to keep your Vagrant project files. Each Vagrant project should have its own directory. I keep my Vagrant projects in my ~/Development/Vagrant directory. You should also use a helpful name for each Vagrant project directory you create.

$ cd ~/Development/Vagrant
$ mkdir centos7-nifi-cluster
$ cd centos7-nifi-cluster

We will be using a CentOS 7.2 Vagrant box, so I include centos7 in the Vagrant project name to differentiate it from a CentOS 6 project. The project is for NiFi, so I include that in the name. And this project will have a cluster of machines. Thus we have a project directory name of centos7-nifi-cluster.

Create Vagrantfile

The Vagrantfile tells Vagrant how to configure your virtual machines. The name of the file is Vagrantfile and it should be created in your Vagrant project directory. You can choose to copy/paste my Vagrantfile below or you can download it from the attachments to this article.

# -*- mode: ruby -*-
# vi: set ft=ruby :

# Using yaml to load external configuration files
require 'yaml'

Vagrant.configure("2") do |config|
  # Using the hostmanager vagrant plugin to update the host files
  config.hostmanager.enabled = true
  config.hostmanager.manage_host = true
  config.hostmanager.manage_guest = true
  config.hostmanager.ignore_private_ip = false

  # Loading in the list of commands that should be run when the VM is provisioned.
  commands = YAML.load_file('commands.yaml')
  commands.each do |command|
    config.vm.provision :shell, inline: command
  end

  # Loading in the VM configuration information
  servers = YAML.load_file('servers.yaml')
  servers.each do |server|
    config.vm.define server["name"] do |srv|
      srv.vm.box = server["box"] # Specify the name of the Vagrant box file to use
      srv.vm.hostname = server["name"] # Set the hostname of the VM
      srv.vm.network "private_network", ip: server["ip"], adapter: 2 # Add a second adapter with a specified IP
      srv.vm.network :forwarded_port, guest: 22, host: server["port"] # Add a port forwarding rule
      # Remove the loopback entry for the hostname so it resolves to the private IP
      srv.vm.provision :shell, inline: "sed -i'' '/^127.0.0.1\t#{srv.vm.hostname}\t#{srv.vm.hostname}$/d' /etc/hosts"
      srv.vm.provider :virtualbox do |vb|
        vb.name = server["name"] # Name of the VM in VirtualBox
        vb.cpus = server["cpus"] # How many CPUs to allocate to the VM
        vb.memory = server["ram"] # How much memory to allocate to the VM
        vb.customize ["modifyvm", :id, "--cpuexecutioncap", "33"] # Limit the VM to 33% of available CPU
      end
    end
  end
end

Create a servers.yaml file

The servers.yaml file contains the configuration information for our virtual machines. The name of the file is servers.yaml and it should be created in your Vagrant project directory. This file is loaded in from the Vagrantfile. You can choose to copy/paste my servers.yaml file below or you can download it from the attachments to this article.

---
- name: nifi01
  box: bento/centos-7.2
  cpus: 2
  ram: 2048
  ip: 192.168.56.101
  port: 10122
- name: nifi02
  box: bento/centos-7.2
  cpus: 2
  ram: 2048
  ip: 192.168.56.102
  port: 10222
- name: nifi03
  box: bento/centos-7.2
  cpus: 2
  ram: 2048
  ip: 192.168.56.103
  port: 10322

Create a commands.yaml file

The commands.yaml file contains a list of commands that should be run on each virtual machine when it is first provisioned. The name of the file is commands.yaml and it should be created in your Vagrant project directory. This file is loaded in from the Vagrantfile and allows us to automate configuration tasks that would otherwise be tedious and/or repetitive. You can choose to copy/paste my commands.yaml file below or you can download it from the attachments to this article.

- "sudo yum -y install net-tools ntp wget java-1.8.0-openjdk java-1.8.0-openjdk-devel"
- "sudo systemctl enable ntpd && sudo systemctl start ntpd"
- "sudo systemctl disable firewalld && sudo systemctl stop firewalld"
- "sudo sed -i --follow-symlinks 's/^SELINUX=.*/SELINUX=disabled/g' /etc/sysconfig/selinux"

Start the virtual machines

Once you have created the 3 files in your Vagrant project directory, you are ready to start your cluster. Creating the cluster for the first time and starting it every time after that uses the same command:

$ vagrant up

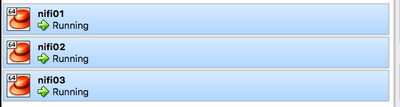

Once the process is complete you should have 3 servers running. You can verify by looking at VirtualBox. You should notice I have 3 virtual machines running called nifi01, nifi02 and nifi03:

Connect to each virtual machine

You can log in to each of the virtual machines via ssh using the vagrant ssh command. You must specify the name of the virtual machine you want to connect to.

$ vagrant ssh nifi01

Verify that you can log in to each of the virtual machines: nifi01, nifi02, and nifi03.

Download Apache NiFi

We need to download the NiFi distribution file so that we can install it on each of our nodes. Instead of downloading it 3 times, we will download it once on our Mac. We'll copy the file to our Vagrant project directory where each of our virtual machines can access the file via the /vagrant mount point.

$ cd ~/Development/Vagrant/centos7-nifi-cluster
$ curl -O http://mirror.cc.columbia.edu/pub/software/apache/nifi/1.0.0/nifi-1.0.0-bin.tar.gz

NOTE: You may want to use a different mirror if you find your download speeds are too slow.

Create nifi user

We will be running NiFi as the nifi user, so we need to create that account on each server.

$ vagrant ssh nifi01
$ sudo useradd nifi -d /home/nifi

Repeat the process for nifi02 and nifi03. I recommend having 3 terminal windows open from this point forward, one for each of the NiFi servers.

Verify Host Files

Now that you are logged into each server, you should verify the /etc/hosts file on each server. You should notice the Vagrant hostmanager plugin has updated the /etc/hosts file with the IP addresses and hostnames of the 3 servers.

NOTE: If you see 127.0.0.1 nifi01 (or nifi02, nifi03) at the top of the /etc/hosts file, delete that line. It will cause issues. The only entry with 127.0.0.1 should be the one with localhost.

UPDATE: If you use the updated Vagrantfile with the "sed" command, the extraneous entry at the top of the hosts file is removed automatically. The following line was added to the Vagrantfile to fix the issue.

srv.vm.provision :shell, inline: "sed -i'' '/^127.0.0.1\t#{srv.vm.hostname}\t#{srv.vm.hostname}$/d' /etc/hosts"
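To check for the stray loopback entry without opening the file, a small grep wrapper is enough. This is only a sketch: the function name `stray_loopback_lines` is hypothetical, and it assumes the nifi01 through nifi03 hostnames used in this tutorial.

```shell
# Hypothetical helper: print any hosts-file line that maps 127.0.0.1
# to one of the NiFi hostnames. Defaults to /etc/hosts.
stray_loopback_lines() {
  hosts_file="${1:-/etc/hosts}"
  # 127.0.0.1, then whitespace, then nifi01, nifi02 or nifi03 as a whole word.
  grep -E '^127\.0\.0\.1[[:space:]]+nifi0[1-3]([[:space:]]|$)' "$hosts_file"
}

# Report whether the current host still needs the manual fix.
if stray_loopback_lines /etc/hosts >/dev/null 2>&1; then
  echo "stray 127.0.0.1 entry found; delete that line"
else
  echo "no stray 127.0.0.1 entry"
fi
```

Run it on each node after provisioning. The localhost entry is not matched, so it is never reported.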

Extract nifi archive

We will be running NiFi from our /opt directory, which is where we will extract the archive. You should already be connected to the server from the previous step.

$ cd /opt
$ sudo tar xvfz /vagrant/nifi-1.0.0-bin.tar.gz
$ sudo chown -R nifi:nifi /opt/nifi-1.0.0

Repeat the process for nifi02 and nifi03.

Edit nifi.properties file

We need to modify the nifi.properties file to set up clustering. The nifi.properties file is the main configuration file and is located at <nifi install>/conf/nifi.properties. In our case it should be located at /opt/nifi-1.0.0/conf/nifi.properties.

$ sudo su - nifi
$ cd /opt/nifi-1.0.0/conf
$ vi nifi.properties

You should edit the following lines in the file on each of the servers:

nifi.web.http.host=nifi01
nifi.state.management.embedded.zookeeper.start=true
nifi.cluster.is.node=true
nifi.cluster.node.address=nifi01
nifi.cluster.node.protocol.port=9999
nifi.zookeeper.connect.string=nifi01:2181,nifi02:2181,nifi03:2181
nifi.remote.input.host=nifi01
nifi.remote.input.secure=false
nifi.remote.input.socket.port=9998

NOTE: Make sure you enter the hostname value that matches the name of the host you are on.

You can use any port for nifi.cluster.node.protocol.port and for nifi.remote.input.socket.port, as long as the port does not conflict with anything else on the server and it matches the other server configurations. The nifi.cluster.node.protocol.port and nifi.remote.input.socket.port should be different values.

If you used vi to edit the file, press the following key sequence to save the file and exit vi:

:wq
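If you would rather script these edits than make them in vi, something like the following could work. It is a sketch, not part of the original setup: `set_prop` and `configure_node` are hypothetical helper names, and the script only rewrites property lines that already exist in the file.

```shell
# Hypothetical helper: set KEY=VALUE on the line that starts with "KEY=".
# Assumes the value contains no '|' or '&' (true for the hostnames and ports here).
set_prop() {
  key="$1"; value="$2"; file="$3"
  set_prop_tmp=$(mktemp)
  # Write through cat so the original file keeps its owner and permissions.
  sed "s|^${key}=.*|${key}=${value}|" "$file" > "$set_prop_tmp" && cat "$set_prop_tmp" > "$file"
  rm -f "$set_prop_tmp"
}

# Apply the cluster settings from this section for one node.
# The node name must match the host whose file is being edited.
configure_node() {
  node="$1"; props="$2"
  set_prop nifi.web.http.host "$node" "$props"
  set_prop nifi.state.management.embedded.zookeeper.start true "$props"
  set_prop nifi.cluster.is.node true "$props"
  set_prop nifi.cluster.node.address "$node" "$props"
  set_prop nifi.cluster.node.protocol.port 9999 "$props"
  set_prop nifi.zookeeper.connect.string nifi01:2181,nifi02:2181,nifi03:2181 "$props"
  set_prop nifi.remote.input.host "$node" "$props"
  set_prop nifi.remote.input.secure false "$props"
  set_prop nifi.remote.input.socket.port 9998 "$props"
}

# Usage on nifi01, as the nifi user:
#   configure_node nifi01 /opt/nifi-1.0.0/conf/nifi.properties
```

On nifi02 and nifi03, pass that node's own hostname as the first argument. The ZooKeeper connect string is the same on all three.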

Edit zookeeper.properties file

We need to modify the zookeeper.properties file on each of the servers. The zookeeper.properties file is the configuration file for ZooKeeper and is located at <nifi install>/conf/zookeeper.properties. In our case it should be located at /opt/nifi-1.0.0/conf/zookeeper.properties. We are providing the list of known ZooKeeper servers.

$ cd /opt/nifi-1.0.0/conf
$ vi zookeeper.properties

Delete the line at the bottom of the file:

server.1=

Add these three lines at the bottom of the file:

server.1=nifi01:2888:3888
server.2=nifi02:2888:3888
server.3=nifi03:2888:3888

If you used vi to edit the file, press the following key sequence to save the file and exit vi:

:wq
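The server lines follow a simple pattern, so they can be generated instead of typed. A minimal sketch, where `zk_server_lines` is a hypothetical helper name and the ports 2888 and 3888 are the ones used above:

```shell
# Hypothetical helper: print one server.N line per node,
# numbering from 1 in the order the nodes are given.
zk_server_lines() {
  i=1
  for zk_node in "$@"; do
    echo "server.$i=$zk_node:2888:3888"
    i=$((i + 1))
  done
}

zk_server_lines nifi01 nifi02 nifi03
```

After deleting the empty server.1= line, the output could be appended to conf/zookeeper.properties with a >> redirect. The node order matters, because the number in each line must match the id that node uses.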

Create zookeeper state directory

Each NiFi server is running an embedded ZooKeeper server. Each ZooKeeper instance needs a unique id, which is stored in the <nifi home>/state/zookeeper/myid file. In our case, that location is /opt/nifi-1.0.0/state/zookeeper/myid. For each of the hosts, you need to create the myid file. The ids for each server are: nifi01 is 1, nifi02 is 2 and nifi03 is 3.

$ cd /opt/nifi-1.0.0
$ mkdir -p state/zookeeper
$ echo 1 > state/zookeeper/myid

Remember that on nifi02 you echo 2 and on nifi03 you echo 3.
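To avoid typing the wrong id on a node, the id can be derived from the hostname. This sketch assumes the nifiNN naming used in this tutorial. `zk_id_for` and `write_myid` are hypothetical helper names.

```shell
# Hypothetical helper: derive the ZooKeeper id from a hostname,
# e.g. nifi02 gives 2. Only meaningful for names of the form nifiNN.
zk_id_for() {
  echo "$1" | sed 's/^nifi0*//'
}

# Create the state directory and write the myid file under a NiFi home directory.
write_myid() {
  zk_host="$1"; nifi_home="$2"
  mkdir -p "$nifi_home/state/zookeeper"
  zk_id_for "$zk_host" > "$nifi_home/state/zookeeper/myid"
}

# Usage on each node, as the nifi user:
#   write_myid "$(hostname -s)" /opt/nifi-1.0.0
```

The same command can then be run unchanged on all three servers.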

Start NiFi

Now we should have everything in place to start NiFi. On each of the three servers run the following command:

$ cd /opt/nifi-1.0.0
$ bin/nifi.sh start

Monitor NiFi logs

You can monitor the NiFi logs by using the tail command:

$ tail -f logs/nifi-app.log

Once the servers connect to the cluster, you should notice log messages similar to this:

2016-09-30 13:22:59,260 INFO [Clustering Tasks Thread-2] org.apache.nifi.cluster.heartbeat Heartbeat created at 2016-09-30 13:22:59,253 and sent to nifi01:9999 at 2016-09-30 13:22:59,260; send took 5 millis
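Instead of watching the tail output, you can count heartbeat messages in the log. This is a rough sketch: `heartbeat_count` is a hypothetical helper, and the search string is taken from the sample message above, so it may need adjusting for other NiFi versions.

```shell
# Hypothetical helper: count heartbeat messages in a NiFi application log.
# Prints 0 when the node has not sent any heartbeats yet.
heartbeat_count() {
  grep -c 'Heartbeat created at' "$1"
}

# Usage from /opt/nifi-1.0.0:
#   heartbeat_count logs/nifi-app.log
```

A count that keeps growing between runs suggests the node is still sending heartbeats to the cluster.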

Access NiFi UI

Now you can access the NiFi web UI. You can log into any of the 3 servers using this URL:

http://nifi01:8080/nifi

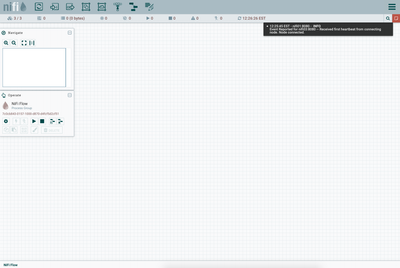

You should see something similar to this:

Notice the cluster indicator in the upper left shows 3/3 which means that all 3 of our nodes are in the cluster. Notice the upper right has a post-it note icon. This icon gives you recent messages and will be colored red. You can see this screenshot showing a message about receiving a heartbeat.

Try accessing nifi02 and nifi03 web interfaces.
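You can also check all three web interfaces from the command line on your Mac. This is a sketch that assumes curl is installed and that the hostnames resolve through the /etc/hosts entries managed by the hostmanager plugin. `nifi_url` and `check_ui_all` are hypothetical helper names.

```shell
# Hypothetical helper: build the UI URL for a node (port 8080 as used in this tutorial).
nifi_url() {
  echo "http://$1:8080/nifi"
}

# Print the HTTP status code returned by each node's UI.
# Needs curl and a running cluster; curl reports 000 when it cannot connect.
check_ui_all() {
  for ui_node in nifi01 nifi02 nifi03; do
    ui_code=$(curl -s -o /dev/null -w '%{http_code}' "$(nifi_url "$ui_node")")
    echo "$ui_node $ui_code"
  done
}

# Usage once all three nodes have started:
#   check_ui_all
```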

Shutdown the cluster

To shut down the cluster, you only need to run the vagrant command:

$ vagrant halt

Restarting the cluster

When you restart the cluster, you will need to log into each server and start NiFi as it is not configured to auto start.
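To avoid logging into each server by hand, you can loop over the nodes with the -c option of vagrant ssh. This is a sketch under some assumptions: the vagrant user can run sudo without a password, java is on the default PATH, and `start_nifi_all` is a hypothetical helper. By default it only prints the commands.

```shell
# Start NiFi on every node after "vagrant up".
# DRY_RUN=1 (the default in this sketch) only prints the commands;
# set DRY_RUN=0 to actually run them from the Vagrant project directory.
NODES="nifi01 nifi02 nifi03"
NIFI_HOME="/opt/nifi-1.0.0"

start_nifi_all() {
  for start_node in $NODES; do
    start_cmd="sudo -u nifi $NIFI_HOME/bin/nifi.sh start"
    if [ "${DRY_RUN:-1}" = "1" ]; then
      echo "vagrant ssh $start_node -c '$start_cmd'"
    else
      vagrant ssh "$start_node" -c "$start_cmd"
    fi
  done
}

start_nifi_all
```

Running the commands as the nifi user through sudo keeps file ownership under /opt/nifi-1.0.0 consistent with the manual steps earlier in the tutorial.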

Review

If you successfully worked through the tutorial, you should have a Vagrant configuration that you can bring up at any time by using vagrant up and bring down by using vagrant halt. You should also have Apache NiFi configured on 3 servers to run in a clustered configuration.

Created on 10-03-2016 10:36 PM


This is awesome, where we can demo clustered NIFI servers to clients instead of a standalone instance.

Created on 10-22-2016 03:52 AM


Great stuff, thanks Michael!

Created on 10-25-2016 02:04 PM


We faced an issue while running "vagrant up".

Error:

There are errors in the configuration of this machine. Please fix the following errors and try again:

Vagrant:

* Unknown configuration section 'hostmanager'.

Resolution: install vagrant-hostmanager

$ vagrant plugin install vagrant-hostmanager

Created on 10-26-2016 01:39 AM


Did you install the vagrant plugin "vagrant-hostmanager"? It is listed as a requirement at the top of the tutorial.