Cloudera Community Articles

Deploy NiFi On Kubernetes

Created on 09-04-2019 11:47 AM - edited 09-04-2019 01:53 PM

My Kubernetes series (Part 1, Part 2) focused strictly on MiNiFi on K8S. NiFi and MiNiFi can communicate over Site-to-Site; however, the common pattern is to use Kafka for a clean message handoff. Within these patterns, NiFi is generally the central router and transformer of messages. Think of it as "FedEx" for data. Until now, most have deployed NiFi on bare metal or VMs, and natural evolution kicks in: deploy NiFi on k8s. And yes, it's super simple. In this article I will demonstrate how to deploy both a NiFi cluster and a ZooKeeper cluster (neither being a single pod!) on Azure Kubernetes Service (AKS); however, all artifacts can be used to deploy on virtually any Kubernetes offering.

Prerequisites

- Some knowledge of Kubernetes and NiFi

- An AKS / k8s cluster

- kubectl CLI

- A NiFi image available in a registry (e.g. Docker Hub)

Deployment

All the contents of the YAML files below can be placed into a single file for deployment. For this demonstration, chunking them into smaller components makes them easier to explain.

I have loaded a NiFi image into Azure Container Registry (ACR). You can use the NiFi image available here on Docker Hub.

ZooKeeper

NiFi uses ZooKeeper for several state management functions. More on that here. ZooKeeper for NiFi can be deployed in embedded or standalone mode. Here I will deploy a 3-pod ZK ensemble on k8s using the ZooKeeper example manifest from the Kubernetes documentation. Deploying ZK on k8s is super simple:

kubectl apply -f https://k8s.io/examples/application/zookeeper/zookeeper.yaml

After a few minutes, 3 ZK pods will be available for NiFi to use. You can watch them come up with the command below; once the ZK pods are available, proceed to deploy NiFi on k8s.

kubectl get pods -w -l app=zk
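Why three pods? A ZooKeeper ensemble keeps serving only while a strict majority (a quorum) of its servers is up, so an ensemble of n servers tolerates (n - 1) // 2 failures. The small sketch below makes that arithmetic concrete; the function name is illustrative and not part of any ZooKeeper API.

```python
from typing import Tuple


def zk_fault_tolerance(ensemble_size: int) -> Tuple[int, int]:
    """Return (quorum size, tolerated failures) for a ZooKeeper ensemble.

    ZooKeeper needs a strict majority of its servers up to serve requests.
    """
    if ensemble_size < 1:
        raise ValueError("an ensemble needs at least one server")
    quorum = ensemble_size // 2 + 1      # strict majority
    tolerated = ensemble_size - quorum   # same as (ensemble_size - 1) // 2
    return quorum, tolerated


for n in (1, 3, 4, 5):
    quorum, tolerated = zk_fault_tolerance(n)
    print(f"{n} servers: quorum={quorum}, survives {tolerated} failure(s)")
```

With 3 pods the ensemble survives the loss of one pod, while a 4th pod raises the quorum without adding tolerance, which is why odd ensemble sizes are the usual choice.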

NiFi

Below is the k8s manifest for NiFi (named nifi.yml), containing a Service and a Deployment. A few fields to highlight:

- replicas: the number of NiFi pods (cluster nodes) to deploy

- image: replace with your image location

kind: Service
apiVersion: v1
metadata:
  name: nifi-service
spec:
  selector:
    app: nifi
  ports:
    - protocol: TCP
      targetPort: 8080
      port: 8080
      name: ui
    - protocol: TCP
      targetPort: 9088
      port: 9088
      name: node-protocol-port
    - protocol: TCP
      targetPort: 8888
      port: 8888
      name: s2s
  type: LoadBalancer
---
kind: Deployment
apiVersion: apps/v1  # was extensions/v1beta1, which Kubernetes 1.16+ no longer serves for Deployments
metadata:
  name: nifi
spec:
  replicas: 2  # number of NiFi pods (cluster nodes)
  selector:
    matchLabels:
      app: nifi
  template:
    metadata:
      labels:
        app: nifi
    spec:
      hostNetwork: true
      dnsPolicy: ClusterFirstWithHostNet
      containers:
        - name: nifi-container
          image: sunmanregistry.azurecr.io/nifi:latest  # replace with your image location
          ports:
            - containerPort: 8080
              name: http
            - containerPort: 22
              name: ssh
          resources:
            requests:
              cpu: ".5"
              memory: "6Gi"
            limits:
              cpu: "1"
          env:
            - name: VERSION
              value: "1.9"
            - name: NIFI_CLUSTER_IS_NODE
              value: "true"
            - name: NIFI_CLUSTER_NODE_PROTOCOL_PORT
              value: "9088"
            - name: NIFI_ELECTION_MAX_CANDIDATES
              value: "1"
            - name: NIFI_ZK_CONNECT_STRING
              value: "zk-0.zk-hs.default.svc.cluster.local:2181,zk-1.zk-hs.default.svc.cluster.local:2181,zk-2.zk-hs.default.svc.cluster.local:2181"
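The NIFI_ZK_CONNECT_STRING value follows from how the ZooKeeper example is deployed: a StatefulSet named zk behind the headless Service zk-hs in the default namespace gives each pod, numbered from 0, a stable DNS name of the form <pod>.<service>.<namespace>.svc.cluster.local. A minimal sketch of building that string, assuming those names, the default cluster.local domain and client port 2181 (zk_connect_string is a hypothetical helper):

```python
def zk_connect_string(statefulset: str = "zk",
                      headless_service: str = "zk-hs",
                      namespace: str = "default",
                      replicas: int = 3,
                      port: int = 2181,
                      cluster_domain: str = "cluster.local") -> str:
    """Build a comma-separated ZooKeeper connect string from StatefulSet pod DNS names.

    StatefulSet pods are named <statefulset>-<ordinal> with ordinals starting at 0,
    and the headless Service gives each pod the DNS name
    <pod>.<service>.<namespace>.svc.<cluster_domain>.
    """
    hosts = [
        f"{statefulset}-{i}.{headless_service}.{namespace}.svc.{cluster_domain}:{port}"
        for i in range(replicas)
    ]
    return ",".join(hosts)


print(zk_connect_string())
```

If ZooKeeper lives in another namespace or is scaled to 5 pods, regenerating the value this way avoids hand-editing mistakes in the long string.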

To deploy this NiFi k8s manifest:

kubectl apply -f nifi.yml

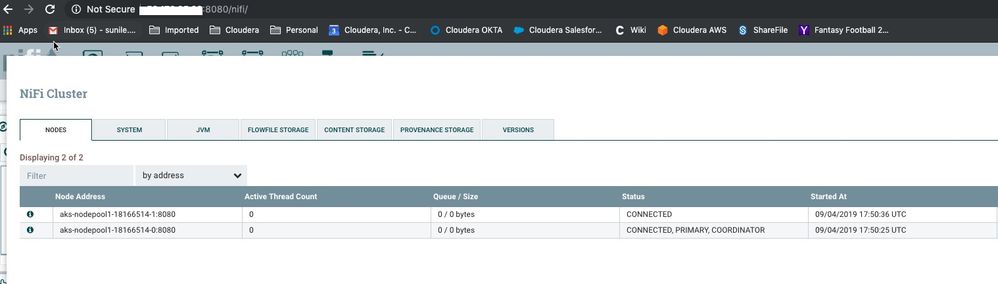
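If the NiFi UI is not reachable through the LoadBalancer after the apply, one thing worth checking is label matching: the Service only sends traffic to pods whose labels satisfy its selector, and an apps/v1 Deployment is rejected when its selector does not match its pod template labels. Both objects in nifi.yml rely on the label app: nifi. The sketch below models only equality-based selectors (it ignores matchExpressions), with the relevant manifest fields copied into plain dicts; selector_matches is a hypothetical helper, not a Kubernetes client API.

```python
from typing import Dict


def selector_matches(selector: Dict[str, str], labels: Dict[str, str]) -> bool:
    """Equality-based label matching: every selector key/value must appear in labels."""
    return all(labels.get(key) == value for key, value in selector.items())


# Only the label-related fields of nifi.yml, written as Python dicts.
service = {"spec": {"selector": {"app": "nifi"}}}
deployment = {
    "spec": {
        "selector": {"matchLabels": {"app": "nifi"}},
        "template": {"metadata": {"labels": {"app": "nifi"}}},
    }
}

pod_labels = deployment["spec"]["template"]["metadata"]["labels"]
print("Deployment selects its own pods:",
      selector_matches(deployment["spec"]["selector"]["matchLabels"], pod_labels))
print("Service routes to the NiFi pods:",
      selector_matches(service["spec"]["selector"], pod_labels))
```

On a live cluster, kubectl get endpoints nifi-service answers the same question directly: an ENDPOINTS value of <none> means no pod matched the selector.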

A few minutes later, you will have a fully functioning NiFi cluster on Kubernetes.
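How soon the cluster becomes usable depends partly on flow election. Per the NiFi Administration Guide, nodes finish electing the cluster flow once either nifi.cluster.flow.election.max.candidates nodes have voted or nifi.cluster.flow.election.max.wait.time (documented default: 5 minutes) has elapsed. Assuming the image follows the apache/nifi convention of mapping NIFI_ELECTION_MAX_CANDIDATES to the first property, the value "1" in the manifest lets election end as soon as one node votes. The sketch below is a simplified model of that completion rule only, not NiFi's implementation, and the function name is hypothetical.

```python
from typing import Optional


def flow_election_complete(votes: int,
                           elapsed_seconds: float,
                           max_candidates: Optional[int] = None,
                           max_wait_seconds: float = 300.0) -> bool:
    """Simplified model of when NiFi finishes electing the cluster flow.

    Election ends early once `max_candidates` nodes have voted; otherwise it
    ends when `max_wait_seconds` (modeled default: 5 minutes) has elapsed.
    """
    if max_candidates is not None and votes >= max_candidates:
        return True
    return elapsed_seconds >= max_wait_seconds


# NIFI_ELECTION_MAX_CANDIDATES="1": the first vote ends the election.
print(flow_election_complete(votes=1, elapsed_seconds=10, max_candidates=1))  # True
# No candidate count set: in this model only the wait time ends the election.
print(flow_election_complete(votes=1, elapsed_seconds=10))  # False
```

The trade-off is that a low candidate count speeds up startup, while a higher one gives more nodes a say in which flow the cluster adopts.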

I use "super simple" a lot, I know. Deploying MiNiFi and NiFi on k8s is just that: super simple. Enjoy.

Created on 04-11-2020 11:09 AM

Hello. This is a very informative article.

I have a question about creating a NiFi cluster on k8s. In case of auto-scaling, or k8s launching a new pod when a pod/node crashes, how does NiFi maintain consistency?

We currently use NiFi in cluster mode on VMs, but when any node goes down, we have to either manually disconnect the failed node from the cluster or bring the failed node back up to get the cluster into an editable state, i.e. one where dataflows can be edited from the UI. As I understand it, NiFi does this to maintain consistency across all nodes.

So my question is: in a K8s environment, where pods are created and destroyed very quickly, how will a NiFi cluster behave?

Sorry for writing on an old article. I don't mind starting a new thread, but I thought the question was relevant here. Sorry, I have a limited understanding of Kubernetes... Thanks 🙂

Created on 04-13-2020 07:54 AM

@haris_khan Good observation. Clustering on k8s generally requires a fair understanding of how the underlying software behaves in these various scenarios. Apache NiFi engineers have built a k8s operator that handles scaling up and down.

If rapid scaling up and down is of interest, I believe you should seriously look at NiFi Stateless or MiNiFi (both on k8s).

Created on 04-13-2020 09:30 AM

Thanks, Sunil. I happened to try this the other day using the cetic/nifi Helm chart, and I ran into this scenario. I started with a 2-pod cluster and killed one of the pods: the NiFi cluster became read-only, but as soon as I brought up another pod, the cluster returned to normal. This probably happened because the replicas are managed through a StatefulSet, so when the pod came back it retained the same properties, volume mounts, etc. So I think scaling the cluster down might be an issue, given this behavior of NiFi.

I will certainly start looking into MiNiFi. It has always been of interest, but I never looked at it in detail... Thanks 🙂

Created on 04-15-2021 04:47 AM

Thanks, Sunil, for this nice article.

I have one question: how do you access the NiFi UI from outside the k8s cluster without an ingress? I'm not able to render the NiFi UI on port 8080.

Created on 09-02-2021 08:16 AM - edited 09-02-2021 08:16 AM

Following this guide as-is doesn't actually work. I'm assuming one has to have access to your image registry. Using the base apache/nifi image, or even a modified one, doesn't work "out of the box". It would be nice if you included the changes you made to your NiFi image to get this working. Specifically, the error relates to keys and decrypting some values:

2021-09-01 21:05:33,566 ERROR [main] o.a.nifi.properties.NiFiPropertiesLoader Clustered Configuration Found: Shared Sensitive Properties Key [nifi.sensitive.props.key] required for cluster nodes
2021-09-01 21:05:33,574 ERROR [main] org.apache.nifi.NiFi Failure to launch NiFi due to java.lang.IllegalArgumentException: There was an issue decrypting protected properties
java.lang.IllegalArgumentException: There was an issue decrypting protected properties
    at org.apache.nifi.NiFi.initializeProperties(NiFi.java:346)
    at org.apache.nifi.NiFi.convertArgumentsToValidatedNiFiProperties(NiFi.java:314)
    at org.apache.nifi.NiFi.convertArgumentsToValidatedNiFiProperties(NiFi.java:310)
    at org.apache.nifi.NiFi.main(NiFi.java:302)
Caused by: org.apache.nifi.properties.SensitivePropertyProtectionException: Sensitive Properties Key [nifi.sensitive.props.key] not found: See Admin Guide section [Updating the Sensitive Properties Key]
    at org.apache.nifi.properties.NiFiPropertiesLoader.getDefaultProperties(NiFiPropertiesLoader.java:220)
    at org.apache.nifi.properties.NiFiPropertiesLoader.get(NiFiPropertiesLoader.java:209)
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    at java.lang.reflect.Method.invoke(Method.java:498)
    at org.apache.nifi.NiFi.initializeProperties(NiFi.java:341)
    ... 3 common frames omitted
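The first log line points at the cause: newer NiFi releases (1.14 and later) refuse to start clustered nodes without a nifi.sensitive.props.key, and every node must use the same key. One way to provide it on k8s, sketched here under assumptions, is to generate a random key once, store it in a Secret, and have every pod read it through an environment variable. NIFI_SENSITIVE_PROPS_KEY is the variable recent apache/nifi images read for this property, but verify that against the image version you deploy. The Secret name nifi-sensitive-props, the data key sensitive-props-key and the helper function are hypothetical. The script prints the Secret as JSON, which kubectl apply -f accepts just like YAML.

```python
import base64
import json
import secrets


def sensitive_props_secret(name: str = "nifi-sensitive-props",
                           namespace: str = "default") -> dict:
    """Build a Kubernetes Secret holding one randomly generated NiFi sensitive props key.

    The name is a placeholder; all NiFi pods must reference this same Secret so
    that every cluster node shares the key.
    """
    key = secrets.token_urlsafe(32)  # 32 random bytes as URL-safe text (43 characters)
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "Opaque",
        # Values under "data" must be base64-encoded.
        "data": {"sensitive-props-key": base64.b64encode(key.encode()).decode()},
    }


if __name__ == "__main__":
    print(json.dumps(sensitive_props_secret(), indent=2))
```

Each NiFi container would then get an env entry named NIFI_SENSITIVE_PROPS_KEY whose valueFrom.secretKeyRef points at name nifi-sensitive-props and key sensitive-props-key. Generating the key once, rather than per pod, is the point: a per-pod key would reproduce the same startup failure.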

Created on 09-15-2021 07:08 PM

Yes, the comment above saying this no longer works is accurate. NiFi has gone through many enhancements that are not reflected in this article. I do not plan on updating the article, for the following reason:

The objective of this article was to get NiFi running on K8s, because at the time no NiFi on K8s offering existed within Hortonworks HDF. NiFi on K8s has recently gone GA; it's called the DataFlow experience. Docs here: https://docs.cloudera.com/dataflow/cloud/index.html. If you want NiFi on K8s, that is your new playground.