HBase Disaster Recovery Architecture Examples

Cloudera Community Articles


Created on 03-18-2017 05:27 PM - edited 08-17-2019 01:45 PM

HBase together with Phoenix is one of the most powerful NoSQL combinations. HBase/Phoenix lets users host OLTP-style workloads natively on Hadoop, with the benefits of high availability (HA) and analytics on a single platform (e.g. the Spark-HBase connector or the Phoenix Hive storage handler). HA implementations often also require a disaster recovery (DR) environment. Here I describe a few common patterns; this is by no means an exhaustive list of HBase DR patterns. In my opinion, Pattern 5 is the simplest to implement and provides operational ease and efficiency.

Here are some of the high-level replication and availability strategies for HBase/Phoenix:

- HBase provides high availability within a cluster by handling RegionServer failures transparently.

- HBase provides several asynchronous cross-datacenter (cross-DC) replication topologies:

  - Master/Master: two clusters replicate all edits to each other bidirectionally.

  - Master/Slave: one cluster replicates all edits to a second cluster.

  - Cyclic: clusters form a ring and every edit is replicated around it; HBase tracks which clusters have already applied an edit, so edits do not loop back.

  - Hub and spoke: a central cluster replicates all edits to multiple clusters unidirectionally.

- Using the topologies above, a cross-DC replication scheme can be set up to match the desired architecture.
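The loop prevention that makes Master/Master and cyclic topologies workable can be sketched with a toy Python simulation. It assumes, roughly as HBase replication does, that each shipped edit carries the IDs of the clusters that have already applied it, and that a cluster never ships an edit to a peer already on that list. `Edit`, `Cluster`, `consumed_by`, `add_peer`, and `put` are hypothetical names for this sketch, not HBase APIs, and real replication is asynchronous and WAL-based rather than a direct function call.

```python
from dataclasses import dataclass, field


@dataclass
class Edit:
    """Toy stand-in for a WAL edit: a row, a value, and the clusters that applied it."""
    row: str
    value: str
    consumed_by: list[str] = field(default_factory=list)


class Cluster:
    """Toy cluster that applies edits locally and ships them to its peers."""

    def __init__(self, cluster_id: str) -> None:
        self.cluster_id = cluster_id
        self.store: dict[str, str] = {}
        self.peers: list["Cluster"] = []
        self.applied = 0  # number of edits this cluster has applied

    def add_peer(self, peer: "Cluster") -> None:
        self.peers.append(peer)

    def put(self, row: str, value: str) -> None:
        """A client write that originates on this cluster."""
        self.apply(Edit(row, value))

    def apply(self, edit: Edit) -> None:
        # Record this cluster in the edit's history, then store the value.
        edit.consumed_by.append(self.cluster_id)
        self.store[edit.row] = edit.value
        self.applied += 1
        for peer in self.peers:
            # Skip peers that already applied the edit: this is what stops
            # an endless C1 -> C2 -> C1 -> ... loop.
            if peer.cluster_id in edit.consumed_by:
                continue
            # Each shipment carries its own copy of the history.
            peer.apply(Edit(edit.row, edit.value, list(edit.consumed_by)))


# Master/Master: C1 and C2 are peers of each other.
c1, c2 = Cluster("C1"), Cluster("C2")
c1.add_peer(c2)
c2.add_peer(c1)
c1.put("row1", "v1")
print(c1.applied, c2.applied)  # prints "1 1": the edit is applied once per cluster
```

In a three-cluster ring (A to B to C to A), the same rule stops an edit when it would return to a cluster that already applied it, and a hub-and-spoke layout simply never ships edits back from the spokes.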

Pattern 1

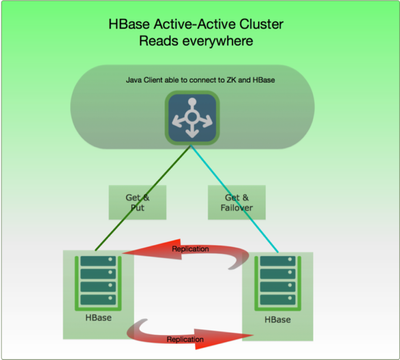

- Reads and writes are served by both clusters

- A client implementation that keeps a session's reads and writes sticky to one cluster, based on a session-ID-like concept, needs to be investigated

- Master/Master replication between clusters

- Bidirectional replication

- After failover, recovery is handled via cyclic replication
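Pattern 1 leaves session stickiness as an open item. One possible approach, shown here as a hypothetical Python sketch rather than an existing HBase or Phoenix client feature, hashes a session ID to a preferred cluster so that a session's reads land on the same cluster as its writes and see them despite asynchronous replication. `StickyRouter`, `route`, `mark_down`, and `mark_up` are illustrative names, and cluster health is assumed to be reported by some external check.

```python
import hashlib


class StickyRouter:
    """Hypothetical router that pins each session to one cluster."""

    def __init__(self, clusters: list[str]) -> None:
        self.clusters = list(clusters)
        self.healthy = set(clusters)

    def mark_down(self, cluster: str) -> None:
        self.healthy.discard(cluster)

    def mark_up(self, cluster: str) -> None:
        self.healthy.add(cluster)

    def route(self, session_id: str) -> str:
        # Use a stable hash; Python's built-in hash() of str is salted per process.
        digest = hashlib.sha256(session_id.encode("utf-8")).digest()
        start = int.from_bytes(digest[:8], "big") % len(self.clusters)
        # Start at the preferred cluster and skip any that are marked down.
        for offset in range(len(self.clusters)):
            candidate = self.clusters[(start + offset) % len(self.clusters)]
            if candidate in self.healthy:
                return candidate
        raise RuntimeError("no healthy cluster available")


router = StickyRouter(["dc1", "dc2"])
print(router.route("session-42"))  # the same session always maps to the same cluster
```

When a session's home cluster is marked down, the session moves to the other cluster, where its most recent writes may not have replicated yet; that window is the trade-off of serving writes from both clusters with asynchronous replication.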

Pattern 2

- Reads are served by both clusters

- Writes are served by a single cluster

- Master/Master replication between clusters

- Bidirectional replication

- The client fails over to the secondary cluster

- After failover, recovery is handled via cyclic replication
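The Pattern 2 client behavior can be sketched as a router that sends every write to a single active cluster, fails writes over to the secondary only when the primary is unhealthy, and spreads reads across whichever clusters are healthy. This is a hypothetical Python illustration: `ReadWriteRouter`, `set_health`, `write_target`, and `read_target` are invented names, and reads served by the non-writing cluster may lag slightly because replication is asynchronous.

```python
import random
from typing import Optional


class ReadWriteRouter:
    """Hypothetical client router for Pattern 2: one write cluster, reads anywhere."""

    def __init__(self, primary: str, secondary: str,
                 rng: Optional[random.Random] = None) -> None:
        self.write_order = [primary, secondary]  # writes fail over in this order
        self.healthy = {primary, secondary}
        self.rng = rng or random.Random()

    def set_health(self, cluster: str, is_up: bool) -> None:
        if is_up:
            self.healthy.add(cluster)
        else:
            self.healthy.discard(cluster)

    def write_target(self) -> str:
        # All writes go to the first healthy cluster in failover order.
        for cluster in self.write_order:
            if cluster in self.healthy:
                return cluster
        raise RuntimeError("no healthy cluster for writes")

    def read_target(self) -> str:
        # Reads may go to any healthy cluster; a replica may lag slightly.
        candidates = sorted(self.healthy)
        if not candidates:
            raise RuntimeError("no healthy cluster for reads")
        return self.rng.choice(candidates)


router = ReadWriteRouter("dc1", "dc2", random.Random(7))
print(router.write_target())  # dc1 while dc1 is healthy
router.set_health("dc1", False)
print(router.write_target())  # dc2 after failover
```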

Pattern 3

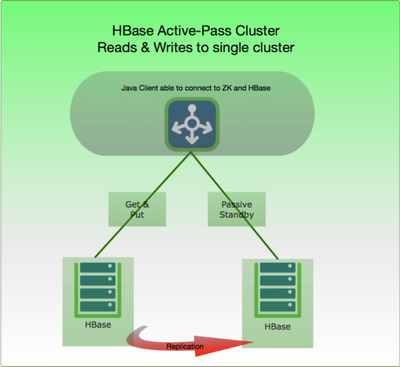

- Reads and writes are served by a single cluster

- Master/Master replication between clusters

- Bidirectional replication

- The client fails over to the secondary cluster

- After failover, recovery is handled via cyclic replication
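For Pattern 3, a client needs only an active cluster and a standby. The hypothetical Python sketch below sends every read and write to the active cluster and, when an operation fails with a connection error, swaps to the standby and retries once. `FailoverClient`, `execute`, and the use of Python's built-in `ConnectionError` as the failure signal are assumptions for illustration; a real HBase client surfaces different exceptions and has its own retry settings.

```python
from typing import Callable, TypeVar

T = TypeVar("T")


class FailoverClient:
    """Hypothetical active/standby client: one cluster serves all traffic."""

    def __init__(self, active: str, standby: str) -> None:
        self.active = active
        self.standby = standby

    def execute(self, operation: Callable[[str], T]) -> T:
        try:
            return operation(self.active)
        except ConnectionError:
            # Promote the standby and retry once; a second failure propagates.
            self.active, self.standby = self.standby, self.active
            return operation(self.active)


def fake_get(cluster: str) -> str:
    # Simulated operation: the primary "dc1" is unreachable.
    if cluster == "dc1":
        raise ConnectionError(f"{cluster} unreachable")
    return f"row served by {cluster}"


client = FailoverClient("dc1", "dc2")
print(client.execute(fake_get))  # row served by dc2
```

Because replication in this pattern is Master/Master, writes accepted by the standby should flow back to the original primary once it returns; a real deployment would still gate failback on the primary's health and on replication having caught up.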

Pattern 4

- Reads and writes are served by a single cluster

- Master/Slave replication between clusters

- Unidirectional replication

- The client fails over to the secondary cluster

- A manual resync of the "primary" cluster is required after failover, because replication is unidirectional
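Pattern 4's manual resync amounts to finding the rows the secondary accepted after failover that the primary is missing or holds an older version of. The Python sketch below models a table as a hypothetical `dict` of row key to `(value, timestamp)`; `rows_to_resync`, `resync`, and `failover_ts` are illustrative names, and the sketch ignores deletes and multi-version cells. In practice, HBase's `CopyTable` MapReduce job, which can copy a time range of a table to another cluster, is one commonly used tool for this step.

```python
# Hypothetical table model: row key -> (value, write timestamp in ms).
Table = dict[str, tuple[str, int]]


def rows_to_resync(primary: Table, secondary: Table, failover_ts: int) -> Table:
    """Rows written on the secondary at or after failover that the primary
    lacks or holds an older version of. Deletes are not handled."""
    pending: Table = {}
    for row, (value, ts) in secondary.items():
        if ts < failover_ts:
            continue  # written before failover, so already replicated from primary
        current = primary.get(row)
        if current is None or current[1] < ts:
            pending[row] = (value, ts)
    return pending


def resync(primary: Table, secondary: Table, failover_ts: int) -> int:
    """Copy pending rows back into the primary and return how many were copied."""
    pending = rows_to_resync(primary, secondary, failover_ts)
    primary.update(pending)
    return len(pending)


primary = {"a": ("1", 100), "b": ("2", 100)}
secondary = {"a": ("1", 100), "b": ("3", 250), "c": ("4", 300)}
print(resync(primary, secondary, failover_ts=200))  # 2 rows copied back
```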

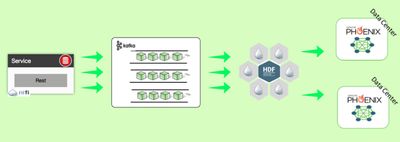

Pattern 5

- Ingestion via the NiFi REST API

  - Supports secure calls and round-trip responses

- Push data to Kafka to make the data set available to every application interested in it

  - Secure Kafka topics via Apache Ranger

- NiFi dual-ingests into N HBase/Phoenix clusters

  - Keeps the data stores in sync

  - Operational ease

  - NiFi back pressure handles any ODS (operational data store) downtime

  - UI-based flow orchestration

  - Data governance built in via NiFi Data Provenance

  - Event-level lineage
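The dual-ingest and back-pressure behavior of Pattern 5 can be modeled in a few lines. This toy Python model is not NiFi's API: `DualIngest`, `offer`, `deliver`, `set_up`, and `threshold` are hypothetical names. It assumes each destination cluster has its own bounded queue, loosely like a NiFi connection with a back-pressure object threshold, and that new records are refused while any queue is full, so a down cluster delays ingestion instead of silently missing data.

```python
from collections import deque


class DualIngest:
    """Toy fan-out of each record to N destinations with bounded queues."""

    def __init__(self, destinations: list[str], threshold: int) -> None:
        self.threshold = threshold  # max queued records per destination
        self.queues: dict[str, deque] = {d: deque() for d in destinations}
        self.stores: dict[str, list[str]] = {d: [] for d in destinations}
        self.up = set(destinations)  # destinations currently reachable

    def set_up(self, destination: str, is_up: bool) -> None:
        if is_up:
            self.up.add(destination)
        else:
            self.up.discard(destination)

    def offer(self, record: str) -> bool:
        # Back pressure: refuse new input while any destination queue is full.
        if any(len(q) >= self.threshold for q in self.queues.values()):
            return False
        for q in self.queues.values():
            q.append(record)  # every destination gets its own copy
        return True

    def deliver(self) -> None:
        # Drain queued records into every destination that is reachable.
        for dest, q in self.queues.items():
            while dest in self.up and q:
                self.stores[dest].append(q.popleft())


ingest = DualIngest(["ods1", "ods2"], threshold=2)
ingest.set_up("ods2", False)  # simulate an outage of the second cluster
for i in range(4):
    print(f"r{i}", ingest.offer(f"r{i}"))
    ingest.deliver()
ingest.set_up("ods2", True)
ingest.deliver()
print(ingest.stores["ods1"] == ingest.stores["ods2"])  # True: stores back in sync
```

In this model the caller must hold refused records and retry them later; in NiFi, roughly speaking, the upstream component is simply not scheduled while back pressure applies, which gives the same effect without custom retry code.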

Additional HBase Replication Documentation

- Monitor replication status

- Replication metrics

- Replication Configuration options

- HBase Replication Internals

- HBase Cluster Replication Details

Created on 11-22-2018 02:39 PM


Does Master/Master or cyclic replication keep replicating the data back and forth? If an upsert is executed on C1 and propagated to C2, and C1 is added as a peer on C2, will the edit be replicated back to C1 and then again to C2 (C1 to C2 to C1 to C2 to C1 ...)?