- How to expand existing NiFi cluster fault toleranc...

Created on 01-04-2016 05:08 PM - edited 08-17-2019 01:35 PM

This is part 2 of the fault tolerance tutorial. Part 1 can be found here: https://community.hortonworks.com/articles/8607/ho...

--------------------------------------------------------------------------------------------------------------------------------------

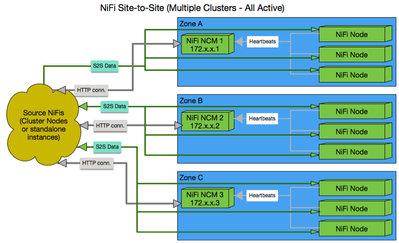

Multi-Cluster Fault Tolerance (One cluster in each zone)

(All Clusters Active all the time)

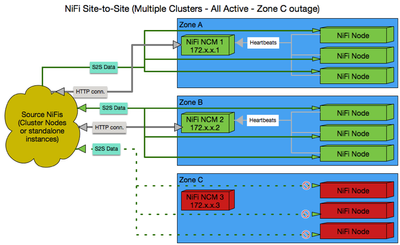

Multiple clusters can be set up across multiple zones, with all of them active at the same time. This option requires some external process to keep flows in sync across all clusters. Let's take a look at what this setup might look like if we had 3 zones, each running a NiFi cluster.

We can see right away that there is a lot more involved with the S2S configuration between the source systems and these multiple zones. Towards the beginning of this guide we showed what a typical NiFi S2S setup might look like on a source NiFi. With this configuration the sources need to be set up differently. Rather than delivering data to the Nodes of a single cluster, as we have done in all the other configurations so far, we need to distribute delivery across all three of our clusters.

Each of the clusters operates independently of one another. They do not share status or FlowFile information between them. In this configuration, data will only stop flowing to one of the clusters if one of the following is true:

- 1. The NCM for that cluster reports no nodes available.

  - a. This can happen if all nodes are down, but the NCM is still up.

  - b. Or the NCM has marked all Nodes as disconnected due to lack of heartbeats.

- 2. None of the Nodes in the cluster can be communicated with over the S2S port to deliver data.

- 3. None of the nodes are accepting data because the destination is full or backpressure has disabled the input ports on all nodes.
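The three conditions above can be read as a single check: a zone keeps receiving data as long as at least one of its nodes is still reported by the NCM, reachable over S2S, and accepting data. The following is only a minimal sketch of that reasoning. The `NodeState` class and its three flags are hypothetical names made up for illustration; they are not part of NiFi.

```python
from dataclasses import dataclass


@dataclass
class NodeState:
    # All three flags are hypothetical, illustrative names (not NiFi API).
    listed_by_ncm: bool   # NCM still reports this node as connected
    s2s_reachable: bool   # source can communicate with the node's S2S port
    accepting: bool       # input port not stopped by backpressure or a full destination


def zone_receives_data(nodes):
    """True while at least one node is listed, reachable, and accepting."""
    return any(n.listed_by_ncm and n.s2s_reachable and n.accepting for n in nodes)


# One unreachable node, one healthy node: data still flows into the zone.
degraded = [NodeState(True, False, True), NodeState(True, True, True)]
# Every node reachable, but backpressure has stopped all input ports (condition 3).
blocked = [NodeState(True, True, False), NodeState(True, True, False)]

print(zone_receives_data(degraded))
print(zone_receives_data(blocked))
```

An empty node list corresponds to condition 1 (the NCM reports no nodes available) and also evaluates to "not receiving".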

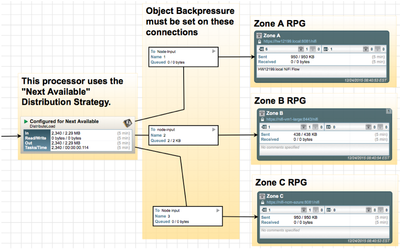

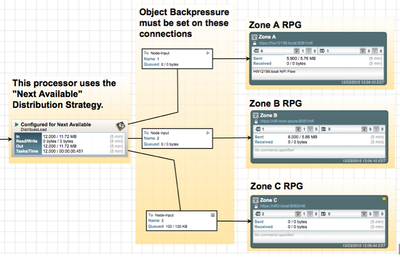

The following image shows how a source NiFi would be set up to do this load distribution to three separate clusters running in your different zones.

The DistributeLoad Processor would be configured as follows:

The “Number of Relationships” value should be set to the number of clusters you will be load-balancing your data to. Each relationship feeds the connection to one of those clusters.

If set up correctly, data will be spread across all the NiFi clusters running in all three zones. Should any zone become completely unreachable (no Nodes reachable), data will queue on the connection to that zone's RPG until the backpressure object threshold configured on that connection is reached. From that point on, all new data is distributed only to the connections for the other zones, which are still accepting files.

Let's take a look at a couple of different scenarios:
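To make the queue-then-redistribute behavior concrete, here is a small simulation. It is a simplified model, not NiFi's DistributeLoad implementation: it assumes files are handed out in turn, that a connection to an unreachable zone fills up to its backpressure object threshold, and that full connections are skipped. The function name, zone labels, and the threshold of 100 are illustrative assumptions.

```python
def distribute(num_files, zones, reachable, threshold):
    """Hand files to zone connections in turn, skipping connections that are full.

    Simplified model: a reachable zone drains its connection immediately,
    an unreachable zone lets files pile up until `threshold` is reached.
    """
    queued = {z: 0 for z in zones}     # files stuck on each connection
    delivered = {z: 0 for z in zones}  # files that reached each zone's cluster
    start = 0                          # position of the next connection to try
    n = len(zones)
    for _ in range(num_files):
        for attempt in range(n):
            idx = (start + attempt) % n
            zone = zones[idx]
            if zone in reachable:
                delivered[zone] += 1
            elif queued[zone] < threshold:
                queued[zone] += 1
            else:
                continue  # backpressure applied on this connection, try the next
            start = idx + 1
            break
        else:
            raise RuntimeError("every connection is under backpressure")
    return delivered, queued


# Zone C is completely unreachable; its connection holds at most 100 files.
delivered, queued = distribute(1000, ["A", "B", "C"], {"A", "B"}, threshold=100)
print(delivered, queued)
```

In this model Zone C's connection stops growing once it holds 100 files, and the remaining files are split between Zone A and Zone B.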

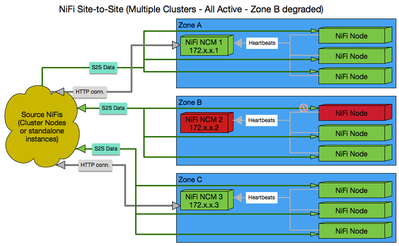

Scenario 1: Zone Partial Outage

In this scenario we will take a look at what happens when connectivity to the NCM and one node in Zone B is lost.

In the above scenario the NCM went down before it marked the first Node as disconnected, so the source systems will still try to push data to that down node. That will of course fail, so the source will try a different node within Zone B. Had the node been marked disconnected before the NCM went down, the source systems would only know about the two still-connected nodes and would only try delivering to them. The remaining reachable nodes in Zone B’s cluster now take on more data because of the lost node. Data distribution to the nodes in the clusters located in the remaining Zones is unaffected.
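The difference between the two orderings can be sketched as follows. This is an illustration of the reasoning only: real S2S peer selection is more involved, and the node names and the `pick_node` helper are hypothetical.

```python
def pick_node(known_peers, reachable):
    """Try the peers the source knows about, in order, until one accepts.

    Returns the chosen peer (or None) and the list of peers that were tried.
    """
    tried = []
    for peer in known_peers:
        tried.append(peer)
        if peer in reachable:
            return peer, tried
    return None, tried


up = {"b-node2", "b-node3"}                  # hypothetical nodes still running in Zone B
stale = ["b-node1", "b-node2", "b-node3"]    # NCM died before marking b-node1 disconnected
fresh = ["b-node2", "b-node3"]               # b-node1 was marked disconnected first

print(pick_node(stale, up))  # one wasted attempt against the down node
print(pick_node(fresh, up))  # the down node is never tried
```

Either way the data ends up on a surviving node; the stale peer list only costs the source a failed attempt.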

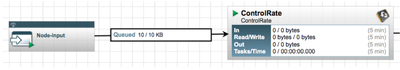

What if you lost two nodes? The last node in Zone B’s cluster would then receive all the data for that cluster, because the split across zones stays the same (33.3% to Zone A, 33.3% to Zone B, and 33.3% to Zone C). Remember that the clusters in each of the Zones do not talk to one another, so the distribution of data from the sources does not change. We can, however, design a flow to make sure this use case does not cause us problems. Immediately following the input port (input ports are used to receive data via S2S) on the dataflow in every Zone’s cluster, we need to add a ControlRate processor.

*** The penalty duration on the ControlRate processor should be changed from 30 sec to 1 sec. In the NiFi version (0.4.1) available at the time of writing, there is a bug that can affect the processor's configured rate (reported in https://issues.apache.org/jira/browse/NIFI-1329 ). Changing the penalty duration gets us around this bug.

Remember that because this is a cluster, the same flow exists on every Node. We can then configure this ControlRate processor to allow only a certain amount of data to pass through it per time interval. So if it were set to 1000 FlowFiles/sec, the 3 node cluster would allow a max of 3000 FlowFiles/sec to flow through the processors. If we then set backpressure on the connection between the input port and this ControlRate processor, we can effectively stop the input port if the volume suddenly becomes too high. This will then cause data to start queuing on our source. If you remember, backpressure was also set on those source connections. That forces the sender to send more data to the other two clusters, keeping this degraded cluster from becoming overwhelmed.
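The arithmetic behind the rate cap is simple enough to write down. In this sketch the 1000 FlowFiles/sec per-node limit comes from the example above, while the offered rate of 2500 FlowFiles/sec and both function names are assumptions chosen purely for illustration.

```python
def cluster_rate_cap(per_node_rate, active_nodes):
    """Max FlowFiles/sec a cluster passes when every node runs the same ControlRate."""
    return per_node_rate * active_nodes


def spill_to_other_zones(offered_rate, per_node_rate, active_nodes):
    """FlowFiles/sec the source must redirect once backpressure kicks in."""
    return max(0, offered_rate - cluster_rate_cap(per_node_rate, active_nodes))


print(cluster_rate_cap(1000, 3))            # healthy 3 node cluster
print(spill_to_other_zones(2500, 1000, 3))  # nothing spills while all nodes are up
print(spill_to_other_zones(2500, 1000, 1))  # two nodes lost: the excess goes elsewhere
```

With one surviving node the cluster accepts at most 1000 FlowFiles/sec, so in this hypothetical case the remaining 1500 FlowFiles/sec would be pushed to the other two zones.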

We can see below what that would look like on the sending NiFi if the Zone B cluster became degraded.

Backpressure is being applied at times on Zone B’s connection, and every time it is applied, more data goes to the Zone A and Zone C clusters. Backpressure kicks in on Zone B’s connection when the input port on the Zone B cluster nodes stops accepting data because of the ControlRate configuration. You can see that data is still flowing to Zone B, but at a lower rate.

Scenario 2: Complete Outage of Zone

In this scenario we have a complete outage of one of our zones. The overall behavior is very close to what we saw in scenario 1. Let's assume we lost Zone C completely.

As we have already learned, depending on the order in which the NiFi cluster in Zone C went down, the source NiFi(s) may or may not still be trying to send data to the nodes in this cluster via S2S. Either way, with a complete outage no data will be sent, and it will begin to queue on the connection feeding the RPG for Zone C on the sending NiFis. Setting backpressure on that connection is very important to make sure the data queue does not grow without bound. When all Nodes are down, S2S has no failover mechanism that would redirect this queued data somewhere else, such as another RPG. So this data will remain in this queue until either the queue itself is manually redirected or the down cluster is returned to service.

As we can see in the above, no files are being sent to Zone C and backpressure has kicked in on the connection feeding the Zone C RPG. As a result, all data is being load balanced to Zone A and Zone B, with the exception of the 100 files stuck in Zone C’s connection. Manual intervention by a DFM would be required to re-route these 100 files. They will of course transfer to Zone C on their own as soon as it comes back online.

Just like the first two configurations, this configuration has its pros and cons.

PROs:

- 1. Losing one zone does not affect access to the NCMs of the other two zones, so monitoring of the dataflow on the running clusters is still available.

- 2. Cluster communications between nodes and NCM are not affected by cross-domain network latency.

- 3. Each zone can scale up or down without needing to interface with source NiFis sending data via S2S.

- 4. No DNS changes are required.

CONs:

- 1. Some burden of setting up load balancing falls back on the source NiFis when using S2S. Source NiFis will need to set up a flow designed to spread data across each of the destination clusters.

- 2. There is no auto failover of data delivery from one zone to another. Manual intervention is required to redirect queues feeding down zones.

- 3. Dataflows must be managed independently on the cluster in each zone. There is no manager of managers that can make sure a dataflow is kept in sync across multiple clusters.

- 4. Changes are made directly on each cluster. The flow.tar (on NCM) and flow.xml.gz (on Nodes) are not interchangeable between clusters. They may appear the same; however, component IDs for each of the processors and connections will differ.

This type of deployment is best suited for when the flow is static across all clusters. In order to keep the dataflow static, it would typically be built on a development system and then deployed to each cluster. In this configuration, making changes to the dataflow directly on the production clusters would not be common practice.