Import Hive metadata into Atlas

Created on 10-13-2016 02:35 AM

This article assumes that you have an HDP 2.5 cluster with Atlas and Hive enabled, and that Atlas is up and running on that cluster. Refer to the HDP documentation for deploying a cluster with Atlas enabled.

Atlas provides a script to import metadata from Hive for all Hive entities such as tables, databases, views, and columns. The script needs the Hadoop and Hive JARs on its classpath. To make them available to the script:

- Make sure that the environment variable HADOOP_CLASSPATH is set, or that HADOOP_HOME points to the root directory of your Hadoop installation.

- Set the HIVE_HOME environment variable to the root of the Hive installation.

- Set the HIVE_CONF_DIR environment variable to the Hive configuration directory.

- Copy <atlas-conf>/atlas-application.properties to the Hive configuration directory.
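The prerequisites above can be checked before running the import. The following is a minimal sketch, not part of Atlas: the function name `check_import_env` is hypothetical, and it only tests that the variables and the copied properties file are present, not that the JARs they lead to are usable.

```shell
# Preflight sketch (hypothetical helper, not shipped with Atlas):
# verifies the environment described in the list above.
check_import_env() (
  status=0

  # Either HADOOP_CLASSPATH or HADOOP_HOME must be set.
  if [ -z "${HADOOP_CLASSPATH:-}" ] && [ -z "${HADOOP_HOME:-}" ]; then
    echo "missing: HADOOP_CLASSPATH or HADOOP_HOME"
    status=1
  fi

  # HIVE_HOME must point at an existing directory.
  if [ -z "${HIVE_HOME:-}" ] || [ ! -d "${HIVE_HOME}" ]; then
    echo "missing: HIVE_HOME"
    status=1
  fi

  # HIVE_CONF_DIR must exist and hold a copy of atlas-application.properties.
  if [ -z "${HIVE_CONF_DIR:-}" ] || [ ! -d "${HIVE_CONF_DIR}" ]; then
    echo "missing: HIVE_CONF_DIR"
    status=1
  elif [ ! -f "${HIVE_CONF_DIR}/atlas-application.properties" ]; then
    echo "missing: ${HIVE_CONF_DIR}/atlas-application.properties"
    status=1
  fi

  if [ "$status" -eq 0 ]; then
    echo "environment looks ready"
  fi
  return "$status"
)
```

On the cluster used in this article, HIVE_CONF_DIR would be /etc/hive/conf (as the console output shows). The values for HADOOP_HOME and HIVE_HOME depend on your installation and are not given here.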

Once the above steps are complete, we are ready to run the script.

Usage: <atlas package>/hook-bin/import-hive.sh

When you run the above command, you should see messages like the following on the console.

[root@atlas-blueprint-test-1 ~]# /usr/hdp/current/atlas-server/hook-bin/import-hive.sh
Using Hive configuration directory [/etc/hive/conf]
Log file for import is /usr/hdp/current/atlas-server/logs/import-hive.log
2016-10-13 01:57:18,676 INFO - [main:] ~ Looking for atlas-application.properties in classpath (ApplicationProperties:73)
2016-10-13 01:57:18,701 INFO - [main:] ~ Loading atlas-application.properties from file:/etc/hive/2.5.0.0-1245/0/atlas-application.properties (ApplicationProperties:86)
2016-10-13 01:57:18,922 DEBUG - [main:] ~ Configuration loaded: (ApplicationProperties:99)
2016-10-13 01:57:18,923 DEBUG - [main:] ~ atlas.authentication.method.kerberos = False (ApplicationProperties:102)
2016-10-13 01:57:18,972 DEBUG - [main:] ~ atlas.cluster.name = atlasBP (ApplicationProperties:102)
2016-10-13 01:57:18,972 DEBUG - [main:] ~ atlas.hook.hive.keepAliveTime = 10 (ApplicationProperties:102)
2016-10-13 01:57:18,972 DEBUG - [main:] ~ atlas.hook.hive.maxThreads = 5 (ApplicationProperties:102)
2016-10-13 01:57:18,973 DEBUG - [main:] ~ atlas.hook.hive.minThreads = 5 (ApplicationProperties:102)
2016-10-13 01:57:18,973 DEBUG - [main:] ~ atlas.hook.hive.numRetries = 3 (ApplicationProperties:102)
2016-10-13 01:57:18,973 DEBUG - [main:] ~ atlas.hook.hive.queueSize = 1000 (ApplicationProperties:102)
2016-10-13 01:57:18,973 DEBUG - [main:] ~ atlas.hook.hive.synchronous = false (ApplicationProperties:102)
2016-10-13 01:57:18,974 DEBUG - [main:] ~ atlas.kafka.bootstrap.servers = atlas-blueprint-test-1.openstacklocal:6667 (ApplicationProperties:102)
2016-10-13 01:57:18,974 DEBUG - [main:] ~ atlas.kafka.hook.group.id = atlas (ApplicationProperties:102)
2016-10-13 01:57:18,974 DEBUG - [main:] ~ atlas.kafka.zookeeper.connect = [atlas-blueprint-test-1.openstacklocal:2181, atlas-blueprint-test-2.openstacklocal:2181] (ApplicationProperties:102)
2016-10-13 01:57:18,974 DEBUG - [main:] ~ atlas.kafka.zookeeper.connection.timeout.ms = 200 (ApplicationProperties:102)
2016-10-13 01:57:18,974 DEBUG - [main:] ~ atlas.kafka.zookeeper.session.timeout.ms = 400 (ApplicationProperties:102)
2016-10-13 01:57:18,981 DEBUG - [main:] ~ atlas.kafka.zookeeper.sync.time.ms = 20 (ApplicationProperties:102)
2016-10-13 01:57:18,981 DEBUG - [main:] ~ atlas.notification.create.topics = True (ApplicationProperties:102)
2016-10-13 01:57:18,982 DEBUG - [main:] ~ atlas.notification.replicas = 1 (ApplicationProperties:102)
2016-10-13 01:57:18,982 DEBUG - [main:] ~ atlas.notification.topics = [ATLAS_HOOK, ATLAS_ENTITIES] (ApplicationProperties:102)
2016-10-13 01:57:18,982 DEBUG - [main:] ~ atlas.rest.address = http://atlas-blueprint-test-1.openstacklocal:21000 (ApplicationProperties:102)
2016-10-13 01:57:18,993 DEBUG - [main:] ~ ==> InMemoryJAASConfiguration.init() (InMemoryJAASConfiguration:168)
2016-10-13 01:57:18,998 DEBUG - [main:] ~ ==> InMemoryJAASConfiguration.init() (InMemoryJAASConfiguration:181)
2016-10-13 01:57:19,043 DEBUG - [main:] ~ ==> InMemoryJAASConfiguration.initialize() (InMemoryJAASConfiguration:220)
2016-10-13 01:57:19,045 DEBUG - [main:] ~ <== InMemoryJAASConfiguration.initialize() (InMemoryJAASConfiguration:347)
2016-10-13 01:57:19,045 DEBUG - [main:] ~ <== InMemoryJAASConfiguration.init() (InMemoryJAASConfiguration:190)
2016-10-13 01:57:19,046 DEBUG - [main:] ~ <== InMemoryJAASConfiguration.init() (InMemoryJAASConfiguration:177)
. . . . .
2016-10-13 01:58:09,251 DEBUG - [main:] ~ Using resource http://atlas-blueprint-test-1.openstacklocal:21000/api/atlas/entities/1e78f7ed-c8d4-4c11-9bfa-da08be... for 0 times (AtlasClient:784)
2016-10-13 01:58:10,700 DEBUG - [main:] ~ API http://atlas-blueprint-test-1.openstacklocal:21000/api/atlas/entities/1e78f7ed-c8d4-4c11-9bfa-da08be... returned status 200 (AtlasClient:1191)
2016-10-13 01:58:10,703 DEBUG - [main:] ~ Getting reference for process default.timesheets_test@atlasBP:1474621469000 (HiveMetaStoreBridge:346)
2016-10-13 01:58:10,703 DEBUG - [main:] ~ Using resource http://atlas-blueprint-test-1.openstacklocal:21000/api/atlas/entities?type=hive_process&property=qua... for 0 times (AtlasClient:784)
2016-10-13 01:58:10,893 DEBUG - [main:] ~ API http://atlas-blueprint-test-1.openstacklocal:21000/api/atlas/entities?type=hive_process&property=qua... returned status 200 (AtlasClient:1191)
2016-10-13 01:58:10,898 INFO - [main:] ~ Process {Id='(type: hive_process, id: 28f5a31a-4812-497e-925b-21bfe59ba68a)', traits=[], values={outputs=[(type: DataSet, id: 1e78f7ed-c8d4-4c11-9bfa-da08be7c6b60)], owner=null, queryGraph=null, recentQueries=[create external table timesheets_test (emp_id int, location string, ts_date string, hours int, revenue double, revenue_per_hr double) row format delimited fields terminated by ',' location 'hdfs://atlas-blueprint-test-1.openstacklocal:8020/user/hive/timesheets'], inputs=[(type: DataSet, id: c259d3a8-5684-4808-9f22-972a2e3e2dd0)], qualifiedName=default.timesheets_test@atlasBP:1474621469000, description=null, userName=hive, queryId=hive_20160923090429_25b5b333-bba5-427f-8ee1-6b743cbcf533, clusterName=atlasBP, name=create external table timesheets_test (emp_id int, location string, ts_date string, hours int, revenue double, revenue_per_hr double) row format delimited fields terminated by ',' location 'hdfs://atlas-blueprint-test-1.openstacklocal:8020/user/hive/timesheets', queryText=create external table timesheets_test (emp_id int, location string, ts_date string, hours int, revenue double, revenue_per_hr double) row format delimited fields terminated by ',' location 'hdfs://atlas-blueprint-test-1.openstacklocal:8020/user/hive/timesheets', startTime=2016-09-23T09:04:29.069Z, queryPlan={}, operationType=CREATETABLE, endTime=2016-09-23T09:04:30.319Z}} is already registered (HiveMetaStoreBridge:305)
2016-10-13 01:58:10,898 INFO - [main:] ~ Successfully imported all 22 tables from default (HiveMetaStoreBridge:261)
Hive Data Model imported successfully!!!

The following messages at the end of the console output show how many tables were imported and whether the import succeeded.

2016-10-13 01:58:10,898 INFO - [main:] ~ Successfully imported all 22 tables from default (HiveMetaStoreBridge:261)
Hive Data Model imported successfully!!!
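When the console output is long, the two success lines can be pulled out with `grep`. This is a small sketch that assumes you saved the console output to a file yourself (for example by piping the script through `tee`). The function name `import_summary` and the file name in the usage comment are hypothetical.

```shell
# Sketch (hypothetical helper): summarize a saved copy of the console output.
# Prints every "Successfully imported all N tables from <db>" line and
# returns non-zero when the final success banner is missing.
import_summary() {
  # $1: file holding console output captured from import-hive.sh
  grep -E 'Successfully imported all [0-9]+ tables from ' "$1" || true
  grep -q 'Hive Data Model imported successfully' "$1"
}

# Usage, assuming the output was captured like this:
#   <atlas package>/hook-bin/import-hive.sh 2>&1 | tee import-console.out
#   import_summary import-console.out
```

The patterns are taken from the messages shown above. If your Atlas version words them differently, adjust the patterns accordingly.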

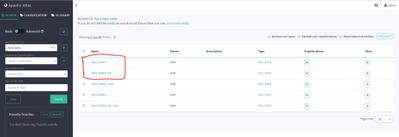

Now we can verify the imported tables in the Atlas UI. It should show all 22 tables imported above.
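The same check can be attempted from the command line. The console output above shows the import tool calling `/api/atlas/entities?type=hive_process&...` on the Atlas server. The sketch below builds a similar URL for the `hive_table` type. Querying with only the `type` parameter, and the credentials placeholder, are assumptions to confirm against the REST documentation for your Atlas version. The function name `atlas_entities_url` is hypothetical.

```shell
# Sketch (hypothetical helper): build an entity-listing URL in the same form
# as the requests seen in the import log. Nothing is sent to the server here.
atlas_entities_url() {
  # $1: Atlas base URL (the atlas.rest.address value), $2: entity type name
  printf '%s/api/atlas/entities?type=%s\n' "${1%/}" "$2"
}

# Example (needs a reachable Atlas server; USER:PASSWORD is a placeholder):
#   curl -s -u USER:PASSWORD \
#     "$(atlas_entities_url http://atlas-blueprint-test-1.openstacklocal:21000 hive_table)"
```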

The logs for the import script are in <atlas package>/logs/import-hive.log

Running import script on kerberized cluster

The above works on a non-secure cluster, but on a kerberized cluster you need to pass additional options to the command.

<atlas package>/hook-bin/import-hive.sh -Dsun.security.jgss.debug=true -Djavax.security.auth.useSubjectCredsOnly=false -Djava.security.krb5.conf=[krb5.conf location] -Djava.security.auth.login.config=[jaas.conf location]

- krb5.conf is typically found at /etc/krb5.conf.

- For details about jaas.conf, see the Atlas security documentation.
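To avoid typing the options by hand, the command line can be assembled from the two file locations. This is a sketch only: the function name `build_kerberized_import_cmd` is hypothetical, the options are exactly those listed above, and the jaas.conf path in the usage comment is a placeholder for wherever your file lives.

```shell
# Sketch (hypothetical helper): print the kerberized import command
# for a given Atlas directory, krb5.conf, and jaas.conf.
build_kerberized_import_cmd() {
  # $1: Atlas package directory, $2: krb5.conf path, $3: jaas.conf path
  printf '%s' "$1/hook-bin/import-hive.sh"
  printf ' %s' \
    "-Dsun.security.jgss.debug=true" \
    "-Djavax.security.auth.useSubjectCredsOnly=false" \
    "-Djava.security.krb5.conf=$2" \
    "-Djava.security.auth.login.config=$3"
  printf '\n'
}

# Usage (the jaas.conf path is a placeholder):
#   build_kerberized_import_cmd /usr/hdp/current/atlas-server /etc/krb5.conf /path/to/jaas.conf
```

The function only prints the command so it can be reviewed before it is run.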

Created on 05-10-2018 08:14 PM

HDP 2.6.3 is missing this script. Any hints as to where it was moved?

Created on 05-10-2018 08:22 PM

FYI: This file seems to be present only on the Hive server.

Created on 08-30-2018 03:07 PM - edited 08-17-2019 08:41 AM

I ran the import-hive.sh script and I can search for Hive entities such as tables, databases, views, and columns, but there is no lineage. Is that normal?

Before installing Atlas, there were two Hive tables in my cluster: atlas_testm and atlas_testm_ext (a view based on atlas_testm).

After installing Atlas and starting the Atlas services, I ran import-hive.sh. I can see both tables in the Atlas web UI through search, but there is no lineage showing the relationship between atlas_testm and atlas_testm_ext. Is that normal?

I want to know whether import-hive.sh does not support importing the historical lineage of Hive tables.

This problem has been bothering me for a long time.

Created on 09-13-2018 12:53 PM

At least for version 2.6.3 and above, the section "Running import script on kerberized cluster" is wrong. You don't need to provide any of the options (properties) indicated (except maybe the debug one, if you want debug output) because they are automatically detected and included in the script.

Also, at least in 2.6.5, running the script directly on a Kerberized cluster fails because of the CLASSPATH generated in the script. I had to edit it, replacing many single JAR files with a glob on their parent folder, for the command to run without error.

If you have this problem, see the answer to the "Atlas can't see Hive tables" question.
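The classpath edit described in this comment can be sketched as a small filter. It takes a colon-separated classpath, replaces each entry ending in `.jar` with `<parent folder>/*` (the wildcard form that Java accepts in a classpath), and drops duplicates. The function name `collapse_classpath` is hypothetical, and this is not how the script itself builds its CLASSPATH. It only illustrates the transformation.

```shell
# Sketch (hypothetical helper): shorten a classpath by replacing individual
# JAR entries with a wildcard on their parent directory.
collapse_classpath() (
  # The parentheses run the body in a subshell, so the IFS and noglob
  # changes below do not leak into the caller.
  set -f        # keep "dir/*" entries from being expanded by the shell
  IFS=':'
  result=""
  seen=":"
  for entry in $1; do
    case "$entry" in
      *.jar) entry="$(dirname "$entry")/*" ;;
    esac
    # Skip entries that were already emitted.
    case "$seen" in
      *":${entry}:"*) continue ;;
    esac
    seen="${seen}${entry}:"
    result="${result:+${result}:}${entry}"
  done
  printf '%s\n' "$result"
)

# Usage with made-up paths:
#   collapse_classpath "/a/lib/x.jar:/a/lib/y.jar:/etc/hive/conf:/b/z.jar"
```

Keep in mind that a wildcard entry pulls in every JAR in that folder, which may be more than the original list named.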