LLAP sizing and setup

Created on 11-29-2017 09:05 PM - edited 08-17-2019 09:58 AM

I'd like to thank @Jean-Philippe Player, @bpreachuk, @ghagleitner, @gopal, @ndembla and @Prasanth Jayachandran for providing input and content for this article.

Introduction

This document describes how to set up LLAP for reasonable performance with a typical workload. It is intended as a starting point, not as the definitive answer to all tuning questions. However, the recommendations in this document have been applied both to our own instances and to instances we help other teams run.

The context for the examples

Each step below is accompanied by an example based on allocating ~50% of a cluster to LLAP. The cluster has 12 nodes, each with 16 cores and 96Gb dedicated to the YARN NodeManager.

Basic cluster setup

Pick the fraction of the cluster to be used by LLAP

This is determined by the workload (amount of data, concurrency, types of queries, desired latencies). Typical values are in the 15-50% range on an existing cluster; it is also possible to give the entire cluster to LLAP, depending on the use case.

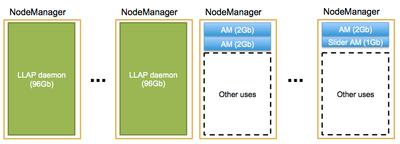

LLAP runs 3 types of YARN containers in the cluster:

- Execution daemons, which process the data.

- Query coordinators (Tez AMs), which orchestrate the execution of the queries.

- A single Slider AM, which starts and monitors the daemons.

The bulk of the capacity allotted for LLAP will be used by the Execution daemons. For best results, whole YARN nodes should be allocated for these daemons; therefore, you should pick the number of whole nodes to use based on the fraction of the cluster to be dedicated to LLAP.

Example: we want to use 50% of a 12-node cluster, so we will have 6 LLAP daemons.

Pick the query parallelism and number of coordinators

This will depend heavily on the application. One thing to be aware of is that with BI tools, the number of queries running at any moment is typically much smaller than the number of sessions: users don't run queries all the time because they take time to look at the results, and some tools avoid queries by using caching.

The number of queries determines the number of query coordinators (Tez AMs): one per query, plus some constant slack in case sessions are temporarily unavailable due to maintenance or cluster issues.

Example: we have determined we need to run 9 queries in parallel; use 10 AMs.
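The first two choices can be sketched as a small calculation. This is only an illustration under stated assumptions: the function names are hypothetical, rounding the node count down is one possible policy (the article only says to pick whole nodes), and a slack of 1 AM is taken from the example rather than from a general rule.

```python
import math


def llap_node_count(total_nodes: int, llap_fraction: float) -> int:
    """Whole nodes given to LLAP daemons (one daemon per node).

    Rounding down is an assumption; at least one node is always used.
    """
    return max(1, math.floor(total_nodes * llap_fraction))


def tez_am_count(parallel_queries: int, slack: int = 1) -> int:
    """One query coordinator (Tez AM) per parallel query, plus constant slack."""
    return parallel_queries + slack


print(llap_node_count(12, 0.5))  # 6 LLAP daemons
print(tez_am_count(9))           # 10 Tez AMs
```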

Determine LLAP daemon size

As described above, we will give whole NM nodes to LLAP daemons; however, we also need to leave some space on the cluster for the query coordinators and the Slider AM.

There are two options. First, it is possible to run these on non-LLAP nodes. In this case, each LLAP daemon can take the entire memory of an NM (see the YARN config tab in Ambari, under "Memory allocated for all YARN containers on a node").

If that is acceptable, it's the optimal approach (it maximizes daemon memory and avoids noisy neighbors). However,

- this will use more combined resources than just the (number of LLAP nodes)/(total number of nodes), because AMs will take resources on other nodes.

- it's impossible if all NMs are running LLAP daemons (~100% LLAP cluster), and risky even when there are 1-2 non-LLAP NMs (because all the query coordinators would be concentrated on one node).

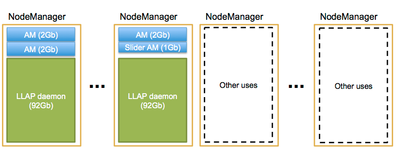

The alternative approach is to run AMs on the nodes with LLAP daemons. In this case, LLAP daemon container size needs to be adjusted down to leave space:

There are (N_Tez_AMs + 1)/N_LLAP_nodes extra processes on each node (the Tez AMs plus the single Slider AM, spread across the LLAP nodes); we recommend 2Gb per AM process. So, for this case, each LLAP daemon will use (NM_memory - 2Gb * ceiling((N_Tez_AMs + 1)/N_LLAP_nodes)).

Example: We will use the 2nd approach; we need ceiling((10+1)/6) = 2 AM processes per LLAP node, reserving 2 * 2Gb per node. Therefore, LLAP daemon size will be 96Gb – 4Gb = 92Gb.
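The daemon size formula above can be written as a short sketch. The function and parameter names are hypothetical; the 2Gb per AM process is the recommendation from the text, and the `colocate_ams` flag is just a way to show both options side by side.

```python
import math

AM_MEMORY_GB = 2  # recommended reservation per AM process (from the text)


def am_processes_per_llap_node(n_tez_ams: int, n_llap_nodes: int) -> int:
    """Tez AMs plus one Slider AM, spread over the LLAP nodes, rounded up."""
    return math.ceil((n_tez_ams + 1) / n_llap_nodes)


def llap_daemon_size_gb(nm_memory_gb: int, n_tez_ams: int, n_llap_nodes: int,
                        colocate_ams: bool = True) -> int:
    """Container size for one LLAP daemon.

    With colocate_ams=False (AMs run on non-LLAP nodes) the daemon takes
    the whole NodeManager memory.
    """
    if not colocate_ams:
        return nm_memory_gb
    reserved = AM_MEMORY_GB * am_processes_per_llap_node(n_tez_ams, n_llap_nodes)
    return nm_memory_gb - reserved


print(am_processes_per_llap_node(10, 6))  # 2
print(llap_daemon_size_gb(96, 10, 6))     # 92
```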

Set up YARN and the LLAP queue

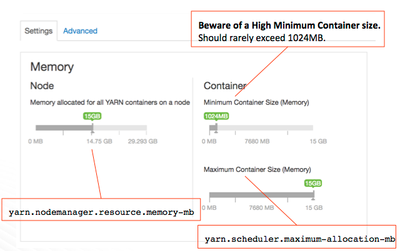

YARN settings for LLAP, as well as the LLAP queue are usually set up by Ambari; if setting up manually or encountering problems, there are a few things to ensure:

- YARN preemption should be enabled. Even if there is enough memory in the cluster for the execution daemons, it might be too fragmented for YARN to allocate whole nodes to LLAP. Preemption ensures that YARN can free up memory and start LLAP; without it, startup might not succeed.

- Minimum YARN container size should be 1024Mb and maximum should be the total memory per NM. LLAP daemon is a container so it will not be able to use more memory than that.

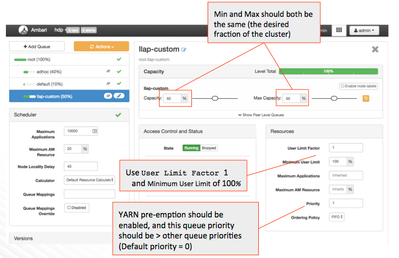

When setting up a custom LLAP queue:

- Set the queue size >= (2Gb * N_Tez_AMs) + (1Gb [Slider AM]) + (daemon_size * N_LLAP_nodes).

- Ensure the queue has higher priority than other YARN queues (for preemption to work; see the above YARN setup section).

- Make sure queue min and max capacity is the same (full capacity), and there are no user limits on the queue.
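As a rough check of the queue size bound above, the example numbers can be plugged in directly. The names below are hypothetical, and expressing the result as a percentage of total NodeManager memory is an assumption about how the queue capacity would be entered.

```python
AM_MEMORY_GB = 2         # per Tez AM, as recommended in the text
SLIDER_AM_MEMORY_GB = 1  # Slider AM, from the queue size formula


def min_llap_queue_gb(n_tez_ams: int, daemon_size_gb: int, n_llap_nodes: int) -> int:
    """Lower bound for the LLAP queue size in Gb."""
    return (AM_MEMORY_GB * n_tez_ams
            + SLIDER_AM_MEMORY_GB
            + daemon_size_gb * n_llap_nodes)


queue_gb = min_llap_queue_gb(n_tez_ams=10, daemon_size_gb=92, n_llap_nodes=6)
cluster_gb = 12 * 96  # 12 nodes with 96Gb for YARN each

print(queue_gb)                               # 573
print(round(100 * queue_gb / cluster_gb, 1))  # 49.7 (percent of the cluster)
```

For the running example, the bound comes out just under the ~50% of the cluster that was budgeted for LLAP.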

Determine the LLAP daemon CPU & memory configuration

Overview

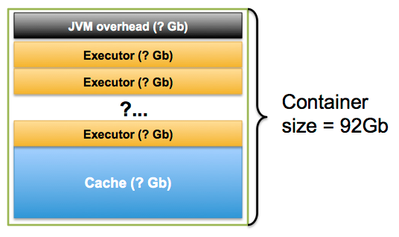

There are three numbers that determine the memory configuration for LLAP daemons.

- Total memory: The container size that was determined above

- Xmx: Memory used by executors, and

- Off-heap cache memory.

Total memory = off-heap cache memory + Xmx (used by executors and daemon-specific overhead) + JVM overhead. The Xmx overhead (shared Java objects and internal structures) is accounted for by LLAP internally, so it can be ignored in the calculations.

In most cases, you can just follow the standard steps below; however, sometimes the process needs to be tweaked. The following conflicting criteria apply:

- It's good to maximize task parallelism (up to one executor per core).

- It's good to have at least 4 Gb RAM per executor. More memory can provide some diminishing returns; less can sometimes be acceptable but can lead to problems (OOMs, etc.).

- It's good to have IO layer enabled, which requires some cache space; more cache may be useful depending on workload, esp. on a cloud FS for BI queries.

So, the number of executors, the cache size, and the memory per executor have to be balanced against each other according to these 3 criteria. The standard steps are:

Pick the number of executors per node

Usually the same as the number of cores.

Example: there are 16 cores, so we will use 16 executors.

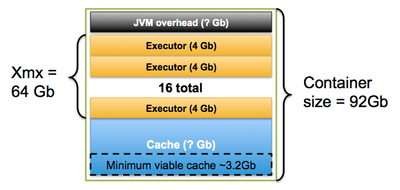

Determine the Xmx and minimum cache memory needed by executors

The daemon uses almost all of its heap memory (Xmx) for processing query work; the shared overheads are accounted for by LLAP. Thus, we determine the daemon Xmx based on executor memory; you should aim to give a single executor around 4Gb. So, Xmx = n_executors * 4Gb. LLAP executors use memory for things like group by tables, sort buffers, shuffle buffers, map join hashtables, PTF, etc.; generally, the more memory they have, the less likely they are to spill or to fall back to a less efficient plan, e.g. switching from a map join to a shuffle join. For some background, see https://community.hortonworks.com/articles/149998/map-join-memory-sizing-for-llap.html

If there isn't enough memory on the machine, the default choice is to adjust the number of executors down. It is possible to give executors less memory (e.g. 3Gb) to keep their number higher; however, that must always be backed by testing with the target workload. Insufficient memory can cause much bigger performance problems than reduced task parallelism.

Additionally, if the IO layer is enabled, each executor needs about 200Mb of cache (cache buffers are also used as the IO buffer pool) for reasonable operation. The cache size will be covered in the next section.

Example: Xmx = 16 executors * 4 Gb = 64 Gb.

Additionally, based on 16 executors, we know that it's good to have (0.2 Gb * 16) = 3.2 Gb cache if we are going to enable it.
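The same arithmetic, as a minimal sketch. The function names are hypothetical; 4 Gb per executor and 200 Mb of cache per executor are the guideline values from the text, and the cache minimum is kept in Mb to avoid fractional Gb.

```python
GB_PER_EXECUTOR = 4          # target heap per executor (guideline from the text)
CACHE_MB_PER_EXECUTOR = 200  # minimum IO-layer cache per executor


def daemon_xmx_gb(n_executors: int, gb_per_executor: int = GB_PER_EXECUTOR) -> int:
    """Daemon heap size: executors times memory per executor."""
    return n_executors * gb_per_executor


def min_cache_mb(n_executors: int) -> int:
    """Smallest cache that still gives each executor its IO buffers."""
    return n_executors * CACHE_MB_PER_EXECUTOR


print(daemon_xmx_gb(16))  # 64 (Gb)
print(min_cache_mb(16))   # 3200 (Mb), i.e. 3.2 Gb
```

Passing `gb_per_executor=3` shows the trade-off mentioned above: 16 executors would then need 48 Gb of heap, which should be validated against the target workload.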

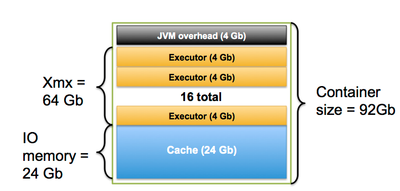

Determine headroom and cache size

We need to leave some memory in the daemon for Java VM overhead (GC structures, etc.) as well as to give the container a little safety margin to prevent it from being killed by YARN. This headroom should be about 6% of Xmx, but no more than 6 Gb. It is not set directly; instead, it is used to calculate the container memory remaining for use by the off-heap cache.

Take out Xmx and the headroom from the total container size to determine cache size. If the cache size is below the viable size above, reduce Xmx to allow for more memory here (typically by reducing the number of executors) or disable LLAP IO (hive.llap.io.enabled = false).

Example:

Xmx is 64 Gb, so headroom = 0.06 * 64 Gb = ~4 Gb (which is lower than 6 Gb).

Cache size = 92 Gb total – 64 Gb Xmx – 4 Gb headroom = 24 Gb. This is above the minimum of 3.2 Gb.
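The headroom and cache steps, together with the "reduce the number of executors if the cache is too small" rule, can be sketched as follows. This is an illustration only: the function names are hypothetical, and stepping the executor count down one at a time is an assumed search strategy rather than something the article prescribes. Note that the example above rounds the 3.84 Gb headroom up to 4 Gb, so the unrounded cache size here is slightly larger than 24 Gb.

```python
def headroom_gb(xmx_gb: float) -> float:
    """JVM overhead and safety margin: about 6% of Xmx, capped at 6 Gb."""
    return min(0.06 * xmx_gb, 6.0)


def cache_size_gb(container_gb: float, xmx_gb: float) -> float:
    """Off-heap cache: what is left after the heap and the headroom."""
    return container_gb - xmx_gb - headroom_gb(xmx_gb)


def fit_executors(container_gb: float, cores: int,
                  gb_per_executor: float = 4.0,
                  cache_gb_per_executor: float = 0.2):
    """Largest executor count (up to one per core) whose cache is still viable.

    Returns (n_executors, xmx_gb, cache_gb). Raises ValueError if even one
    executor does not fit; in that case LLAP IO would have to be disabled
    or the executors given less memory.
    """
    for n in range(cores, 0, -1):
        xmx = n * gb_per_executor
        cache = cache_size_gb(container_gb, xmx)
        if cache >= n * cache_gb_per_executor:
            return n, xmx, cache
    raise ValueError("container too small for the requested executor memory")


n, xmx, cache = fit_executors(container_gb=92, cores=16)
print(n, xmx, round(cache, 2))      # 16 executors, 64.0 Gb Xmx, ~24.16 Gb cache
print(fit_executors(48, 16)[0])     # a 48 Gb container only fits 10 executors
```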

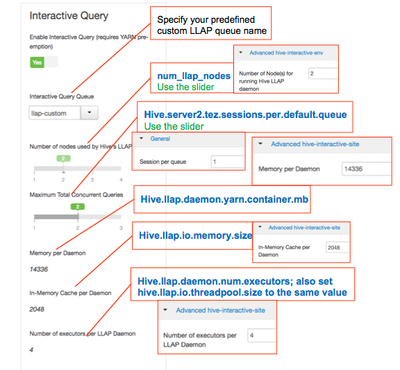

Configure interactive query

You can specify the above settings on the main interactive query panel, as well as in advanced config (or validate the values predetermined by Ambari).

Some other settings from the advanced config that may be of interest:

- AM size can be modified in custom Hive config by changing tez.am.resource.memory.mb.

Created on 12-06-2017 07:04 PM

Outstanding article!

Created on 12-07-2017 07:33 PM

Thank you 🙂

Created on 12-27-2017 04:39 PM

Thanks a lot for this article. This is exactly what I needed !

Created on 05-04-2018 01:30 PM

Where do we set the 4Gb executor memory? Is there a parameter to set the executor memory?

Created on 05-28-2018 04:02 PM

Created on 05-29-2018 08:03 PM

Yes, I think that should be sufficient. You can set it higher if you see issues (like container getting killed by YARN) but 12G definitely seems excessive for 32Gb Xmx. I'll try to find out why the engine would recommend such high values.

Created on 01-07-2019 12:00 PM

Brilliant article. One suggestion: the example you gave in "Configure interactive query" doesn't match the example you discussed. Can you please update it?