MiNiFi - C++ IoT Cat Sensor

Created on 02-27-2018 05:57 PM - edited 08-17-2019 08:42 AM

Keeping track of where a cat is can be a tricky task. In this article, we'll design and prototype a smart IoT cat sensor which detects when a cat is in proximity. This sensor is meant to be part of a larger network of cat sensors covering a target space.

Flow Design

Sensor input

We start by polling an image sensor (camera) for image data. We use the GetUSBCamera processor configured for the USB camera device attached to our sensor controller.

On our system, we had to open up permissions on the USB camera device; otherwise, MiNiFi's GetUSBCamera processor recorded an access denied error in the logs:

chown user /dev/bus/usb/001/014
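Before changing ownership, it can help to confirm that the device node is really the problem. The following is a minimal sketch, assuming the device path from the command above (the bus and device numbers will differ on other systems) and assuming the processor needs both read and write access; the helper name `usb_node_accessible` is hypothetical:

```python
import os


def usb_node_accessible(path):
    """Return True if the current user can read and write the device node."""
    # os.access checks the calling user's permissions against the node.
    return os.path.exists(path) and os.access(path, os.R_OK | os.W_OK)


if __name__ == "__main__":
    # Path taken from the chown example; bus/device numbers are system-specific.
    node = "/dev/bus/usb/001/014"
    if usb_node_accessible(node):
        print(f"{node}: accessible")
    else:
        print(f"{node}: missing or not accessible; check ownership or permissions")
```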

We then configure the sensor processor as follows:

    Processors:
      - name: Get
        class: GetUSBCamera
        Properties:
          FPS: .5
          Format: RAW
          USB Vendor ID: 0x045e
          USB Product ID: 0x0779
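The vendor ID, product ID, and device node path can all be read off a single line of `lsusb` output. The sketch below parses one such line; the sample line reuses the IDs and bus/device numbers from this article, but its description text is a placeholder, and the helper name `parse_lsusb_line` is hypothetical:

```python
import re

# Matches device lines in the format printed by `lsusb`, e.g.
# "Bus 001 Device 014: ID 045e:0779 <description>"
LSUSB_LINE = re.compile(
    r"Bus (?P<bus>\d{3}) Device (?P<dev>\d{3}): "
    r"ID (?P<vid>[0-9a-fA-F]{4}):(?P<pid>[0-9a-fA-F]{4})"
)


def parse_lsusb_line(line):
    """Extract the device node path and vendor/product IDs from one lsusb line."""
    m = LSUSB_LINE.match(line.strip())
    if m is None:
        raise ValueError(f"not an lsusb device line: {line!r}")
    return {
        "node": f"/dev/bus/usb/{m['bus']}/{m['dev']}",
        "USB Vendor ID": f"0x{m['vid'].lower()}",
        "USB Product ID": f"0x{m['pid'].lower()}",
    }


if __name__ == "__main__":
    # Hypothetical sample line; the description text is a placeholder.
    sample = "Bus 001 Device 014: ID 045e:0779 Example Camera"
    for key, value in parse_lsusb_line(sample).items():
        print(f"{key}: {value}")
```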

Machine Learning Inference on the Edge

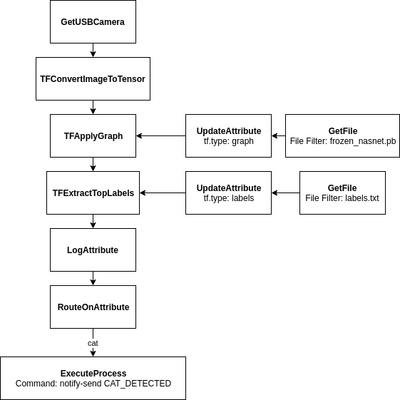

We use TensorFlow to perform class inference on the image data. We do this at the sensor rather than in a centralized system in order to significantly reduce inference latency and network bandwidth consumption. This is a three-step process:

- Convert image data to a tensor using TFConvertImageToTensor

- Perform inference using a pre-trained NASNet Large model applied via TFApplyGraph

- Extract inferred classes using TFExtractTopLabels
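To put a rough number on the bandwidth claim, the sketch below compares shipping raw frames with shipping inference results. The frame size and frame rate come from this article's configuration, and the result size is the FlowFile size shown in the sample log output at the end of the article. One byte per channel is an assumption about the RAW format, so treat the figures as an estimate only:

```python
# Values taken from the article's configuration.
WIDTH, HEIGHT, CHANNELS = 1280, 800, 3
FPS = 0.5
# Assumption: one byte per channel (8-bit samples) in the RAW format.
BYTES_PER_SAMPLE = 1
# FlowFile size reported by LogAttribute in the article's sample output.
RESULT_BYTES = 4020


def bytes_per_second(payload_bytes, fps):
    """Average bandwidth needed to ship one payload per captured frame."""
    return payload_bytes * fps


raw_frame_bytes = WIDTH * HEIGHT * CHANNELS * BYTES_PER_SAMPLE
raw_rate = bytes_per_second(raw_frame_bytes, FPS)
result_rate = bytes_per_second(RESULT_BYTES, FPS)

if __name__ == "__main__":
    print(f"raw frame:     {raw_frame_bytes} bytes")
    print(f"raw stream:    {raw_rate:.0f} bytes/s")
    print(f"result stream: {result_rate:.0f} bytes/s")
    print(f"reduction:     about {raw_frame_bytes / RESULT_BYTES:.0f}x")
```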

Preparation of NASNet Graph

We must perform some preliminary steps to get the NASNet graph into a form that MiNiFi can use. First, we export the inference graph using the export_inference_graph.py script from TensorFlow models research/slim:

python export_inference_graph.py --model_name=nasnet_large --output_file=./nasnet_inf_graph.pb

This will also create a labels.txt file, which we will save for later use.
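For a quick look at what the labels file contains, it can be loaded into a dictionary. This is a sketch only, assuming each line has the `index:name` shape seen in the `tf.top_label_*` attribute values in the log output at the end of this article; the helper name `parse_labels` is hypothetical:

```python
def parse_labels(lines):
    """Map class index to label text from lines shaped like '284:Persian cat'."""
    labels = {}
    for line in lines:
        line = line.strip()
        if not line:
            continue
        # Split on the first colon only; the label text may contain punctuation.
        index, _, name = line.partition(":")
        labels[int(index)] = name
    return labels


if __name__ == "__main__":
    # Sample lines copied from the label values in the article's log output.
    sample = ["259:Samoyed, Samoyede", "284:Persian cat", "357:weasel"]
    labels = parse_labels(sample)
    print(labels[284])
```

With a real file, the same function can be called as `parse_labels(open("labels.txt"))`.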

Next, we download and extract the checkpoint nasnet-a_large_04_10_2017.tar.gz.
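Extraction can be scripted with the standard library. The sketch below assumes the archive has already been downloaded into the working directory; the helper name `extract_checkpoint` is hypothetical, and the path check is a general precaution rather than something this particular archive is known to need:

```python
import tarfile
from pathlib import Path


def extract_checkpoint(archive_path, dest_dir):
    """Extract a .tar.gz checkpoint archive and return the member names."""
    dest = Path(dest_dir).resolve()
    dest.mkdir(parents=True, exist_ok=True)
    with tarfile.open(archive_path, "r:gz") as archive:
        names = archive.getnames()
        for name in names:
            # Refuse members that would land outside the destination directory.
            target = (dest / name).resolve()
            if target != dest and dest not in target.parents:
                raise ValueError(f"unsafe path in archive: {name}")
        archive.extractall(dest)
    return names


if __name__ == "__main__":
    # Archive name from the article; it must already be present locally.
    archive = Path("nasnet-a_large_04_10_2017.tar.gz")
    if archive.exists():
        for member in extract_checkpoint(archive, "."):
            print(member)
    else:
        print(f"{archive} not found; download it first")
```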

Next, we use freeze_graph to integrate the pre-trained checkpoint with the inference graph and save the result as a frozen graph:

    from tensorflow.python.tools import freeze_graph

    freeze_graph.freeze_graph(input_graph='./nasnet_inf_graph.pb',
                              input_saver='',
                              input_binary=True,
                              input_checkpoint='./model.ckpt',
                              output_node_names='final_layer/predictions',
                              restore_op_name='save/restore_all',
                              filename_tensor_name='save/Const:0',
                              output_graph='./frozen_nasnet.pb',
                              clear_devices=True,
                              initializer_nodes='')

MiNiFi Inference Flow

We use the following processors and connections to perform inference on images provided via our camera:

    Processors:
      - name: Convert
        class: TFConvertImageToTensor
        Properties:
          Input Format: RAW
          Input Width: 1280
          Input Height: 800
          Crop Offset X: 240
          Crop Offset Y: 0
          Crop Size X: 800
          Crop Size Y: 800
          Output Width: 331
          Output Height: 331
          Channels: 3
      - name: Apply
        class: TFApplyGraph
        Properties:
          Input Node: input:0
          Output Node: final_layer/predictions:0
      - name: Extract
        class: TFExtractTopLabels
      - name: Log
        class: LogAttribute
    Connections:
      - source name: Get
        source relationship name: success
        destination name: Convert
      - source name: Convert
        source relationship name: success
        destination name: Apply
      - source name: Apply
        source relationship name: success
        destination name: Extract
      - source name: Extract
        source relationship name: success
        destination name: Log
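The crop values in the Convert processor are consistent with taking the largest centered square from the 1280x800 frame before scaling it down to 331x331. The sketch below shows that arithmetic so the values can be recomputed for a camera with a different resolution; the function names are hypothetical, and whether the author derived the values this way is an assumption:

```python
def center_crop_box(width, height):
    """Return (offset_x, offset_y, size) of the largest centered square crop."""
    size = min(width, height)
    offset_x = (width - size) // 2
    offset_y = (height - size) // 2
    return offset_x, offset_y, size


def scale_factor(crop_size, output_size):
    """Ratio by which the cropped square is scaled to reach the model input size."""
    return output_size / crop_size


if __name__ == "__main__":
    # 1280x800 camera frame and 331x331 model input, as in the flow above.
    off_x, off_y, size = center_crop_box(1280, 800)
    print(f"Crop Offset X: {off_x}, Crop Offset Y: {off_y}, Crop Size: {size}x{size}")
    print(f"scale factor to 331x331: {scale_factor(size, 331):.3f}")
```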

We use the following processors and connections to supply TFApplyGraph with the inference graph and TFExtractTopLabels with the labels file:

    Processors:
      - name: GraphGet
        class: GetFile
        scheduling strategy: TIMER_DRIVEN
        scheduling period: 120 sec
        Properties:
          Keep Source File: true
          Input Directory: .
          File Filter: "frozen_nasnet.pb"
      - name: GraphUpdate
        class: UpdateAttribute
        Properties:
          tf.type: graph
      - name: LabelsGet
        class: GetFile
        scheduling strategy: TIMER_DRIVEN
        scheduling period: 120 sec
        Properties:
          Keep Source File: true
          Input Directory: .
          File Filter: "labels.txt"
      - name: LabelsUpdate
        class: UpdateAttribute
        Properties:
          tf.type: labels
    Connections:
      - source name: GraphGet
        source relationship name: success
        destination name: GraphUpdate
      - source name: GraphUpdate
        source relationship name: success
        destination name: Apply
      - source name: LabelsGet
        source relationship name: success
        destination name: LabelsUpdate
      - source name: LabelsUpdate
        source relationship name: success
        destination name: Extract

Route/Store/Forward Inferences

For the purposes of this prototype, we'll use RouteOnAttribute with a NiFi Expression Language expression to pick out flow files whose top label is a cat class, and route them to an ExecuteProcess processor that runs notify-send to notify us of a CAT_DETECTED event. In a production system, we may want to use Remote Process Groups to forward data of interest to a centralized system.

Our prototype flow looks like this:

    Processors:
      - name: Route
        class: RouteOnAttribute
        Properties:
          cat: ${"tf.top_label_0":matches('(282|283|284|285|286|287|288|289|290|291|292|293|294):.*')}
        auto-terminated relationships list:
          - unmatched
      - name: Notify
        class: ExecuteProcess
        Properties:
          Command: notify-send CAT_DETECTED
        auto-terminated relationships list:
          - success
    Connections:
      - source name: Log
        source relationship name: success
        destination name: Route
      - source name: Route
        source relationship name: cat
        destination name: Notify
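The routing rule can be tried out offline against sample label values. The sketch below reuses the regular expression from the `cat` property and applies it with a whole-string match, which is how the Expression Language `matches` function behaves; the helper name `is_cat` is hypothetical:

```python
import re

# Same pattern as the RouteOnAttribute "cat" property above.
CAT_PATTERN = re.compile(
    r"(282|283|284|285|286|287|288|289|290|291|292|293|294):.*"
)


def is_cat(top_label):
    """True if the entire label value matches the pattern."""
    return CAT_PATTERN.fullmatch(top_label) is not None


if __name__ == "__main__":
    # Label values copied from the article's sample log output.
    for value in ["284:Persian cat", "259:Samoyed, Samoyede", "357:weasel"]:
        route = "cat" if is_cat(value) else "unmatched"
        print(f"{value!r} -> {route}")
```

Because the match covers the whole value, an index such as 1284 or 2840 does not slip through just because it contains 284.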

Conclusion

We can now hold a cat up to our sensor and confirm that it detects a cat and triggers our notification:

    ----------
    Standard FlowFile Attributes
    UUID:0143d35c-1be5-11e8-a6f9-b06ebf2c6de8
    EntryDate:2018-02-27 12:38:21.748
    lineageStartDate:2018-02-27 12:38:21.748
    Size:4020 Offset:0
    FlowFile Attributes Map Content
    key:filename value:1519753101748318191
    key:path value:.
    key:tf.top_label_0 value:284:Persian cat
    key:tf.top_label_1 value:259:Samoyed, Samoyede
    key:tf.top_label_2 value:357:weasel
    key:tf.top_label_3 value:360:black-footed ferret, ferret, Mustela nigripes
    key:tf.top_label_4 value:158:papillon
    key:uuid value:0143d35c-1be5-11e8-a6f9-b06ebf2c6de8
    FlowFile Resource Claim Content
    Content Claim:/home/achristianson/workspace/minifi-article-2018-02-22/flow/contentrepository/1519753075140-43
    ----------
    [2018-02-27 12:38:23.958] [org::apache::nifi::minifi::core::ProcessSession] [info] Transferring 02951658-1be5-11e8-9218-b06ebf2c6de8 from Route to relationship cat
    [2018-02-27 12:38:25.754] [org::apache::nifi::minifi::processors::ExecuteProcess] [info] Execute Command notify-send CAT_DETECTED
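When checking many captures, it may be easier to pull the labels out of the log than to read it by eye. The sketch below assumes each `key:... value:...` attribute sits on its own line in the log file; the function names are hypothetical:

```python
import re

# Matches "key:<name> value:<text>" entries as printed by LogAttribute.
ATTRIBUTE_LINE = re.compile(r"^key:(?P<key>\S+) value:(?P<value>.*)$")


def parse_attributes(log_lines):
    """Collect FlowFile attributes from LogAttribute output lines."""
    attrs = {}
    for line in log_lines:
        m = ATTRIBUTE_LINE.match(line.strip())
        if m:
            attrs[m["key"]] = m["value"]
    return attrs


def top_labels(attrs):
    """Return label values ordered by rank (tf.top_label_0 first)."""
    ranked = sorted(
        (int(key.rsplit("_", 1)[1]), value)
        for key, value in attrs.items()
        if key.startswith("tf.top_label_")
    )
    return [value for _, value in ranked]


if __name__ == "__main__":
    # Lines copied from the article's sample output.
    sample = [
        "key:path value:.",
        "key:tf.top_label_1 value:259:Samoyed, Samoyede",
        "key:tf.top_label_0 value:284:Persian cat",
        "key:tf.top_label_2 value:357:weasel",
    ]
    for rank, label in enumerate(top_labels(parse_attributes(sample))):
        print(rank, label)
```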

MiNiFi - C++ makes it easy to create an IoT cat sensor. To complete our cat tracking system, we simply need to deploy a network of these sensors in the target space and configure the flow to deliver inferences to a centralized NiFi instance for storage and further analysis. We might also consider combining the image data with other data such as GPS sensor data using the GetGPS processor.

Created on 03-02-2018 11:03 PM

Can you add a complete config.yml?

Created on 03-02-2018 11:42 PM

Which version of TensorFlow needs to be installed? Can you link the installation document?

Created on 03-05-2018 02:06 PM

Sure, here's the complete yml (some paths, USB ID, image resolution, etc. are specific to my system): https://gist.github.com/achristianson/1dea217e5fcbc88b87e526d919dad2c0. The TensorFlow install process I used is documented here: https://github.com/apache/nifi-minifi-cpp/blob/master/extensions/tensorflow/BUILDING.md. There are multiple ways this could be done, but tensorflow_cc has worked well. I used whichever TensorFlow version the latest master of tensorflow_cc pulled in as of the publish date of this article; I believe that is 1.5.0.

Created on 03-05-2018 02:11 PM

Thanks!!!! That's very helpful.