My First Express Upgrade

Created on 02-10-2016 11:12 AM - edited 08-17-2019 01:17 PM

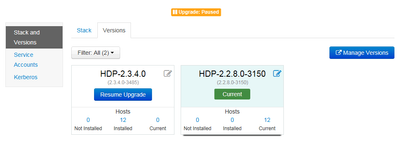

I just completed my first Express Upgrade (EU) using Ambari-2.2.0, from HDP-2.2.8 to HDP-2.3.4, and here are my observations and the issues I encountered. The cluster has 12 nodes, 2 masters and 10 workers, with Namenode HA and RM HA configured, running on RHEL-6.5 with Java-7. Installed Hadoop components: HDFS, MR2, Yarn, Hive, Tez, HBase, Pig, Sqoop, Oozie, ZooKeeper, and Ambari Metrics. About 2 weeks before this EU, the cluster was upgraded from HDP-2.1.10 and Ambari-1.7.1. Please use this as a reference only: the issues will differ depending on cluster settings and history (previous upgrade or fresh install), and the problems I had should by no means be considered representative or expected during every EU.

- It's a good idea to back up all databases supporting the cluster in advance; in my case Ambari, Hive metastore, Oozie and Hue (although Hue cannot be upgraded by Ambari).

- There is no need to prepare or download the HDP.repo file in advance; Ambari will create the file, now called HDP-2.3.4.repo, and distribute it to all nodes.

- The upgrade consists of registering a new HDP version, installing that new version on all nodes, and after that starting the upgrade.

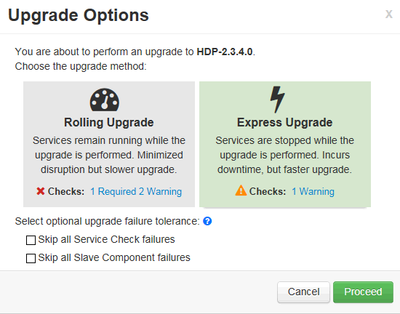

- After starting the upgrade, Ambari found that we could also do a Rolling Upgrade by enabling yarn.timeline-service.recovery.enabled (currently false), but we decided to do the Express Upgrade (EU) instead.

- There was only one warning for EU: that some *-env.sh files would be overwritten. That was fine; however, I backed up all those files for easier comparison with the new files after the upgrade.

- The upgrade started well, and everything was looking great: ZooKeeper, HDFS Name nodes and Data nodes, and Resource managers were all successfully upgraded and restarted. And then, when it looked like it would be effortless on my part, there was the first setback: the Node managers, all 6 of them, could not start after the upgrade. Before starting the upgrade I had chosen to ignore all failures (on both master and worker components), so I decided to keep going and fix the NMs later.

- Upgrade and restart of MapReduce2 and HBase was successful, and then the upgrade wizard ran service checks of the components upgraded up to that point. As expected, ZK, HDFS and HBase were successful, but the Yarn and MR2 tests failed. At that point I decided to see whether I could fix the NMs.

- A cool feature of EU is that one can pause the upgrade at any time, inspect the Ambari dashboard, do manual fixes and resume the EU when ready.

- Back to the failed NMs: the wizard log was just saying (for every NM) that it could not find it in the list of started NMs, which was not very helpful. So I checked the log on one of the NMs, which said:

2016-02-05 13:16:52,503 FATAL nodemanager.NodeManager (NodeManager.java:initAndStartNodeManager(540)) - Error starting NodeManager
org.apache.hadoop.service.ServiceStateException: org.fusesource.leveldbjni.internal.NativeDB$DBException: Corruption: 2 missing files; e.g.: /var/log/hadoop-yarn/nodemanager/recovery-state/yarn-nm-state/000035.sst

And indeed, in that directory I had 000040.sst but sadly no 000035.sst. I realized that this directory is my yarn.nodemanager.recovery.dir, and because Yarn NM recovery was enabled, the NM was trying to recover the state it had before it was stopped. All our jobs were stopped and we didn't care about recovering NM state, so after backing up the directory I decided to delete all files in it and try to start the NM manually. Luckily, that worked! The command to start a NM manually, as done by Ambari, run as the yarn user:

$ ulimit -c unlimited; export HADOOP_LIBEXEC_DIR=/usr/hdp/current/hadoop-client/libexec && /usr/hdp/current/hadoop-yarn-nodemanager/sbin/yarn-daemon.sh --config /usr/hdp/current/hadoop-client/conf start nodemanager
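The backup-and-clear step described above can be sketched as a small shell function. This is only an illustration, not what Ambari does: the function name `reset_nm_recovery_dir` and the backup location are hypothetical, and the sketch assumes the NodeManager on the host is stopped and that losing its recovery state is acceptable, as it was in this case.

```shell
# Hypothetical helper: archive a NodeManager recovery directory, then empty it.
# Assumes the NodeManager on this host is stopped and that there is no
# container state worth recovering.
reset_nm_recovery_dir() {
  local recovery_dir="$1"   # the value of yarn.nodemanager.recovery.dir
  local backup_root="$2"    # any directory outside recovery_dir with free space

  [ -d "$recovery_dir" ] || { echo "no such directory: $recovery_dir" >&2; return 1; }
  mkdir -p "$backup_root" || return 1

  local archive
  archive="$backup_root/nm-recovery-$(date +%Y%m%d%H%M%S).tar.gz"

  # Archive first; nothing is deleted unless the archive was written.
  tar -czf "$archive" -C "$recovery_dir" . || return 1

  # Remove the contents (including dot files) but keep the directory itself.
  find "$recovery_dir" -mindepth 1 -delete || return 1

  # Print the archive path so the caller knows where the backup went.
  echo "$archive"
}
```

It would be called with the recovery directory from the log above and a backup directory of your choice, for example `reset_nm_recovery_dir /var/log/hadoop-yarn/nodemanager/recovery-state /tmp/nm-backup`, before starting the NM manually.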

- After that, the EU went smoothly: it upgraded Hive, Oozie, Pig, Sqoop and Tez, and passed all service checks.

- At the very end, one can finalize the upgrade, or "Finalize later". I decided to finalize later and inspect the cluster.

- I noticed that the ZKFCs were still running on the old version 2.2.8, and tried to restart HDFS hoping that they would be started using the new version. They weren't, and on top of that I couldn't start the NNs! I realized that because the HDFS upgrade was not finalized, I needed the "-rollingUpgrade started" flag, so I started the NNs manually, as the hdfs user (Note: this is only required if you want to restart NNs before finalizing the upgrade):

$ ulimit -c unlimited; /usr/hdp/current/hadoop-client/sbin/hadoop-daemon.sh --config /usr/hdp/current/hadoop-client/conf start namenode -rollingUpgrade started

- After finalizing the upgrade and restarting HDFS, everything was running on the new HDP version. In addition, I did the following checks to make sure the old version was no longer in use:

- hdp-select status | grep 2\.2\.8 returns nothing

- ls -l /usr/hdp/current | grep 2\.2\.8 returns nothing

- ps -ef | grep java | grep 2\.2\.8 returns nothing or something not related to HDP
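The three checks above can be wrapped in one reusable filter. This is a minimal sketch; the function name `no_trace_of_version` is hypothetical. It succeeds only when its input contains no reference to the given version, and it escapes the dots itself so they are matched literally.

```shell
# Hypothetical helper: succeed only if stdin contains no reference to the
# given version string. Dots are escaped so "2.2.8" cannot match "2x2y8".
no_trace_of_version() {
  local version="$1"
  local pattern
  pattern=$(printf '%s' "$version" | sed 's/\./\\./g')
  ! grep -q -- "$pattern"
}

# Usage on an upgraded node (old version taken from this article):
# old=2.2.8
# hdp-select status       | no_trace_of_version "$old" && echo "hdp-select: clean"
# ls -l /usr/hdp/current  | no_trace_of_version "$old" && echo "symlinks: clean"
# ps -ef | grep '[j]ava'  | no_trace_of_version "$old" && echo "processes: clean"
```

As noted above, the process check can still report matches unrelated to HDP, so a failure there needs a manual look at the matching lines.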

- After finalizing the upgrade, the Oozie service check was failing. I realized that the Oozie share lib in HDFS is now in /user/oozie/share/lib_20160205182129, where the date/time in the directory name is derived from the time of creation. However, the permissions were insufficient: all jars had 644 permissions instead of 755. So, as the hdfs user, I changed the permissions, and after that the Oozie service check passed:

$ hdfs dfs -chmod -R 755 /user/oozie/share/lib_20160205182129
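To see which files are affected before (or after) changing permissions, the recursive listing can be filtered. This is a rough sketch: the function name `files_not_755` is hypothetical, and it assumes the usual `hdfs dfs -ls -R` layout, with the permission string in the first column and the path in the last, and paths without spaces.

```shell
# Hypothetical helper: read `hdfs dfs -ls -R` output on stdin and print the
# path of every regular file whose mode is not rwxr-xr-x (755).
# Assumes permissions are the first field, the path is the last field,
# and paths contain no spaces. Directory entries (leading "d") are skipped.
files_not_755() {
  awk '$1 ~ /^-/ && substr($1, 2, 9) != "rwxr-xr-x" { print $NF }'
}

# Usage (share lib path taken from this article):
# hdfs dfs -ls -R /user/oozie/share/lib_20160205182129 | files_not_755
```

An empty result after the chmod suggests every file under the share lib now has the expected mode.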

- The Pig service check was also failing. I found that pig-env.sh was wrong: it still had HCAT_HOME, HIVE_HOME, PIG_CLASSPATH and PIG_OPTS pointing to jars in the now non-existent /usr/lib/hive and /usr/lib/hive-catalog directories. I commented out everything, leaving only:

JAVA_HOME=/usr/lib/jvm/jre-1.7.0-openjdk.x86_64

HADOOP_HOME=${HADOOP_HOME:-/usr}

if [ -d "/usr/lib/tez" ]; then

PIG_OPTS="$PIG_OPTS -Dmapreduce.framework.name=yarn"

fi

- Fixed templeton.libjars, which got scrambled during the upgrade:

templeton.libjars=/usr/hdp/${hdp.version}/zookeeper/zookeeper.jar,/usr/hdp/${hdp.version}/hive/lib/hive-common.jar

- At this point all service checks were successful, and additional tests running Pi, Teragen/Terasort, simple Hive and Pig jobs were completing without issues.

- And so, my first EU was over! Despite these minor setbacks, it was much faster than doing it all manually. Give it a try when you have a chance.