- NiFi - Understanding how to use Process Groups and...

Created on 02-11-2016 09:36 PM - edited 08-17-2019 01:14 PM

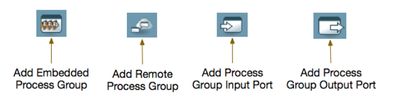

The purpose of this article is to explain what Process Groups and Remote Process Groups (RPGs) are and how input and output ports are used to move FlowFiles between them. Process groups are a valuable addition to any complex dataflow. They give DataFlow Managers (DFMs) the ability to group a set of processors onto their own embedded canvas. Remote Process Groups allow a DFM to treat another NiFi instance or cluster as just another process group in the larger dataflow picture. Simply being able to build flows on different canvases is nice, but what if I need to move NiFi FlowFiles between these canvases? This is where input and output ports come into play. They allow you to move FlowFiles between canvases, whether those canvases are local to a single NiFi or belong to completely different NiFi instances/clusters.

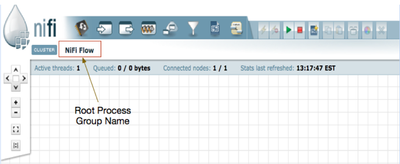

Embedded Process Groups: Let's start by talking about the simplest use of multiple embedded canvases through process groups. When you start NiFi for the very first time, you are given a blank canvas. This blank canvas is nothing more than a process group itself. It is referred to as the root process group.

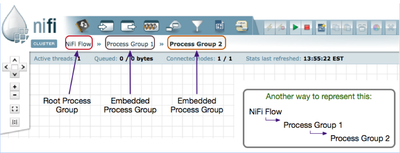

From there you are able to add additional process groups to that top-level canvas. You can drill down into each added process group, which gives you another blank canvas to build dataflows on. When you enter a process group, you will see the hierarchy represented just above the canvas in the UI ( NiFi Flow >> Process Group 1 ). NiFi does not restrict the number of process groups you can create or the depth you can go with them. You could compare the process group hierarchy to a Windows directory structure. So if you added another process group inside one that you already created, you would now be two layers deep ( NiFi Flow >> Process Group 1 >> Process Group 2 ). The hierarchy shown above your canvas allows you to quickly jump up one or more layers, all the way to the root level, by simply clicking on the name of a process group.
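To make the directory-like nesting concrete, here is a minimal Python sketch of the hierarchy and the breadcrumb shown above the canvas. The `ProcessGroup` class and its methods are hypothetical stand-ins written for this illustration; they are not part of NiFi or its API.

```python
class ProcessGroup:
    """Hypothetical model of a process group (not a NiFi class)."""

    def __init__(self, name, parent=None):
        self.name = name
        self.parent = parent      # None for the root process group
        self.children = []

    def add_group(self, name):
        """Create a nested process group one level below this one."""
        child = ProcessGroup(name, parent=self)
        self.children.append(child)
        return child

    def breadcrumb(self):
        """Build the root-to-current path, as displayed above the canvas."""
        names = []
        node = self
        while node is not None:
            names.append(node.name)
            node = node.parent
        return " >> ".join(reversed(names))


root = ProcessGroup("NiFi Flow")
pg1 = root.add_group("Process Group 1")
pg2 = pg1.add_group("Process Group 2")
print(pg2.breadcrumb())
```

Only the path from the root down to the current group is built, which mirrors how the UI shows the hierarchy for the group you are in rather than its siblings.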

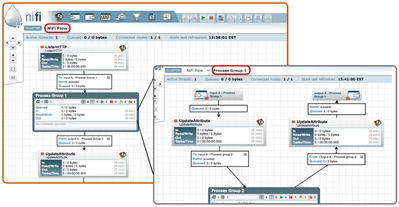

While you can add any number of process groups at the same embedded level, the hierarchy is only shown from root down to the current process group you are in. Now that we understand how to add embedded process groups, let's talk about how we move data in and out of these process groups. This is where input and output ports come into play. Input and output ports exist to move FlowFiles between a process group and ONE LEVEL UP from that process group. Input ports accept FlowFiles coming from one level up, and output ports allow FlowFiles to be sent one level up. If I have a process group added to my canvas, I cannot drag a connection to it until at least one input port exists inside that process group. I also cannot drag a connection off of that process group until at least one output port exists inside it. You can only move FlowFiles up or down one level at a time. Given the example of a process group within another process group, FlowFiles would need to be moved from the deepest level up to the middle layer before finally being moved to the root canvas.
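The two rules above (a port must exist before a connection can be drawn, and FlowFiles move only one level per hop) can be sketched in Python as follows. The function names and the dictionary layout are assumptions made for this illustration, not NiFi structures.

```python
def hops_to_root(path):
    """Return the (from_group, to_group) hops needed to move a FlowFile
    from the deepest group in `path` up to the root, one level per hop.

    `path` lists group names from the root down.
    """
    hops = []
    # Walk from the deepest group upward; each hop crosses exactly one level
    for depth in range(len(path) - 1, 0, -1):
        hops.append((path[depth], path[depth - 1]))
    return hops


def can_connect(ports, direction):
    """A connection can be drawn *to* a group only if it has an input port,
    and *from* a group only if it has an output port.

    `ports` is a dict such as {"input": ["In"], "output": []}.
    """
    needed = "input" if direction == "to" else "output"
    return len(ports.get(needed, [])) > 0


path = ["NiFi Flow", "Process Group 1", "Process Group 2"]
for src, dst in hops_to_root(path):
    print(f"{src} -> {dst}")

# A group with an input port but no output port can receive but not send
ports = {"input": ["In"], "output": []}
print(can_connect(ports, "to"), can_connect(ports, "from"))
```

Each hop in the result corresponds to one output port in the group being left, so the two-level example needs an output port in both Process Group 2 and Process Group 1.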

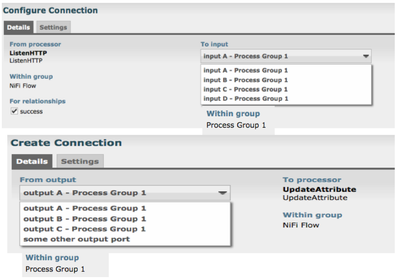

In the above example I have a small flow pushing FlowFiles into an embedded process group (Process Group 1) and also pulling data from the same embedded process group. As you can see, I have created an input and output port inside Process Group 1. This allowed me to draw a connection to and from the process group on the root canvas layer. You can have as many different input and output ports inside any process group as you like. When you draw the connection to a process group, you will be able to select which input port to send the FlowFiles to. When you draw a connection from a process group to another processor, you will be able to pick which output port to pull FlowFiles from.

Every input and output port within a single process group must have a unique name. NiFi validates each port name to prevent duplicates.

Remote Process Groups:

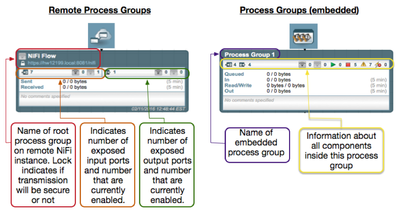

We refer to the ability to send FlowFiles between different NiFi instances as Site-to-Site. Site-to-Site is configured in much the same way we just configured moving files between embedded process groups on a single NiFi instance. Instead of moving FlowFiles between different process groups (layers) within the same NiFi, we are moving FlowFiles between different NiFi instances or clusters. If a DFM reaches a point in their dataflow where they want to send data to another NiFi instance or cluster, they would add a Remote Process Group (RPG). These Remote Process Groups are not configured with unique system port numbers; instead, they all use the same Site-to-Site port number configured in your nifi.properties file. I will not be covering the specific NiFi configuration needed to enable Site-to-Site in this article. For information on how to enable and configure Site-to-Site on a NiFi instance, see the Site-to-Site Properties section of the Admin Guide. Let's take a quick look at how these two components differ:

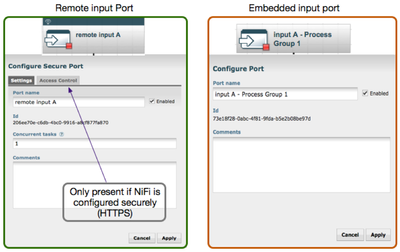

As I explained earlier, input and output ports are used to move FlowFiles one level up from the process group they are created in. At the top level of your canvas (root process group level), adding input or output ports gives that NiFi the ability to receive FlowFiles from another NiFi instance (input port) or to have another NiFi pull FlowFiles from it (output port). We refer to input and output ports added at the top level as remote input or output ports. While the same input and output icons in the UI are used to add both remote and embedded ports, you will notice that they are rendered differently when added to the canvas.

If your NiFi has been configured to be secure (HTTPS) using server certificates, the remote input/output port’s configuration window will have an “Access Control” tab where you must authorize which remote NiFi systems are allowed to see and access these ports. If NiFi is not running securely, all remote ports are exposed and accessible by any other NiFi instance.

In a single instance, you can send data to an input port inside a process group by dragging a connection to the process group and selecting the name of the input port from the selection menu provided. Provided that the remote NiFi instance has input ports exposed to your NiFi instance, you can drag a connection to the RPG in much the same way you previously dragged a connection to the embedded process groups within a single instance of NiFi. You can also hover over the RPG and drag a connection off of it, which will allow you to pull data from an available output port on the target NiFi.

The source NiFi (standalone or cluster) can have as many RPGs as a DFM would like. You can have multiple RPGs in different areas of your dataflows that all connect to the same remote instance. The target NiFi, in turn, contains the input and output ports. (Only input and output ports added to the root-level process group can be used for Site-to-Site FlowFile transfers.)

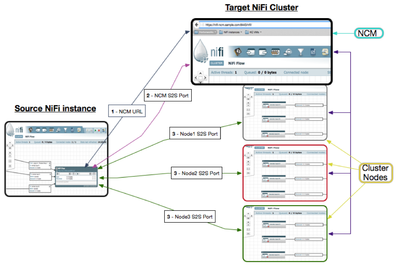

When sending data between two standalone NiFi instances, the setup of your RPG is fairly straightforward. When adding the RPG, simply provide the URL of the target instance. The source RPG will communicate with that URL to get the Site-to-Site port to use for FlowFile transfer.

When sending FlowFiles via Site-to-Site to a NiFi cluster, we want the data going to every node in the cluster. The Site-to-Site protocol handles this for you, with some additional load-balancing benefits built in. The RPG is added and configured to point at the URL of the NCM (NiFi Cluster Manager). (1) The NCM will respond with the Site-to-Site port for the NCM. (2) The source will connect to the Site-to-Site port of the NCM, which will respond to the source NiFi with the URLs, Site-to-Site port numbers, and current loads of every connected node. (3) The source NiFi will then load-balance FlowFile delivery to each of those nodes, giving fewer FlowFiles to nodes that are under heavier load. The following diagram illustrates these three steps:

A DFM may choose to use Site-to-Site to redistribute data arriving on a single node in a cluster to every node in that same cluster by adding an RPG that points back at the NCM for that cluster. In this case the source NiFi instance is also one of the target NiFi instances.
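As a rough illustration of the load-aware delivery in step (3) of the cluster exchange, the Python sketch below splits a batch of FlowFiles across nodes so that more heavily loaded nodes receive fewer. The article does not state the weighting NiFi actually uses, so the inverse-load formula, the `distribute` function, and the node names are all assumptions made for this example.

```python
def distribute(flowfile_count, node_loads):
    """Split `flowfile_count` FlowFiles across nodes, favouring lighter load.

    `node_loads` maps node name -> current load (for example, queued FlowFiles).
    The inverse-load weighting is an illustrative assumption, not NiFi's formula.
    """
    # Weight each node by 1 / (load + 1) so that an idle node has a finite weight
    weights = {node: 1.0 / (load + 1) for node, load in node_loads.items()}
    total = sum(weights.values())

    # Whole-number share per node, rounded down
    shares = {node: int(flowfile_count * w / total) for node, w in weights.items()}

    # Hand any leftover FlowFiles to the most lightly loaded nodes first
    remainder = flowfile_count - sum(shares.values())
    for node in sorted(node_loads, key=node_loads.get)[:remainder]:
        shares[node] += 1
    return shares


# Hypothetical loads reported for three connected nodes
loads = {"node1": 0, "node2": 1, "node3": 3}
shares = distribute(100, loads)
print(shares)
```

Every FlowFile is assigned to exactly one node, and the ordering of the shares follows the inverse of the reported loads. Pointing the RPG back at the same cluster, as described above, would simply mean the sending node also appears in `node_loads`.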

Created on 08-01-2018 03:51 PM

Hi Matt,

Thanks for this nice article. I experimented a little with RPGs and noticed something. Although you can have multiple RPGs in different areas of your dataflows that all connect to the same remote instance, and the source NiFi can send its FFs to the multiple "copies" of the RPG, it will not receive the corresponding FFs from the RPG it sent them to. Instead, the FFs will all be sent out to the same calling point (I guess it is the first one set on the canvas). Is that expected behavior? If so, doesn't it limit reuse of RPGs substantially?

Created on 08-01-2018 04:54 PM

I am not sure I am following your comments completely. Keep in mind that this article was written against Apache NiFi 0.x versions. The look of the UI and some of the configuration/capabilities relevant to RPGs have changed as of Apache NiFi 1.x.

When you say "source NiFi", are you referring to the NiFi instance with the RPG or the NiFi instance with an input or output port?

Keep in mind the following:

1. The NiFi with the RPG on the canvas is always acting as the client. It will establish the connection to the target instance/cluster.

2. An RPG added to the canvas of a NiFi cluster is running on every node in that cluster with no regard for any other node in the cluster.

3. An RPG regularly connects to the target NiFi cluster to retrieve S2S details, which include the number of nodes, load on nodes, available remote input/output ports, etc. (Even if the URL provided in the RPG is that of a single node in the target cluster, the details collected will be for all nodes in the target cluster.)

4. A node distribution strategy is calculated based on the details collected.

During the actual sending of FlowFiles to a remote input port on a target NiFi instance/cluster, the number of FlowFiles sent is based on the port properties configured in the RPG. So it may be that those settings are still at their defaults, in which case FlowFiles are not load-balanced very well.

During the actual retrieving of FlowFiles from a remote output port on a target NiFi instance/cluster, the RPG will round-robin through the nodes in the target NiFi, pulling FlowFiles from the remote output port based on the port configuration properties in the RPG. So it may be that one source node has an RPG that runs before the others, connects, and is allocated all FlowFiles on the target remote output port before any other node in the source NiFi cluster runs. There are some limitations in load-balancing using such a get/pull setup.

For more info on configuring your remote ports via the RPG, see the following article:

*** above article is based off Apache NiFi 1.2+ versions of RPG.

Thanks,

Matt

Created on 08-01-2018 06:47 PM

Maybe I can simplify my point by seeing RPGs just as functions with inputs and outputs, or servers with requests and responses. If I call the same function in various places, I expect to receive the output matching my input each time. But with RPGs, I copied the same one twice on my canvas and called the copies with different flow files, and all responses came out of the same one. Maybe RPGs are not meant for that and the analogy with a function does not hold.

Romain

Created on 01-20-2022 11:57 AM - edited 01-20-2022 12:00 PM

Hello,

Is it still the best practice to create, say, 100 process groups for 100 dataflow/ETL pipelines, each of which has multiple processors? Won't 100 process groups be difficult to see on a single canvas? Or is there a better way to easily see and search the 100 ETL pipelines, using some filter like name, user ID, date, etc. to narrow down the list?

Created on 01-24-2022 06:54 AM

@ebeb

You can give each of your process groups a unique name that identifies the ETL pipeline it contains.

Then you can use the Search box in the upper right corner of the UI to search for specific ETL process groups. You are correct that having 100 PGs on a single canvas, if zoomed out far enough to see them all, would result in PG boxes rendered without visible names on them. So if the PGs were not given names that make them easy to search for, the only option would be to zoom in and pan around looking for the PG you want to access.

There is no way to enter some search which would hide some rendered items and highlight others. All items are always visible, so unique naming when dealing with large dataflows is very important from a usability perspective. Every component when created is assigned a Unique ID (UUID) which can be used to search on as well.

If each of your pipelines does the same thing, but on different data, creating a separate dataflow for each would not be the most efficient use of resources. Maybe better to tag your data accordingly and share pipeline processing where it makes sense.

Created on 01-24-2022 08:33 AM

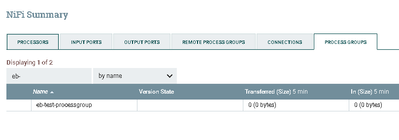

Thanks @MattWho, I actually found a way to filter/search process groups by name using the Summary option in the top right menu. This is very useful for finding all the ETL pipelines: once we give them proper names, entering part of a name shows all matching Process Groups. Being a newbie, I am trying to compare the StreamSets UI to the NiFi UI so I can work the same way. StreamSets provides an initial list of all ETL pipelines that can be filtered by name etc. I guess if, just after NiFi login, we saw two links, Summary and Canvas, then users could intuitively click on the Summary screen, review all their Process Groups, and click on the specific PG they want to work with. This would make it similar to other ETL tools like StreamSets.