Community Articles

Parsing Any Document with Apache NiFi 1.5 with Apa...

Created on 01-18-2018 12:43 AM - edited 08-17-2019 09:22 AM

I have just started working on updated Apache Tika and Apache OpenNLP processors for Apache NiFi 1.5, and while testing them I found an interesting workflow I would like to share.

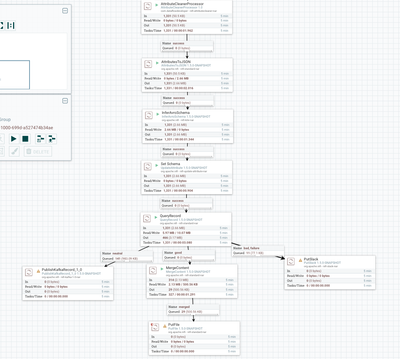

I am using a few of my processors in this flow:

- https://github.com/tspannhw/nifi-attributecleaner-processor <- Just updated this

- https://github.com/tspannhw/nifi-nlp-processor

- https://github.com/tspannhw/nifi-extracttext-processor <- this needs a major version refresh

- https://github.com/tspannhw/nifi-corenlp-processor

Here is the flow that I was working on.

Step 1 - Load some PDFs

Step 2 - Use the built-in Apache Tika processor to extract metadata from the files

Step 3 - Pull out the text using my Apache Tika processor

Step 4 - Split this into individual lines

Step 5 - Extract the text of the line into an attribute named sentence, using the regex `(^.*$)`

Step 6 - Run NLP on that sentence to find names and locations

Step 7 - Run Stanford CoreNLP sentiment analysis on the sentence

Step 8 - I run my attribute cleaner to give those attributes Avro-safe names

Step 9 - I turn all the attributes into a JSON flow file

Step 10 - I infer an Avro schema (I only needed this once; after that I'll remove this step)

Step 11 - I set the name of the schema to be looked up in the Schema Registry

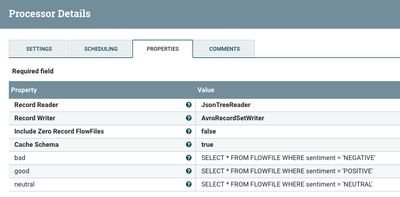

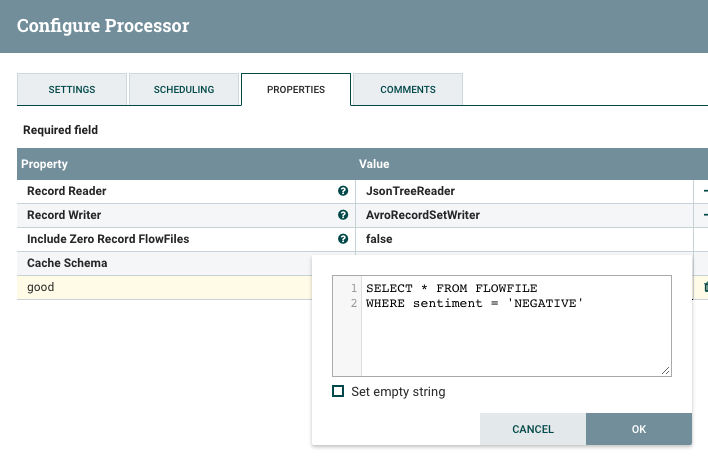

Step 12 - I run QueryRecord to route POSITIVE, NEUTRAL and NEGATIVE sentiment to different places. Example SQL: `SELECT * FROM FLOWFILE WHERE sentiment = 'NEGATIVE'`. Thanks, Apache Calcite! We also convert the records from JSON to Avro, both for sending to Kafka and for easy conversion to Apache ORC for use with Apache Hive.

Steps 13-15 - I send records to Kafka 1.0; some get merged and stored as a file, and some get turned into Slack messages.

Step 16 - Done
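Steps 4 and 5 can be tried outside NiFi to check the regular expression before wiring it into the flow. The Python below is a minimal sketch, not the processors themselves: `split_lines` stands in for the line split (dropping blank lines is an assumption of this sketch), and `extract_sentence` applies the same `(^.*$)` pattern and stores the capture in an attribute assumed to be named `sentence`.

```python
import re

# Same pattern as Step 5: one capture group holding the whole line.
LINE_PATTERN = re.compile(r"(^.*$)")


def split_lines(text):
    """Step 4 (sketch): split extracted text into lines, dropping blank ones."""
    return [line for line in text.splitlines() if line.strip()]


def extract_sentence(line):
    """Step 5 (sketch): copy the first capture group into a 'sentence' attribute.

    'sentence' is an assumed attribute name; returns {} if the pattern fails.
    """
    match = LINE_PATTERN.search(line)
    return {"sentence": match.group(1)} if match else {}


if __name__ == "__main__":
    text = "Apache NiFi moves data.\n\nApache Tika extracts text from PDFs.\n"
    for line in split_lines(text):
        print(extract_sentence(line))
```

Because each split flow file holds a single line, the anchors `^` and `$` simply bracket the whole line, so the capture group is the full sentence.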

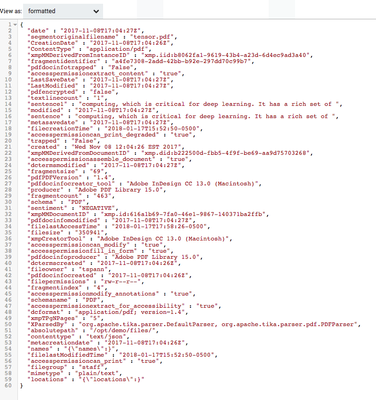

Here is an example of my generated JSON file.

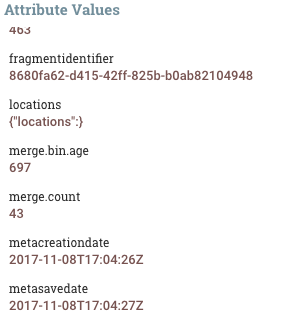
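Steps 8 and 9 boil down to renaming attributes so they are legal Avro names and then writing them out as one flat JSON object. The sketch below is not the attribute cleaner's actual code; it only applies the Avro naming rule (a name starts with `[A-Za-z_]` and continues with `[A-Za-z0-9_]`). The helper names `avro_safe_name` and `attributes_to_json` and the sample attribute names are hypothetical.

```python
import json
import re

# Avro names: first char in [A-Za-z_], remaining chars in [A-Za-z0-9_].
_INVALID = re.compile(r"[^A-Za-z0-9_]")


def avro_safe_name(name):
    """Replace disallowed characters with '_' and prefix '_' if the first char is bad."""
    cleaned = _INVALID.sub("_", name)
    if not cleaned or not (cleaned[0].isalpha() or cleaned[0] == "_"):
        cleaned = "_" + cleaned
    return cleaned


def attributes_to_json(attributes):
    """Step 9 (sketch): one flat JSON object built from the cleaned attribute map."""
    cleaned = {avro_safe_name(key): value for key, value in attributes.items()}
    return json.dumps(cleaned, sort_keys=True)


if __name__ == "__main__":
    # Hypothetical attributes, loosely shaped like the ones in this flow.
    attrs = {"nlp.names": "Tim", "pdf:title": "Report", "sentiment": "POSITIVE"}
    print(attributes_to_json(attrs))
```

Two different attributes (for example `nlp.names` and `nlp:names`) can collapse to the same cleaned name; in this sketch the later one silently wins, so a real cleaner may want to detect that case.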

Here are some of the attributes after the run.

You can see the queries in the QueryRecord processor.

The results of a run, showing a sentence, file metadata and sentiment.

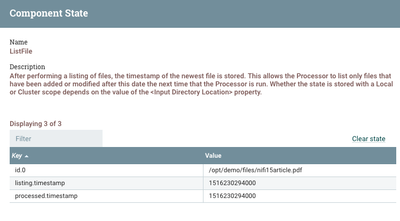
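To see what the QueryRecord routing in Step 12 does, the sketch below runs the same style of SQL against an in-memory table called `FLOWFILE`. It swaps in Python's built-in SQLite for Apache Calcite, so it only illustrates the idea of one query per route; the sample records and the route names `positive`, `neutral` and `negative` are hypothetical.

```python
import sqlite3

# Hypothetical records shaped like the JSON produced in Step 9.
RECORDS = [
    {"sentence": "I love this product.", "sentiment": "POSITIVE"},
    {"sentence": "The invoice is attached.", "sentiment": "NEUTRAL"},
    {"sentence": "The delivery was late again.", "sentiment": "NEGATIVE"},
]

# One SQL query per outbound route (hypothetical route names).
ROUTES = {
    "positive": "SELECT * FROM FLOWFILE WHERE sentiment = 'POSITIVE'",
    "neutral": "SELECT * FROM FLOWFILE WHERE sentiment = 'NEUTRAL'",
    "negative": "SELECT * FROM FLOWFILE WHERE sentiment = 'NEGATIVE'",
}


def route(records, routes):
    """Load records into an in-memory FLOWFILE table and run each route's query."""
    conn = sqlite3.connect(":memory:")
    conn.row_factory = sqlite3.Row
    conn.execute("CREATE TABLE FLOWFILE (sentence TEXT, sentiment TEXT)")
    conn.executemany(
        "INSERT INTO FLOWFILE (sentence, sentiment) VALUES (:sentence, :sentiment)",
        records,
    )
    results = {name: [dict(row) for row in conn.execute(sql)] for name, sql in routes.items()}
    conn.close()
    return results


if __name__ == "__main__":
    for name, rows in route(RECORDS, ROUTES).items():
        print(name, rows)
```

Each record ends up in exactly one route here because the three sentiment values are mutually exclusive; with overlapping queries a record would be copied to every route it matches.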

We are now waiting for new PDFs (and other file types) to arrive in the directory for immediate processing.

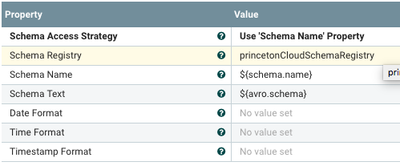

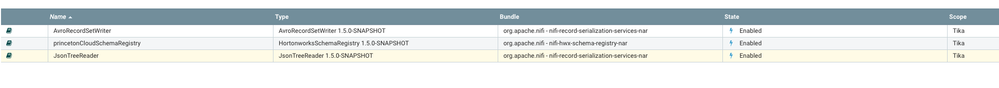

I have a JsonTreeReader, a Hortonworks Schema Registry and an AvroRecordSetWriter.

We set the properties and the schema registry for the reader and writer. Of course, other readers and writers can be used as needed for formats like CSV.

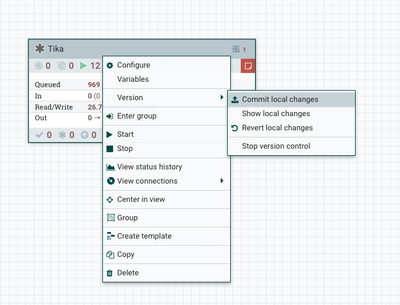
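Steps 10 and 11 infer a schema once and then look it up by name. The sketch below assumes every field is a nullable string (reasonable for JSON built from flow file attributes) and uses a plain dictionary as a hypothetical stand-in for the Hortonworks Schema Registry; `infer_avro_schema`, `register`, `lookup` and the schema name `nlp_sentiment` are illustrative names, not NiFi or registry APIs.

```python
import json


def infer_avro_schema(record, name):
    """Build a flat Avro record schema, treating every field as a nullable string."""
    fields = [
        {"name": key, "type": ["null", "string"], "default": None}
        for key in sorted(record)
    ]
    return {"type": "record", "name": name, "fields": fields}


# Hypothetical stand-in for a schema registry: schema JSON text keyed by name.
REGISTRY = {}


def register(schema):
    """Store the schema as JSON text under its record name."""
    REGISTRY[schema["name"]] = json.dumps(schema)


def lookup(schema_name):
    """Fetch a schema by name, as a reader or writer would after Step 11."""
    return json.loads(REGISTRY[schema_name])


if __name__ == "__main__":
    sample = {"sentence": "The delivery was late again.", "sentiment": "NEGATIVE"}
    register(infer_avro_schema(sample, "nlp_sentiment"))
    print(json.dumps(lookup("nlp_sentiment"), indent=2))
```

Putting `"null"` first in each union is what makes `"default": null` valid in Avro, so records missing a field can still be written.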

When I am done, since this is Apache NiFi 1.5, I commit my changes to the NiFi Registry for versioning.

Bam!

For the upcoming processor, I will be interfacing with:

- https://wiki.apache.org/tika/TikaOCR

- https://wiki.apache.org/tika/TikaAndNER

- https://wiki.apache.org/tika/TikaAndNLTK

- https://wiki.apache.org/tika/GrobidQuantitiesParser

- https://wiki.apache.org/tika/TikaAndMITIE

- https://wiki.apache.org/tika/AgeDetectionParser

- https://wiki.apache.org/tika/TikaAndVision

- https://wiki.apache.org/tika/TikaAndVisionDL4J

- https://wiki.apache.org/tika/ImageCaption

Apache Tika has added some really cool updates, so I can't wait to dive in.

Created on 03-12-2018 02:19 AM

Created on 01-28-2020 08:48 AM

Hi, when uploading the XML project I get the following error:

`com.dataflowdeveloper.processors.process.CoreNLPProcessor is not known to this NiFi instance.`

Could you please help?

Karen

Created on 01-30-2020 01:46 PM

That is a custom processor I wrote. You need to install it in NiFi's lib directory and restart NiFi.

Download the NAR from here: https://github.com/tspannhw/nifi-corenlp-processor/releases

Created on 01-30-2020 02:54 PM

Thank you. This worked!