Created on 08-19-2016 12:19 AM - edited 08-17-2019 10:44 AM

Use Case: Process a Media Feed, Store Everything, Run Sentiment Analysis on the Stream, and Act on a Condition

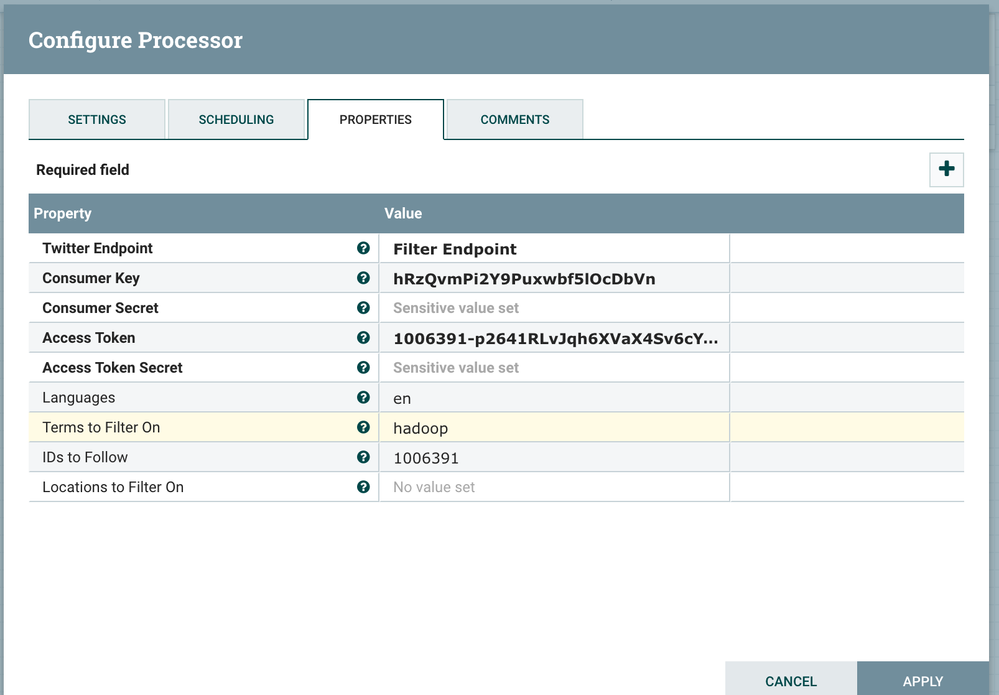

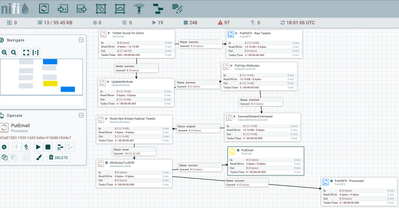

My GetTwitter processor filters on my Twitter handle and the keyword Hadoop, which I tend to tweet about frequently.

I use an EvaluateJsonPath processor to pull out all the attributes I want (msg, user name, geo information, etc.). I use an AttributesToJSON processor to build a new, smaller JSON file from just those attributes. I also store the raw JSON data in HDFS, in a separate directory.
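As a rough illustration of what the EvaluateJsonPath and AttributesToJSON pair does, here is a minimal Python sketch that pulls a few fields out of a raw tweet and emits a flat, smaller JSON document. The `FIELD_PATHS` mapping, `dig`, and `slim_tweet` are hypothetical names for this example. The output keys follow the column names used later in this article, while the paths into the raw tweet are assumptions about the Twitter status format, not the exact JsonPath expressions from my flow.

```python
import json

# Hypothetical mapping from output attribute name to a path in the raw tweet.
# The paths are assumptions about the Twitter status format.
FIELD_PATHS = {
    "msg": ("text",),
    "user_name": ("user", "name"),
    "handle": ("user", "screen_name"),
    "followers_count": ("user", "followers_count"),
    "location": ("user", "location"),
    "time": ("created_at",),
    "tweet_id": ("id_str",),
    "language": ("lang",),
}


def dig(doc, path):
    """Follow a sequence of keys into nested dicts; return None if a key is missing."""
    for key in path:
        if not isinstance(doc, dict) or key not in doc:
            return None
        doc = doc[key]
    return doc


def slim_tweet(raw_json):
    """Return a flat JSON string that holds only the selected fields, all as strings."""
    tweet = json.loads(raw_json)
    flat = {}
    for name, path in FIELD_PATHS.items():
        value = dig(tweet, path)
        # Flow file attributes are strings, so every value is stringified here.
        flat[name] = "" if value is None else str(value)
    return json.dumps(flat, sort_keys=True)
```

Because every value ends up as a string, a schema inferred from the slimmed-down files would show string columns only, which matches the Spark SQL schema shown further down.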

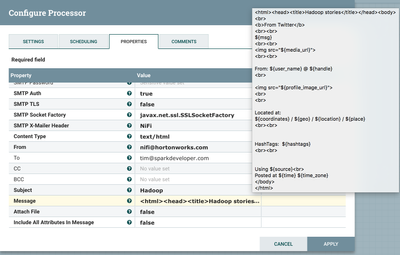

Sending HTML email is a bit tricky. Make sure the message contains nothing but raw HTML, as seen below, with no extra text, and don't enable Attach Files or Include All Attributes in Message. Make sure you set the Content Type to text/html.

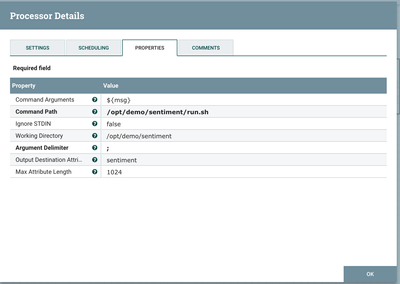
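To show why the content type and the "nothing but HTML" rule matter, here is a small Python 3 sketch that builds an equivalent message outside of NiFi with the standard library. It is only an illustration: `build_html_email` and the example.com addresses are made up for this example, and this is not how the NiFi processor is implemented.

```python
from email.message import EmailMessage


def build_html_email(sender, recipient, subject, html_body):
    """Build a single-part message whose body is raw HTML only."""
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = recipient
    msg["Subject"] = subject
    # subtype="html" produces the header Content-Type: text/html
    msg.set_content(html_body, subtype="html")
    return msg


# Placeholder addresses and body, for illustration only.
message = build_html_email(
    "nifi@example.com",
    "me@example.com",
    "Tweet about Hadoop",
    "<html><body><b>Hello</b> from the flow</body></html>",
)
```

A message like this could be handed to `smtplib.SMTP(...).send_message(message)`. With no attachments and no extra text parts, the mail client has a single text/html body to render.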

For sentiment analysis I wanted something easy to run, so I use an ExecuteStreamCommand processor to run a Python 2.7 script that uses the NLTK VADER SentimentIntensityAnalyzer. The NiFi part is easy: the processor just calls a shell script. The hard part is setting up Python and NLTK on the HDP 2.4 Sandbox. NLTK with the full set of text corpora for proper analysis is almost 10 gigabytes of data.

If you don't have Python 2.7 or Python 3.4 installed on your box (my VM had Python 2.6), you need to install Python 2.7 while keeping the existing Python 2.6 for existing applications. This is a bit tricky, so I have detailed the steps so that you can install and run this great ML tool.

Directions on how to install Python 2.7 on CentOS 6.x can be found here. More details can be found here.

    sudo yum install -y centos-release-SCL
    sudo yum install -y python27
    sudo yum groupinstall "Development tools" -y
    sudo yum install zlib-devel -y
    sudo yum install bzip2-devel -y
    sudo yum install openssl-devel -y
    sudo yum install ncurses-devel -y
    sudo yum install sqlite-devel -y
    cd /opt
    sudo wget --no-check-certificate https://www.python.org/ftp/python/2.7.6/Python-2.7.6.tar.xz
    sudo tar xf Python-2.7.6.tar.xz
    cd Python-2.7.6
    sudo ./configure --prefix=/usr/local
    sudo make && sudo make altinstall

    # Now we can use: /usr/local/bin/python2.7
    # Download get-pip.py first, then run it with the new interpreter
    wget https://bootstrap.pypa.io/get-pip.py
    /usr/local/bin/python2.7 get-pip.py
    sudo /usr/local/bin/pip2.7 install -U nltk
    sudo /usr/local/bin/pip2.7 install -U numpy
    # Downloads all NLTK data (almost 10 gig of data)
    sudo /usr/local/bin/python2.7 -m nltk.downloader -d /usr/local/share/nltk_data all
    sudo /usr/local/bin/pip2.7 install vaderSentiment
    sudo /usr/local/bin/pip2.7 install twython

The run.sh script is called from ExecuteStreamCommand. I use a Bash shell script because I want to make sure Python 2.7 is used. There are other ways, but this works for me.

    /usr/local/bin/python2.7 /opt/demo/sentiment/sentiment.py "$@"

That script calls sentiment.py with parameters passed from NiFi.

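    # Score the text passed as the first command-line argument with NLTK VADER
    from nltk.sentiment.vader import SentimentIntensityAnalyzer
    import sys

    sid = SentimentIntensityAnalyzer()
    # polarity_scores returns a dict with the keys compound, neg, neu, and pos
    ss = sid.polarity_scores(sys.argv[1])
    # Print a single line on standard output for NiFi to capture
    print('Compound {0} Negative {1} Neutral {2} Positive {3} '.format(ss['compound'],ss['neg'],ss['neu'],ss['pos']))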
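To act on a condition downstream, the single line printed by sentiment.py has to be turned back into numbers. The sketch below parses that line and maps the compound score to a label. `parse_scores` and `classify` are hypothetical helpers, not part of my flow, and the 0.05 cutoff is an assumption based on the threshold commonly suggested for VADER's compound score, so adjust it to your needs.

```python
def parse_scores(line):
    """Parse 'Compound c Negative n Neutral u Positive p' into a dict of floats."""
    tokens = line.split()
    labels = tokens[0::2]   # Compound, Negative, Neutral, Positive
    values = tokens[1::2]   # the number that follows each label
    return {label.lower(): float(value) for label, value in zip(labels, values)}


def classify(compound, threshold=0.05):
    """Label a compound score; the threshold is an assumed, adjustable cutoff."""
    if compound >= threshold:
        return "positive"
    if compound <= -threshold:
        return "negative"
    return "neutral"


# Example line in the same format that sentiment.py prints.
scores = parse_scores("Compound 0.6369 Negative 0.0 Neutral 0.323 Positive 0.677 ")
label = classify(scores["compound"])
```

In a flow, the same kind of check could decide whether a tweet is routed to the email branch or simply stored.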

Once the data is in Hadoop, I also ran a Scala Spark 1.6 batch job with Spark SQL to run Stanford CoreNLP sentiment analysis on it. I also tried running the same analysis as a Scala Spark 1.6 Spark Streaming job that received the data from Kafka (it could also receive from NiFi Site-to-Site). Another option is to write a processor in Java or Scala that runs the analysis as part of the flow.

With Apache NiFi you have a lot of options depending on your needs, and all of them get the features and benefits that Apache NiFi provides.

Now we have a bunch of data, both raw and slimmed down. Hooray! A select portion was converted to HTML and emailed out. Note that I have used Gmail and Outlook.com/Hotmail to send, but they tend to shut you down after a while over spam concerns. I use my own mail server (Dataflowdeveloper.com) since I have full control. You can use your corporate server as long as you have an SMTP login and permissions. You may need to check with your administrators about firewalls, ports, and other security precautions.

What to do with an HDFS directory full of same schema JSON files from Twitter?

I also used a Spark batch job to produce an ORC Hive table with an extra column for Stanford sentiment. You can quickly run queries on it via beeline, DbVisualizer, or the Ambari Hive View.

    beeline
    !connect jdbc:hive2://localhost:10000/default;
    !set showHeader true;
    set hive.vectorized.execution.enabled=true;
    set hive.execution.engine=tez;
    set hive.vectorized.execution.reduce.enabled=true;
    set hive.compute.query.using.stats=true;
    set hive.cbo.enable=true;
    set hive.stats.fetch.column.stats=true;
    set hive.stats.fetch.partition.stats=true;
    show tables;
    describe sparktwitterorc;
    analyze table sparktwitterorc compute statistics;
    analyze table sparktwitterorc compute statistics for columns;

I compute statistics once to improve query performance. That table is now ready for high-speed queries.

You can also run a fast Hive query from the command-line:

    beeline -u jdbc:hive2://localhost:10000/default -e "SELECT * FROM rawtwitter where sentiment is not null and time like 'Thu Aug 18%' and lower(msg) like '%hadoop%' LIMIT 100;"
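If you want to sanity-check a handful of the JSON files locally before querying Hive, the same filter can be sketched in plain Python. This assumes one JSON object per line and the field names used in this article. `matches` and `filter_tweets` are hypothetical helpers for illustration, not part of the flow.

```python
import json


def matches(record):
    """Mirror the WHERE clause: sentiment is set, time has the prefix, msg mentions hadoop."""
    return (
        record.get("sentiment") is not None
        and (record.get("time") or "").startswith("Thu Aug 18")
        and "hadoop" in (record.get("msg") or "").lower()
    )


def filter_tweets(lines, limit=100):
    """Return up to `limit` matching records from an iterable of JSON lines."""
    selected = []
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        record = json.loads(line)
        if matches(record):
            selected.append(record)
            if len(selected) >= limit:
                break
    return selected
```

For example, `filter_tweets(open("tweets.json"))` would return the first 100 matches from a local file, where tweets.json is a placeholder file name.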

Spark SQL says my data looks like:

    |-- coordinates: string (nullable = true)
    |-- followers_count: string (nullable = true)
    |-- friends_count: string (nullable = true)
    |-- geo: string (nullable = true)
    |-- handle: string (nullable = true)
    |-- hashtags: string (nullable = true)
    |-- language: string (nullable = true)
    |-- location: string (nullable = true)
    |-- msg: string (nullable = true)
    |-- place: string (nullable = true)
    |-- profile_image_url: string (nullable = true)
    |-- retweet_count: string (nullable = true)
    |-- sentiment: string (nullable = true)
    |-- source: string (nullable = true)
    |-- tag: string (nullable = true)
    |-- time: string (nullable = true)
    |-- time_zone: string (nullable = true)
    |-- tweet_id: string (nullable = true)
    |-- unixtime: string (nullable = true)
    |-- user_name: string (nullable = true)

My Hive Table on this directory of tweets looks like:

    create table rawtwitter(
      handle string,
      hashtags string,
      msg string,
      language string,
      time string,
      tweet_id string,
      unixtime string,
      user_name string,
      geo string,
      coordinates string,
      location string,
      time_zone string,
      retweet_count string,
      followers_count string,
      friends_count string,
      place string,
      source string,
      profile_image_url string,
      tag string,
      sentiment string,
      stanfordSentiment string
    )
    ROW FORMAT SERDE 'org.apache.hive.hcatalog.data.JsonSerDe'
    LOCATION '/social/twitter';

Now you can create charts and graphs from your social data.