RAPIDS.ai on Spark 3 in Cloudera CDP-PvC Base


Cloudera Employee

Created on 11-15-2020 05:19 PM, edited on 11-22-2020 10:03 PM by VidyaSargur

RAPIDS.ai is a set of Nvidia libraries designed to help data scientists and engineers leverage Nvidia GPUs to accelerate all the steps in the data science process, from data exploration through to model development. Recently, with the inclusion of GPU-aware scheduling in Spark 3, RAPIDS has released a set of plugins designed to accelerate Spark DataFrame operations with GPU accelerators.

This article gives an introduction to setting up RAPIDS.ai on Cloudera's CDP platform.

Prerequisites

Before starting, ensure that you have the following:

- Cloudera CDP Private Cloud Base 7.1.3+

- Cloudera CDS 3

- CentOS 7

- EPEL repository already added and installed

- Kernel 3.10 (the deployment script has only been tested on this version)

  - To check, run `uname -r`

- Full administrator and sudo rights
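Before going further, a quick pre-flight check on each node can catch an unsupported OS or kernel early. The sketch below is a minimal example, assuming the standard `/etc/os-release` file that CentOS 7 ships with; the helper names `kernel_is_tested` and `os_is_centos7` are hypothetical and not part of the cdp_rapids scripts.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight helpers for the prerequisites above.

# Succeeds when the kernel release (e.g. from `uname -r`) is a 3.10.x kernel,
# the only version the deployment script has been tested on.
kernel_is_tested() {
  case "$1" in
    3.10.*) return 0 ;;
    *) return 1 ;;
  esac
}

# Succeeds when the os-release file (default /etc/os-release) describes CentOS 7.
os_is_centos7() {
  local file=${1:-/etc/os-release}
  [[ -r "$file" ]] || return 1
  # Source the file in a subshell so ID/VERSION_ID do not leak into this shell.
  ( . "$file" && [[ "${ID:-}" == "centos" && "${VERSION_ID:-}" == "7" ]] )
}

# Example usage on each node:
#   kernel_is_tested "$(uname -r)" || echo "Untested kernel: $(uname -r)"
#   os_is_centos7 || echo "This node is not running CentOS 7"
```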

Nvidia Drivers

First, to support GPUs at the OS layer, we need to install the Nvidia hardware drivers. See cdp_rapids for Ansible helper scripts for installing them: the ansible_cdp_pvc_base folder contains Ansible playbooks that install Nvidia drivers on CentOS 7. They have been tested successfully on AWS and should, in theory, work on any CentOS 7 install. Specific instructions for RHEL/CentOS 8 and Ubuntu flavours are not included here; see Nvidia's official notes or the many other articles on the web for those.

Newer Nvidia drivers are available, but for this example I have selected the `440.33.01` drivers with CUDA 10.2. At the time of writing, most data science and ML libraries are still compiled and distributed for CUDA 10.2, with CUDA 11 support in the minority. Recompiling them is a painstaking process: compiling a working TensorFlow 2.x on CUDA 11, for example, takes about 40 minutes on an AWS `g4dn.16xlarge` instance.

Note

A lot of the web tutorials for installing Nvidia drivers assume that you are running a local desktop setup. Xorg, the GNOME desktop, etc. are not needed to get a server working. The `nouveau` drivers may also not be active for VGA output in a server setup.

Common Issues

- Incorrect kernel headers or kernel development packages: be very careful with these. The specific version for your Linux distro can take a fair bit of searching to find. A default `kernel-devel` / `kernel-headers` install with yum or apt-get may also update your kernel to a newer version, after which you will have to ensure that Linux boots with the correct kernel.

- Not explicitly pinning your driver and CUDA versions: a `yum update` or `apt-get upgrade` can then automatically update everything to the latest release and break every library in your code that has only been compiled for an older CUDA version.
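Both issues can be caught after any package update with a small drift check. This is a sketch only, assuming an RPM-based system, the `440.33.01` driver chosen above and the `driver_version` field of `nvidia-smi --query-gpu`; the helper names `drivers_match_pin` and `kernel_devel_matches` are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical drift checks to run after `yum update`.

PINNED_DRIVER="440.33.01"   # driver version chosen in this article

# Succeeds when every line on stdin (one driver version per GPU) equals the pin,
# and fails if there are no lines at all.
drivers_match_pin() {
  local pin=$1 version seen=0
  while IFS= read -r version || [[ -n "$version" ]]; do
    version=${version//[[:space:]]/}
    [[ -z "$version" ]] && continue
    seen=1
    [[ "$version" == "$pin" ]] || return 1
  done
  (( seen == 1 ))
}

# Succeeds when a kernel-devel package for the given kernel release is installed.
kernel_devel_matches() {
  rpm -q "kernel-devel-$1" >/dev/null 2>&1
}

# Example usage on a GPU node:
#   nvidia-smi --query-gpu=driver_version --format=csv,noheader \
#     | drivers_match_pin "$PINNED_DRIVER" || echo "Driver drifted from $PINNED_DRIVER"
#   kernel_devel_matches "$(uname -r)" || echo "kernel-devel missing for $(uname -r)"
```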

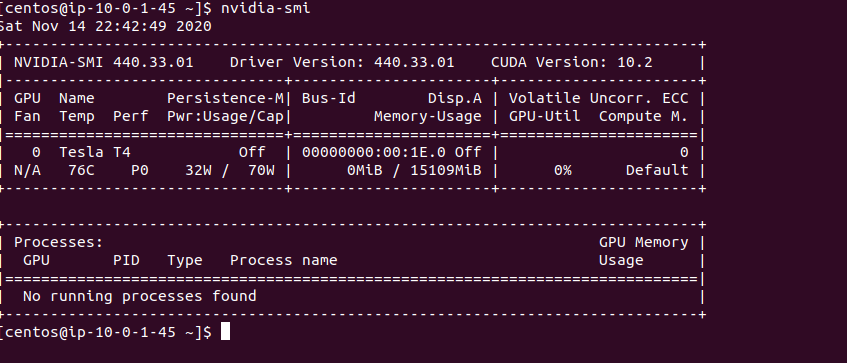

Validating Nvidia Driver Install

Before proceeding to enable GPU on YARN, check that your Nvidia drivers are installed correctly:

- Run `nvidia-smi`. It should list the GPUs on your system. If it shows nothing or returns an error, the drivers were not installed properly, and there are a few things to check.

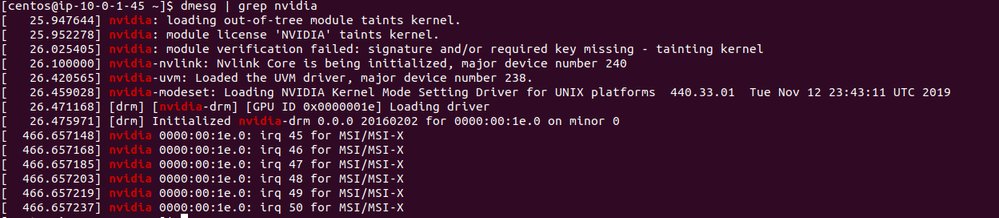

- Run `dmesg | grep nvidia` to see whether the Nvidia drivers are being started on boot.
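The checks above can be scripted so they are easy to repeat on every GPU node. A minimal sketch, assuming `nvidia-smi -L` prints one `GPU <n>: ...` line per device and that `/proc/modules` (the file `lsmod` reads) is available; the helper names `count_gpus` and `nvidia_module_loaded` are hypothetical.

```shell
#!/usr/bin/env bash
# Count GPUs in `nvidia-smi -L` output (one "GPU <n>: ..." line per device).
count_gpus() {
  grep -c '^GPU [0-9]' || true   # grep -c still prints 0 when nothing matches
}

# Succeeds when the nvidia kernel module is listed (default: /proc/modules).
nvidia_module_loaded() {
  grep -q '^nvidia ' "${1:-/proc/modules}"
}

# Example usage on a GPU node:
#   n=$(nvidia-smi -L | count_gpus)
#   [[ "$n" -gt 0 ]] || echo "No GPUs visible: check the driver install"
#   nvidia_module_loaded || sudo dmesg | grep -i nvidia
```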

Setting up RAPIDS on Spark on CDP

Now that we have the drivers installed, we can work on getting Spark 3 on YARN with GPU scheduling enabled.

Note: CDS 3 should be installed onto your cluster first. Refer to the CDS 3 docs.

Enabling GPU in YARN

With Spark 3 installed, we need to enable GPUs on YARN. If you have skimmed the Apache docs for enabling GPUs on YARN, note that these instructions differ, as Cloudera Manager manages changes to configuration files like `resource-types.xml` for you.

To schedule GPUs, we need GPU isolation, and for that we need to leverage Linux cgroups. Cgroups are Linux controls that limit the hardware resources that different applications have access to. This includes CPU, RAM, GPU, etc., and is the only way to truly guarantee the amount of resources allocated to a running application.
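Before ticking any boxes, it may be worth confirming that the cgroup v1 controllers are mounted on each NodeManager host. The sketch below assumes the CentOS 7 default layout under `/sys/fs/cgroup`; checking `cpu` and `devices` (the controller Apache Hadoop's GPU-on-YARN docs use to restrict device access) is my assumption rather than a Cloudera requirement, and `missing_cgroup_controllers` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical check: print cgroup v1 controllers missing under a cgroup root.
missing_cgroup_controllers() {
  local root=${1:-/sys/fs/cgroup} ctrl
  local missing=()
  for ctrl in cpu devices; do
    # On CentOS 7, "cpu" is usually a symlink to "cpu,cpuacct"; -d follows it.
    [[ -d "$root/$ctrl" ]] || missing+=("$ctrl")
  done
  if (( ${#missing[@]} > 0 )); then
    printf '%s\n' "${missing[@]}"
    return 1
  fi
  return 0
}

# Example usage on a NodeManager host:
#   missing_cgroup_controllers || echo "The cgroup controllers above are not mounted"
```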

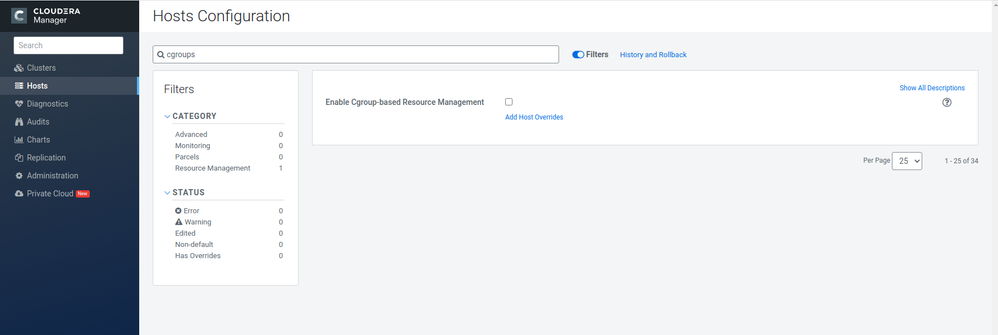

In Cloudera Manager, enabling Cgroups is a host-based setting that can be set from the Hosts >> Hosts Configuration option in the left side toolbar.

Search for Cgroups to find the tickbox.

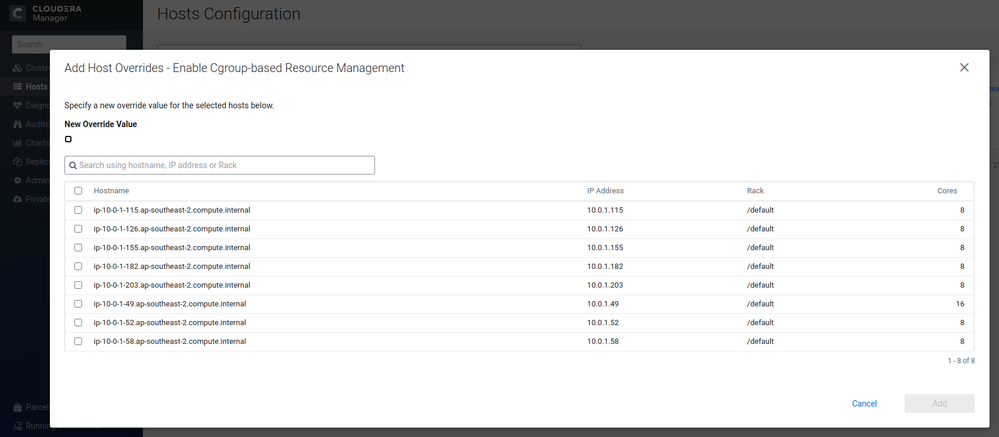

Selecting the big tickbox will enable it for all nodes, but it can also be set on a host-by-host basis through the Add Host Overrides option. For more on the cgroup settings, refer to the CDP-PvC Base 7.1.4 cgroups documentation.

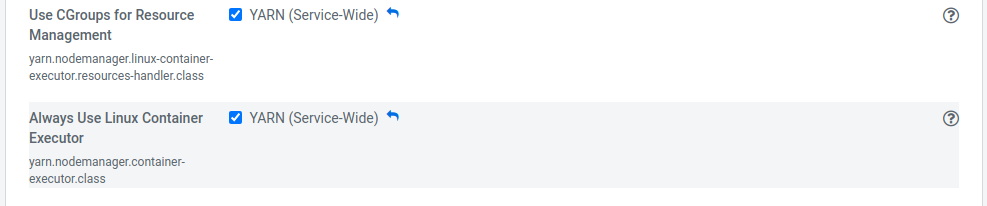

With Cgroups enabled, we now need to turn on Cgroup Scheduling under the YARN service.

- In Cloudera Manager, go to the YARN service

- Go to Resource Management, and then search for cgroup

- Tick the setting Use CGroups for Resource Management

- Turn on Always Use Linux Container Executor

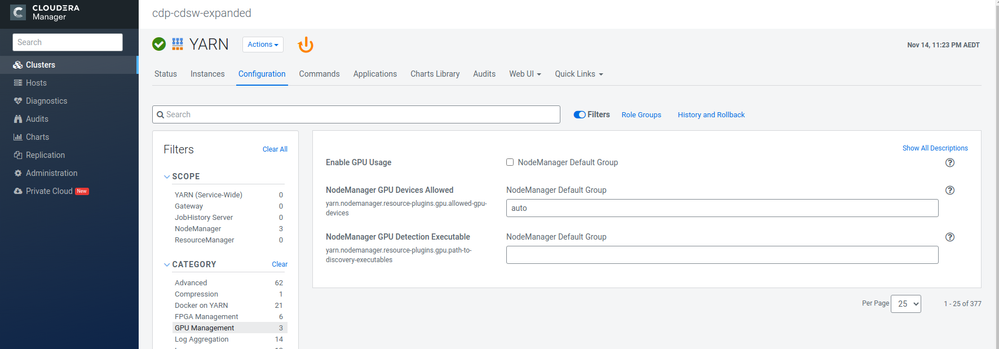

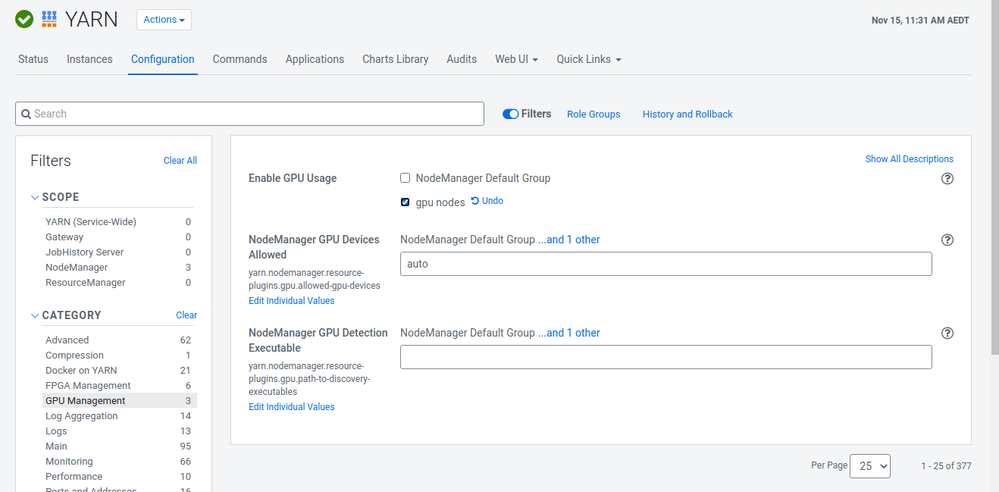
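After YARN has been restarted with these settings, one way to confirm they reached a NodeManager is to read its generated `yarn-site.xml`. The property and class names below are Apache Hadoop's; where Cloudera Manager writes that file on each host is an assumption, so the sketch takes the path as an argument. The helper names `hadoop_conf_value` and `uses_linux_container_executor` are hypothetical, and the simple text matching assumes `<value>` directly follows `<name>`.

```shell
#!/usr/bin/env bash
# Print the <value> of a property in a Hadoop *-site.xml file.
# Assumes <value> directly follows <name>, as in generated config files.
hadoop_conf_value() {
  local file=$1 name=$2
  # Join all lines so <name> and <value> can be matched in one pass.
  tr -d '\n' < "$file" |
    sed -n "s|.*<name>${name}</name>[[:space:]]*<value>\([^<]*\)</value>.*|\1|p"
}

# Succeeds when the NodeManager uses the LinuxContainerExecutor
# (what "Always Use Linux Container Executor" is expected to turn on).
uses_linux_container_executor() {
  local cls
  cls=$(hadoop_conf_value "$1" yarn.nodemanager.container-executor.class)
  [[ "$cls" == "org.apache.hadoop.yarn.server.nodemanager.LinuxContainerExecutor" ]]
}

# Example usage (the path is an assumption; point it at the NodeManager's config):
#   uses_linux_container_executor /path/to/nodemanager/yarn-site.xml && echo "LCE enabled"
```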

- Now we can enable GPUs on YARN through the Enable GPU Usage tickbox. You can find it under YARN >> Configuration, in the GPU Management category.

Before you do that, however, note that by default it enables GPUs on all the nodes, which means all your YARN NodeManagers will need GPUs. If only some of your nodes have GPUs, read on!

YARN Role Groups

As discussed, Enable GPU Usage would enable it for the whole cluster. That means all the YARN nodes would have to have GPUs, and for a large cluster that could get very expensive. There are two options if you have an expense limit on the company card.

We can add a small dedicated compute cluster of GPU nodes on the side, but then users would have to connect to two different clusters depending on whether they want to use GPUs. That can in itself be a good way to separate users who really need GPUs and can be trusted with them from users who just want to try GPUs because it sounds cool, but that is a different matter. If you wish to pursue that option, follow the instructions in Setting Up Compute Clusters. Once your dedicated compute cluster is up and running, you will have to follow the previous steps to enable cgroups and cgroup scheduling, and then turn on Enable GPU Usage in YARN.

The other option, if you want to stick with one cluster while adding a GPU node or two, is to create role groups. These allow different YARN configs on a host-by-host basis, so some hosts can have Enable GPU Usage turned on while others do not.

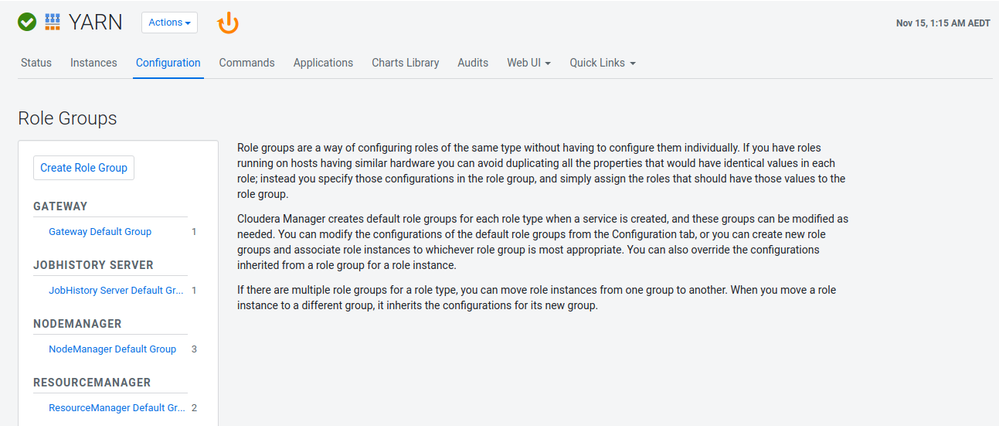

Role groups are configured at the service level. To set up a GPU role group for YARN, do the following:

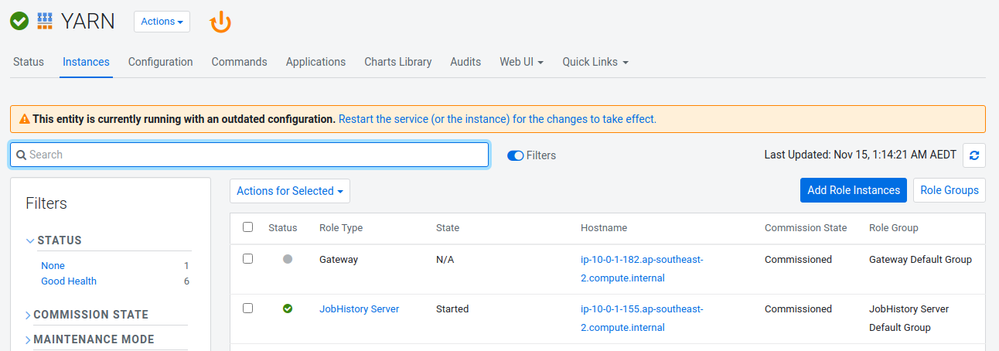

- In Cloudera Manager navigate to Yarn >> Instances. You will see the Role Groups button just above the table listing all the hosts.

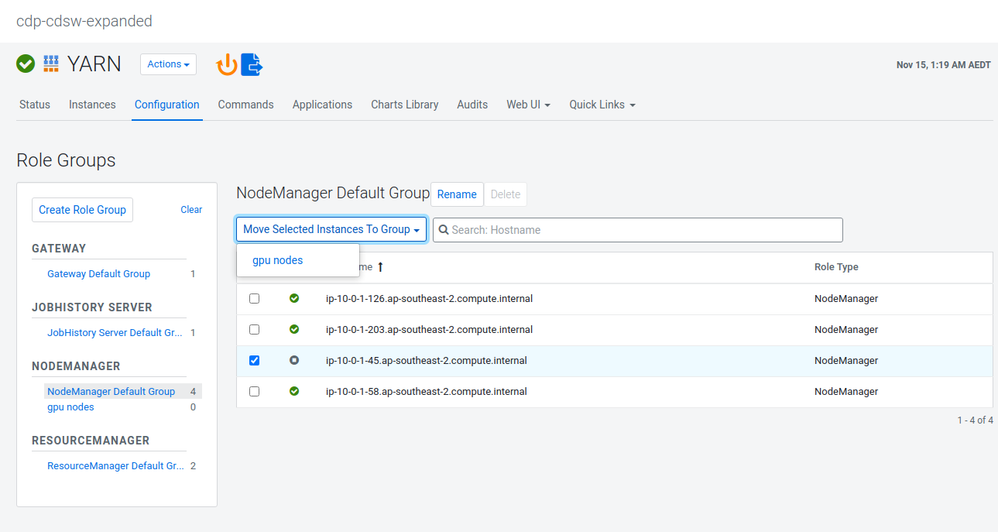

- Click Create a role group. In my case, I entered the Group Name `gpu nodes`, selected the Role Type NodeManager, and set the Copy From field to NodeManager Default Group.

- With the role group created, it is time to assign the GPU hosts to it. Select the nodes in the NodeManager Default Group that have GPUs and move them to the new `gpu nodes` group.

- With the GPU hosts assigned, we can turn on Enable GPU Usage just for the `gpu nodes` role group. For more details on role groups, refer to Configuring Role Groups. Note that you may need to restart the cluster for this to take effect.
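To confirm the role groups behave as intended, you can check each NodeManager's generated `yarn-site.xml`: hosts in the `gpu nodes` group should list `yarn.io/gpu` in Apache Hadoop's `yarn.nodemanager.resource-plugins` property, and the others should not. This is a sketch, assuming Cloudera Manager writes that property when Enable GPU Usage is on and that you know where the generated file lives on each host (the path is passed in); `hadoop_conf_value` and `gpu_plugin_enabled` are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Print the <value> of a property in a Hadoop *-site.xml file.
# Assumes <value> directly follows <name>, as in generated config files.
hadoop_conf_value() {
  local file=$1 name=$2
  tr -d '\n' < "$file" |
    sed -n "s|.*<name>${name}</name>[[:space:]]*<value>\([^<]*\)</value>.*|\1|p"
}

# Succeeds when yarn.io/gpu is one of the NodeManager's resource plugins.
gpu_plugin_enabled() {
  local plugins
  plugins=$(hadoop_conf_value "$1" yarn.nodemanager.resource-plugins)
  # Pad with commas so only a whole list entry matches.
  [[ ",${plugins// /}," == *",yarn.io/gpu,"* ]]
}

# Example usage on each NodeManager host (the path is an assumption):
#   gpu_plugin_enabled /path/to/nodemanager/yarn-site.xml && echo "GPU plugin on"
```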

Adding Rapids Libraries

To leverage Nvidia RAPIDS, YARN and the Spark executors have to be able to access the Spark RAPIDS libraries. The required jars are listed in Nvidia's RAPIDS Getting Started guide.
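If you prefer to fetch the jars yourself, the sketch below builds download URLs using the standard Maven Central layout. The coordinates (`com.nvidia:rapids-4-spark_2.12:0.2.0`, and `ai.rapids:cudf:0.15` with the `cuda10-2` classifier) are inferred from the jar names used later in this article, so check them against the Getting Started page; `maven_jar_url` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: Maven Central URL for group:artifact:version[:classifier].
maven_jar_url() {
  local group=$1 artifact=$2 version=$3 classifier=${4:-}
  local repo="https://repo1.maven.org/maven2"
  # Group dots become path separators: com.nvidia -> com/nvidia
  printf '%s/%s/%s/%s/%s-%s%s.jar\n' \
    "$repo" "${group//.//}" "$artifact" "$version" \
    "$artifact" "$version" "${classifier:+-$classifier}"
}

# Example usage (fetch once, then distribute to every YARN node):
#   curl -fLo /opt/rapids/cudf-0.15-cuda10-2.jar "$(maven_jar_url ai.rapids cudf 0.15 cuda10-2)"
#   curl -fLo /opt/rapids/rapids-4-spark_2.12-0.2.0.jar "$(maven_jar_url com.nvidia rapids-4-spark_2.12 0.2.0)"
```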

In my sample Ansible code, I have created a `/opt/rapids` folder on all the YARN nodes. Spark also requires a script to discover GPU resources (refer to Spark 3 GPU Scheduling). I have included a `getGpusResources.sh` script under `ansible_cdp_pvc_base/files/getGpusResources.sh` in the cdp_rapids repo.
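Spark runs the discovery script on each executor and expects it to print one JSON object naming the resource and its addresses. The sketch below follows the approach of the example script shipped with Apache Spark (asking `nvidia-smi --query-gpu=index` for the GPU indices) but splits the JSON formatting into a function so it can be tested without a GPU. It is not the exact file from the cdp_rapids repo, and `gpu_resources_json` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Sketch of a GPU discovery script for Spark 3 (not the exact cdp_rapids file).
# Spark expects a single JSON object on stdout, e.g.
#   {"name": "gpu", "addresses": ["0","1"]}

# Turn GPU indices (one per line on stdin) into Spark's resource JSON.
gpu_resources_json() {
  local addrs="" idx
  while IFS= read -r idx || [[ -n "$idx" ]]; do
    idx=${idx//[[:space:]]/}   # strip stray spaces or carriage returns
    [[ -z "$idx" ]] && continue
    addrs+="${addrs:+,}\"${idx}\""
  done
  printf '{"name": "gpu", "addresses": [%s]}\n' "$addrs"
}

# When executed directly (as Spark does), ask nvidia-smi for the local GPU indices.
if [[ "${BASH_SOURCE[0]}" == "$0" ]]; then
  if command -v nvidia-smi >/dev/null 2>&1; then
    nvidia-smi --query-gpu=index --format=csv,noheader | gpu_resources_json
  else
    echo "nvidia-smi not found; cannot discover GPUs" >&2
  fi
fi
```

Remember to make the file executable (`chmod +x`) on every GPU node.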

Verifying YARN GPU

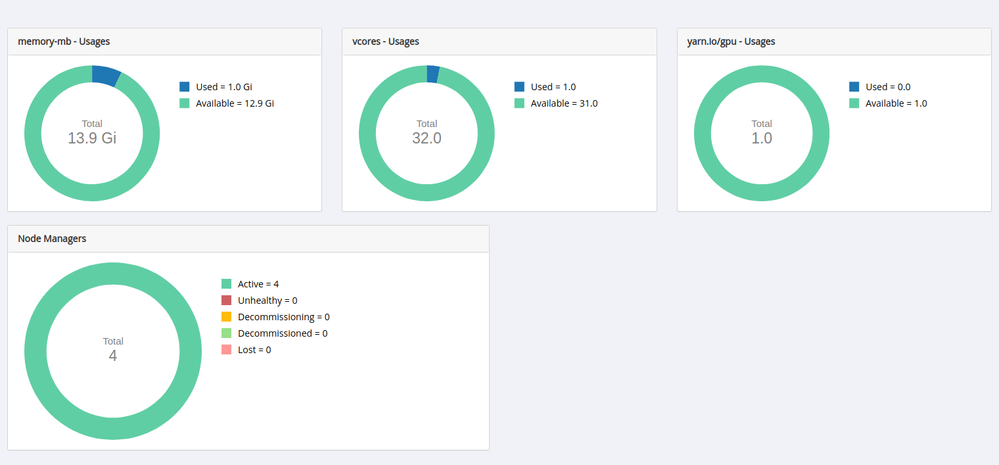

Now that everything is set up, we should be able to see yarn.io/gpu listed as one of the usage meters on the Yarn2 cluster overview screen. If you do not see this dial, something in the previous configuration has gone wrong.
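The same check can be made from a terminal. `yarn node -list -showDetails` prints each NodeManager's resources; the sketch below counts the nodes whose details mention `yarn.io/gpu`. The exact layout of that output is an assumption here (the sketch only relies on one "Configured Resources" line per node), and `count_gpu_nodemanagers` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Count NodeManagers whose "Configured Resources" line advertises yarn.io/gpu.
count_gpu_nodemanagers() {
  grep 'Configured Resources' | grep -c 'yarn.io/gpu' || true
}

# Example usage from an edge node (kinit first on a Kerberised cluster):
#   yarn node -list -showDetails | count_gpu_nodemanagers
```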

Launching Spark shell

With everything configured, we can finally launch a GPU-enabled Spark shell.

Run the following on an edge node. The commands assume that `/opt/rapids/cudf-0.15-cuda10-2.jar`, `/opt/rapids/rapids-4-spark_2.12-0.2.0.jar` and `/opt/rapids/getGpusResources.sh` exist. You will need them on each GPU node as well. You will also need different jar files depending on the CUDA version that you have installed.
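Because every GPU node needs the same three files, a quick per-node check can save a confusing executor failure later. A minimal sketch, assuming the `/opt/rapids` layout and file names above; `check_rapids_files` is a hypothetical helper, and the executable check reflects that Spark runs the discovery script directly.

```shell
#!/usr/bin/env bash
# Hypothetical check: are the RAPIDS files this article uses present on this node?
check_rapids_files() {
  local dir=${1:-/opt/rapids} status=0 f
  for f in cudf-0.15-cuda10-2.jar rapids-4-spark_2.12-0.2.0.jar getGpusResources.sh; do
    if [[ ! -f "$dir/$f" ]]; then
      echo "missing: $dir/$f"
      status=1
    fi
  done
  # Spark executes the discovery script directly, so it needs the execute bit.
  if [[ -f "$dir/getGpusResources.sh" && ! -x "$dir/getGpusResources.sh" ]]; then
    echo "not executable: $dir/getGpusResources.sh"
    status=1
  fi
  return "$status"
}

# Example usage, e.g. over ssh on each GPU node:
#   check_rapids_files /opt/rapids && echo "RAPIDS files OK"
```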

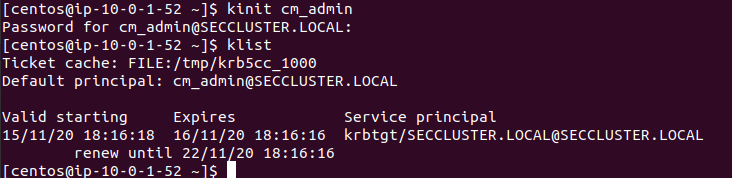

On a Kerberised cluster, you will first need to kinit as a valid Kerberos user for the following to work. You can use klist to verify that you have an active Kerberos ticket.
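If you script your session start-up, the ticket check can be automated. A minimal sketch using MIT Kerberos' `klist -s`, which prints nothing and exits non-zero when there is no valid ticket; `ensure_kerberos_ticket` is a hypothetical helper and the principal in the usage line is a placeholder.

```shell
#!/usr/bin/env bash
# Get a Kerberos ticket only when there is no valid one in the cache.
ensure_kerberos_ticket() {
  local principal=$1
  # `klist -s` is silent and exits non-zero when no valid ticket is cached.
  if klist -s; then
    return 0
  fi
  kinit "$principal"
}

# Example usage (the principal is a placeholder):
#   ensure_kerberos_ticket alice@EXAMPLE.COM && klist
```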

To start your Spark shell, run the following.

Note: Depending on your executor setup and the amount of CPU and RAM you have available, you will need to set different values for driver-cores, driver-memory, executor-cores and executor-memory.

```shell
# Locations of the RAPIDS jars and the GPU discovery script
export SPARK_RAPIDS_DIR=/opt/rapids
export SPARK_CUDF_JAR=${SPARK_RAPIDS_DIR}/cudf-0.15-cuda10-2.jar
export SPARK_RAPIDS_PLUGIN_JAR=${SPARK_RAPIDS_DIR}/rapids-4-spark_2.12-0.2.0.jar

# Launch a GPU-enabled Spark 3 shell on YARN (no blank lines between continuations)
spark3-shell \
  --master yarn \
  --deploy-mode client \
  --driver-cores 6 \
  --driver-memory 15G \
  --executor-cores 8 \
  --conf spark.executor.memory=15G \
  --conf spark.rapids.sql.concurrentGpuTasks=4 \
  --conf spark.executor.resource.gpu.amount=1 \
  --conf spark.rapids.sql.enabled=true \
  --conf spark.rapids.sql.explain=ALL \
  --conf spark.rapids.memory.pinnedPool.size=2G \
  --conf spark.kryo.registrator=com.nvidia.spark.rapids.GpuKryoRegistrator \
  --conf spark.plugins=com.nvidia.spark.SQLPlugin \
  --conf spark.rapids.shims-provider-override=com.nvidia.spark.rapids.shims.spark301.SparkShimServiceProvider \
  --conf spark.executor.resource.gpu.discoveryScript=${SPARK_RAPIDS_DIR}/getGpusResources.sh \
  --jars ${SPARK_CUDF_JAR},${SPARK_RAPIDS_PLUGIN_JAR}
```

Breaking down the Spark Submit command

Here are some brief tips to help you understand the command:


Note: This is not definitive; consult the RAPIDS.ai and Spark 3 documentation for further details.

- The GPU on the G4 instance I used has 16GB of VRAM, so I raised executor memory to match, giving the executor some data buffering capacity. Since GPUs are typically RAM-constrained, it is important to minimise the time spent bottlenecked waiting for data. There is no exact science to how much to raise executor memory; it depends on your application and how much I/O you have going to and from the GPU. Also, operations that are not GPU-friendly will be completed by the executor on CPU, so ensure that the executors are beefy enough to handle those. The same goes for executor cores: I raised them to a recommended level for pulling and pushing data from HDFS.

- `spark.rapids.sql.explain=ALL`: highlights which parts of a Spark operation can and can't be done on GPU. Examples of operations which currently can't be done on GPU include regex splitting of strings, datetime logic and some statistical operations. Overall, the fewer unsupported operations you have, the better the performance.

- `spark.rapids.shims-provider-override=com.nvidia.spark.rapids.shims.spark301.SparkShimServiceProvider`: the reality of the Spark ecosystem today is that not all Spark is equal. Each commercial distribution can be slightly different, so the "shims" layer provides a mapping to make sure that RAPIDS performs seamlessly. Currently, RAPIDS doesn't have a Cloudera shim out of the box, but Cloudera's distribution of Spark 3 closely mirrors the open-source one, so for now we need to explicitly map the open-source Spark 3.0.1 shim to the Cloudera CDS parcel. And for the observant: yes, this is version-specific, and as Spark 3 matures we will need to ensure that the shim matching the current Spark version is loaded. This will be rectified in a later RAPIDS version.

- `spark.rapids.memory.pinnedPool.size`: to optimise GPU performance, RAPIDS allows "pinning" host memory specifically to speed up transferring data to the GPU. Memory that is pinned for this purpose won't be available for other operations.
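The shim class in the override flag encodes the Spark version with the dots removed (`3.0.1` becomes `spark301`). The sketch below derives the class name from a version string, assuming that naming pattern holds for other releases and that your plugin jar actually ships a shim for that version (check the RAPIDS release notes); `shim_provider_for` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the RAPIDS shim provider class for a Spark version.
shim_provider_for() {
  local major minor patch _rest
  IFS=. read -r major minor patch _rest <<< "$1"
  # Keep only the leading digits of the patch part (e.g. "1-SNAPSHOT" -> "1").
  patch=${patch%%[!0-9]*}
  [[ "$major" =~ ^[0-9]+$ && "$minor" =~ ^[0-9]+$ && "$patch" =~ ^[0-9]+$ ]] || return 1
  printf 'com.nvidia.spark.rapids.shims.spark%s%s%s.SparkShimServiceProvider\n' \
    "$major" "$minor" "$patch"
}

# Example usage:
#   --conf spark.rapids.shims-provider-override="$(shim_provider_for 3.0.1)"
```

Extra components after the patch number (such as a distribution-specific suffix) are ignored.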

The other flags are fairly self-explanatory. It is worth noting that Spark RAPIDS SQL needs to be explicitly enabled (`spark.rapids.sql.enabled=true`). Refer to the official Nvidia RAPIDS tuning guide for more details.

Conclusion

Spark RAPIDS is a very promising technology that can greatly accelerate big data operations. Good data analysis, like other intellectual tasks, requires getting into the "flow", or "into the zone". Interruptions, like waiting 10 minutes for a query to run, can be very disruptive to this. RAPIDS is a good acceleration package to help reduce these wait times.

In its current iteration, however, it still has quite a few limitations. There is no native support for datetime and more complicated statistical operations. GPUs outside of extremely high-end models are typically heavily RAM-bound, so users need to be aware of and manage memory well. Managing GPU memory, however, is more an art than a science and requires a good understanding of GPU technology and access to specialist tools. Support for data formats is also not as extensive as in native Spark. There is a lot of promise, however, and it will be good to see where this technology goes. Happy Coding!


Comments

Cloudera Employee

Created on 01-27-2021 08:56 PM


Is it supported?

Cloudera Employee

Created on 01-27-2021 09:11 PM


Spark 3 on CDP Private Cloud Base with GPU support is fully supported. RAPIDS.ai, though, is an Nvidia product, not a Cloudera product offering.