Created on 10-12-2018 06:11 PM - edited on 03-11-2020 03:58 PM by lwang

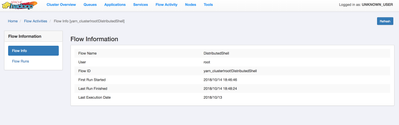

Running TensorFlow on YARN 3.1 with or without GPU

You can run with or without Docker containers. If you are not using Docker containers, you will need CUDA, TensorFlow, and all of your data science libraries installed on the nodes that run the job.

Tips from Wangda

Basically, GPU support on YARN gives you isolation of GPU devices. Say a node has 4 GPUs. The first task asks for 1 GPU (yarn.io/gpu=1), and the YARN NodeManager (NM) gives it GPU0. Then a second task asks for 2 GPUs, and the NM gives it GPU1 and GPU2. So from TensorFlow's perspective, you don't need to specify which GPUs to use: TF automatically detects and consumes whatever is available to the job. In this case, task 2 cannot see any GPUs other than GPU1 and GPU2.
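The allocation walk-through above can be sketched as a toy model. This is not YARN's allocator: the `NodeGpuPool` class and its lowest-index-first policy are assumptions made only to mirror the GPU0, then GPU1/GPU2 example in the tip.

```python
from typing import List


class NodeGpuPool:
    """Toy model of one node's GPUs; not YARN's actual allocator."""

    def __init__(self, gpu_count: int) -> None:
        # GPU indices that no task currently holds.
        self.free: List[int] = list(range(gpu_count))

    def allocate(self, requested: int) -> List[int]:
        """Hand out the lowest-numbered free GPUs, or fail if too few remain."""
        if requested > len(self.free):
            raise RuntimeError("not enough free GPUs on this node")
        granted, self.free = self.free[:requested], self.free[requested:]
        return granted


pool = NodeGpuPool(4)
task1 = pool.allocate(1)  # first task asks for yarn.io/gpu=1
task2 = pool.allocate(2)  # second task asks for yarn.io/gpu=2
print(task1, task2, pool.free)
```

Each task only ever learns about the indices it was granted, which is the isolation property the tip describes.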

If you wish to run Apache MXNet deep learning programs, see this article: https://community.hortonworks.com/articles/222242/running-apache-mxnet-deep-learning-on-yarn-31-hdp....

Installation

- Install CUDA and the NVIDIA libraries if you have NVIDIA cards.

- Install Python 3.x

- Install Docker

- Install PIP

- sudo yum groupinstall 'Development Tools' -y

- sudo yum install cmake git pkgconfig -y

- sudo yum install libpng-devel libjpeg-turbo-devel jasper-devel openexr-devel libtiff-devel libwebp-devel -y

- sudo yum install libdc1394-devel libv4l-devel gstreamer-plugins-base-devel -y

- sudo yum install gtk2-devel -y

- sudo yum install tbb-devel eigen3-devel -y

- pip3.6 install --upgrade pip

- pip3.6 install tensorflow

- pip3.6 install numpy -U

- pip3.6 install scikit-learn -U

- pip3.6 install opencv-python -U

- pip3.6 install keras

- pip3.6 install hdfs

- git clone https://github.com/tensorflow/models/
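After running the steps above, a quick check that the Python packages are visible to the interpreter can save a failed YARN launch. The following is a minimal sketch; the `missing_modules` helper and the `REQUIRED_MODULES` list are illustrative names, not part of the original setup. Note that the import names differ from the pip names for two packages (scikit-learn imports as `sklearn`, opencv-python as `cv2`).

```python
import importlib.util
from typing import Iterable, List

# Import names for the pip packages installed above
# (scikit-learn -> sklearn, opencv-python -> cv2).
REQUIRED_MODULES = ["tensorflow", "numpy", "sklearn", "cv2", "keras", "hdfs"]


def missing_modules(names: Iterable[str]) -> List[str]:
    """Return the module names the current interpreter cannot locate."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED_MODULES)
    print("missing:", ", ".join(missing) if missing else "none")
```

Run it with the same interpreter the job will use (python3.6 in this article), on each node that may host a container if you are not using Docker.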

For a Docker example, see: https://github.com/hortonworks/hdp-assemblies/blob/master/tensorflow/markdown/Dockerfile.md

Run Command for an Example Classification

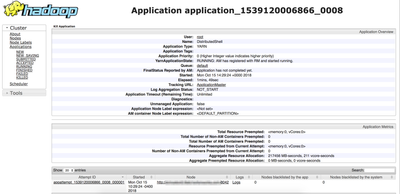

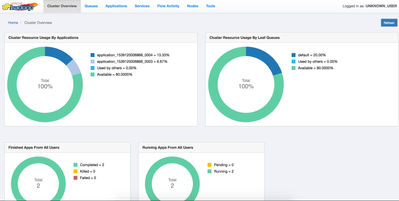

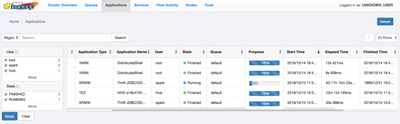

yarn jar /usr/hdp/current/hadoop-yarn-client/hadoop-yarn-applications-distributedshell.jar -jar /usr/hdp/current/hadoop-yarn-client/hadoop-yarn-applications-distributedshell.jar -shell_command python3.6 -shell_args "/opt/demo/DWS-DeepLearning-CrashCourse/tf.py /opt/demo/images/photo1.jpg" -container_resources memory-mb=512,vcores=1

Without Docker

-container_resources memory-mb=3072,vcores=1,yarn.io/gpu=2

With Docker (enable it first: https://docs.hortonworks.com/HDPDocuments/HDP3/HDP-3.0.1/data-operating-system/content/dosg_enable_g...)

-shell_env YARN_CONTAINER_RUNTIME_TYPE=docker \
-shell_env YARN_CONTAINER_RUNTIME_DOCKER_IMAGE=<docker-image-name> \
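To show how the fragments above fit into the full distributed shell command, here is a small sketch that assembles the argument list. The `build_dshell_command` helper and its parameter names are hypothetical; the flags, jar path, and environment variable names are the ones used in this article.

```python
import shlex
from typing import List, Optional

# Jar path as used in the commands in this article.
DSHELL_JAR = (
    "/usr/hdp/current/hadoop-yarn-client/"
    "hadoop-yarn-applications-distributedshell.jar"
)


def build_dshell_command(
    shell_args: str,
    memory_mb: int = 512,
    vcores: int = 1,
    gpus: int = 0,
    docker_image: Optional[str] = None,
) -> List[str]:
    """Assemble the distributed shell command as an argument list."""
    resources = f"memory-mb={memory_mb},vcores={vcores}"
    if gpus > 0:
        # Only request GPUs when asked; CPU-only runs omit yarn.io/gpu.
        resources += f",yarn.io/gpu={gpus}"
    cmd = [
        "yarn", "jar", DSHELL_JAR,
        "-jar", DSHELL_JAR,
        "-shell_command", "python3.6",
        "-shell_args", shell_args,
        "-container_resources", resources,
    ]
    if docker_image is not None:
        cmd += ["-shell_env", "YARN_CONTAINER_RUNTIME_TYPE=docker"]
        cmd += ["-shell_env", f"YARN_CONTAINER_RUNTIME_DOCKER_IMAGE={docker_image}"]
    return cmd


cmd = build_dshell_command(
    "/opt/demo/DWS-DeepLearning-CrashCourse/tf.py /opt/demo/images/photo1.jpg",
    memory_mb=3072,
    gpus=2,
)
# Print a copy-pasteable, shell-quoted version of the command.
print(" ".join(shlex.quote(part) for part in cmd))
```

Building a list rather than a single string keeps the quoted `-shell_args` value intact if you later pass `cmd` to `subprocess.run`.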

Running a More Complex Training Job

This is the main example: https://github.com/tensorflow/models/tree/master/tutorials/image/cifar10_estimator

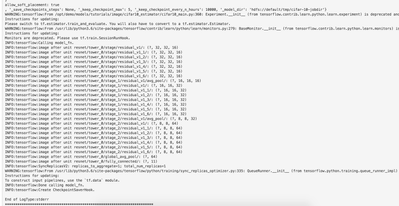

yarn jar /usr/hdp/current/hadoop-yarn-client/hadoop-yarn-applications-distributedshell.jar -jar /usr/hdp/current/hadoop-yarn-client/hadoop-yarn-applications-distributedshell.jar -shell_command python3.6 -shell_args "/opt/demo/models/tutorials/image/cifar10_estimator/cifar10_main.py --data-dir=hdfs://default/tmp/cifar-10-data --job-dir=hdfs://default/tmp/cifar-10-jobdir --train-steps=10000 --eval-batch-size=16 --train-batch-size=16 --sync --num-gpus=0" -container_resources memory-mb=512,vcores=1

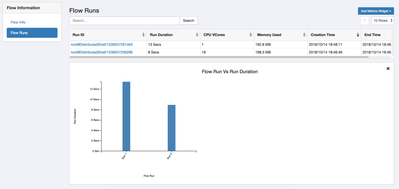

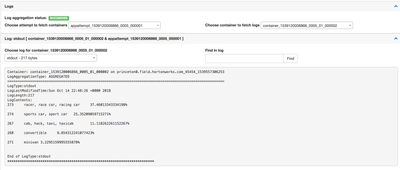

Example Output

[hdfs@princeton0 DWS-DeepLearning-CrashCourse]$ python3.6 tf.py

2018-10-15 02:37:23.892791: W tensorflow/core/framework/op_def_util.cc:355] Op BatchNormWithGlobalNormalization is deprecated. It will cease to work in GraphDef version 9. Use tf.nn.batch_normalization().

2018-10-15 02:37:24.181707: I tensorflow/core/platform/cpu_feature_guard.cc:141] Your CPU supports instructions that this TensorFlow binary was not compiled to use: AVX2 FMA

273 racer, race car, racing car 37.46013343334198%

274 sports car, sport car 25.35209059715271%

267 cab, hack, taxi, taxicab 11.118262261152267%

268 convertible 9.854312241077423%

271 minivan 3.2295159995555878%

Output Written to HDFS

hdfs dfs -ls /tfyarn

Found 1 items

-rw-r--r-- 3 root hdfs 457 2018-10-15 02:35 /tfyarn/tf_uuid_img_20181015023542.json

hdfs dfs -cat /tfyarn/tf_uuid_img_20181015023542.json

{"node_id273": "273", "humanstr273": "racer, race car, racing car", "score273": "37.46013343334198", "node_id274": "274", "humanstr274": "sports car, sport car", "score274": "25.35209059715271", "node_id267": "267", "humanstr267": "cab, hack, taxi, taxicab", "score267": "11.118262261152267", "node_id268": "268", "humanstr268": "convertible", "score268": "9.854312241077423", "node_id271": "271", "humanstr271": "minivan", "score271": "3.2295159995555878"}
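The record above stores each prediction as three flat keys that share a numeric suffix (node_id273, humanstr273, score273). A minimal sketch of regrouping them into ranked rows follows; the `ranked_predictions` helper is a hypothetical name, and the sample is a shortened copy of the record shown above.

```python
import json
import re
from typing import Dict, List, Tuple


def ranked_predictions(record: Dict[str, str]) -> List[Tuple[int, str, float]]:
    """Turn flat node_id*/humanstr*/score* keys into (id, label, score) rows."""
    rows = []
    for key, value in record.items():
        match = re.fullmatch(r"node_id(\d+)", key)
        if match:
            suffix = match.group(1)
            label = record["humanstr" + suffix]
            score = float(record["score" + suffix])
            rows.append((int(value), label, score))
    # Highest score first.
    return sorted(rows, key=lambda row: row[2], reverse=True)


# Shortened copy of the record shown above (two of the five classes).
sample = json.loads(
    '{"node_id273": "273", "humanstr273": "racer, race car, racing car", '
    '"score273": "37.46013343334198", "node_id274": "274", '
    '"humanstr274": "sports car, sport car", "score274": "25.35209059715271"}'
)
for node_id, label, score in ranked_predictions(sample):
    print(f"{node_id} {label} {score:.2f}%")
```

The same function works on the full record, for example after saving the output of the hdfs dfs -cat command to a local file and loading it with `json.load`.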

Full Source Code

https://github.com/tspannhw/TensorflowOnYARN

Resources

- https://www.tensorflow.org/

- https://github.com/tspannhw/ApacheDeepLearning101/blob/master/yarn.sh

- https://github.com/hortonworks/hdp-assemblies/

- https://docs.hortonworks.com/HDPDocuments/HDP3/HDP-3.0.1/data-operating-system/content/configuring_g...

- https://docs.hortonworks.com/HDPDocuments/HDP3/HDP-3.0.1/data-operating-system/content/dosg_enable_g...

- https://docs.hortonworks.com/HDPDocuments/HDP3/HDP-3.0.0/data-operating-system/content/dosg_recommen...

- https://feathercast.apache.org/2018/10/02/deep-learning-on-yarn-running-distributed-tensorflow-mxnet...

- https://github.com/deep-diver/CIFAR10-img-classification-tensorflow

- https://aajisaka.github.io/hadoop-document/hadoop-project/hadoop-yarn/hadoop-yarn-applications/hadoo...

- https://conferences.oreilly.com/strata/strata-ny-2018/public/schedule/detail/68289

- https://github.com/tspannhw/ApacheDeepLearning101/blob/master/analyzehdfs.py

- https://github.com/open-source-for-science/TensorFlow-Course

- https://github.com/hortonworks/hdp-assemblies/blob/master/tensorflow/markdown/Dockerfile.md

- https://github.com/hortonworks/hdp-assemblies/blob/master/tensorflow/markdown/TensorflowOnYarnTutori...

- https://github.com/hortonworks/hdp-assemblies/blob/master/tensorflow/markdown/RunTensorflowJobUsingH...

Documentation

- https://hadoop.apache.org/docs/r3.1.0/

- https://docs.hortonworks.com/HDPDocuments/HDP3/HDP-3.0.1/data-operating-system/content/options_distr...

Coming Soon