Submitting Spark Jobs From Apache NiFi Using Livy

Created on 12-22-2016 08:16 PM - edited 08-17-2019 06:52 AM

Running Livy on HDP 2.5

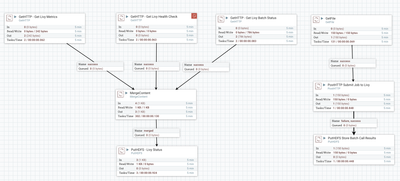

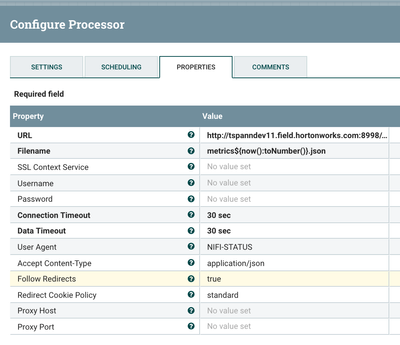

Ingest Metrics REST API From Livy with Apache NiFi / HDF

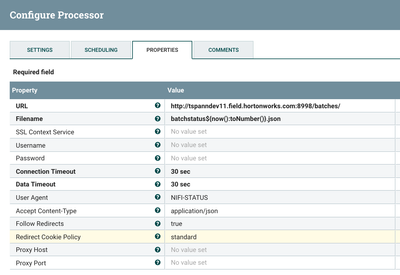

Use GetHTTP To Ingest The Status On Your Batch From Livy

Running Livy

First, download Livy from GitHub. Installing it on HDP 2.5 is simple: I found a node that wasn't too busy and put the project there.

Running it is simple too:

export SPARK_HOME=/usr/hdp/current/spark-client/

export HADOOP_CONF_DIR=/etc/hadoop/conf

nohup ./livy-server &

That's it: you now have a basic, unprotected Livy instance running. This is important: there is no security on it. You should either put Knox in front of it or enable Livy's security.

I wanted to submit a Scala Spark batch job, so I wrote a quick one (linked below) to have something to call.

Source Code for Example Spark 1.6.2 Batch Application:

- https://github.com/tspannhw/links

- https://community.hortonworks.com/repos/73816/links-spark-batch-application-example.html?shortDescri...

Step 1: GetFile

Store the file /opt/demo/sparkrun.js, containing the JSON that triggers Spark through Livy, where GetFile can pick it up.

{"file": "/apps/Links.jar","className": "com.dataflowdeveloper.links.Links"}

Step 2: PostHTTP

Make the call to the Livy REST API to submit the Spark job.
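The JSON in Step 1 is the body of a Livy batch request. As a minimal sketch, the same body could be generated with Python's standard library instead of being written by hand. The helper name `build_batch_payload` is hypothetical; the `file`, `className`, and `args` keys are the ones used in this article.

```python
import json


def build_batch_payload(jar_path, class_name, args=None):
    """Return the JSON body for a Livy batch submission as a string.

    Hypothetical helper: it emits only the "file", "className" and
    "args" keys that appear in this article.
    """
    payload = {"file": jar_path, "className": class_name}
    if args:
        # Program arguments handed on to the Spark application.
        payload["args"] = list(args)
    return json.dumps(payload)


if __name__ == "__main__":
    body = build_batch_payload(
        "/apps/Links.jar", "com.dataflowdeveloper.links.Links"
    )
    # Redirect this output to /opt/demo/sparkrun.js for GetFile to read.
    print(body)
```

Generating the file this way guarantees it is valid JSON before NiFi posts it.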

Step 3: PutHDFS

Store the results of the call in Hadoop HDFS.

Livy Logs

16/12/21 22:50:25 INFO LivyServer: Using spark-submit version 1.6.2

16/12/21 22:50:25 WARN RequestLogHandler: !RequestLog

16/12/21 22:50:25 INFO WebServer: Starting server on http://tspanndev11.field.hortonworks.com:8998

16/12/21 22:51:20 INFO SparkProcessBuilder: Running '/usr/hdp/current/spark-client/bin/spark-submit' '--name' 'Livy' '--class' 'com.dataflowdeveloper.links.Links' 'hdfs://hadoopserver:8020/opt/demo/links.jar' '/linkprocessor/379875e9-5d99-4f88-82b1-fda7cdd7bc98.json'

16/12/21 22:51:20 INFO SessionManager: Registering new session 0
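The SparkProcessBuilder entry is the most useful line in this log, because it shows the exact spark-submit command Livy ran for the batch. As a rough sketch, the quoted arguments can be pulled out of such a line with a regular expression. The function name `spark_submit_args` is hypothetical, and the sketch assumes no argument contains a single quote.

```python
import re

# The SparkProcessBuilder line from the Livy log in this article.
LOG_LINE = (
    "16/12/21 22:51:20 INFO SparkProcessBuilder: Running "
    "'/usr/hdp/current/spark-client/bin/spark-submit' '--name' 'Livy' "
    "'--class' 'com.dataflowdeveloper.links.Links' "
    "'hdfs://hadoopserver:8020/opt/demo/links.jar' "
    "'/linkprocessor/379875e9-5d99-4f88-82b1-fda7cdd7bc98.json'"
)


def spark_submit_args(line):
    """Return the single-quoted tokens of a SparkProcessBuilder log line.

    Returns an empty list for any other kind of log line.
    """
    if "SparkProcessBuilder: Running" not in line:
        return []
    return re.findall(r"'([^']*)'", line)


if __name__ == "__main__":
    for token in spark_submit_args(LOG_LINE):
        print(token)
```

Comparing these tokens with the JSON you posted is a quick way to confirm that the JAR path, class name, and arguments arrived as intended.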

Spark Compiled JAR File Must Be Deployed to HDFS and Be Readable

hdfs dfs -put Links.jar /apps

hdfs dfs -chmod 777 /apps/Links.jar

Checking YARN for Our Application

yarn application -list

Submitting a Scala Spark Job the Normal Way

$SPARK_HOME/bin/spark-submit --class "com.dataflowdeveloper.links.Links" --master yarn --deploy-mode cluster /opt/demo/Links.jar

Deploying a Scala Spark Application Built With SBT

scp target/scala-2.10/links.jar user@server:/opt/demo

Reference:

- https://docs.microsoft.com/en-us/azure/hdinsight/hdinsight-apache-spark-livy-rest-interface

- https://docs.microsoft.com/en-us/azure/hdinsight/hdinsight-apache-spark-create-standalone-applicatio...

- https://github.com/cloudera/livy

- http://livy.io/quickstart.html

- https://community.hortonworks.com/articles/73355/adding-a-custom-processor-to-nifi-linkprocessor.htm...

- https://github.com/tspannhw/Logs

- https://community.hortonworks.com/repos/73434/apache-spark-apache-logs-parser-example.html

Livy REST API

- Livy REST API for Interactive Spark Sessions (JSON): http://server:8998/sessions

- Livy Stats via REST API (JSON): http://server:8998/healthcheck?pretty=true and http://server:8998/metrics?pretty=true

- Livy Batch Submit Status via REST API (JSON): http://server:8998/batches
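Outside NiFi, the batch status endpoint can be polled with a few lines of Python. The sketch below requests a single batch and returns its `state` field. The function name `batch_state` and the injectable `opener` parameter are illustrative assumptions, and the `/batches/{id}` path and `state` field should be checked against the REST documentation for your Livy version.

```python
import json
import urllib.request


def batch_state(base_url, batch_id, opener=urllib.request.urlopen):
    """Fetch one batch from Livy and return its "state" field.

    base_url is e.g. "http://server:8998" (the placeholder host used in
    this article). opener is injectable so the function can be tried
    without a running Livy server.
    """
    url = f"{base_url.rstrip('/')}/batches/{batch_id}"
    with opener(url) as response:
        batch = json.loads(response.read().decode("utf-8"))
    # Returns None if the reply carries no "state" key.
    return batch.get("state")
```

For the session registered in the log above, the call would be `batch_state("http://server:8998", 0)`.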

To Submit to Livy from the Command Line

curl -X POST --data '{"file": "/opt/demo/links.jar","className": "com.dataflowdeveloper.links.Links","args": ["/linkprocessor/379875e9-5d99-4f88-82b1-fda7cdd7bc98.json"]}' -H "Content-Type: application/json" http://server:8998/batches
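The same submission can be made from a script instead of curl. The following sketch uses only Python's standard library. The function names `build_batch_request` and `submit_batch` are hypothetical, `server` is the placeholder host used throughout this article, and no authentication is handled, which matches the unprotected instance described earlier.

```python
import json
import urllib.request


def build_batch_request(base_url, payload):
    """Build, but do not send, the POST request the curl command makes."""
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/batches",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def submit_batch(base_url, payload, opener=urllib.request.urlopen):
    """Send the batch request and return Livy's JSON reply as a dict."""
    request = build_batch_request(base_url, payload)
    with opener(request) as response:
        return json.loads(response.read().decode("utf-8"))


if __name__ == "__main__":
    payload = {
        "file": "/opt/demo/links.jar",
        "className": "com.dataflowdeveloper.links.Links",
        "args": ["/linkprocessor/379875e9-5d99-4f88-82b1-fda7cdd7bc98.json"],
    }
    # Only builds the request here; call submit_batch() against a real server.
    request = build_batch_request("http://server:8998", payload)
    print(request.get_method(), request.full_url)
```

Keeping request construction separate from sending makes it easy to inspect the URL, headers, and body before anything reaches the cluster.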

NIFI Template

Created on 12-24-2016 07:24 PM

Nice! BTW, HDP 2.5 has Livy built in. It can be found under the Spark service in Ambari.

Created on 02-14-2017 03:13 PM

That Livy is only for Zeppelin; it's not safe to use.

In HDP 2.6, there will be a Livy available for general usage.

Created on 02-20-2017 08:11 PM

How do I submit a Python Spark job with a Kerberos keytab and principal?

Created on 09-13-2017 01:28 PM

Livy supports that and is now a full citizen in HDP. I have not tried it, but post a question.

Created on 11-28-2017 03:32 AM

default port is 8999

Created on 12-08-2017 02:18 PM

Created on 02-23-2022 11:54 AM

Hi Team,

We have Apache Livy running on EMR. As part of our POC we need to submit Spark jobs via Livy. For this we need to build a connection from NiFi -> Livy which will submit a session.

While connecting to Livy via NiFi we are having issues in ExecuteSparkInteractive. Here is the error:

LivySessionController[id=5c0f3fbf-f3a8-1dc8-0000-0000432c09c1] Livy Session Manager Thread run into an error, but continues to run: java.lang.NullPointerException

We used the telnet command from the NiFi nodes to connect to Livy directly and that worked fine, but the connection from NiFi itself is failing.

From the node we are able to submit the Livy session / Spark Job.

Thanks,

Haider Naveed

Created on 02-24-2022 01:04 PM

@HaiderNaveed As this is an older post, you would have a better chance of receiving a resolution by starting a new thread. This will also be an opportunity to provide details specific to your environment that could aid others in assisting you with a more accurate answer to your question. You can link this thread as a reference in your new post. Thanks!