Adding a second user on hadoop cluster

- Labels: Apache Hadoop, Apache Spark

Is it possible to add a second user on a Hadoop cluster, like the spark user?

Created on 01-10-2020 06:04 PM - edited 01-10-2020 06:06 PM

On the edgenode

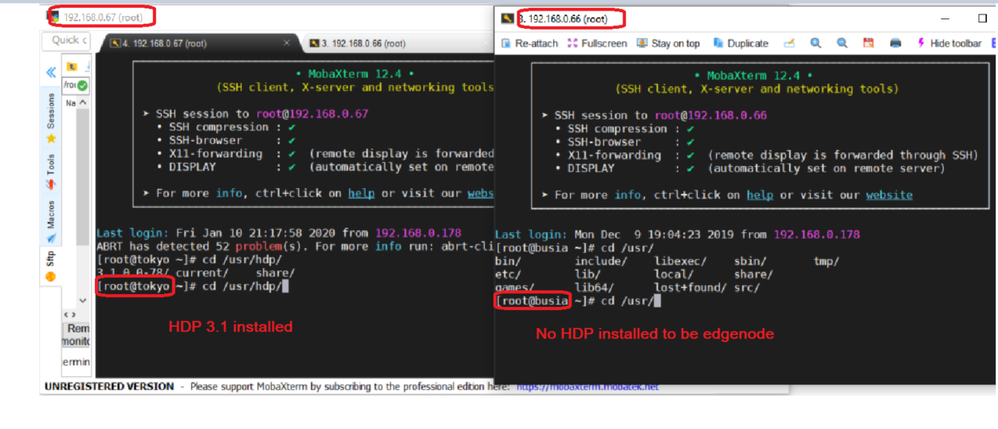

Just to validate your situation, I spun up a single-node cluster, Tokyo (IP 192.168.0.67), and installed an edge node, Busia (IP 192.168.0.66). I will demonstrate the Spark client setup on the edge node and invoke the spark-shell.

First I have to configure passwordless ssh for my edge node.

Passwordless setup

[root@busia ~]# mkdir .ssh

[root@busia ~]# chmod 600 .ssh/

[root@busia ~]# cd .ssh

[root@busia .ssh]# ll

total 0
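A note on the permissions used above: the conventional modes are 700 for the .ssh directory and 600 for authorized_keys, because a directory without the execute bit cannot be entered by a non-root user. The sketch below shows that convention. The helper name prepare_ssh_dir is hypothetical and not part of the original post.

```shell
# prepare_ssh_dir is a hypothetical helper, not from the original post.
# It creates an SSH directory with the conventional permissions:
# 700 on the directory and 600 on authorized_keys.
prepare_ssh_dir() {
  local dir="${1:-$HOME/.ssh}"
  mkdir -p "$dir" || return 1
  chmod 700 "$dir"
  touch "$dir/authorized_keys"
  chmod 600 "$dir/authorized_keys"
}
```

Run with no argument, it prepares $HOME/.ssh for the current user, for example as root on the edge node before the public key is appended.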

Networking not set up

The master is unreachable from the edge node

[root@busia .ssh]# ping 198.168.0.67

PING 198.168.0.67 (198.168.0.67) 56(84) bytes of data.

From 198.168.0.67 icmp_seq=1 Destination Host Unreachable

From 198.168.0.67 icmp_seq=3 Destination Host Unreachable

On the master

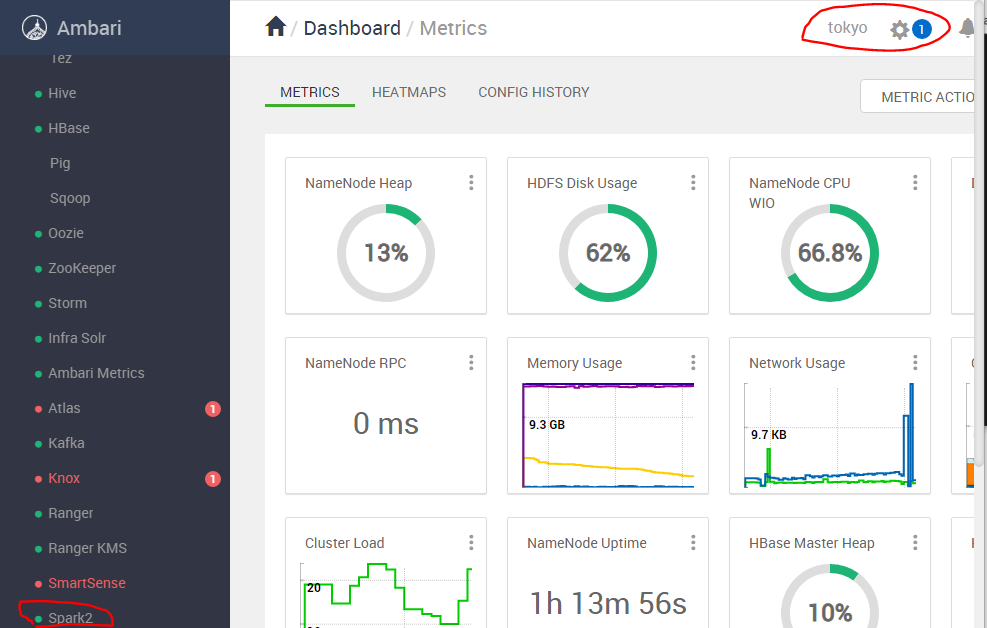

The master runs a single-node HDP 3.1.0 cluster. I will deploy the clients to the edge node from here.

[root@tokyo ~]# cd .ssh/

[root@tokyo .ssh]# ll

total 16

-rw------- 1 root root 396 Jan 4 2019 authorized_keys

-rw------- 1 root root 1675 Jan 4 2019 id_rsa

-rw-r--r-- 1 root root 396 Jan 4 2019 id_rsa.pub

-rw-r--r-- 1 root root 185 Jan 4 2019 known_hosts

Networking not set up

The edge node is still unreachable from the master Tokyo

[root@tokyo .ssh]# ping 198.168.0.66

PING 198.168.0.66 (198.168.0.66) 56(84) bytes of data.

From 198.168.0.66 icmp_seq=1 Destination Host Unreachable

From 198.168.0.66 icmp_seq=2 Destination Host Unreachable

Copied the id_rsa.pub key to the edge node

[root@tokyo ~]# cat .ssh/id_rsa.pub | ssh root@192.168.0.215 'cat >> .ssh/authorized_keys'

The authenticity of host '192.168.0.215 (192.168.0.215)' can't be established.

ECDSA key fingerprint is SHA256:ZhnKxkn+R3qvc+aF+Xl5S4Yp45B60mPIaPpu4f65bAM.

ECDSA key fingerprint is MD5:73:b3:5a:b4:e7:06:eb:50:6b:8a:1f:0f:d1:07:55:cf.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '192.168.0.215' (ECDSA) to the list of known hosts.

root@192.168.0.215's password:

Validate that passwordless ssh works

[root@tokyo ~]# ssh root@192.168.0.215

Last login: Fri Jan 10 22:36:01 2020 from 192.168.0.178

[root@busia ~]# hostname -f

busia.xxxxxx.xxx

xxxxxx

Single node Cluster

[root@tokyo ~]# useradd asmarz

[root@tokyo ~]# su - asmarz

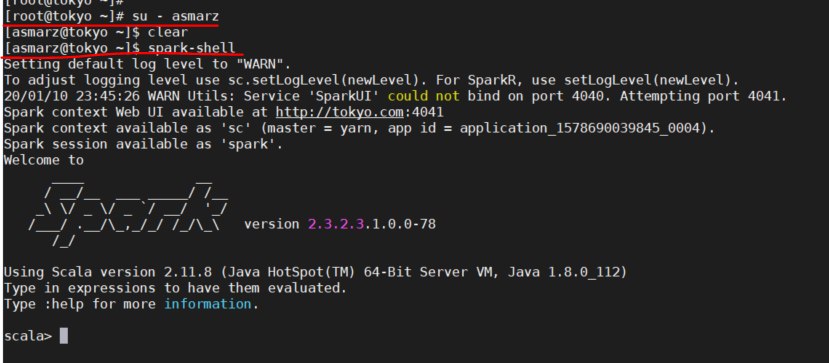

On the master as user asmarz I can access the spark-shell and execute any spark code

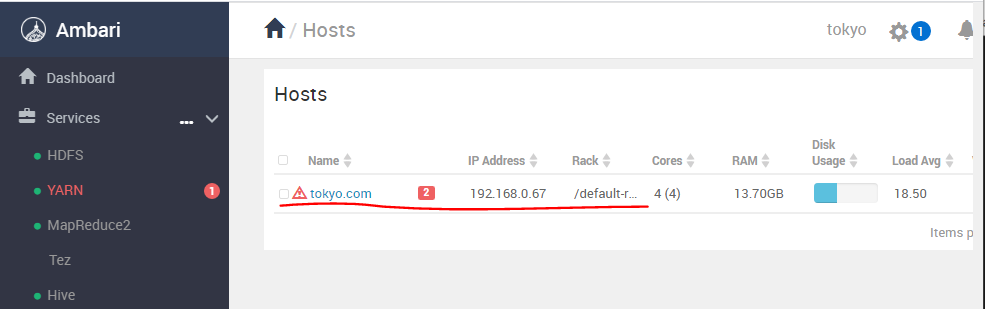

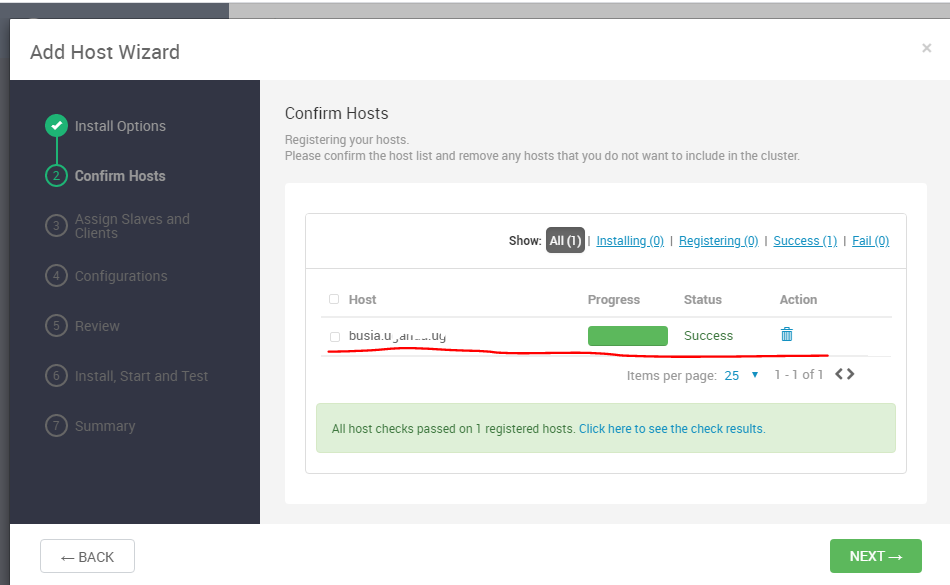

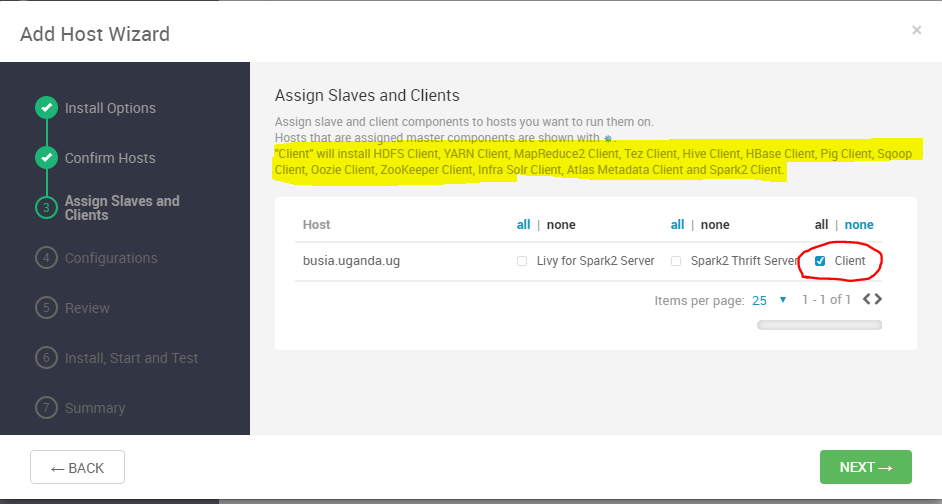
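Depending on the cluster, a new OS user may also need an HDFS home directory before Spark on YARN can stage its files. That step is not shown in the post, so treat the sketch below as an assumption about a typical HDP setup where hdfs is the HDFS superuser. The helper print_user_setup is hypothetical and only prints the commands for review.

```shell
# print_user_setup is a hypothetical helper, not from the original post.
# It only PRINTS the commands; nothing is executed.
# Assumption: the HDFS superuser account is named "hdfs".
print_user_setup() {
  local user="$1"
  printf 'useradd %s\n' "$user"
  printf 'sudo -u hdfs hdfs dfs -mkdir -p /user/%s\n' "$user"
  printf 'sudo -u hdfs hdfs dfs -chown %s /user/%s\n' "$user" "$user"
}
```

Calling print_user_setup asmarz lists the three commands, which can then be reviewed and run by hand as root.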

Add the edge node to the cluster

Install the clients on the edge node

On the master as user asmarz I have access to the spark-shell

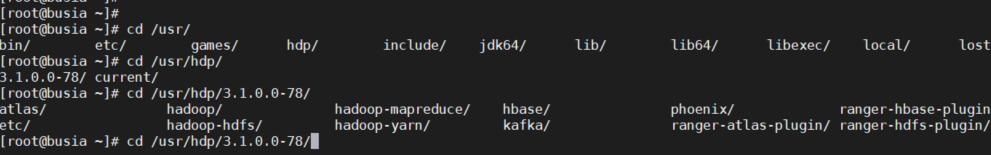

The client components installed on the edge node can be seen in the CLI

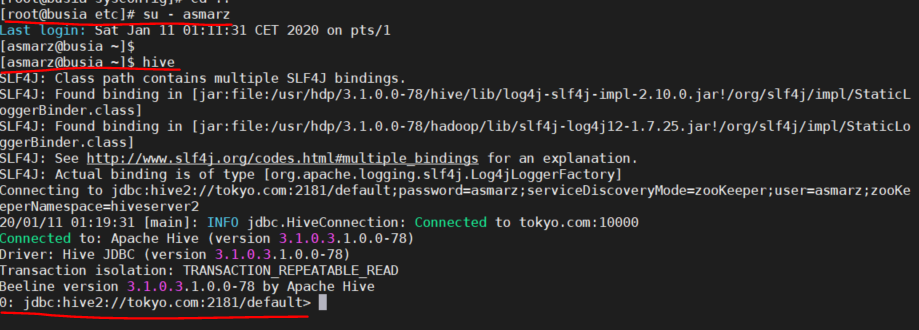

I chose to install all the clients on the edge node just for the demo. Since the Hive client is already installed on the edge node, I can now run Hive HQL against the master Tokyo from the edge node without any special setup.

After installing the Spark client on the edge node, I can also launch the spark-shell from the edge node and run any Spark code. This demonstrates that you can create any user on the edge node and that user can run Hive HQL, Spark SQL or a Pig script. You will notice I didn't update the HDFS, YARN, MapReduce or Hive configurations. Ambari did that automatically during the installation by copying the correct conf files over to the edge node.

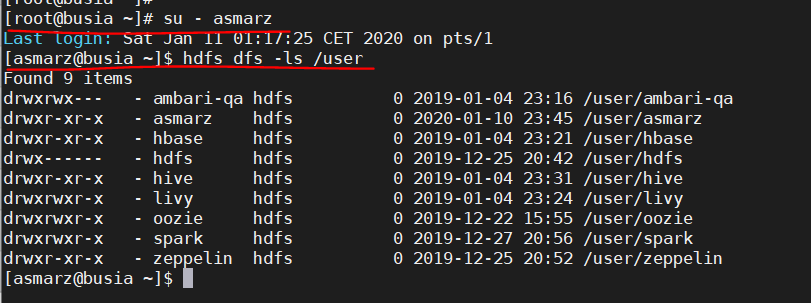
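One way to confirm that the configuration files really arrived on the edge node is to check that the client conf directories exist and are not empty. This is a minimal sketch. The helper missing_conf_dirs is hypothetical, and the paths in the usage note are the usual HDP locations, which is an assumption about this cluster.

```shell
# missing_conf_dirs is a hypothetical helper, not from the original post.
# It prints every directory given as an argument that either does not
# exist or contains no files.
missing_conf_dirs() {
  local dir
  for dir in "$@"; do
    if [ ! -d "$dir" ] || [ -z "$(ls -A "$dir" 2>/dev/null)" ]; then
      printf '%s\n' "$dir"
    fi
  done
}
```

For example, missing_conf_dirs /etc/hadoop/conf /etc/hive/conf /etc/spark2/conf prints nothing when all three directories are populated.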

The asmarz user on the edge node can also access HDFS

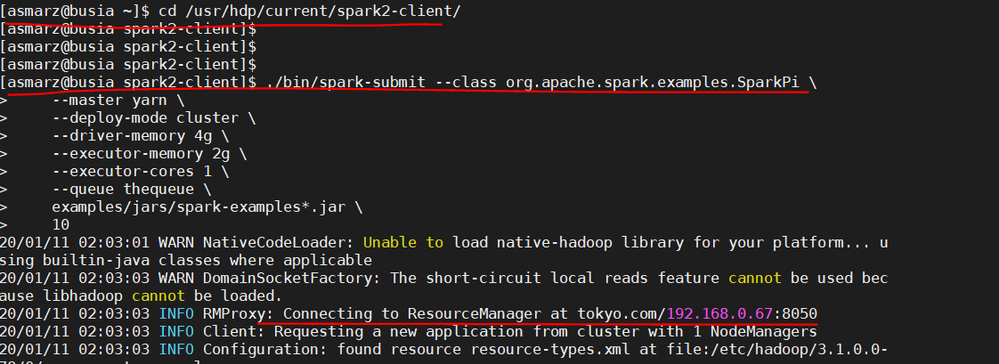

Now as user asmarz I have launched a spark-submit job from the edge node

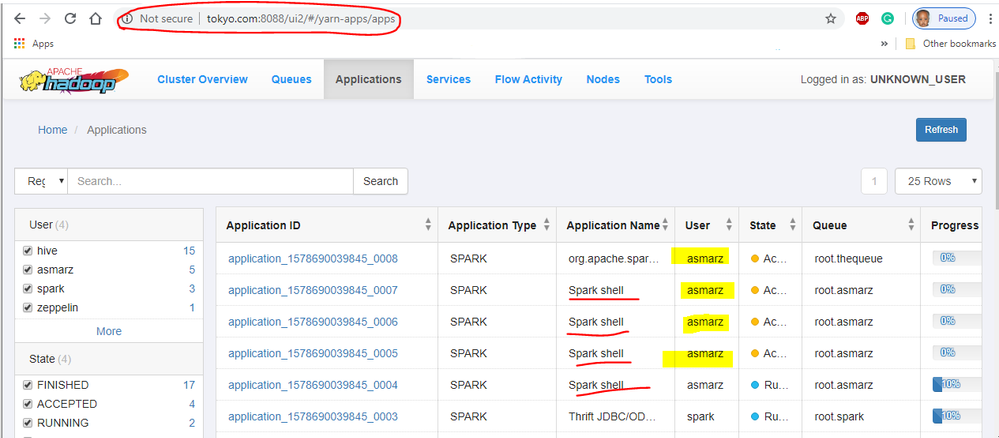
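The exact command is only visible in the screenshot, so here is a hedged sketch of what a YARN submission from the edge node typically looks like. The helper build_submit_cmd is hypothetical and only prints the command line. The class org.apache.spark.examples.SparkPi is the example shipped with Spark, and the jar path is a placeholder.

```shell
# build_submit_cmd is a hypothetical helper, not from the original post.
# It prints a spark-submit command line for YARN cluster mode and does
# not submit anything. Arguments are printed unquoted, so the output is
# meant for display and review only.
build_submit_cmd() {
  local class="$1" jar="$2" arg
  shift 2
  printf 'spark-submit --master yarn --deploy-mode cluster --class %s %s' "$class" "$jar"
  for arg in "$@"; do
    printf ' %s' "$arg"
  done
  printf '\n'
}
```

For example, build_submit_cmd org.apache.spark.examples.SparkPi /path/to/spark-examples.jar 10 prints a command that a user such as asmarz could run on the edge node.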

The launch is successful on the master Tokyo (see the Resource Manager URL), and that can be confirmed in the RM UI.

This walkthrough validates that any user on the edge node can launch a job in the cluster. That is a security problem in production, hence my earlier hint about Kerberos.

Having said that, you will notice I didn't do any special configuration after the client installation. Ambari distributes the correct configuration for every component, and it does so each time a new component is installed. That is why Ambari is a management tool.

If this walkthrough answers your question, please do accept the answer and close the thread.

Happy hadooping

Created 01-09-2020 10:37 AM

Your question is ambiguous; can you elaborate? It is possible to add users to a cluster with all the privileges needed to run, for example, Spark or Hive. In a kerberized cluster you can merge the different keytabs (hive, spark, oozie, etc.) or control access through Ranger.

But if you can elaborate on your use-case then we can try to find a technical solution.
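As an illustration of the keytab merge mentioned above, MIT Kerberos ktutil can read several keytabs with rkt and write them out as one with wkt. The sketch below only generates the ktutil commands. The helper merge_keytab_script and the keytab file names in the usage note are hypothetical.

```shell
# merge_keytab_script is a hypothetical helper, not from the original post.
# It prints a ktutil command script: one "rkt" (read keytab) line per
# input keytab, then "wkt" (write keytab) for the merged output.
# Usage: merge_keytab_script OUTPUT INPUT...
merge_keytab_script() {
  local out="$1" kt
  shift
  for kt in "$@"; do
    printf 'rkt %s\n' "$kt"
  done
  printf 'wkt %s\n' "$out"
  printf 'quit\n'
}
```

The output can be reviewed first and then piped into ktutil, for example merge_keytab_script merged.keytab hive.keytab spark.keytab | ktutil.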

Created 01-10-2020 02:17 AM

Thank you for your reply 🙂

I will explain my actual situation.

I have installed a Hadoop cluster using Ambari (Hortonworks).

One of the nodes is an edge node. I defined it as a Spark master, and I can run spark-submit from this Linux server (the edge node) as the spark user on 4 workers (datanodes).

My question now: I will have developers and end users who should execute scripts from their local machines on the edge node. These users should not access the Linux server (edge node) directly, but they will have to launch scripts (spark-submit). How can I create accounts for them? How could they access the edge node?

Thanks

Asma

Created on 01-10-2020 05:49 AM - edited 01-10-2020 06:23 AM

Now that I have a better understanding of your deployment, I think it is the wrong technical approach. Having an edge node is a great idea because you can create and control access to the cluster from it. Usually an edge node holds client software only (YARN, HDFS, Oozie, ZooKeeper, Spark, Sqoop, Pig clients, etc.), not a master process.

Edge nodes that run within the cluster allow centralized management of all the Hadoop configuration entries on the cluster nodes, which reduces the administration needed to update the config. When you configure a Linux box as an edge node during deployment, Ambari updates the conf files with the correct values so that all commands against the cluster can be run from the edge node.

For security and good practice, edge nodes need to be multi-homed into the private subnet of the Hadoop cluster as well as into the corporate network. Keeping your Hadoop cluster in its own private subnet is an excellent practice, so these edge nodes serve as a controlled window into the cluster.

In a nutshell, you don't need a master process on the edge node, only clients to initiate communication with the cluster.

Hope that helps

Created 01-10-2020 06:25 AM

"In a nutshell, you don't need a master process on the edge node, only clients to initiate communication with the cluster."

=> Actually, this is my question 🙂 Which client? Should I create a local user like spark and then ask the end users to use it to launch, for example, this command from their machines?

spark-submit --master spark://edgenode:7077 calculPi.jar

All I need for now is that end users can run their Spark or Python scripts from their side. How can we do this? Do they need to access the edge server?

Thanks

Asma

Created 01-10-2020 08:01 AM

An HDP client is a set of binaries and libraries used to run commands and develop software for a particular Hadoop service. So if you install the Hive client you can run beeline, if you install the HBase client you can run the HBase shell, and if you install the Spark client you can run spark-shell, etc.

I would advise you to install at least these clients on the edge node:

- zookeeper-client

- sqoop-client

- spark2-client

- slider-client

- spark-client

- oozie-client

- hbase-client
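After the client packages in the list above are installed, a quick sanity check is to see whether the matching commands are on the PATH of a local user. This is a minimal sketch. The helper missing_commands is hypothetical, and the command names in the usage note are the usual client entry points, not an exhaustive list.

```shell
# missing_commands is a hypothetical helper, not from the original post.
# It prints each command name from its arguments that cannot be found
# on the current PATH.
missing_commands() {
  local cmd
  for cmd in "$@"; do
    command -v "$cmd" >/dev/null 2>&1 || printf '%s\n' "$cmd"
  done
}
```

For example, missing_commands hdfs yarn beeline spark-shell spark-submit prints nothing when every client is available to the user running it.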

Local users created on the edge node can run spark-shell or spark-submit. The only difference is that on a kerberized cluster you will have to generate a keytab for every user and copy it over to the edge node.

Hope that answers your question

Created 01-10-2020 08:13 AM

Thank you again!!

1) I have installed these clients on my edge node 🙂

2) For now, we have decided that the cluster will not be configured with Kerberos

3) What I actually want is for end users to be able to use the spark-shell 🙂

4) For this, I created a local user called "sparkuser" on the edge node

5) With which tool could a client use the spark-shell? An API? An application? All I want is for users to use the sparkuser account that I created on the edge node to submit their scripts, but I do not want these users to access the edge node server directly (so remotely, via an API, or something else?). I hope that explains it better 😄

Many thanks for your help and patience

Asma
