Apache Nifi - How to generate Email alerts in a Nifi Cluster

Labels: Apache NiFi

Created 09-02-2016 11:00 PM

Hi,

I am trying to use PutEmail in my workflow to send an email alert whenever something fails. I have 8 slave nodes, and my dataflow runs on all of them (not just the primary node).

The issue is that I get multiple emails when a single processor has errors. I think this is because PutEmail runs on all 8 nodes, so each node sends its own email.

- Is there a way to ensure that we always get 1 email instead of 8?

Thanks

Obaid

Created 11-14-2016 04:46 PM

I guess it depends on what you are trying to achieve. You are right that you would get an email per node in your cluster, but each email would cover the errors on that node only, so it's not like you are getting 8 copies of the same email.

If you really want only 1 email, it would probably be easiest to use something in between as a buffer for all your errors. For example, have a Kafka topic like "errors" and have each node in your cluster publish to it using PublishKafka. Then have a ConsumeKafka that runs only on the primary node, merges together some amount of errors, and sends the merged result to a PutEmail. You could do the same thing with JMS, a shared filesystem, or anywhere else you can put the errors and then retrieve them from.
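To illustrate the "merge, then send one email" step, here is a minimal Python sketch of what the primary-node side of such a flow does conceptually. It is not NiFi code: the record layout (`node`, `message`), the `build_digest` helper, and the addresses are all assumptions made for the example. In a real flow this work would more likely be done by NiFi processors.

```python
from collections import defaultdict
from email.message import EmailMessage


def build_digest(errors, sender, recipient):
    """Combine error records from all nodes into a single email message."""
    # Group the error messages by the node that reported them.
    by_node = defaultdict(list)
    for record in errors:
        by_node[record["node"]].append(record["message"])

    # One summary line per node, followed by that node's errors.
    lines = []
    for node in sorted(by_node):
        lines.append(f"{node}: {len(by_node[node])} error(s)")
        lines.extend(f"  - {text}" for text in by_node[node])

    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = recipient
    msg["Subject"] = f"NiFi errors: {len(errors)} from {len(by_node)} node(s)"
    msg.set_content("\n".join(lines))
    return msg


# Hypothetical batch, as a primary-node consumer might see it after reading
# from the shared "errors" buffer.
batch = [
    {"node": "node-1", "message": "PutHDFS failed"},
    {"node": "node-3", "message": "InvokeHTTP timed out"},
    {"node": "node-1", "message": "PutHDFS failed again"},
]
digest = build_digest(batch, "nifi@example.com", "ops@example.com")
print(digest["Subject"])
print(digest.get_content())
```

The point of the sketch is only that the errors from every node end up in one place before a single message is built, which is what running the consumer on the primary node achieves.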

Created 09-02-2016 11:48 PM

The PutEmail processor can be configured to run only on the primary node. The configuration is available under the scheduling tab of the processor settings window.

Created on 09-03-2016 09:10 AM - edited 08-19-2019 01:57 AM

Thanks @Sam Hjelmfelt for your reply,

Yes, if the data lands on the primary node, PutEmail works as expected. However, if the data lands on a slave node, no email is generated and the flowfiles stay stuck in the connection forever (i.e. the slave nodes are not able to talk to the primary node).

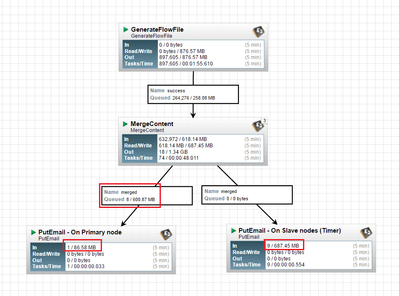

Following is an example flow (template is attached, please check it out):

In this dataflow, we generate flowfiles, run MergeContent (every 20 seconds), and then pass the result on to two PutEmail processors in parallel. The first PutEmail runs on the primary node only, whereas the second PutEmail runs on all slave nodes (timer driven). For the PutEmail on the primary node, it seems that of 9 generated files only 1 got processed, whereas 8 got stuck in the connection (it seems the slave nodes are not able to talk to the primary). The second PutEmail worked just fine, i.e. it processed all 9 flowfiles.

So, is there a way to generate 1 Email alert if a processor fails in a cluster?

PS:

Created 09-04-2016 08:22 PM

Created 11-14-2016 10:25 AM

@Obaid Salikeen Were you able to send notification alerts? I'm having the same issue, and I would like to configure email alerts for NiFi when there is a failure.

Created 11-15-2016 06:13 AM

@Joshua Adeleke: Yes, I was able to send email alerts through PutEmail (some time back); however, I don't use it for alerts (I'm actually still looking for a better solution).

Current approach: I implemented a ReportingTask to send metrics to InfluxDB (particularly success/failure connection metrics) and use Kapacitor for alerts (you could use any other system to monitor the metrics). So, for example, you could issue alerts if flowfiles land on any processor's failure relationship (this doesn't work all the time, since some processors don't have failure relationships).

However, for a better approach, check out Bryan's comments above!

Created 12-02-2016 07:40 AM

@Obaid Salikeen Thank you for also putting up the XML file. It helped while setting up mine. I want to ask: have you tried sending to an email group? The mail group we created has a special character at the start of its address (#emailgroup@domain.com). How can I use this email group even with the special character? @Bryan Bende

Created 11-15-2016 12:13 AM

Thanks a lot @Bryan Bende for sharing your thoughts,

- Could you also recommend how we should monitor dataflows to detect all failures? (Other than PutEmail, would you also recommend monitoring the NiFi logs, or do you think PutEmail is a good enough solution?)

- Another idea I wanted to discuss/share: write a reporting task that reports failures/errors to a configured email address, Slack channel, etc. This way you would not need to hook up PutEmail to each processor (if you have many processors, connecting them all to PutEmail makes the flow look complicated). By default, you could get alerts for any failure without configuring or changing flows. Any thoughts?

Thanks again

Obaid

Created 11-15-2016 02:07 AM

PutEmail is definitely good for specific parts of the flow. As you mentioned it can get complex quickly trying to route all the failures to a single PutEmail.

The ReportingTask is definitely a good idea. When a ReportingTask executes it gets access to a ReportingContext which has access to BulletinRepository, which then gets you access to any of the bulletins you see in the UI. You could have one that got all the error bulletins and sent them somewhere or emailed them.
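As a rough illustration of the "collect the error bulletins and email them" idea, here is a small Python sketch. A real ReportingTask would be written in Java against the NiFi API; the `Bulletin` class below is only a hypothetical stand-in, and its field names are assumptions rather than NiFi's actual types.

```python
from dataclasses import dataclass


@dataclass
class Bulletin:
    # Hypothetical stand-in for a NiFi bulletin; field names are assumptions.
    level: str
    source_name: str
    message: str


def error_bulletins(bulletins):
    """Keep only ERROR-level bulletins, preserving their original order."""
    return [b for b in bulletins if b.level.upper() == "ERROR"]


def format_report(bulletins):
    """Render the selected bulletins as plain text, one line per bulletin."""
    return "\n".join(
        f"[{b.level}] {b.source_name}: {b.message}" for b in bulletins
    )


# Made-up sample data standing in for what a bulletin repository might hold.
sample = [
    Bulletin("WARNING", "GetFile", "Directory is empty"),
    Bulletin("ERROR", "PutHDFS", "Failed to write file"),
    Bulletin("ERROR", "InvokeHTTP", "Connection timed out"),
]
report = format_report(error_bulletins(sample))
print(report)
```

The resulting text could then be handed to whatever sends the notification (email, Slack, and so on), so individual processors would not need their own PutEmail.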

Along the lines of monitoring the logs, you could probably configure NiFi's logback.xml to do UDP or TCP forwarding of all log events at the ERROR level, and then have a ListenUDP/ListenTCP processor in NiFi receive them and send an email. If you are in a cluster, I guess you would have all nodes forward to only one of the nodes. This introduces the possibility of circular logic: if the ListenUDP/ListenTCP had problems, that would generate more ERROR logs, which would get sent back to ListenUDP/ListenTCP, which would produce more errors, and so on until the problem was resolved. That is probably rare, though.
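One way to reduce the risk of that feedback loop is to skip events that were logged by the alerting path itself. The Python sketch below shows the idea only. The event layout (`level`, `logger`, `message`) is an assumption about what the forwarded log events might be parsed into, and the logger names listed are assumed to match the processors used in the alerting path.

```python
# Loggers that belong to the alerting path itself (assumed names).
ALERT_PATH_LOGGERS = (
    "org.apache.nifi.processors.standard.ListenUDP",
    "org.apache.nifi.processors.standard.ListenTCP",
    "org.apache.nifi.processors.standard.PutEmail",
)


def should_alert(event, excluded_loggers=ALERT_PATH_LOGGERS):
    """Return True if a log event should trigger an email alert.

    Only ERROR events qualify, and events logged by the alerting path are
    skipped so that its own failures cannot keep re-triggering alerts.
    """
    if event["level"] != "ERROR":
        return False
    return not event["logger"].startswith(excluded_loggers)


# Made-up events standing in for forwarded log lines after parsing.
events = [
    {"level": "ERROR",
     "logger": "org.apache.nifi.processors.hadoop.PutHDFS",
     "message": "Failed to write file"},
    {"level": "ERROR",
     "logger": "org.apache.nifi.processors.standard.ListenUDP",
     "message": "Could not bind to port"},
    {"level": "INFO",
     "logger": "org.apache.nifi.processors.hadoop.PutHDFS",
     "message": "Wrote file"},
]
alerts = [e for e in events if should_alert(e)]
for e in alerts:
    print(e["logger"], "-", e["message"])
```

The trade-off is that failures of the listener or mailer would then have to be noticed some other way, for example through the bulletins in the UI.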