Apache NiFi - how to convert .txt files into Parquet (to save into HDFS) with NiFi?

- Labels: Apache NiFi, HDFS

Created 01-08-2021 09:08 AM


Hi, I can't convert a large number of .txt files into Parquet format to save in HDFS.

How can I do that? @ApacheNifi


Created on 01-08-2021 09:54 AM - edited 01-08-2021 09:55 AM


@Lallagreta The solution you are looking for is to use the NiFi Parquet processors with a Parquet record reader/writer.

Some fun links:

The Parquet processors are part of NiFi 1.10 and up, but you can also install the NARs into older NiFi versions:

If this answer resolves your issue or allows you to move forward, please choose to ACCEPT this solution and close this topic. If you want to discuss this topic further, please comment here or feel free to private message me. If you have new questions related to your use case, please create a separate topic and feel free to tag me in your post.

Thanks,

Steven

Created 01-09-2021 02:03 AM


Hi, thank you so much for your answer.

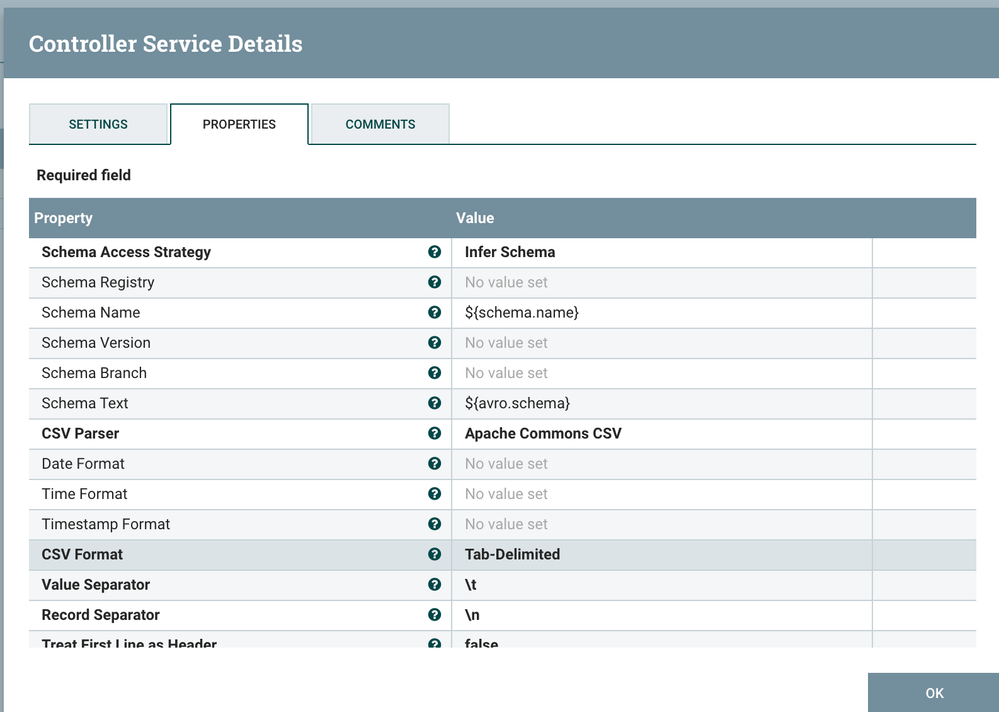

I understand that I have to treat the data as CSV with a tab delimiter rather than a comma.
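As a rough illustration, outside NiFi, of what "CSV with a tab delimiter" means, here is a minimal Python sketch using the standard `csv` module. The sample data and column names are hypothetical and are not taken from the files discussed in this thread.

```python
import csv
import io

# Hypothetical sample: a header line and two rows, with fields separated by tabs.
SAMPLE_TEXT = "id\tname\tcity\n1\tAnna\tRome\n2\tLuca\tMilan\n"


def read_tab_delimited(text):
    """Parse tab-delimited text into a list of dicts keyed by the header row."""
    reader = csv.DictReader(io.StringIO(text), delimiter="\t")
    return list(reader)


rows = read_tab_delimited(SAMPLE_TEXT)
for row in rows:
    print(row)
```

If the same text is parsed with the default comma delimiter, each line comes back as a single field, which is the kind of mismatch a reader configured for "," would run into with tab-separated files.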

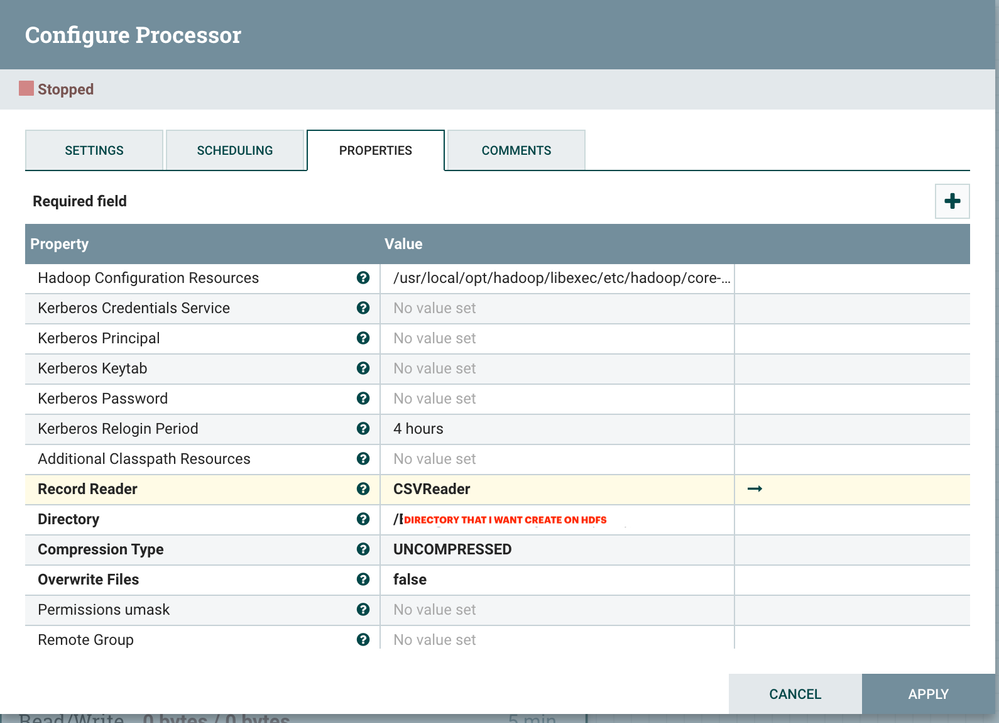

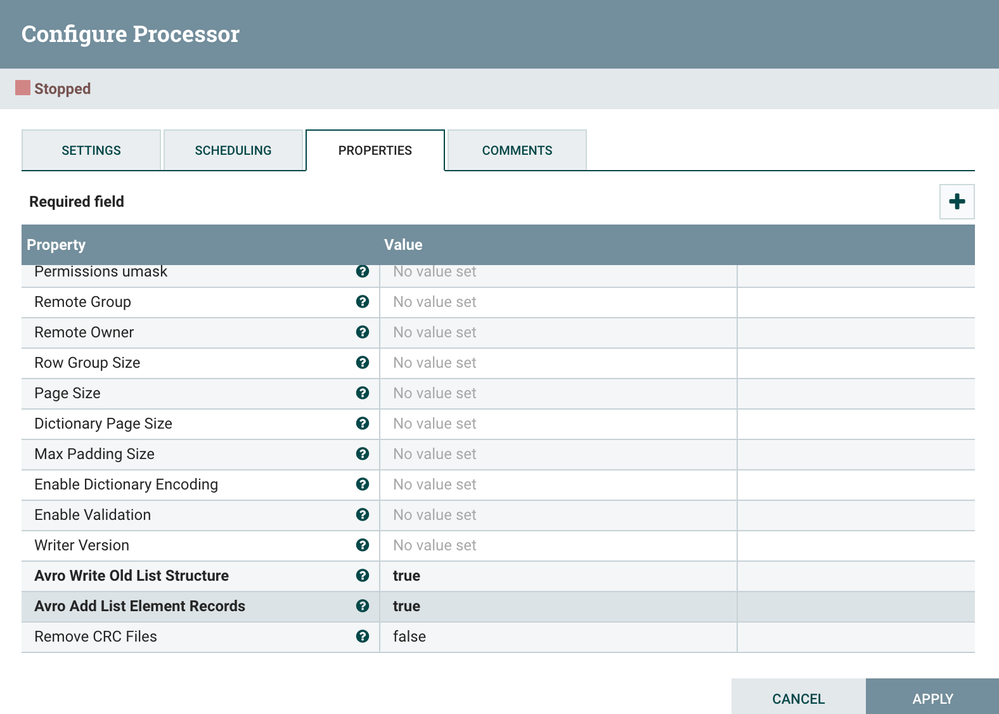

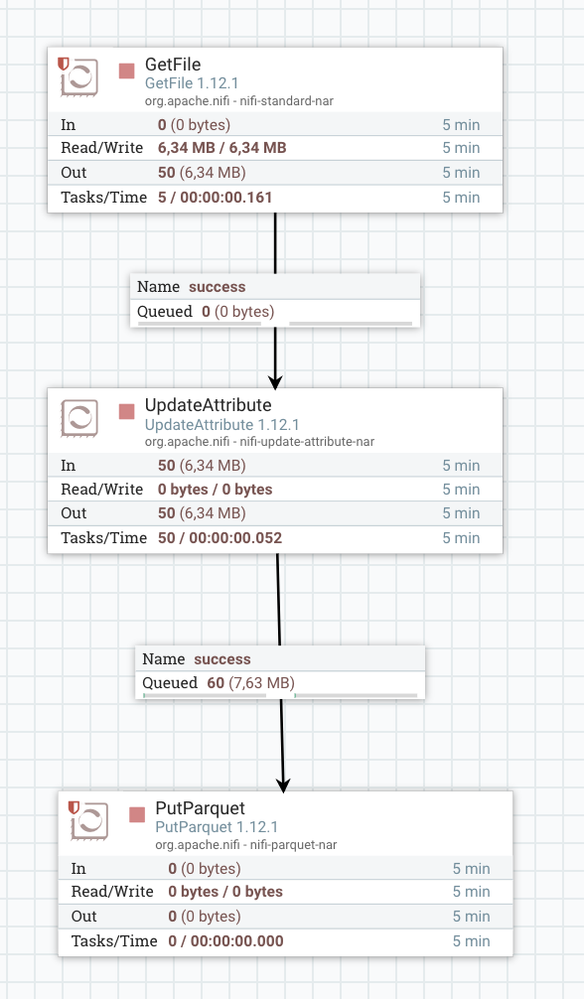

For my project I use this flow:

GetFile -> UpdateAttribute -> PutParquet, but something goes wrong.

The error that appears is: "Unable to create record reader".

This is my processor configuration:

THANK YOU @ApacheNifi

Created on 01-11-2021 05:54 AM - edited 01-11-2021 05:55 AM


You must have the reader incorrectly configured for your CSV schema.
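One way to narrow down a reader/schema mismatch is to check a sample file against the columns you expect before changing the flow. The following is a minimal sketch, assuming tab-delimited input with a header row. `EXPECTED_COLUMNS` and the sample strings are hypothetical, and this check runs outside NiFi; it does not reproduce what the NiFi record reader does internally.

```python
import csv
import io

# Hypothetical column names; replace with the fields your schema declares.
EXPECTED_COLUMNS = ["id", "name", "city"]


def find_schema_problems(text, expected_columns, delimiter="\t"):
    """Return a list of human-readable mismatches between text and the expected columns."""
    reader = csv.reader(io.StringIO(text), delimiter=delimiter)
    header = next(reader, None)
    if header is None:
        return ["file is empty"]
    problems = []
    if header != expected_columns:
        problems.append(f"header {header} does not match expected {expected_columns}")
    # Data lines start at line 2 because line 1 is the header.
    for line_number, fields in enumerate(reader, start=2):
        if len(fields) != len(expected_columns):
            problems.append(
                f"line {line_number}: expected {len(expected_columns)} fields, "
                f"found {len(fields)}"
            )
    return problems


good = "id\tname\tcity\n1\tAnna\tRome\n"
bad = "id,name,city\n1,Anna,Rome\n"  # comma-separated, read with a tab delimiter

print(find_schema_problems(good, EXPECTED_COLUMNS))
print(find_schema_problems(bad, EXPECTED_COLUMNS))
```

A file that passes this check but still fails in the flow would point toward the reader's own settings (delimiter, header handling, schema text) rather than the data.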