Are there any limits to horizontally scaling NiFi?

- Labels: Apache NiFi

Created 10-19-2017 09:40 AM

Is there a maximum number of nodes a NiFi cluster can contain? If so, what are the bottlenecks and where do they occur? Are there figures for maximum data per second or maximum events per second?

Created on 10-19-2017 12:45 PM - edited 08-17-2019 06:46 PM

NiFi does not define an explicit maximum for the number of nodes that can be added to a single NiFi cluster.

Just keep in mind that the more nodes you add, the more request replication must occur between nodes. For example, if a user is connected to node 1 of 100 nodes and makes a change, that change must be replicated to all 99 other nodes. NiFi is configured with a number of node protocol threads (default 10), so NiFi can replicate that change to only 10 nodes at a time. This value should be increased to accommodate larger clusters. Failing to adjust it may result in nodes disconnecting because they did not receive the change request fast enough. In addition, you may need to be more tolerant with your connection and heartbeat timeouts.
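To make the arithmetic above concrete, here is a rough Python sketch that estimates how many sequential batches it takes to push one change to every other node for a given thread count. It is a simplification, not NiFi's actual replication logic: it assumes each thread handles one peer at a time and that every batch finishes before the next starts. The function name `replication_rounds` is made up for illustration. The thread count is, as far as I know, the value set by `nifi.cluster.node.protocol.threads` in nifi.properties, but check the admin guide for your version.

```python
import math


def replication_rounds(total_nodes: int, protocol_threads: int = 10) -> int:
    """Estimate sequential batches needed to replicate one change.

    Assumption (not NiFi's real scheduler): each protocol thread contacts
    one peer at a time, and batches run strictly one after another.
    """
    if total_nodes < 1 or protocol_threads < 1:
        raise ValueError("total_nodes and protocol_threads must be >= 1")
    peers = total_nodes - 1  # the node that received the change is excluded
    return math.ceil(peers / protocol_threads)


if __name__ == "__main__":
    # The 100-node example from the answer, with a few thread counts.
    for threads in (10, 25, 50):
        rounds = replication_rounds(100, threads)
        print(f"{threads} threads -> {rounds} round(s) to reach 99 peers")
```

With the default of 10 threads, the 100-node example needs roughly 10 rounds. Raising the thread count cuts the number of rounds, which is why larger clusters usually want a higher value.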

As for maximum data per second, that is a hard number to pin down, because it depends heavily on a number of factors.

Throughput is mostly determined by your particular dataflow implementation. Since NiFi is just a blank canvas on which you build your dataflow, in most cases your dataflow design defines your performance and throughput. This comes down to which processors you use and how they are configured.

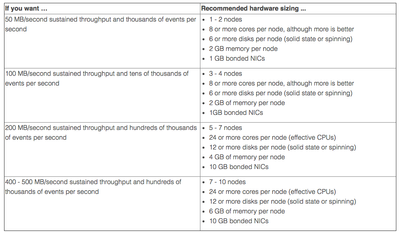

Assuming you have a well-designed and optimized dataflow, you can expect upwards of the figures in the table (image 1):

*** These numbers will still be affected by the use of some processors. CompressContent, for example, can be CPU intensive over longer periods of time when compressing large files, so it can become a bottleneck.

If you found that this answer addressed your question, please take a moment to click "accept".

Thank you,

Matt

Created 06-06-2019 12:53 AM

Hi @Matt Clarke,

Does the table you shared above hold true today as well?

The Apache NiFi Crash Course video at https://www.youtube.com/watch?v=fblkgr1PJ0o says much the same: a cluster should preferably have a single-digit number of nodes, and if you really need more, you can run two separate clusters of 10 nodes each and establish a sync between them.

All I am trying to understand is whether this still holds true with the latest version, and whether 10 nodes are still enough to handle hundreds of thousands of events per second.

Thanks in advance!