Atlas 0.7 and sqoop hook

Labels: Apache Atlas, Apache Sqoop

Created on 06-21-2016 08:07 AM - edited 08-18-2019 06:10 AM

Hi,

I am using HDP-2.4.0.0-169.

I downloaded Atlas 0.7, compiled it, and tested the Hive hook. It works: CREATE TABLE [new table name] AS SELECT * FROM [source table name]; creates a record in Atlas and a data lineage graph as well.

After that I tried the Sqoop hook, following http://atlas.incubator.apache.org/Bridge-Sqoop.html

I ran this command and got the following output:

[root@bigdata21 apache-atlas-0.7-incubating-SNAPSHOT]# sqoop import --connect jdbc:mysql://bigdata21.webmedia.int/test --table sqoop_test --split-by id --hive-import -hive-table sqoop_test28 --username margusja --P
Warning: /usr/hdp/2.4.0.0-169/accumulo does not exist! Accumulo imports will fail.
Please set $ACCUMULO_HOME to the root of your Accumulo installation.
16/06/21 10:58:45 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6.2.4.0.0-169
Enter password:
16/06/21 10:58:47 INFO tool.BaseSqoopTool: Using Hive-specific delimiters for output. You can override
16/06/21 10:58:47 INFO tool.BaseSqoopTool: delimiters with --fields-terminated-by, etc.
16/06/21 10:58:48 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.
16/06/21 10:58:48 INFO tool.CodeGenTool: Beginning code generation
16/06/21 10:58:48 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `sqoop_test` AS t LIMIT 1
16/06/21 10:58:48 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `sqoop_test` AS t LIMIT 1
16/06/21 10:58:48 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /usr/hdp/2.4.0.0-169/hadoop-mapreduce
Note: /tmp/sqoop-root/compile/06fa514f9424df2ae372f4ac8ee13db8/sqoop_test.java uses or overrides a deprecated API.
Note: Recompile with -Xlint:deprecation for details.
16/06/21 10:58:49 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-root/compile/06fa514f9424df2ae372f4ac8ee13db8/sqoop_test.jar
16/06/21 10:58:49 WARN manager.MySQLManager: It looks like you are importing from mysql.
16/06/21 10:58:49 WARN manager.MySQLManager: This transfer can be faster! Use the --direct
16/06/21 10:58:49 WARN manager.MySQLManager: option to exercise a MySQL-specific fast path.
16/06/21 10:58:49 INFO manager.MySQLManager: Setting zero DATETIME behavior to convertToNull (mysql)
16/06/21 10:58:49 INFO mapreduce.ImportJobBase: Beginning import of sqoop_test
SLF4J: Class path contains multiple SLF4J bindings.
SLF4J: Found binding in [jar:file:/usr/hdp/2.4.0.0-169/hadoop/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/usr/hdp/2.4.0.0-169/zookeeper/lib/slf4j-log4j12-1.6.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]
16/06/21 10:58:50 INFO impl.TimelineClientImpl: Timeline service address: http://bigdata21.webmedia.int:8188/ws/v1/timeline/
16/06/21 10:58:50 INFO client.RMProxy: Connecting to ResourceManager at bigdata21.webmedia.int/192.168.81.110:8050
16/06/21 10:58:53 INFO db.DBInputFormat: Using read commited transaction isolation
16/06/21 10:58:53 INFO db.DataDrivenDBInputFormat: BoundingValsQuery: SELECT MIN(`id`), MAX(`id`) FROM `sqoop_test`
16/06/21 10:58:53 INFO mapreduce.JobSubmitter: number of splits:2
16/06/21 10:58:53 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1460979043517_0177
16/06/21 10:58:53 INFO impl.YarnClientImpl: Submitted application application_1460979043517_0177
16/06/21 10:58:53 INFO mapreduce.Job: The url to track the job: http://bigdata21.webmedia.int:8088/proxy/application_1460979043517_0177/
16/06/21 10:58:53 INFO mapreduce.Job: Running job: job_1460979043517_0177
16/06/21 10:58:58 INFO mapreduce.Job: Job job_1460979043517_0177 running in uber mode : false
16/06/21 10:58:58 INFO mapreduce.Job: map 0% reduce 0%
16/06/21 10:59:02 INFO mapreduce.Job: map 50% reduce 0%
16/06/21 10:59:03 INFO mapreduce.Job: map 100% reduce 0%
16/06/21 10:59:03 INFO mapreduce.Job: Job job_1460979043517_0177 completed successfully
16/06/21 10:59:03 INFO mapreduce.Job: Counters: 30
    File System Counters
        FILE: Number of bytes read=0
        FILE: Number of bytes written=310792
        FILE: Number of read operations=0
        FILE: Number of large read operations=0
        FILE: Number of write operations=0
        HDFS: Number of bytes read=197
        HDFS: Number of bytes written=20
        HDFS: Number of read operations=8
        HDFS: Number of large read operations=0
        HDFS: Number of write operations=4
    Job Counters
        Launched map tasks=2
        Other local map tasks=2
        Total time spent by all maps in occupied slots (ms)=4299
        Total time spent by all reduces in occupied slots (ms)=0
        Total time spent by all map tasks (ms)=4299
        Total vcore-seconds taken by all map tasks=4299
        Total megabyte-seconds taken by all map tasks=2751360
    Map-Reduce Framework
        Map input records=2
        Map output records=2
        Input split bytes=197
        Spilled Records=0
        Failed Shuffles=0
        Merged Map outputs=0
        GC time elapsed (ms)=73
        CPU time spent (ms)=1700
        Physical memory (bytes) snapshot=346517504
        Virtual memory (bytes) snapshot=4934926336
        Total committed heap usage (bytes)=166199296
    File Input Format Counters
        Bytes Read=0
    File Output Format Counters
        Bytes Written=20
16/06/21 10:59:03 INFO mapreduce.ImportJobBase: Transferred 20 bytes in 13.5187 seconds (1.4794 bytes/sec)
16/06/21 10:59:03 INFO mapreduce.ImportJobBase: Retrieved 2 records.
16/06/21 10:59:03 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `sqoop_test` AS t LIMIT 1
16/06/21 10:59:03 INFO hive.HiveImport: Loading uploaded data into Hive
Logging initialized using configuration in jar:file:/usr/hdp/2.4.0.0-169/hive/lib/hive-common-1.2.1000.2.4.0.0-169.jar!/hive-log4j.properties
OK
Time taken: 1.574 seconds
Loading data to table default.sqoop_test28
Table default.sqoop_test28 stats: [numFiles=4, totalSize=40]
OK
Time taken: 0.958 seconds

I can see some activity in the Atlas log:

2016-06-21 10:51:29,423 INFO - [qtp1828757853-561 - 7e31fa55-3424-4542-a40a-9380def0759d:] ~ Retrieving entity with guid=174a50b6-4f36-4604-9a5e-af2496ca7078 (GraphBackedMetadataRepository:144)

2016-06-21 10:58:35,398 INFO - [NotificationHookConsumer thread-0:] ~ updating entity {

id : (type: hive_db, id: <unassigned>)

name : default

clusterName : primary

description : Default Hive database

location : hdfs://bigdata21.webmedia.int:8020/apps/hive/warehouse

parameters : {}

owner : public

ownerType : ROLE

qualifiedName : default@primary

} (GraphBackedMetadataRepository:292)

2016-06-21 10:58:35,460 INFO - [NotificationHookConsumer thread-0:] ~ Retrieving entity with guid=2e1a95f0-de1f-47fe-9458-b551b0fa24de (GraphBackedMetadataRepository:144)

2016-06-21 10:58:35,463 INFO - [NotificationHookConsumer thread-0:] ~ Retrieving entity with guid=f569991c-d794-4c87-8cdc-a0f11189efb3 (GraphBackedMetadataRepository:144)

2016-06-21 10:58:35,466 INFO - [NotificationHookConsumer thread-0:] ~ Retrieving entity with guid=3749ed31-471c-420c-bb62-3a1db62b5757 (GraphBackedMetadataRepository:144)

2016-06-21 10:58:35,472 INFO - [NotificationHookConsumer thread-0:] ~ Retrieving entity with guid=7b13e8dc-cde6-4529-a26b-272c8ac44b8c (GraphBackedMetadataRepository:144)

2016-06-21 10:58:35,489 INFO - [NotificationHookConsumer thread-0:] ~ Putting 4 events (HBaseBasedAuditRepository:103)

2016-06-21 10:58:35,501 INFO - [NotificationHookConsumer thread-0:] ~ Retrieving entity with guid=35977939-feb2-4727-ba21-0128bccfbb37 (GraphBackedMetadataRepository:144)

2016-06-21 10:58:35,504 INFO - [NotificationHookConsumer thread-0:] ~ Putting 1 events (HBaseBasedAuditRepository:103)

2016-06-21 10:58:35,510 INFO - [NotificationHookConsumer thread-0:] ~ Putting 0 events (HBaseBasedAuditRepository:103)

2016-06-21 10:58:35,511 INFO - [NotificationHookConsumer thread-0:] ~ Retrieving entity with guid=2e1a95f0-de1f-47fe-9458-b551b0fa24de (GraphBackedMetadataRepository:144)

But there is no data lineage for the Hive tables I created with sqoop import.

I also noticed that the output of my sqoop import command differs from the output shown in the tutorial http://hortonworks.com/hadoop-tutorial/cross-component-lineage-apache-atlas/

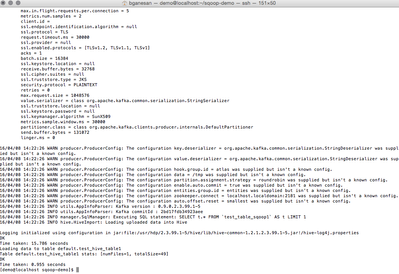

The first part of the tutorial's output log (from max.in.flight.requests.per.connection to linger.ms) is missing from my output, so I suspect that my hook configuration is somehow broken. I cannot see any output lines about Kafka.

The Hive hook works in my environment, so I conclude that Atlas is working and Kafka is receiving messages.

Br, Margus
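Since the question suspects a broken hook configuration, one quick thing to rule out is whether the publisher property from the Atlas Sqoop bridge page is actually present in sqoop-site.xml. The following is a minimal Python sketch of that check. The property name and hook class are the ones given in the bridge documentation as I recall it, so verify them against the page for your Atlas version. The helper names and the sample XML are hypothetical and only there for illustration.

```python
import xml.etree.ElementTree as ET

# Property name and hook class as listed in the Atlas Sqoop bridge docs
# (assumption: unchanged in your Atlas version; check the linked page).
PUBLISH_PROPERTY = "sqoop.job.data.publish.class"
ATLAS_HOOK_CLASS = "org.apache.atlas.sqoop.hook.SqoopHook"


def read_hadoop_properties(xml_text):
    """Return {name: value} for every <property> in a Hadoop-style XML config."""
    root = ET.fromstring(xml_text)
    props = {}
    for prop in root.iter("property"):
        name = prop.findtext("name")
        if name is not None:
            props[name.strip()] = (prop.findtext("value") or "").strip()
    return props


def atlas_hook_configured(xml_text):
    """True if the publisher property points at the Atlas Sqoop hook class."""
    return read_hadoop_properties(xml_text).get(PUBLISH_PROPERTY) == ATLAS_HOOK_CLASS


# Hypothetical sample; in practice pass the contents of your sqoop-site.xml.
SAMPLE = """<configuration>
  <property>
    <name>sqoop.job.data.publish.class</name>
    <value>org.apache.atlas.sqoop.hook.SqoopHook</value>
  </property>
</configuration>"""

if __name__ == "__main__":
    print(atlas_hook_configured(SAMPLE))
```

To run it against a real cluster, read the file from wherever your distribution keeps the Sqoop configuration and pass its text to `atlas_hook_configured`. A correct property alone is not sufficient, because Sqoop itself must also ship the class that invokes the publisher.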

Created 06-21-2016 08:29 AM

OK, maybe this is the reason:

jar tvf sqoop-1.4.6.2.4.0.0-169.jar | grep .class | grep SqoopJobDataPublisher

It finds nothing, so is there no SqoopJobDataPublisher class in this Sqoop 1.4.6 jar?

But in the sandbox there is:

[root@localhost sqoop]# jar tvf sqoop-1.4.6.2.3.99.1-5.jar | grep .class | grep SqoopJobDataPublisher
3462 Tue Jan 01 00:00:00 EST 1980 org/apache/sqoop/SqoopJobDataPublisher$Data.class
644 Tue Jan 01 00:00:00 EST 1980 org/apache/sqoop/SqoopJobDataPublisher.class
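The same jar check can be scripted when several Sqoop builds need comparing. The sketch below is a Python equivalent of the `jar tvf ... | grep` pipeline above, relying only on the fact that a jar is an ordinary zip archive. The function names are hypothetical, and the demo builds an in-memory jar with empty entries rather than reading a real Sqoop jar.

```python
import io
import zipfile


def classes_matching(jar, simple_name):
    """List .class entries in a jar whose file name starts with simple_name.

    `jar` may be a path or a file-like object.
    """
    with zipfile.ZipFile(jar) as archive:
        return sorted(
            entry for entry in archive.namelist()
            if entry.endswith(".class")
            and entry.rsplit("/", 1)[-1].startswith(simple_name)
        )


def build_demo_jar(entries):
    """Create an in-memory jar with empty entries, for demonstration only."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w") as archive:
        for entry in entries:
            archive.writestr(entry, b"")
    buffer.seek(0)
    return buffer


if __name__ == "__main__":
    demo = build_demo_jar([
        "org/apache/sqoop/SqoopJobDataPublisher.class",
        "org/apache/sqoop/SqoopJobDataPublisher$Data.class",
        "org/apache/sqoop/Sqoop.class",
    ])
    # An empty list here would correspond to the empty grep result above.
    print(classes_matching(demo, "SqoopJobDataPublisher"))
```

On a real system, pass the path of each jar (for example the two file names quoted in this thread) to `classes_matching` and compare the results.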

Created 06-21-2016 08:59 AM

I copied sqoop-1.4.6.2.3.99.1-5.jar from the sandbox to my environment (HDP-2.4.0.0-169, where the original jar is sqoop-1.4.6.2.4.0.0-169.jar), and now it is working.

So why is there no SqoopJobDataPublisher class in sqoop-1.4.6.2.4.0.0-169.jar, when sqoop-1.4.6.2.3.99.1-5.jar has it?

Created 04-19-2017 10:21 AM

What is the path of <sqoop lib>? Also, I have configured the Atlas server on my local machine. Do I need to set up Sqoop there as well?