Cannot Insert Data from Text File Format Table to Parquet Format Table, MapRedTask Error

Created on 08-01-2023 11:33 PM - last edited on 08-21-2023 02:46 AM by VidyaSargur

Hi,

After the multi-delimiter issue was solved, I got an error with data insertion.

The data was successfully inserted into the text file format table with the row format SerDe hive.contrib.serde2.MultiDelimitSerDe. Now I need to insert that data into a Parquet format table.

But when I ran the query to insert the data, it failed.

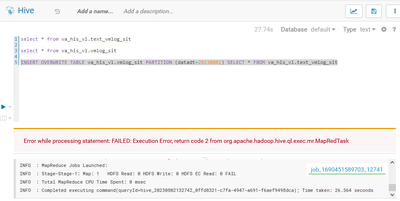

Error message: "[FATAL] 10:25:08 vaproject.xxx tHiveRow_1 Error while processing statement: FAILED: Execution Error, return code 2 from org.apache.hadoop.hive.ql.exec.mr.MapRedTask".

I used this script:

"INSERT OVERWRITE TABLE ParquetTable PARTITION (datadt=dateint) SELECT * FROM TextFileTable"

What is wrong, or what am I missing? I would appreciate your help. Thank you.

Sincerely,

Gideon Maruli

IT Data Management

Created 08-02-2023 01:37 AM

@itdm_bmi This error message is very generic, so we cannot tell from it what caused the job to fail. Could you share the YARN application log for application_1690451589703_12741?

You can collect it with this command:

yarn logs -applicationId application_1690451589703_12741 > /tmp/application_1690451589703_12741.log

Attach the resulting application_1690451589703_12741.log file. If you are not comfortable sharing the log files here, you may file a support case. We'll be happy to assist.
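Once the log has been saved, the root cause is usually in the deepest `Caused by:` lines of the stack trace. Below is a minimal sketch of pulling those out, assuming the log was written to a file as in the command above. The function name `root_causes` is made up for illustration and is not part of any Hadoop tooling.

```shell
#!/bin/sh
# Hypothetical helper: list the distinct "Caused by:" lines in a saved
# YARN application log, with the number of times each one occurs.
root_causes() {
  log_file="$1"
  # grep keeps only the exception-cause lines, sed trims leading whitespace,
  # sort | uniq -c collapses repeats from retried task attempts,
  # and sort -rn puts the most frequent cause first.
  grep 'Caused by:' "$log_file" \
    | sed 's/^[[:space:]]*//' \
    | sort \
    | uniq -c \
    | sort -rn
}

# Usage (assumes the log was collected with `yarn logs` as shown above):
# root_causes /tmp/application_1690451589703_12741.log
```

The same exception tends to repeat once per task attempt, so counting duplicates makes a long log easier to scan.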

Created 08-14-2023 02:39 AM

Sorry it took so long to reply.

I couldn't find that application ID anymore, so I ran a new task, a simple data insertion query, with application ID application_1690451589703_37927.

I think I found the error in the log file. It says:

"Caused by: java.lang.RuntimeException: Map operator initialization failed

at org.apache.hadoop.hive.ql.exec.mr.ExecMapper.configure(ExecMapper.java:137)

... 22 more

Caused by: org.apache.hadoop.hive.ql.metadata.HiveException: java.lang.ClassNotFoundException: Class org.apache.hadoop.hive.contrib.serde2.MultiDelimitSerDe not found

at org.apache.hadoop.hive.ql.exec.MapOperator.getConvertedOI(MapOperator.java:326)

at org.apache.hadoop.hive.ql.exec.MapOperator.setChildren(MapOperator.java:361)

at org.apache.hadoop.hive.ql.exec.mr.ExecMapper.configure(ExecMapper.java:106)

... 22 more

Caused by: java.lang.ClassNotFoundException: Class org.apache.hadoop.hive.contrib.serde2.MultiDelimitSerDe not found

at org.apache.hadoop.conf.Configuration.getClassByName(Configuration.java:2409)

at org.apache.hadoop.hive.ql.plan.PartitionDesc.getDeserializer(PartitionDesc.java:170)

at org.apache.hadoop.hive.ql.exec.MapOperator.getConvertedOI(MapOperator.java:292)

... 24 more

2023-08-14 14:41:08,061 INFO [IPC Server handler 11 on 42850] org.apache.hadoop.mapred.TaskAttemptListenerImpl: Diagnostics report from attempt_1690451589703_37927_m_000000_1: Error: java.lang.RuntimeException: Error in configuring object

at org.apache.hadoop.util.ReflectionUtils.setJobConf(ReflectionUtils.java:113)

at org.apache.hadoop.util.ReflectionUtils.setConf(ReflectionUtils.java:79)

at org.apache.hadoop.util.ReflectionUtils.newInstance(ReflectionUtils.java:137)

at org.apache.hadoop.mapred.MapTask.runOldMapper(MapTask.java:462)

at org.apache.hadoop.mapred.MapTask.run(MapTask.java:349)

at org.apache.hadoop.mapred.YarnChild$2.run(YarnChild.java:174)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1731)

at org.apache.hadoop.mapred.YarnChild.main(YarnChild.java:168)

Caused by: java.lang.reflect.InvocationTargetException

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.util.ReflectionUtils.setJobConf(ReflectionUtils.java:110)

... 9 more

Caused by: java.lang.RuntimeException: Error in configuring object

at org.apache.hadoop.util.ReflectionUtils.setJobConf(ReflectionUtils.java:113)

at org.apache.hadoop.util.ReflectionUtils.setConf(ReflectionUtils.java:79)

at org.apache.hadoop.util.ReflectionUtils.newInstance(ReflectionUtils.java:137)

at org.apache.hadoop.mapred.MapRunner.configure(MapRunner.java:38)

... 14 more

Caused by: java.lang.reflect.InvocationTargetException

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.util.ReflectionUtils.setJobConf(ReflectionUtils.java:110)

... 17 more

Caused by: java.lang.RuntimeException: Map operator initialization failed

at org.apache.hadoop.hive.ql.exec.mr.ExecMapper.configure(ExecMapper.java:137)

... 22 more

Caused by: org.apache.hadoop.hive.ql.metadata.HiveException: java.lang.ClassNotFoundException: Class org.apache.hadoop.hive.contrib.serde2.MultiDelimitSerDe not found

at org.apache.hadoop.hive.ql.exec.MapOperator.getConvertedOI(MapOperator.java:326)

at org.apache.hadoop.hive.ql.exec.MapOperator.setChildren(MapOperator.java:361)

at org.apache.hadoop.hive.ql.exec.mr.ExecMapper.configure(ExecMapper.java:106)

... 22 more

Caused by: java.lang.ClassNotFoundException: Class org.apache.hadoop.hive.contrib.serde2.MultiDelimitSerDe not found

at org.apache.hadoop.conf.Configuration.getClassByName(Configuration.java:2409)

at org.apache.hadoop.hive.ql.plan.PartitionDesc.getDeserializer(PartitionDesc.java:170)

at org.apache.hadoop.hive.ql.exec.MapOperator.getConvertedOI(MapOperator.java:292)

... 24 more"

Sorry, I can't share the log file.

Created 08-21-2023 03:08 AM

@itdm_bmi We are still seeing the error "java.lang.ClassNotFoundException: Class org.apache.hadoop.hive.contrib.serde2.MultiDelimitSerDe".

This means you have not added the "hive-contrib" jar to the Hive classpath, as mentioned here.

If you are on CDP (version > 7.1.5), you do not have to add the hive-contrib jar to the classpath, because this SerDe class is already in Hive's native SerDe list.

You just need to alter the original table and change the SerDe (note: this only applies to CDP versions > 7.1.5):

ALTER TABLE <table name> SET SERDE 'org.apache.hadoop.hive.serde2.MultiDelimitSerDe'

If you are on an older version, consider adding the hive-contrib jar to the Hive classpath by uploading it to the aux jar path location.
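For the CDP case, the change can be sketched as a small shell helper that prints the HiveQL to run. This is an illustration only: the function name `native_serde_sql`, the `TextFileTable` argument (taken from the question), the `/tmp/fix_serde.sql` path, and the `HIVE_JDBC_URL` variable are all placeholders. The `DESCRIBE FORMATTED` statement is added here so you can check the table's current SerDe library before changing it.

```shell
#!/bin/sh
# Hypothetical helper: print the HiveQL that switches a table from the
# contrib SerDe to the built-in one (per the reply, CDP > 7.1.5 only).
native_serde_sql() {
  table="$1"
  # Show the table's current "SerDe Library" before changing anything.
  printf 'DESCRIBE FORMATTED %s;\n' "$table"
  # Point the table at the SerDe class that ships with Hive itself.
  printf "ALTER TABLE %s SET SERDE '%s';\n" "$table" \
    'org.apache.hadoop.hive.serde2.MultiDelimitSerDe'
}

# Usage (the JDBC URL is a placeholder for your HiveServer2 endpoint):
# native_serde_sql TextFileTable > /tmp/fix_serde.sql
# beeline -u "$HIVE_JDBC_URL" -f /tmp/fix_serde.sql
```

Writing the statements to a file first lets you review them before they touch the table.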

Created 08-28-2023 08:51 AM

@itdm_bmi, have the replies helped resolve your issue? If so, please mark the appropriate reply as the solution, as it will make it easier for others to find the answer in the future.

Regards,

Vidya Sargur, Community Manager
