Support Questions

- Cloudera Community

Checkpoint Status on name node

- Labels: HDFS

I keep getting the following health error message:

The filesystem checkpoint is 22 hour(s), 40 minute(s) old. This is 2,267.75% of the configured checkpoint period of 1 hour(s). Critical threshold: 400.00%. 10,775 transactions have occurred since the last filesystem checkpoint. This is 1.08% of the configured checkpoint transaction target of 1,000,000.
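The percentages in this message are plain ratios of the observed values to the configured targets. The sketch below reproduces that arithmetic; it is not Cloudera Manager's actual code, and the helper name `percent_of` is made up for illustration. The 1 hour and 1,000,000 figures match the defaults of the HDFS properties `dfs.namenode.checkpoint.period` and `dfs.namenode.checkpoint.txns`. The message shows only hours and minutes, so the 39 seconds used here are an assumption, chosen because they yield the reported 2,267.75% exactly.

```python
from decimal import Decimal, ROUND_HALF_UP


def percent_of(value: int, target: int) -> Decimal:
    """Return value as a percentage of target, rounded half-up to 2 decimals."""
    pct = Decimal(100) * value / target
    return pct.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


# Configured values quoted in the health message.
checkpoint_period_s = 1 * 3600       # checkpoint period: 1 hour, in seconds
txn_target = 1_000_000               # checkpoint transaction target
critical_threshold = Decimal("400")  # critical threshold, in percent

# Observed values. The seconds component is an assumption (see lead-in).
age_s = 22 * 3600 + 40 * 60 + 39
txns_since_checkpoint = 10_775

age_pct = percent_of(age_s, checkpoint_period_s)
txn_pct = percent_of(txns_since_checkpoint, txn_target)

print(f"checkpoint age: {age_pct}% of the configured period")
print(f"transactions:   {txn_pct}% of the configured target")
print(f"age is critical: {age_pct >= critical_threshold}")
```

Read this way, the alert is driven entirely by the age of the checkpoint: the transaction count is far below its target, so the problem is that checkpoints have stopped happening, not that the cluster is unusually busy.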

What is causing this, and how can I get it to stop?

Logs:

Number of transactions: 8 Total time for transactions(ms): 1 Number of transactions batched in Syncs: 0 Number of syncs: 6 SyncTimes(ms): 132

Number of transactions: 8 Total time for transactions(ms): 1 Number of transactions batched in Syncs: 0 Number of syncs: 7 SyncTimes(ms): 155

Finalizing edits file /dfs/nn/current/edits_inprogress_0000000000000021523 -> /dfs/nn/current/edits_0000000000000021523-0000000000000021530

Starting log segment at 21531

Rescanning after 30000 milliseconds

Scanned 0 directive(s) and 0 block(s) in 0 millisecond(s).

list corrupt file blocks returned: 0

list corrupt file blocks returned: 0

BLOCK* allocateBlock: /tmp/.cloudera_health_monitoring_canary_files/.canary_file_2014_10_09-07_29_47. BP-941526827-192.168.0.1-1412692043930 blk_1073744503_3679{blockUCState=UNDER_CONSTRUCTION, primaryNodeIndex=-1, replicas=[ReplicaUnderConstruction[[DISK]DS-a7270ad4-959d-4756-b731-83457af7c6a3:NORMAL|RBW]]}

BLOCK* addStoredBlock: blockMap updated: 192.168.0.102:50010 is added to blk_1073744503_3679{blockUCState=UNDER_CONSTRUCTION, primaryNodeIndex=-1, replicas=[ReplicaUnderConstruction[[DISK]DS-eaca52a9-2713-4901-b978-e331c17800fc:NORMAL|RBW]]} size 0

DIR* completeFile: /tmp/.cloudera_health_monitoring_canary_files/.canary_file_2014_10_09-07_29_47 is closed by DFSClient_NONMAPREDUCE_592472068_72

BLOCK* addToInvalidates: blk_1073744503_3679 192.168.0.102:50010

BLOCK* BlockManager: ask 192.168.0.102:50010 to delete [blk_1073744503_3679]

Rescanning after 30001 milliseconds

Scanned 0 directive(s) and 0 block(s) in 0 millisecond(s).

Created 10-10-2014 09:46 AM

Does no one know?

Created 11-11-2014 10:36 PM

- Standby Namenode (if HDFS-HA is enabled)

- Secondary Namenode (if HDFS-HA is *not* enabled)

Gautam Gopalakrishnan

Created 07-24-2020 12:14 PM

Hi

We have enabled HA and are getting a similar error, shown below. How can we fix this issue?

The health test result for NAME_NODE_HA_CHECKPOINT_AGE has become concerning: The filesystem checkpoint is 3 minute(s), 36 second(s) old. This is 6.00% of the configured checkpoint period of 1 hour(s). 2,046,490 transactions have occurred since the last filesystem checkpoint. This is 204.65% of the configured checkpoint transaction target of 1,000,000. Warning threshold: 200.00%.

Created 09-15-2020 11:16 AM

Is there any solution for this?

Created 10-10-2014 09:48 AM

This is what it states.

The filesystem checkpoint is 2 day(s), 59 minute(s) old. This is 4,898.53% of the configured checkpoint period of 1 hour(s). Critical threshold: 400.00%. 23,261 transactions have occurred since the last filesystem checkpoint. This is 2.33% of the configured checkpoint transaction target of 1,000,000.

Created 08-04-2015 02:05 AM

It seems like HA settings are enabled.

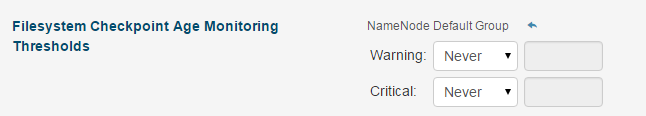

Please check whether HDFS > Configuration > "Filesystem Checkpoint Age Monitoring Thresholds" is specified. If it is, change it to Never, as shown in the screenshot, and save the settings. You will not get the message again.

Created 08-04-2015 02:48 AM

Please never disable that check. Checkpoints are essential to HDFS operation, and you do not want to be in a position where checkpoints are failing for a technical reason and you are never notified of it.

Instead, look at your Standby or Secondary NN to figure out what the error is, and/or seek help with that identified information.
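One way to start that investigation is to filter the role log of the Standby or Secondary NameNode for warnings and errors that mention checkpointing. The following is a minimal sketch, assuming log4j-style lines that carry a level word such as WARN or ERROR; the function name `find_checkpoint_problems` and the sample lines are made up for illustration and are not real log output.

```python
import re
from typing import Iterable, List

# Matches lines whose log level is WARN, ERROR or FATAL.
_LEVEL = re.compile(r"\b(WARN|ERROR|FATAL)\b")


def find_checkpoint_problems(lines: Iterable[str]) -> List[str]:
    """Return WARN/ERROR/FATAL lines that mention checkpointing."""
    return [
        line.rstrip("\n")
        for line in lines
        if _LEVEL.search(line) and "checkpoint" in line.lower()
    ]


# Made-up sample lines for illustration only; real log text will differ.
sample = [
    "2014-10-09 07:29:47,000 INFO example.Class: Starting log segment at 21531\n",
    "2014-10-09 07:30:00,000 ERROR example.Class: Exception in doCheckpoint\n",
    "2014-10-09 07:30:01,000 WARN example.Class: unrelated warning\n",
]

problems = find_checkpoint_problems(sample)
for line in problems:
    print(line)
```

To run it against a real log, pass an open file object instead of `sample`. The log location depends on the deployment, so no path is assumed here. Any stack trace that follows a matching line is usually the most useful thing to include when asking for help.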

Created 12-11-2015 01:20 AM

I found the fix for my issue.

After I formatted the NameNode, checkpointing on the Secondary NameNode (SNN) was not happening because its VERSION file still held the old namespaceID and blockpoolID.

After deleting the files under /data/dfs/snn, I restarted the NameNode and the SNN, and checkpointing then worked fine.
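Before deleting anything, it may help to confirm that the identifiers really differ. The sketch below compares the `namespaceID`, `clusterID` and `blockpoolID` entries of two VERSION files, which are plain `key=value` text. The helper names are made up for illustration, and so are the sample values, except for the block pool ID, which is the one that appears in the NameNode log earlier in this thread. The files are typically found at `current/VERSION` under each role's storage directory, but the exact paths depend on the cluster's configuration.

```python
from typing import Dict, Optional, Tuple

# Identifiers that must agree between the NameNode and the checkpointing node.
ID_KEYS = ("namespaceID", "clusterID", "blockpoolID")


def parse_version(text: str) -> Dict[str, str]:
    """Parse key=value lines of a VERSION file, skipping comments and blanks."""
    props: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        props[key.strip()] = value.strip()
    return props


def mismatched_ids(
    nn_text: str, snn_text: str
) -> Dict[str, Tuple[Optional[str], Optional[str]]]:
    """Return {key: (namenode_value, snn_value)} for every ID that differs."""
    nn, snn = parse_version(nn_text), parse_version(snn_text)
    return {k: (nn.get(k), snn.get(k)) for k in ID_KEYS if nn.get(k) != snn.get(k)}


# Illustrative contents; all values except the first blockpoolID are invented.
nn_version = """#example NameNode VERSION
namespaceID=111111111
clusterID=CID-example
blockpoolID=BP-941526827-192.168.0.1-1412692043930
"""
snn_version = """#example stale Secondary NameNode VERSION
namespaceID=222222222
clusterID=CID-example
blockpoolID=BP-000000000-192.168.0.1-0000000000000
"""

diff = mismatched_ids(nn_version, snn_version)
for key, (nn_value, snn_value) in diff.items():
    print(f"{key}: NameNode={nn_value} SNN={snn_value}")
```

An empty result would suggest the checkpoint failure has some other cause, in which case clearing the SNN directory is unlikely to help.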

Created 12-28-2016 05:40 PM

What are the files under snn?

: )