Data Lake Architecture

- Labels: Apache HBase, Apache Hive

Created on 03-26-2017 11:08 AM - edited 09-16-2022 04:20 AM

Hi all,

Can anyone advise me on how to organize data in my data lake? For instance, should I split the data into categories: archived data that probably won't be used but must be kept, another division for raw data, and a last one for transformed data?

I'm using HBase and Hive for now.

Thanks

Created 03-26-2017 05:53 PM

Let me put it in simple words.

Basically, a data lake needs 4 layers.

Landing Zone: It contains all the raw data from all the different source systems. No cleansing or business logic is applied in this layer; it is just a one-to-one copy from the outside world into Hadoop. Different applications can consume this raw data for analysis or predictive analysis, because the raw data itself can yield many insights.

Cleansing Zone: Here the data is properly arranged, for example by defining proper data types for the schema and doing cleansing and trimming work. No data model is needed up to this layer. If some data has to be cleansed regularly and consumed by applications, this layer serves that purpose.
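The trimming and typing work described for the cleansing zone could look roughly like the sketch below. It is only an illustration in plain Python: the column names, the `SCHEMA` mapping, and the `cleanse_record` helper are all hypothetical, and in practice this step would more likely run as a Hive or Spark job over the landing-zone tables.

```python
from datetime import datetime
from typing import Callable, Dict, Optional

# Hypothetical target schema: column name -> parser applied to the trimmed string.
SCHEMA: Dict[str, Callable[[str], object]] = {
    "customer_id": int,
    "name": str,
    "signup_date": lambda s: datetime.strptime(s, "%Y-%m-%d").date(),
}


def cleanse_record(raw: Dict[str, Optional[str]]) -> Dict[str, Optional[object]]:
    """Trim whitespace and cast each field to the type declared in SCHEMA.

    Missing, empty, or unparseable values become None (an assumption made
    for this sketch; a real pipeline might route bad rows elsewhere).
    """
    clean: Dict[str, Optional[object]] = {}
    for column, parse in SCHEMA.items():
        value = (raw.get(column) or "").strip()
        if not value:
            clean[column] = None
            continue
        try:
            clean[column] = parse(value)
        except ValueError:
            clean[column] = None
    return clean


if __name__ == "__main__":
    landing_row = {"customer_id": " 42 ", "name": "  Ada ", "signup_date": "2017-03-26 "}
    print(cleanse_record(landing_row))
```

No data model is involved here: each record is cleaned on its own, which matches the point that modelling only starts in the next layer.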

Transformed Zone: As the name suggests, data modelling and proper schemas are applied to build this layer. In short, reports that have to run on a daily basis, or conformed dimensions that serve a specific purpose, can be built in this layer. Data marts that serve only one or two particular needs can also be built here. For example, conformed dimensions such as demographic, geography, and date/time can be built in this layer; they can satisfy your reporting needs and also act as a source for machine learning algorithms.
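One way to keep the three zones above apart is to give each its own directory tree and Hive database. The sketch below shows such a naming convention. Everything in it is an assumption for illustration: the `/data` base directory, the `lake_` database prefix, and the `load_date=` partition folder are not prescribed by Hadoop or Hive.

```python
from datetime import date
from enum import Enum


class Zone(Enum):
    LANDING = "landing"
    CLEANSING = "cleansing"
    TRANSFORMED = "transformed"


BASE_DIR = "/data"  # hypothetical root directory for the data lake


def zone_path(zone: Zone, source: str, dataset: str, load_date: date) -> str:
    """Build a directory path for one daily load of a dataset in a zone."""
    return f"{BASE_DIR}/{zone.value}/{source}/{dataset}/load_date={load_date.isoformat()}"


def hive_database(zone: Zone) -> str:
    """Return the (hypothetical) Hive database name used for a zone."""
    return f"lake_{zone.value}"


if __name__ == "__main__":
    day = date(2017, 3, 26)
    for zone in Zone:
        print(hive_database(zone), zone_path(zone, "crm", "customers", day))
```

With a convention like this, the same dataset keeps the same relative path as it moves from landing to cleansing to transformed, so it stays easy to trace a table back to its raw source.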

https://hortonworks.com/blog/heterogeneous-storages-hdfs/

Check the link above for archival storage. Archival can be built into the landing zone itself: once you have decided to move data to the archive, you compress it and push it to the archive layer. The link explains how to do this so that resources are properly used and allocated.
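The compress-then-move step can be sketched as follows. This is a minimal illustration on the local filesystem with Python's standard `gzip` module; the `archive_file` helper and the directory names are hypothetical, and on a cluster the same steps would go through HDFS and the archival storage described in the linked post rather than local paths.

```python
import gzip
import shutil
import tempfile
from pathlib import Path


def archive_file(source: Path, archive_dir: Path) -> Path:
    """Gzip `source` into `archive_dir`, then delete the original file."""
    archive_dir.mkdir(parents=True, exist_ok=True)
    target = archive_dir / (source.name + ".gz")
    # Stream the copy so large files are not loaded into memory at once.
    with source.open("rb") as src, gzip.open(target, "wb") as dst:
        shutil.copyfileobj(src, dst)
    # Remove the original only after the compressed copy has been written.
    source.unlink()
    return target


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        landing = Path(tmp) / "landing"
        landing.mkdir()
        raw_file = landing / "customers.csv"
        raw_file.write_text("id,name\n1,Ada\n")
        print(archive_file(raw_file, Path(tmp) / "archive"))
```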

If needed, check this book from O'Reilly. It covers a wide range of use cases for data lake architecture.

http://www.oreilly.com/data/free/architecting-data-lakes.csp

Created on 03-26-2017 04:38 PM - edited 08-18-2019 03:22 AM

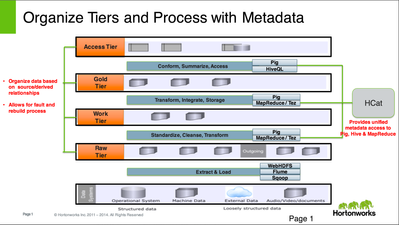

Typically, for data warehousing, we recommend logically organizing your data into tiers for processing.

The physical organization is a little different for everyone, but here is an example for Hive:

Created 03-27-2017 07:37 AM

Thanks, this will help.
