Support Questions

- Cloudera Community

- Support

- Support Questions

- Re: Exception while invoking getFileInfo of class ...

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Exception while invoking getFileInfo of class ClientNamenodeProtocolTranslatorPB

- Labels:

-

Cloudera Manager

-

HDFS

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I had 6 Zookeeper nodes and CM warned me that I should have 5 at most.

I stopped the entire cluster and deleted the zookeeper role from one of the 6 nodes (a "follower" was deleted).

Upon restarting the cluster, everything seemed just fine but now my attempts to used hdfs result in this error:

ubuntu@ip-10-0-0-157:~$ hdfs dfs -ls / 17/11/14 19:10:47 WARN retry.RetryInvocationHandler: Exception while invoking getFileInfo of class ClientNamenodeProtocolTranslatorPB after 1 fail over attempts. Trying to fail over after sleeping for 787ms. 17/11/14 19:10:48 WARN retry.RetryInvocationHandler: Exception while invoking getFileInfo of class ClientNamenodeProtocolTranslatorPB after 2 fail over attempts. Trying to fail over after sleeping for 1030ms. 17/11/14 19:10:49 WARN retry.RetryInvocationHandler: Exception while invoking getFileInfo of class ClientNamenodeProtocolTranslatorPB after 3 fail over attempts. Trying to fail over after sleeping for 2930ms.

Importantly, this error only affects the default usage above. If, instead, I specify the namenode then everything works normally.

ubuntu@ip-10-0-0-156:~$ hdfs dfs -ls hdfs://10.0.0.246:8020/ Found 3 items drwxr-xr-x - hdfs supergroup 0 2017-11-11 22:15 hdfs://10.0.0.246:8020/system drwxrwxrwt - hdfs supergroup 0 2016-02-07 15:08 hdfs://10.0.0.246:8020/tmp drwxr-xr-x - hdfs supergroup 0 2016-10-21 18:01 hdfs://10.0.0.246:8020/user

Note: I still have the old zookeeper node and can re-add that role to it if that might help.

Created 12-29-2017 09:30 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@epowell, Yes, you are correct. Client Configurations are managed separately from the configurations that servers use when CDH is managed by Cloudera Manager. Deploy Client Configuration for your cluster to make sure the /etc/hadoop/conf files contain the latest configuration items. Once that is done, you should be able to run commands just fine.

Ben

Created 11-14-2017 01:52 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The RetryInvocationHandler warning should be unrelated to the zookeeper issue though. Instead, it's probably that the first namenode is the standby NN. If you manually fail over, I think you wouldn't see the warning again.

You might also want to enable command line debug logs with the following command:

export HADOOP_ROOT_LOGGER=DEBUG,console

Created 11-14-2017 02:02 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you very much for your reply, @weichiu.

Yes, you are correct about having an odd number of ZK nodes. CM also warned me about this, which is part of the reason I wanted to reduce it down to 5 ZK nodes.

Regarding the namenode in standby, that is a very relevant topic for me because I just finished a difficult issue where one of my namenodes failed and I needed to switch to the standby node. You can see the forum post here. Everything was working good after this but I guess it is possible that I did something wrong.

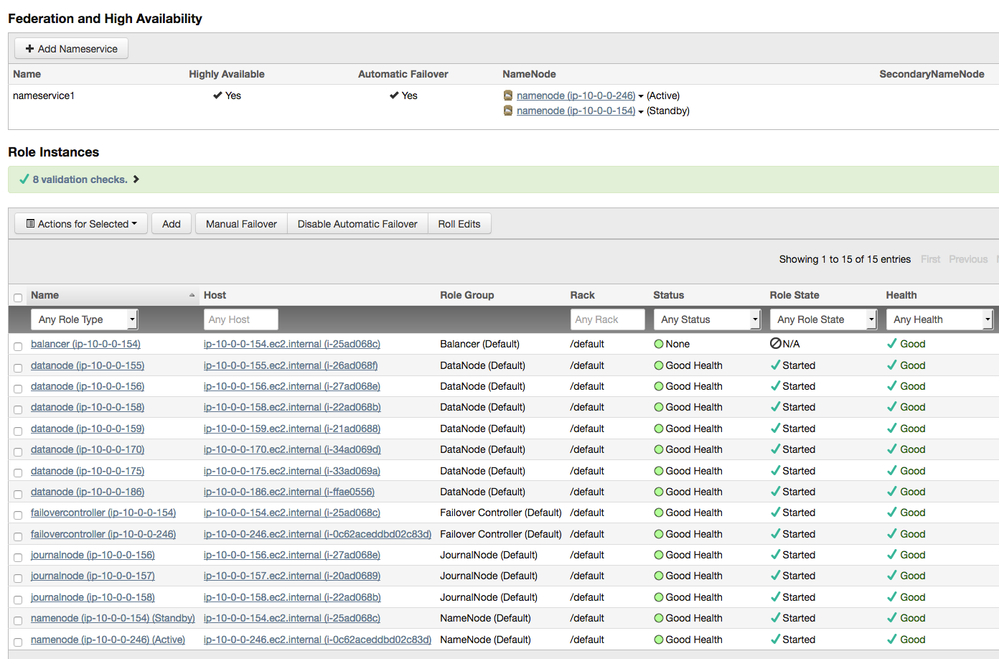

I have attached a screenshot of CM showing the active and standby namenodes. Are you suggesting that I should do a manual failover?

Created 11-14-2017 02:07 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Below is the same command after enabling the logging as you suggested.

It appears to be trying to contact 10.0.0.157, which was the active namenode prior to the issues which I mentioned in the linked post above.

The screenshot shows that 10.0.0.157 is no longer a namenode. This is surely part of the problem but I do not know how to resolve this.

ubuntu@ip-10-0-0-154:/backup/20171108$ hdfs dfs -ls /

17/11/14 22:03:06 DEBUG lib.MutableMetricsFactory: field org.apache.hadoop.metrics2.lib.MutableRate org.apache.hadoop.security.UserGroupInformation$UgiMetrics.loginSuccess with annotation @org.apache.hadoop.metrics2.annotation.Metric(valueName=Time, about=, value=[Rate of

successful kerberos logins and latency (milliseconds)], always=false, type=DEFAULT, sampleName=Ops)

17/11/14 22:03:06 DEBUG lib.MutableMetricsFactory: field org.apache.hadoop.metrics2.lib.MutableRate org.apache.hadoop.security.UserGroupInformation$UgiMetrics.loginFailure with annotation @org.apache.hadoop.metrics2.annotation.Metric(valueName=Time, about=, value=[Rate of

failed kerberos logins and latency (milliseconds)], always=false, type=DEFAULT, sampleName=Ops)

17/11/14 22:03:06 DEBUG lib.MutableMetricsFactory: field org.apache.hadoop.metrics2.lib.MutableRate org.apache.hadoop.security.UserGroupInformation$UgiMetrics.getGroups with annotation @org.apache.hadoop.metrics2.annotation.Metric(valueName=Time, about=, value=[GetGroups]

, always=false, type=DEFAULT, sampleName=Ops)

17/11/14 22:03:06 DEBUG impl.MetricsSystemImpl: UgiMetrics, User and group related metrics

17/11/14 22:03:06 DEBUG util.KerberosName: Kerberos krb5 configuration not found, setting default realm to empty

17/11/14 22:03:06 DEBUG security.Groups: Creating new Groups object

17/11/14 22:03:06 DEBUG util.NativeCodeLoader: Trying to load the custom-built native-hadoop library...

17/11/14 22:03:06 DEBUG util.NativeCodeLoader: Loaded the native-hadoop library

17/11/14 22:03:06 DEBUG security.JniBasedUnixGroupsMapping: Using JniBasedUnixGroupsMapping for Group resolution

17/11/14 22:03:06 DEBUG security.JniBasedUnixGroupsMappingWithFallback: Group mapping impl=org.apache.hadoop.security.JniBasedUnixGroupsMapping

17/11/14 22:03:07 DEBUG security.Groups: Group mapping impl=org.apache.hadoop.security.JniBasedUnixGroupsMappingWithFallback; cacheTimeout=300000; warningDeltaMs=5000

17/11/14 22:03:07 DEBUG security.UserGroupInformation: hadoop login

17/11/14 22:03:07 DEBUG security.UserGroupInformation: hadoop login commit

17/11/14 22:03:07 DEBUG security.UserGroupInformation: using local user:UnixPrincipal: ubuntu

17/11/14 22:03:07 DEBUG security.UserGroupInformation: UGI loginUser:ubuntu (auth:SIMPLE)

17/11/14 22:03:07 DEBUG hdfs.HAUtil: No HA service delegation token found for logical URI hdfs://nameservice1

17/11/14 22:03:07 DEBUG hdfs.NameNodeProxies: multipleLinearRandomRetry = null

17/11/14 22:03:07 DEBUG ipc.Server: rpcKind=RPC_PROTOCOL_BUFFER, rpcRequestWrapperClass=class org.apache.hadoop.ipc.ProtobufRpcEngine$RpcRequestWritable, rpcInvoker=org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker@75be16f5

17/11/14 22:03:07 DEBUG ipc.Client: The ping interval is 60000 ms.

17/11/14 22:03:07 DEBUG ipc.Client: Use SIMPLE authentication for protocol ClientNamenodeProtocolPB

17/11/14 22:03:07 DEBUG ipc.Client: Connecting to ip-10-0-0-157.ec2.internal/10.0.0.157:8020

17/11/14 22:03:07 DEBUG ipc.Client: closing ipc connection to ip-10-0-0-157.ec2.internal/10.0.0.157:8020: Connection refused

java.net.ConnectException: Connection refused

at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method)

at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:567)

at org.apache.hadoop.net.SocketIOWithTimeout.connect(SocketIOWithTimeout.java:207)

at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:528)

at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:492)

at org.apache.hadoop.ipc.Client$Connection.setupConnection(Client.java:510)

at org.apache.hadoop.ipc.Client$Connection.setupIOstreams(Client.java:604)

at org.apache.hadoop.ipc.Client$Connection.access$2100(Client.java:252)

at org.apache.hadoop.ipc.Client.getConnection(Client.java:1291)

at org.apache.hadoop.ipc.Client.call(Client.java:1209)

Created 11-14-2017 02:15 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

From the stacktrace it looks like the NameNode 10.0.0.157 is done. Would you please check?

Created 11-14-2017 02:23 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

10.0.0.157 is definitely done. The namenode role was removed from that host in the course of resolving this issue. In its place, I added a new host to the cluster and made it the namenode (10.0.0.246).

Here is the screenshot again. Let me know if you still cannot see it. It is visible from this link as well.

Created 11-15-2017 09:59 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I have checked the configuration in hdfs-site.xml. It appears that the old namenodes are still being listed.

The commands below show the relevant settings in the hdfs-site.xml file found in the most recent NAMENODE directory on one of the hosts.

It shows that 10.0.0.154 and 10.0.0.157 are still listed as namenodes. However, according to CM, 10.0.0.246 should have replaced 10.0.0.157.

How should I address this issue?

root@ip-10-0-0-157:/home/ubuntu# cloudera_client_config() {

> local recent_config=$(ls -d1t /var/run/cloudera-scm-agent/process/*NAMENODE | head -1)

> grep -A2 -B1 "dfs.namenode.rpc-address" $nndir/hdfs-site.xml

> }

root@ip-10-0-0-157:/home/ubuntu# cloudera_client_config

<property>

<name>dfs.namenode.rpc-address.nameservice1.namenode451</name>

<value>ip-10-0-0-157.ec2.internal:8020</value>

</property>

--

<property>

<name>dfs.namenode.rpc-address.nameservice1.namenode374</name>

<value>ip-10-0-0-154.ec2.internal:8020</value>

</property>

Created 11-15-2017 12:33 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Since it is starting to look like a problem with the configuration files on the host, I am wondering if 'Deploy Client Configuration' could be useful. (That is the option in CM under the 'Actions' dropdown.)

Also in that dropdown is the option for 'View Client Configuration URLs'. Selecting the hdfs from the following menu showed this hdfs-site.xml (below). It contains the correct ip addresses for the current namenodes.

Is 'Deploy Client Configuration' the right solution to my issue?

<property>

<name>dfs.namenode.rpc-address.nameservice1.namenode472</name>

<value>ip-10-0-0-246.ec2.internal:8020</value>

</property>

<property>

<name>dfs.namenode.servicerpc-address.nameservice1.namenode472</name>

<value>ip-10-0-0-246.ec2.internal:8022</value>

</property>

<property>

<name>dfs.namenode.http-address.nameservice1.namenode472</name>

<value>ip-10-0-0-246.ec2.internal:50070</value>

</property>

<property>

<name>dfs.namenode.https-address.nameservice1.namenode472</name>

<value>ip-10-0-0-246.ec2.internal:50470</value>

</property>

<property>

<name>dfs.namenode.rpc-address.nameservice1.namenode374</name>

<value>ip-10-0-0-154.ec2.internal:8020</value>

</property>

<property>

Created 12-29-2017 09:30 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@epowell, Yes, you are correct. Client Configurations are managed separately from the configurations that servers use when CDH is managed by Cloudera Manager. Deploy Client Configuration for your cluster to make sure the /etc/hadoop/conf files contain the latest configuration items. Once that is done, you should be able to run commands just fine.

Ben

Created 12-29-2017 09:32 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you, Ben. Incidentally, I finally worked up the courage to click that Deploy Client Configuration button a couple days ago.

It worked perfectly.