Cloudera Community: Support Questions

File too large Exception

Labels: Apache Hadoop

Created on 11-16-2016 07:11 AM - edited 08-19-2019 04:54 AM

Hi

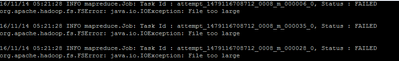

I am trying to process Avro records with MapReduce, where the map input key is an Avro record:

```java
public void map(AvroKey<GenericData.Record> key, NullWritable value, Context context)
```

The job fails when the number of columns processed in each record exceeds a certain value. For example, if each row has more than 100 fields, the job fails. I tried increasing the map memory and Java heap space in the cluster, but it didn't help.

Thanks in advance

Aparna

Created 01-05-2017 03:07 PM

Hi

I was able to resolve the issue. On one of the nodes, disk utilization in the local directory (where the log and .out files are created) was above the yarn.nodemanager.disk-health-checker.max-disk-utilization-per-disk-percentage threshold. I freed up some space and also raised that threshold to a much higher value.
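The resolution above comes down to the NodeManager's per-disk health check: a local or log directory whose disk is fuller than the configured percentage is marked bad. A rough way to see which disks are near that limit is the Java sketch below. It assumes the check compares used space (total minus usable) as a percentage against the threshold; `DiskUtilizationCheck` and its methods are hypothetical helpers, not Hadoop APIs, and the 90.0 threshold and `/tmp` default path are placeholders to replace with your cluster's `yarn.nodemanager.disk-health-checker.max-disk-utilization-per-disk-percentage` value and its `yarn.nodemanager.local-dirs` / `yarn.nodemanager.log-dirs` paths.

```java
import java.io.File;

/**
 * Hypothetical helper (not part of Hadoop) that approximates the idea behind
 * the NodeManager's per-disk utilization check: a directory whose disk is
 * fuller than the configured percentage is treated as "bad".
 */
public class DiskUtilizationCheck {

    /** Percentage of the disk in use, computed from total and usable bytes. */
    public static double utilizationPercent(long totalBytes, long usableBytes) {
        if (totalBytes <= 0) {
            // getTotalSpace() returns 0 for a path that names no partition;
            // assumption: treat that as a fully used (bad) disk.
            return 100.0;
        }
        return 100.0 * (totalBytes - usableBytes) / totalBytes;
    }

    /** True if utilization is strictly above the given threshold percentage. */
    public static boolean exceedsThreshold(long totalBytes, long usableBytes, double maxPercent) {
        return utilizationPercent(totalBytes, usableBytes) > maxPercent;
    }

    public static void main(String[] args) {
        // Placeholder threshold: substitute your cluster's configured value.
        double maxPercent = 90.0;
        // Placeholder default path: pass your local-dirs/log-dirs as arguments.
        String[] dirs = args.length > 0 ? args : new String[] {"/tmp"};
        for (String path : dirs) {
            File dir = new File(path);
            long total = dir.getTotalSpace();
            long usable = dir.getUsableSpace();
            double used = utilizationPercent(total, usable);
            String status = exceedsThreshold(total, usable, maxPercent)
                    ? "BAD (over threshold)"
                    : "OK";
            System.out.printf("%s: %.1f%% used -> %s%n", path, used, status);
        }
    }
}
```

Run it on each NodeManager host with the directory paths as arguments, e.g. `java DiskUtilizationCheck /data1/yarn/local /data1/yarn/log` (hypothetical paths), since in this thread the problem was on only one node.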

Thanks

Aparna

Created 11-17-2016 01:11 PM

Hi Aparna,

Please go through this link; I hope it helps.

http://stackoverflow.com/questions/25242287/filenotfoundexception-file-too-large

Created 11-17-2016 02:28 PM

Have you tried this in Spark or NiFi?

How much memory is configured for your app?

How much memory is configured in YARN for your job's resources?

Can you post additional logs, code, or submit details?

Why is the key an Avro record and not the value?

You should make sure you have enough space in HDFS and also in the regular file system, since part of the reduce stage's data is written to the regular (local) disk.

Can you post the df output for both HDFS and the regular file system?

Created 11-23-2016 06:14 AM

Hi

Please see my inline comments.

Have you tried this in Spark or NiFi?

No.

How much memory is configured in YARN for your job's resources?

Memory allocated for YARN containers on each node: 200 GB

Can you post additional logs, code, or submit details?

I did not get any extra information other than the FSError.

Why is the key an Avro record and not the value?

I am using AvroKeyInputFormat, which delivers each record as the key (with a NullWritable value).

You should make sure you have enough space in HDFS and also in the regular file system, since part of the reduce stage's data is written to the regular (local) disk.

I have enough space left. More precisely:

- HDFS: only 3% is used
- Local FS: only 15% is used

ulimit settings:

```
core file size (blocks, -c) 0
data seg size (kbytes, -d) unlimited
scheduling priority (-e) 0
file size (blocks, -f) unlimited
pending signals (-i) 1032250
max locked memory (kbytes, -l) 64
max memory size (kbytes, -m) unlimited
open files (-n) 1024
pipe size (512 bytes, -p) 8
POSIX message queues (bytes, -q) 819200
real-time priority (-r) 0
stack size (kbytes, -s) 10240
cpu time (seconds, -t) unlimited
max user processes (-u) 1024
virtual memory (kbytes, -v) unlimited
file locks (-x) unlimited
```

Thanks