File transfer from FTP server to local server using NiFi

Labels: Apache NiFi

Created 08-09-2018 07:36 AM

1. I need to copy files from a remote FTP server to my local server. The FTP server holds many different files, so I need to select which ones to copy based on a combination of fileName and the current date.

2. I also need to store the metadata of these input files (fileName, createdDate, fileType, fileSize). This metadata has to be inserted into a database table, one row for each input file copied from the FTP server to the local server.

3. Finally, I need to schedule this entire process to run on the 1st of every month.

Created on 08-09-2018 02:33 PM - edited 08-17-2019 08:51 PM

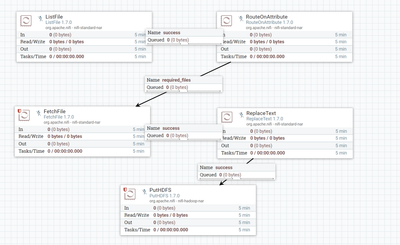

Flow:

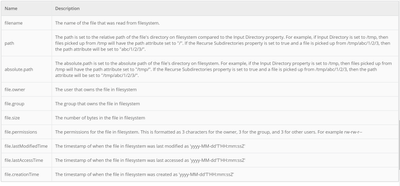

1. You can list the files from the directory on the first day of every month and check the filename attribute with a RouteOnAttribute processor so that only the current date's files are passed on.

In the RouteOnAttribute processor you can use any of the attributes shown above; with the NiFi Expression Language you can filter out everything except the required files.

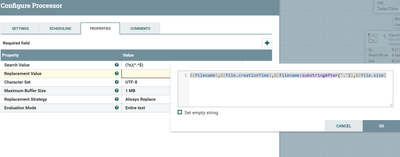
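As a rough illustration of the filtering rule in step 1, the Python sketch below applies the same kind of check RouteOnAttribute would make. It assumes a hypothetical naming convention `<prefix><yyyyMMdd>.<ext>` (for example `sales_20180901.csv`); in NiFi itself a comparable rule might be `${filename:startsWith('sales_'):and(${filename:contains(${now():format('yyyyMMdd')})})}`, adjusted to your real file names.

```python
from datetime import date


def is_current_date_file(filename: str, prefix: str, today: date) -> bool:
    """Keep a file only if it has the expected prefix and today's yyyyMMdd stamp.

    The prefix and date-in-name convention are hypothetical examples.
    """
    stamp = today.strftime("%Y%m%d")  # same pattern as NiFi's format('yyyyMMdd')
    return filename.startswith(prefix) and stamp in filename


def route_on_attribute(filenames, prefix, today):
    """Split listed file names into (matched, unmatched), like RouteOnAttribute."""
    matched = [f for f in filenames if is_current_date_file(f, prefix, today)]
    unmatched = [f for f in filenames if not is_current_date_file(f, prefix, today)]
    return matched, unmatched


listing = ["sales_20180901.csv", "sales_20180801.csv", "hr_20180901.json"]
matched, unmatched = route_on_attribute(listing, "sales_", date(2018, 9, 1))
print(matched)    # ['sales_20180901.csv']
print(unmatched)  # ['sales_20180801.csv', 'hr_20180901.json']
```

In NiFi, `now()` is evaluated when the processor runs, so a flow that fires on the 1st would keep only files stamped with that day's date.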

2. You can use a ReplaceText processor to replace the FlowFile content with the required metadata and store it in HDFS/Hive, etc.

I'm assuming the file types are csv, avro, or json, so I used the expression `${filename:substringAfter('.')}` for the file type.

Replacement Value:

`${filename},${file.creationTime},${filename:substringAfter('.')},${file.size}`

To store the data in a table, you can use PutHDFS and create a table on top of this directory.
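To check what the Replacement Value above produces, here is a small Python sketch that mimics it. The attribute values are made up for illustration, and it assumes the listing processor actually writes `file.creationTime` and `file.size` (check the attributes on your own FlowFiles; an FTP listing may expose a last-modified time rather than a creation time). It also mimics, to my understanding, how `substringAfter` (text after the first `.`) differs from `substringAfterLast` (text after the last `.`), which matters for names like `sales.2018.csv`.

```python
def substring_after(subject: str, delim: str) -> str:
    """Mimics NiFi's substringAfter: text after the FIRST delim, or the whole subject if absent."""
    _, sep, tail = subject.partition(delim)
    return tail if sep else subject


def substring_after_last(subject: str, delim: str) -> str:
    """Mimics NiFi's substringAfterLast: text after the LAST delim, or the whole subject if absent."""
    _, sep, tail = subject.rpartition(delim)
    return tail if sep else subject


def metadata_line(attrs: dict) -> str:
    """Build the same comma-separated line as the ReplaceText Replacement Value."""
    return ",".join([
        attrs["filename"],
        attrs["file.creationTime"],
        substring_after(attrs["filename"], "."),  # file "type" taken from the name
        attrs["file.size"],
    ])


# Example attribute values (made up for illustration).
attrs = {
    "filename": "sales_20180901.csv",
    "file.creationTime": "2018-09-01T00:05:00+0000",
    "file.size": "1024",
}
print(metadata_line(attrs))  # sales_20180901.csv,2018-09-01T00:05:00+0000,csv,1024
print(substring_after("sales.2018.csv", "."))       # 2018.csv
print(substring_after_last("sales.2018.csv", "."))  # csv
```

Because the line is plain comma-joined text, a filename containing a comma would shift the columns; AttributesToJSON avoids that if it becomes a problem.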

3. You can use a CRON driven schedule to run the processor on the first day of the month, with Execution set to Primary node only.

If this answer helped resolve your issue, click the Accept button below to accept it. That helps other community users find solutions to these kinds of issues quickly.

Created 08-09-2018 07:41 AM

Need your help with this.

Created 08-09-2018 02:06 PM

1. The key term here is "copy". My understanding is that the files consumed from the FTP server will not be deleted, so on the first of every month you would list the exact same files again. (I'm going to assume these files may be updated over the course of the month.)

Your best bet would be to use a combination of the ListFTP and FetchFTP processors. ListFTP can be configured with a file filter regex so that only files matching that filter are listed. The output of this processor is a 0-byte FlowFile for each file listed. At this point the actual content of these files has not been retrieved yet; however, each 0-byte FlowFile carries numerous attributes describing the target file. These attributes can be used to filter out additional listed files by time constraint (perhaps using a RouteOnAttribute processor). ListFTP also records state: when it runs next month, only files with a last modified date newer than the previously recorded state will be listed. The FetchFTP processor would then be used to fetch the actual content for those listed FlowFiles.
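The list-then-fetch behaviour described above can be modelled in a few lines of Python. This is only a simplified sketch: the `RemoteFile` type and `list_new_files` function are hypothetical, it assumes the regex has to match the whole file name, and the real ListFTP state tracking handles more edge cases than a single "newest timestamp seen" value.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class RemoteFile:
    name: str
    mtime_ms: int  # last-modified time, epoch milliseconds
    size: int


def list_new_files(listing, file_filter: str, last_seen_ms: int):
    """Simplified model of ListFTP: filter names by regex, then keep only files
    modified after the stored state. Returns (files_to_fetch, new_state).

    Only metadata is returned, like ListFTP's 0-byte FlowFiles; downloading the
    content is left to a separate fetch step (FetchFTP's job in NiFi).
    """
    pattern = re.compile(file_filter)
    selected = [
        f for f in listing
        if pattern.fullmatch(f.name) and f.mtime_ms > last_seen_ms
    ]
    new_state = max((f.mtime_ms for f in selected), default=last_seen_ms)
    return selected, new_state


listing = [
    RemoteFile("sales_20180801.csv", 1533081600000, 100),  # 2018-08-01 00:00 UTC
    RemoteFile("sales_20180901.csv", 1535760000000, 200),  # 2018-09-01 00:00 UTC
    RemoteFile("readme.txt", 1535760000000, 10),
]
first, state = list_new_files(listing, r"sales_\d{8}\.csv", last_seen_ms=0)
print([f.name for f in first])   # ['sales_20180801.csv', 'sales_20180901.csv']
second, state = list_new_files(listing, r"sales_\d{8}\.csv", last_seen_ms=state)
print([f.name for f in second])  # []
```

The second call returns nothing because no file changed since the recorded state, which is why unchanged files are not copied again each month.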

2. At this point you have all your FlowFiles (each FlowFile consists of FlowFile content and FlowFile attributes). The attributes will include everything you need except the file type. Since NiFi is data agnostic, at its core it does not care about content type/format (specific processors that operate on the content will). You may consider using the IdentifyMimeType processor to get the file types. Route the "success" relationship twice: once down your database processing path and once down your local server path. On the database path, take the attributes needed for your DB, replace the content with that information (examples: ReplaceText, AttributesToJSON, etc.), and then put it to your DB. On the local server path, write the original content to your local system directory (example: PutFile).
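The two paths in step 2 can be sketched outside NiFi in Python, purely to show the split between content and metadata. In this sketch sqlite3 stands in for "your DB", the `file_metadata` table and its columns are hypothetical, the attribute key `file.lastModifiedTime` is assumed to be what your listing provides, and `mimetypes.guess_type` only guesses from the file extension, whereas IdentifyMimeType inspects the content itself.

```python
import mimetypes
import sqlite3
import tempfile
from pathlib import Path


def create_metadata_table(conn: sqlite3.Connection) -> None:
    """Hypothetical target table for the per-file metadata rows."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS file_metadata ("
        "file_name TEXT, modified_time TEXT, file_type TEXT, file_size INTEGER)"
    )


def handle_fetched_file(name: str, content: bytes, attrs: dict,
                        out_dir: Path, conn: sqlite3.Connection) -> None:
    """Send one fetched file down both paths: a local copy and a metadata row."""
    # Local server path (the PutFile role): write the original content unchanged.
    out_dir.mkdir(parents=True, exist_ok=True)
    (out_dir / name).write_bytes(content)

    # Database path: keep only the metadata, never the content itself.
    # guess_type works from the extension; IdentifyMimeType inspects the bytes.
    mime_type, _ = mimetypes.guess_type(name)
    conn.execute(
        "INSERT INTO file_metadata VALUES (?, ?, ?, ?)",
        (name, attrs.get("file.lastModifiedTime"),
         mime_type or "application/octet-stream", len(content)),
    )
    conn.commit()


with tempfile.TemporaryDirectory() as tmp:
    conn = sqlite3.connect(":memory:")
    create_metadata_table(conn)
    handle_fetched_file("sales_20180901.csv", b"id,amount\n1,9.99\n",
                        {"file.lastModifiedTime": "2018-09-01T00:05:00+0000"},
                        Path(tmp) / "landing", conn)
    print(conn.execute("SELECT file_name, file_size FROM file_metadata").fetchall())
    # [('sales_20180901.csv', 17)]
```

In NiFi the equivalent fan-out happens by connecting the same "success" relationship to both paths, rather than by one function doing both writes.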

3. As far as scheduling goes, you just need to configure your original ListFTP processor with the CRON driven scheduling strategy and a Quartz cron expression so that the processor is only scheduled to run on the 1st of every month.
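For step 3, a Quartz cron expression such as `0 0 0 1 * ?` should fire at midnight on the 1st of every month (Quartz fields, left to right: seconds, minutes, hours, day of month, month, day of week). The Python sketch below does not parse cron expressions; it only computes when that one schedule would next fire, which can help sanity-check the timing. It ignores time zones, so compare its result against the clock of the NiFi host.

```python
from datetime import datetime

# Quartz fields: seconds minutes hours day-of-month month day-of-week
QUARTZ_FIRST_OF_MONTH = "0 0 0 1 * ?"


def next_first_of_month(now: datetime) -> datetime:
    """Next fire time of '0 0 0 1 * ?' strictly after `now`: midnight on the 1st
    of the following month (this month's 1st at 00:00 is never after `now`)."""
    if now.month == 12:
        return datetime(now.year + 1, 1, 1)
    return datetime(now.year, now.month + 1, 1)


fields = ["seconds", "minutes", "hours", "day_of_month", "month", "day_of_week"]
print(dict(zip(fields, QUARTZ_FIRST_OF_MONTH.split())))
print(next_first_of_month(datetime(2018, 8, 9, 14, 33)))  # 2018-09-01 00:00:00
print(next_first_of_month(datetime(2018, 12, 15)))        # 2019-01-01 00:00:00
```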

Thanks,

Matt

If you found this Answer addressed your original question, please take a moment to login and click "Accept" below the answer.
