Support Questions

Files detected twice with ListFile processor

Labels: Apache NiFi

Created on 06-14-2017 12:12 PM - edited 08-17-2019 09:11 PM

Hi everybody,

I use NiFi 1.0.0 on an AIX server.

My ListFile processor lists the same file twice, so it ends up in two different FlowFiles. The processor is scheduled to run every 15 seconds.

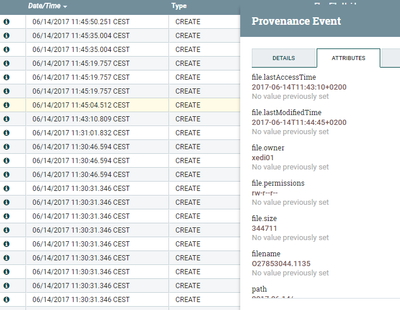

The file O27853044.1135 starts being written at 11:35 and is complete at 11:45.

Is it normal that the processor creates a FlowFile for it at 11:42?

How can I prevent the ListFile processor from creating a FlowFile before the file has finished being updated?

Thanks for your help

Created 06-14-2017 12:30 PM

The ListFile processor lists every non-hidden file it sees in the target directory. It then records the latest last-modified timestamp from the batch of files it listed in state management, and that timestamp is used on the next run to decide which files are new. Because your file was still being written, its last-modified timestamp changed after the first listing, so the same file is listed again.
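To make the timestamp behaviour concrete, here is a simplified Python model of timestamp-based listing. It is a sketch under stated assumptions, not NiFi's actual ListFile code: the `FileEntry` class and the `list_new_files` and `minutes` helpers are hypothetical, times are expressed as minutes since midnight, and the real processor's state handling has extra rules that are omitted here. It replays the scenario from the question: the file is listed at 11:42 while still being written, and its last-modified time moves to 11:45 when the write completes.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class FileEntry:
    name: str
    mtime: int  # last-modified time in minutes since midnight (assumption for readability)


def list_new_files(files: List[FileEntry], last_ts: int) -> Tuple[List[FileEntry], int]:
    """Simplified listing: emit non-hidden files modified after the stored timestamp.

    Returns the listed files and the timestamp to keep in state for the next run.
    """
    listed = [f for f in files if not f.name.startswith(".") and f.mtime > last_ts]
    if listed:
        last_ts = max(f.mtime for f in listed)
    return listed, last_ts


def minutes(hh: int, mm: int) -> int:
    return hh * 60 + mm


state = 0  # nothing listed yet

# Run at about 11:42: the file is still being written, last modified at 11:42.
run_1142, state = list_new_files([FileEntry("O27853044.1135", minutes(11, 42))], state)

# Later run: the write finished at 11:45, so the last-modified time moved forward.
run_1145, state = list_new_files([FileEntry("O27853044.1135", minutes(11, 45))], state)

print([f.name for f in run_1142])  # ['O27853044.1135']
print([f.name for f in run_1145])  # ['O27853044.1135'] (listed a second time)
```

Once the file stops changing, further runs list nothing, because its last-modified time is no longer newer than the stored timestamp.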

A few suggestions, in order of preference:

1. Change how files are written to this directory.

- The ListFile processor ignores any hidden files, so a file being written as ".myfile.txt" is ignored until it is renamed to just "myfile.txt".

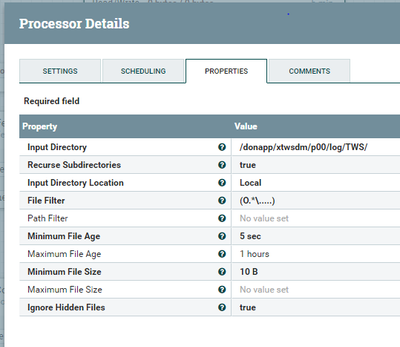

2. Change the "Minimum File Age" setting on the processor to a value high enough to allow the source system to finish writing files to this directory.

3. Add a DetectDuplicate processor after your ListFile processor to detect files that were listed more than once and remove them from your dataflow before the FetchFile processor.
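Suggestions 1 and 2 can both be sketched in Python. This is an illustration under assumptions, not NiFi code: `write_then_rename` (hypothetical) shows what a cooperating writer on the source side might do, writing under a dot-prefixed name and then renaming, and `is_listable` (also hypothetical) models the two filters applied at listing time: skip hidden files and skip files younger than a minimum age. The 60-second age is an arbitrary example value.

```python
import os
import tempfile


def write_then_rename(directory: str, filename: str, data: bytes) -> str:
    """Suggestion 1 (writer side): write under a hidden name, then rename.

    While the write is in progress the file is named ".<filename>", which a
    listing that skips hidden files will ignore. os.replace renames it in one
    step when source and target are on the same filesystem.
    """
    hidden_path = os.path.join(directory, "." + filename)
    final_path = os.path.join(directory, filename)
    with open(hidden_path, "wb") as fh:
        fh.write(data)
    os.replace(hidden_path, final_path)
    return final_path


def is_listable(filename: str, mtime: float, now: float, min_age_seconds: float) -> bool:
    """Listing side: skip hidden files (suggestion 1) and recently modified files (suggestion 2)."""
    if filename.startswith("."):
        return False
    return (now - mtime) >= min_age_seconds


with tempfile.TemporaryDirectory() as d:
    write_then_rename(d, "myfile.txt", b"payload")
    print(sorted(os.listdir(d)))  # ['myfile.txt']

# A file last modified 30 s ago is held back with a 60 s minimum age.
print(is_listable("myfile.txt", mtime=1000.0, now=1030.0, min_age_seconds=60))   # False
print(is_listable("myfile.txt", mtime=1000.0, now=1090.0, min_age_seconds=60))   # True
print(is_listable(".myfile.txt", mtime=1000.0, now=5000.0, min_age_seconds=60))  # False
```

The rename approach needs control over the writer, while the minimum-age approach only needs a processor setting, at the cost of delaying every file by that age.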

Thanks,

Matt

Created 06-14-2017 12:59 PM

I'm going to try number 2.

And could you give me an example of the properties for number 3 and the DetectDuplicate processor?

Thanks, TV

Created on 06-14-2017 01:59 PM - edited 08-17-2019 09:10 PM

With number 3, I am assuming that every file has a unique filename, so you can determine whether the same filename has ever been listed more than once. If that is not the case, you would need to use DetectDuplicate after fetching the actual data. That is less desirable, since you will have wasted resources potentially fetching the same file twice before deleting the duplicate.

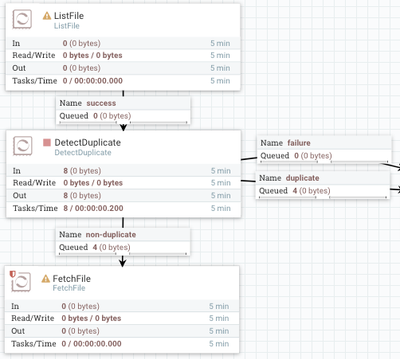

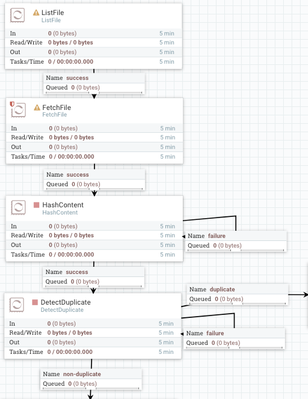

Let's assume every file has a unique filename. If so, the DetectDuplicate flow would look like this:

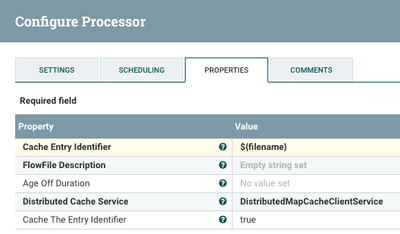

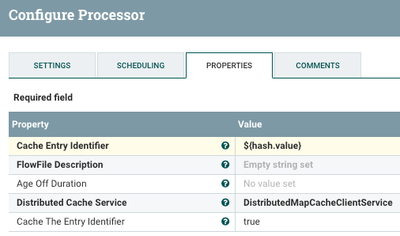

With the DetectDuplicate processor configured as follows:

You will also need to add two controller services to your NiFi instance:

- DistributedMapCacheServer

- DistributedMapCacheClientService

The value of the "filename" attribute on the FlowFile is checked against the entries in the DistributedMapCacheServer. If the filename does not exist yet, it is added. If it already exists, the FlowFile is routed to the duplicate relationship.
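The filename check can be modelled in a few lines of Python. This is a sketch, not the DetectDuplicate implementation: an in-memory set stands in for the DistributedMapCacheServer, FlowFiles are reduced to attribute dictionaries, and `InMemoryMapCache` and `detect_duplicate` are hypothetical names. The returned relationship names `duplicate` and `non-duplicate` mirror the processor's relationships.

```python
from typing import Dict, Set


class InMemoryMapCache:
    """Stand-in for DistributedMapCacheServer: remembers identifiers already seen."""

    def __init__(self) -> None:
        self._keys: Set[str] = set()

    def put_if_absent(self, key: str) -> bool:
        """Store the key if it is new; return True if it was stored."""
        if key in self._keys:
            return False
        self._keys.add(key)
        return True


def detect_duplicate(attrs: Dict[str, str], cache: InMemoryMapCache,
                     identifier_attr: str = "filename") -> str:
    """Route a FlowFile based on whether its identifier was seen before."""
    if cache.put_if_absent(attrs[identifier_attr]):
        return "non-duplicate"
    return "duplicate"


cache = InMemoryMapCache()
listings = [
    {"filename": "O27853044.1135"},  # first listing (11:42)
    {"filename": "O27853044.1135"},  # same file listed again after 11:45
]
routes = [detect_duplicate(ff, cache) for ff in listings]
print(routes)  # ['non-duplicate', 'duplicate']
```

In this model the first listing is the one that passes. If that listing happened while the file was still being written, dropping the later listing does not prevent a partial fetch, which is one reason the rename and "Minimum File Age" options come first in the list of suggestions.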

In scenario 2, where filenames may be reused, we need to detect whether the content is a duplicate after it has been fetched. In this case the flow may look like this:

After the content of a FlowFile is fetched, the HashContent processor creates a hash of the content and writes it to a FlowFile attribute (hash.value by default). The DetectDuplicate processor is then configured to look for FlowFiles with the same hash.value to determine whether they are duplicates.

FlowFiles whose content hash already exists in the DistributedMapCacheServer are routed to the duplicate relationship, where you can delete them if you like.
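The content-hash variant can be sketched the same way. Again this is an illustration under assumptions: `hash_content` and `route_by_content` are hypothetical helpers, a Python set stands in for the DistributedMapCacheServer, and MD5 is used only as an example algorithm. The attribute name `hash.value` matches the default mentioned above.

```python
import hashlib
from typing import Dict, Set


def hash_content(content: bytes, attrs: Dict[str, str], algorithm: str = "md5") -> Dict[str, str]:
    """Like HashContent: add a hex digest of the content as the 'hash.value' attribute."""
    digest = hashlib.new(algorithm, content).hexdigest()
    return {**attrs, "hash.value": digest}


def route_by_content(attrs: Dict[str, str], seen: Set[str]) -> str:
    """Like DetectDuplicate keyed on hash.value: first occurrence passes, repeats are duplicates."""
    key = attrs["hash.value"]
    if key in seen:
        return "duplicate"
    seen.add(key)
    return "non-duplicate"


seen_hashes: Set[str] = set()
fetched = [
    (b"report A", {"filename": "daily.txt"}),
    (b"report A", {"filename": "daily.txt"}),  # same name, same content
    (b"report B", {"filename": "daily.txt"}),  # name reused, new content
]
routes = [route_by_content(hash_content(c, a), seen_hashes) for c, a in fetched]
print(routes)  # ['non-duplicate', 'duplicate', 'non-duplicate']
```

Because the key comes from the bytes rather than the name, a reused filename with new content still passes. The flip side is that a partially written file hashes differently from the finished one, so this variant would not treat an early partial fetch and the later complete fetch as duplicates.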

Thanks,

Matt

Created 12-18-2017 02:00 PM

Thank you, Matt. I was facing a similar issue too, and your suggestion worked.

Created 06-14-2017 02:35 PM

The second suggestion works as well.

I'll keep the third one for future use.

Thanks for everything, Matt

TV.