@kalaicool261 There is no such thing as terminal memory. This looks like an engine memory issue.

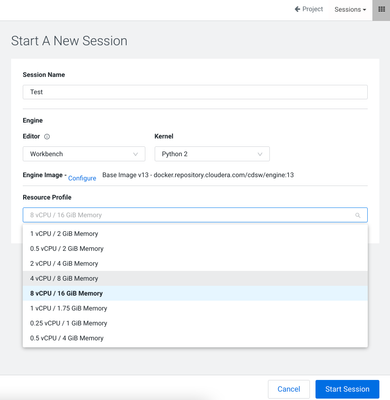

Try launching the session (engine) with more memory and CPU, chosen from the drop-down menu on the Session launch page, and see if that helps.
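To check whether the larger engine profile actually took effect, you could read the memory limit from inside the session. Below is a minimal sketch, assuming the engine runs as a Linux container whose limit is exposed through cgroups. The file paths are the standard cgroup v2 and v1 locations, not anything specific to this product, and the function names are hypothetical.

```python
from pathlib import Path
from typing import Optional

# Standard cgroup files holding a container's memory limit (assumption:
# the engine is a Linux container). cgroup v2 uses memory.max;
# cgroup v1 uses memory/memory.limit_in_bytes.
CGROUP_LIMIT_FILES = (
    Path("/sys/fs/cgroup/memory.max"),
    Path("/sys/fs/cgroup/memory/memory.limit_in_bytes"),
)


def parse_limit(raw: str) -> Optional[int]:
    """Return the limit in bytes, or None if the cgroup reports no limit."""
    value = raw.strip()
    if value == "max":  # cgroup v2 marker for "unlimited"
        return None
    limit = int(value)
    # cgroup v1 reports a huge sentinel (close to 2**63) when no limit is set
    if limit >= 2**60:
        return None
    return limit


def engine_memory_limit() -> Optional[int]:
    """Read the first cgroup limit file that exists; None if none is found."""
    for path in CGROUP_LIMIT_FILES:
        if path.exists():
            return parse_limit(path.read_text())
    return None


if __name__ == "__main__":
    limit = engine_memory_limit()
    if limit is None:
        print("No cgroup memory limit found")
    else:
        print(f"Engine memory limit: {limit / 2**30:.2f} GiB")
```

If the reported limit does not grow after relaunching with a bigger profile, the new resource selection was probably not applied to the session.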

Cheers!