Support Questions

- Cloudera Community

Getting "User not found" error when starting a Spark job

Labels: Apache Spark

Created on 09-23-2019 01:56 PM, last edited on 11-20-2019 10:23 PM by VidyaSargur

We are facing a problem where we randomly get a "User not found" error when trying to start a container. Checking the NodeManager logs, I can see the following:

2019-09-15 23:55:58,529 INFO containermanager.ContainerManagerImpl (ContainerManagerImpl.java:startContainerInternal(810)) - Start request for container_e65_1565485836435_8640_02_000003 by user pyqy0srv0z50

2019-09-15 23:55:58,529 INFO application.ApplicationImpl (ApplicationImpl.java:transition(304)) - Adding container_e65_1565485836435_8640_02_000003 to application application_1565485836435_8640

2019-09-15 23:55:58,533 INFO container.ContainerImpl (ContainerImpl.java:handle(1163)) - Container container_e65_1565485836435_8640_02_000003 transitioned from NEW to LOCALIZING

2019-09-15 23:55:58,533 INFO containermanager.AuxServices (AuxServices.java:handle(215)) - Got event CONTAINER_INIT for appId application_1565485836435_8640

2019-09-15 23:55:58,533 INFO yarn.YarnShuffleService (YarnShuffleService.java:initializeContainer(192)) - Initializing container container_e65_1565485836435_8640_02_000003

2019-09-15 23:55:58,533 INFO yarn.YarnShuffleService (YarnShuffleService.java:initializeContainer(284)) - Initializing container container_e65_1565485836435_8640_02_000003

2019-09-15 23:55:58,533 INFO localizer.LocalizedResource (LocalizedResource.java:handle(203)) - Resource hdfs://prod/user/pyqy0srv0z50/.sparkStaging/application_1565485836435_8640/__spark_conf__.zip transitioned from INIT to DOWNLOADING

2019-09-15 23:55:58,533 INFO localizer.ResourceLocalizationService (ResourceLocalizationService.java:handle(712)) - Created localizer for container_e65_1565485836435_8640_02_000003

2019-09-15 23:55:58,535 INFO localizer.ResourceLocalizationService (ResourceLocalizationService.java:writeCredentials(1194)) - Writing credentials to the nmPrivate file /app/data/hadoop/disk14/yarn/local/nmPrivate/container_e65_1565485836435_8640_02_000003.tokens. Credentials list:

2019-09-15 23:55:58,693 WARN privileged.PrivilegedOperationExecutor (PrivilegedOperationExecutor.java:executePrivilegedOperation(171)) - Shell execution returned exit code: 255. Privileged Execution Operation Output:

main : command provided 0

main : run as user is pyqy0srv0z50

main : requested yarn user is pyqy0srv0z50

User pyqy0srv0z50 not found

Full command array for failed execution:

2019-09-15 23:55:58,694 WARN nodemanager.LinuxContainerExecutor (LinuxContainerExecutor.java:startLocalizer(269)) - Exit code from container container_e65_1565485836435_8640_02_000003 startLocalizer is : 255

org.apache.hadoop.yarn.server.nodemanager.containermanager.linux.privileged.PrivilegedOperationException: ExitCodeException exitCode=255:

at org.apache.hadoop.yarn.server.nodemanager.containermanager.linux.privileged.PrivilegedOperationExecutor.executePrivilegedOperation(PrivilegedOperationExecutor.java:177)

at org.apache.hadoop.yarn.server.nodemanager.LinuxContainerExecutor.startLocalizer(LinuxContainerExecutor.java:264)

at org.apache.hadoop.yarn.server.nodemanager.containermanager.localizer.ResourceLocalizationService$LocalizerRunner.run(ResourceLocalizationService.java:1114)

Caused by: ExitCodeException exitCode=255:

at org.apache.hadoop.util.Shell.runCommand(Shell.java:944)

at org.apache.hadoop.util.Shell.run(Shell.java:848)

at org.apache.hadoop.util.Shell$ShellCommandExecutor.execute(Shell.java:1142)

at org.apache.hadoop.yarn.server.nodemanager.containermanager.linux.privileged.PrivilegedOperationExecutor.executePrivilegedOperation(PrivilegedOperationExecutor.java:151)

... 2 more

2019-09-15 23:55:58,694 INFO localizer.ResourceLocalizationService (ResourceLocalizationService.java:run(1134)) - Localizer failed

java.io.IOException: Application application_1565485836435_8640 initialization failed (exitCode=255) with output: main : command provided 0

main : run as user is pyqy0srv0z50

main : requested yarn user is pyqy0srv0z50

User pyqy0srv0z50 not found

at org.apache.hadoop.yarn.server.nodemanager.LinuxContainerExecutor.startLocalizer(LinuxContainerExecutor.java:273)

at org.apache.hadoop.yarn.server.nodemanager.containermanager.localizer.ResourceLocalizationService$LocalizerRunner.run(ResourceLocalizationService.java:1114)

Caused by: org.apache.hadoop.yarn.server.nodemanager.containermanager.linux.privileged.PrivilegedOperationException: ExitCodeException exitCode=255:

at org.apache.hadoop.yarn.server.nodemanager.containermanager.linux.privileged.PrivilegedOperationExecutor.executePrivilegedOperation(PrivilegedOperationExecutor.java:177)

at org.apache.hadoop.yarn.server.nodemanager.LinuxContainerExecutor.startLocalizer(LinuxContainerExecutor.java:264)

... 1 more

Caused by: ExitCodeException exitCode=255:

at org.apache.hadoop.util.Shell.runCommand(Shell.java:944)

at org.apache.hadoop.util.Shell.run(Shell.java:848)

at org.apache.hadoop.util.Shell$ShellCommandExecutor.execute(Shell.java:1142)

at org.apache.hadoop.yarn.server.nodemanager.containermanager.linux.privileged.PrivilegedOperationExecutor.executePrivilegedOperation(PrivilegedOperationExecutor.java:151)

... 2 more

2019-09-15 23:55:58,694 INFO container.ContainerImpl (ContainerImpl.java:handle(1163)) - Container container_e65_1565485836435_8640_02_000003 transitioned from LOCALIZING to LOCALIZATION_FAILED

2019-09-15 23:55:58,697 INFO container.ContainerImpl (ContainerImpl.java:handle(1163)) - Container container_e65_1565485836435_8640_02_000003 transitioned from LOCALIZATION_FAILED to DONE

Created 09-30-2019 08:40 PM

Hi @paleerbccm

The issue here is that this user ID doesn't exist on one of your YARN NodeManager hosts. That would also explain why the failure looks random: as long as no container is allocated on that host, you won't run into the problem.

Find the host where containers fail to launch with that exception, then SSH into that host and confirm the problem by running:

id pyqy0srv0z50

You will then need to create this user on that machine and make sure its group membership matches what you have on all your other hosts.
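
With more than a handful of NodeManagers, checking them one by one gets tedious. The script below is a minimal sketch, assuming passwordless SSH from an admin host to every NodeManager; the script name `check_user.sh`, the function `check_user` and the host names in the usage line are hypothetical. It prints each host's group list for the user so that a missing user or a mismatched group set stands out.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper, not part of Hadoop): check that a user exists
# and report its groups, either locally or on a list of hosts over SSH.

# Local check: prints "<user>: present (groups: ...)" or "<user>: MISSING".
check_user() {
  local user="$1" grp
  if grp=$(id -Gn "$user" 2>/dev/null); then
    echo "$user: present (groups: $grp)"
  else
    echo "$user: MISSING"
  fi
}

# Remote check. Usage: ./check_user.sh <user> <host> [host ...]
# Assumes passwordless SSH; BatchMode makes ssh fail instead of prompting.
if [ "$#" -ge 2 ]; then
  user="$1"
  shift
  for host in "$@"; do
    out=$(ssh -o BatchMode=yes "$host" id -Gn "$user" 2>/dev/null)
    rc=$?
    case "$rc" in
      0)   echo "$host: $user present (groups: $out)" ;;
      255) echo "$host: ssh failed, could not check" ;;  # ssh's own error code
      *)   echo "$host: $user MISSING" ;;                 # id exited non-zero
    esac
  done
fi
```

For example, `./check_user.sh pyqy0srv0z50 nm01.example.com nm02.example.com` (placeholder host names). Any host reporting MISSING, or a group list that differs from the others, is a candidate for the failures.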

Created 11-20-2019 12:27 PM

Created 11-20-2019 12:42 PM

@paleerbccm it's still the same issue, but the log you're sharing doesn't show the details we need. The problem is that when the ApplicationMaster container tries to launch on a particular host as user ptzs0srv0z50, the privileged shell command that launches it fails because that user ID doesn't exist on that host.

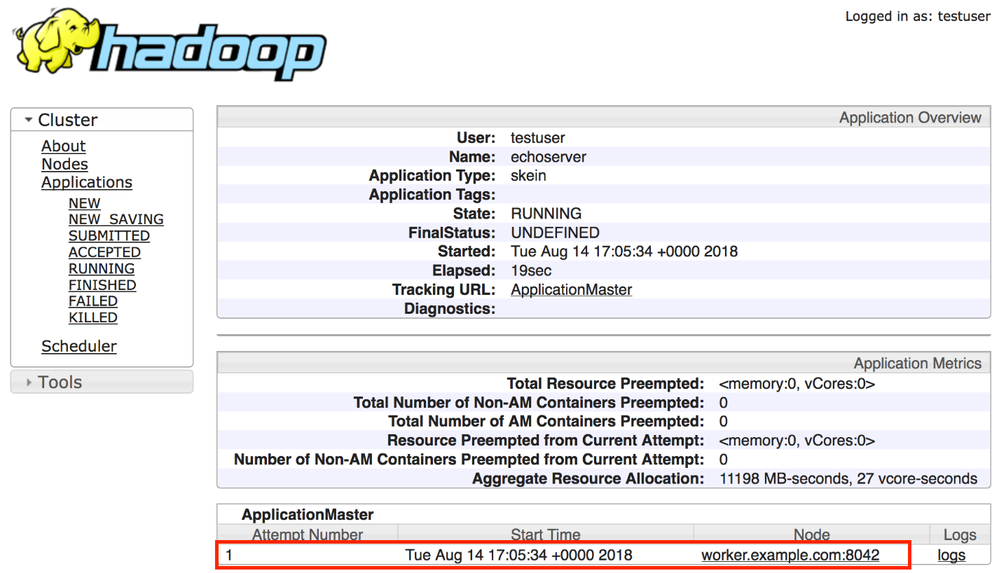

What you need to do is identify where the Application Master attempted to run. You can do this from the Resource Manager's WebUI. Please refer to the following screenshot for example and note the highlighted red box:

In this example, the first ApplicationMaster attempt ran on the host worker.example.com. You would then SSH into that host and run the following command to check whether the user actually exists:

id ptzs0srv0z50
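
You can also look for the failure from the NodeManager side. The following sketch prints the timestamp and container ID of every localizer that exited with code 255, plus which users were reported as not found. The patterns are based only on the log lines quoted in this thread (other Hadoop versions may word them differently), the function names are hypothetical, and the NodeManager log location varies by distribution, so pass the path yourself. Running it against each NodeManager's log shows which hosts are affected and when.

```shell
#!/usr/bin/env bash
# Sketch: extract "User ... not found" localizer failures from a NodeManager log.
# Patterns match the log format quoted in this thread; adjust if yours differs.

# Print "<date> <time> <container_id>" for each localizer that exited with 255.
failed_localizers() {
  sed -nE 's/^([0-9-]+ [0-9:,]+) .*Exit code from container ([^ ]+) startLocalizer is : 255.*/\1 \2/p' "$1"
}

# Print each user reported as "not found", prefixed with its occurrence count.
users_not_found() {
  grep -oE '^User [^ ]+ not found' "$1" | awk '{print $2}' | sort | uniq -c
}

# Usage: ./scan_nm_log.sh /path/to/nodemanager.log
if [ "$#" -ge 1 ]; then
  echo "Failed localizers:"
  failed_localizers "$1"
  echo "Users not found:"
  users_not_found "$1"
fi
```

Note that each failure in the log above prints the "User ... not found" line twice (once from the privileged operation and once in the IOException), so the count is per line, not per failure.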

Created 11-20-2019 12:48 PM

I have run the id command for the user on both nodes.

Both show the user is valid.

Created 11-20-2019 12:57 PM

This issue needs further debugging. For some reason, user ID resolution failed at that particular moment. We've seen customers with similar issues when tools such as SSSD are in use:

https://access.redhat.com/documentation/en-us/red_hat_enterprise_linux/6/html/deployment_guide/sssd-...

One idea is to create a shell script that runs 'id ptzs0srv0z50' and 'id -Gn ptzs0srv0z50' in a loop at a fixed interval, say every 10, 20 or 30 seconds. When the problem occurs again, go over the script's output and see whether anything looks different around the time of the failure.
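
A minimal version of that script could look like the following. It is only a sketch: the script name, the function `probe_user`, the default 30-second interval and the output path under /tmp are placeholders. Each line starts with a timestamp in the same `YYYY-MM-DD HH:MM:SS` form the NodeManager log uses, which makes it easier to line up a failed lookup with a failed container launch.

```shell
#!/usr/bin/env bash
# Sketch: periodically record how a user resolves (uid and groups) so that a
# transient lookup failure can be matched against NodeManager log timestamps.

# Print one timestamped line describing the current resolution of user "$1".
probe_user() {
  local user="$1" ts grp
  ts=$(date '+%Y-%m-%d %H:%M:%S')
  if grp=$(id -Gn "$user" 2>&1); then
    echo "$ts OK $user uid=$(id -u "$user") groups=[$grp]"
  else
    # On failure, grp holds the error message printed by id.
    echo "$ts FAIL $user: $grp"
  fi
}

# Usage: ./probe_user.sh <user> [interval_seconds] [output_file]
# Placeholders: 30 s default interval, output file under /tmp. Stop with Ctrl-C.
if [ "$#" -ge 1 ]; then
  user="$1"
  interval="${2:-30}"
  out="${3:-/tmp/probe_${user}.log}"
  while true; do
    probe_user "$user" >> "$out"
    sleep "$interval"
  done
fi
```

For example, run `./probe_user.sh ptzs0srv0z50 10` on each NodeManager host, and after the next failure search the output file for FAIL lines or for a group list that changed.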