Support Questions

- Cloudera Community

HDF: NiFi Kafka authentication via SASL_PLAINTEXT, how to propagate logged in user?

Created 11-08-2017 10:01 AM

Hello,

An HDF setup (HDF 3.0) is in place, and now SASL_PLAINTEXT needs to be added to the Kafka listeners (without Kerberos, just plain SASL). To be able to authenticate, user:password tuples need to be provided in the .jaas file, but this looks very static.

How can the end user (who is logged in to NiFi) be used in a Kafka processor to authenticate against Kafka?

Is there a possibility, with user-defined properties, to ensure that the current user is used for authenticating against Kafka, or to decide dynamically which .jaas file to use based on the currently logged-in user?

Kerberos and SSL are currently not an option, hence I need a solution for SASL_PLAINTEXT 😉

Thanks in advance...

Created 11-08-2017 02:14 PM

Hello,

The end user in NiFi is only the person configuring the processor. Once that person starts the processor and leaves the application, there is no longer a user; the processor is now executed by the framework, and several users could come in, stop it, make changes, and start it again. So processors are not going to execute based on the end user who configured them.

You are correct, though, that improvements are needed around SASL_PLAINTEXT. Currently the processors set Kafka's sasl.mechanism property to GSSAPI behind the scenes, and you need to add a user-defined property of sasl.mechanism = PLAIN to change this, so that should be exposed as a formal property. Also, the 0.10 processors currently support creating a dynamic JAAS file based on properties in the processors, but I believe it only works for Kerberos, and we should be able to do the same for PLAIN.
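As a rough illustration of the static setup described above, the sketch below renders a JAAS `KafkaClient` section for SASL/PLAIN and lists the two client settings involved. The helper name `plain_jaas_section`, the user name `nifi-flow`, and the password are made up for the example; the login module class and the `KafkaClient` section name come from the Kafka client documentation. It assumes the resulting file is handed to the NiFi JVM through the standard `java.security.auth.login.config` system property, and it does not escape quotes inside the credentials.

```python
def plain_jaas_section(username: str, password: str) -> str:
    """Render a KafkaClient JAAS section for SASL/PLAIN.

    Hypothetical helper for illustration; it does not escape
    double quotes inside the username or password.
    """
    return (
        "KafkaClient {\n"
        "  org.apache.kafka.common.security.plain.PlainLoginModule required\n"
        f'  username="{username}"\n'
        f'  password="{password}";\n'
        "};\n"
    )


# Kafka client settings for plain SASL without TLS.
# Per the answer above, sasl.mechanism has to be added as a
# user-defined property on the processor to override GSSAPI.
processor_properties = {
    "security.protocol": "SASL_PLAINTEXT",
    "sasl.mechanism": "PLAIN",
}

if __name__ == "__main__":
    # Placeholder credentials, not taken from the thread.
    print(plain_jaas_section("nifi-flow", "change-me"))
    for key, value in processor_properties.items():
        print(f"{key}={value}")
```

Because the credentials live in one file read by the JVM, every Kafka processor in that NiFi instance authenticates as the same user, which matches the "very static" behavior raised in the question.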

Created 11-09-2017 10:36 AM

Bryan, many thanks for your explanation.

Do you have any resources or hints regarding "creating a dynamic JAAS file" and what this would look like? ...assuming Kerberos is enabled 😉

...or by 'dynamic' do you mean the possibility to specify principal & keytab within the Kafka processor?

Thanks!

Created on 06-27-2019 10:46 AM - edited 08-18-2019 01:41 AM

Hi Bryan,

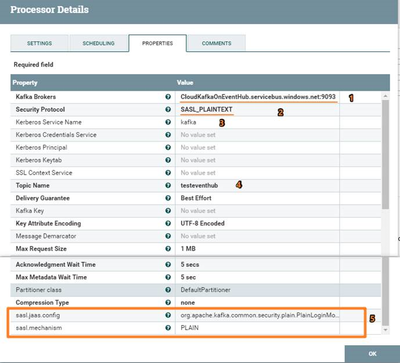

Thanks for your input; it helped me understand the properties to set up with SASL_PLAINTEXT.

I'm currently working on a project that uses the NiFi PublishKafka_0_10 processor with Event Hubs. From the Microsoft doc (

we need to map the configuration below to the properties of the PublishKafka_0_10 processor:

bootstrap.servers={YOUR.EVENTHUBS.FQDN}:9093

security.protocol=SASL_SSL

sasl.mechanism=PLAIN

sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username="$ConnectionString" password="{YOUR.EVENTHUBS.CONNECTION.STRING}";

I've tried to use SASL_PLAINTEXT (as SSL is not an option in our test environment) and configured the processor as shown in the attached image. However, it still cannot connect to Event Hubs and keeps showing the error "TimeoutException: Failed to update metadata after 5000 m"

Could you please help review the properties I set up? Perhaps something is wrong in them. I've struggled with this for a few days and am looking forward to your response. Thanks!
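For reference, the four settings quoted from the Microsoft doc can be assembled as key/value pairs roughly as in the sketch below. The function name `event_hubs_client_properties` and the namespace `example.servicebus.windows.net` are placeholders invented for the example, and how each key maps onto a formal or user-defined processor property is not established in this thread. Note that the quoted sample uses SASL_SSL on port 9093; nothing in this thread confirms that the endpoint accepts SASL_PLAINTEXT.

```python
def event_hubs_client_properties(fqdn: str, connection_string: str) -> dict:
    """Build the Kafka client settings quoted from the Microsoft sample.

    Hypothetical helper for illustration; the username is the literal
    text $ConnectionString and the password is the connection string.
    """
    jaas = (
        "org.apache.kafka.common.security.plain.PlainLoginModule required "
        f'username="$ConnectionString" password="{connection_string}";'
    )
    return {
        "bootstrap.servers": f"{fqdn}:9093",
        "security.protocol": "SASL_SSL",
        "sasl.mechanism": "PLAIN",
        "sasl.jaas.config": jaas,
    }


if __name__ == "__main__":
    # Placeholder values; substitute the real namespace and connection string.
    props = event_hubs_client_properties(
        "example.servicebus.windows.net",
        "{YOUR.EVENTHUBS.CONNECTION.STRING}",
    )
    for key, value in props.items():
        print(f"{key}={value}")
```

Comparing the printed pairs against the values actually entered in the processor is a quick way to spot a mismatched protocol, port, or mechanism.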

Created on 06-27-2019 10:46 AM - edited 08-18-2019 01:41 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Bryan,

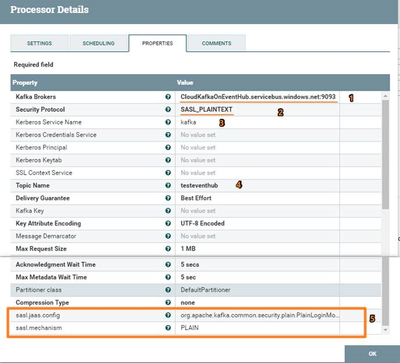

Thanks for your inputs, that helped me understand the properties to setup with SASL_PLAINTEXT .

I'm currently working on a project, that using NiFi publishKafka_0_10 processor with eventHub, from Microsoft doc (

we need to map below configurations to the properties in publishKafka_0_10 processor

bootstrap.servers={YOUR.EVENTHUBS.FQDN}:9093

security.protocol=SASL_SSL

sasl.mechanism=PLAIN

sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username="$ConnectionString" password="{YOUR.EVENTHUBS.CONNECTION.STRING}";

I've tried to use SASL_PLAINTTEXT(as SSL is not an option in our test environment), and configured as bleow. However, it still cannot connect to eventHub, keep prompting me error "TimeoutException: Failed to update metadata after 5000 m"

Can you please help review the properties I setup, perhaps there are something wrong in it, i've struggled on this few days, looking forward to your response. Thanks!