HDFS - Dropping HIVE table is not freeing up memory within HDFS

- Labels: Apache Hadoop, Apache Hive

Created 04-04-2017 02:54 PM

Hi all,

I have been using Hive on the sandbox for a college project. Everything was working fine until yesterday, when I noticed that the memory was running out because the data I am using is rather large.

To free up memory, I dropped a table I no longer need, then went into Files_View/user/admin/.Trash/<table name> and deleted the table from the trash folder.

However, after doing so, the HDFS memory is still full and has not reduced at all.

I then checked Files_View/Apps/hive/warehouse/<database I'm using>/<table_name> to confirm that the table was deleted, and it is gone.

Does anyone know how I can permanently delete the table so that the memory also frees up within the HDFS?

Thanks in advance.

Created 04-04-2017 03:40 PM

@Maeve Ryan What error are you getting? How are you checking the size of HDFS?

Created on 04-04-2017 05:47 PM - edited 08-18-2019 01:06 AM

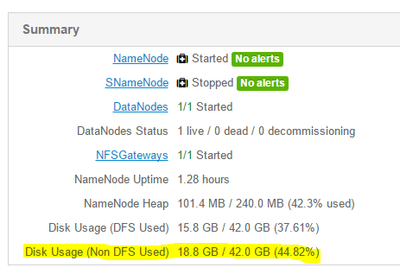

Please see attached screenshot.

As you can see, 18.8 GB is being used. This is the same amount as before I deleted the table. I would have expected it to decrease by about 9 GB once I dropped the table and removed it from the trash.

Created 04-04-2017 07:57 PM

Hi @Maeve Ryan

It seems Disk Usage (Non DFS Used) is higher than DFS Used. DFS Used is your data stored on the data node, whereas Non DFS Used is space on the same disks taken up by anything other than HDFS data, typically log files written by YARN or other Hadoop services. Check the disk for large files and remove the ones you do not need; that will free up space. Also check the size of DFS Used before and after deleting the data file from HDFS. The difference tells you how much space the deletion actually freed.

Non DFS Used is calculated as:

Non DFS Used = Configured Capacity - Remaining Space - DFS Used
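
As a quick sanity check, the formula can be applied with a small shell function. This is only a sketch: `non_dfs_used` is a hypothetical helper name, and the numbers in the example call are made up rather than read from the screenshot. The three inputs correspond to the Configured Capacity, DFS Remaining and DFS Used values printed by `hdfs dfsadmin -report`, all in the same unit.

```shell
# Hypothetical helper: applies
#   Non DFS Used = Configured Capacity - Remaining Space - DFS Used
# to three whole numbers given in the same unit (bytes, MB, GB, ...).
non_dfs_used() {
    configured=$1
    remaining=$2
    dfs_used=$3
    echo $(( configured - remaining - dfs_used ))
}

# Made-up values in GB, for illustration only.
non_dfs_used 42 10 19   # prints 13
```

If the result stays large after the table is dropped, the space is being held outside HDFS, which is why deleting the table does not change it.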

`du -hsx * | sort -rh | head -10` lists the 10 largest files and directories in the current directory on the local disk.
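
The same command can be wrapped so that it works on any directory, not just the current one. The function below is a minimal sketch: `top_usage` and its arguments are hypothetical names, and it assumes GNU `du` and `sort` (the `-h` sort option is a GNU extension).

```shell
# Hypothetical wrapper around the du | sort | head pipeline.
# Usage: top_usage DIR [COUNT]
top_usage() {
    dir=${1:-.}        # directory to inspect, defaults to the current one
    count=${2:-10}     # number of entries to show, defaults to 10
    # du:   -h human-readable sizes, -s one total per argument,
    #       -x do not cross into other filesystems
    # sort: -r largest first, -h compare human-readable sizes (1K < 1M < 1G)
    du -hsx -- "$dir"/* | sort -rh | head -n "$count"
}
```

For example, `top_usage /var/log 5` would show the five largest entries under `/var/log` (a path chosen only for illustration). This looks at the local filesystem only; for space used inside HDFS itself, `hdfs dfs -du -h <path>` reports sizes per directory.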

Created 06-02-2023 08:59 AM

How can I check for the largest file in a certain tenant in Hadoop?