HDFS NameNode won't leave safemode

Created on 11-15-2017 09:56 AM - edited 08-17-2019 11:34 PM

I've set up an HDP 2.6.3 Hadoop cluster with Ambari 2.5.2 (it was HDP 2.5.2 with Ambari 2.4.2 earlier, with the same behavior). When I start all services via the Ambari UI, the process gets stuck at starting the NameNode service. The output always says:

2017-11-15 09:23:25,594 - Waiting for this NameNode to leave Safemode due to the following conditions: HA: False, isActive: True, upgradeType: None

2017-11-15 09:23:25,594 - Waiting up to 19 minutes for the NameNode to leave Safemode...

2017-11-15 09:23:25,595 - Execute['/usr/hdp/current/hadoop-hdfs-namenode/bin/hdfs dfsadmin -fs hdfs://my-host.com:8020 -safemode get | grep 'Safe mode is OFF''] {'logoutput': True, 'tries': 115, 'user': 'hdfs', 'try_sleep': 10}

2017-11-15 09:23:27,811 - Retrying after 10 seconds. Reason: Execution of '/usr/hdp/current/hadoop-hdfs-namenode/bin/hdfs dfsadmin -fs hdfs://my-host.com:8020 -safemode get | grep 'Safe mode is OFF'' returned 1.

2017-11-15 09:23:40,148 - Retrying after 10 seconds. Reason: Execution of '/usr/hdp/current/hadoop-hdfs-namenode/bin/hdfs dfsadmin -fs hdfs://my-host.com:8020 -safemode get | grep 'Safe mode is OFF'' returned 1.

2017-11-15 09:23:52,525 - Retrying after 10 seconds. Reason: Execution of '/usr/hdp/current/hadoop-hdfs-namenode/bin/hdfs dfsadmin -fs hdfs://my-host.com:8020 -safemode get | grep 'Safe mode is OFF'' returned 1.

2017-11-15 09:24:04,853 - Retrying after 10 seconds. Reason: Execution of '/usr/hdp/current/hadoop-hdfs-namenode/bin/hdfs dfsadmin -fs hdfs://my-host.com:8020 -safemode get | grep 'Safe mode is OFF'' returned 1.

2017-11-15 09:24:17,238 - Retrying after 10 seconds. Reason: Execution of '/usr/hdp/current/hadoop-hdfs-namenode/bin/hdfs dfsadmin -fs hdfs://my-host.com:8020 -safemode get | grep 'Safe mode is OFF'' returned 1.

2017-11-15 09:24:29,566 - Retrying after 10 seconds. Reason: Execution of '/usr/hdp/current/hadoop-hdfs-namenode/bin/hdfs dfsadmin -fs hdfs://my-host.com:8020 -safemode get | grep 'Safe mode is OFF'' returned 1.

...I think this issue has occurred ever since I set up the cluster about 6 months ago.

I always try to leave the safemode manually using the command:

hdfs dfsadmin -safemode leave

But this command rarely helps; most of the time it reports that safemode is still ON. So I have to force the safemode exit with:

hdfs dfsadmin -safemode forceExit

Afterwards the NameNode start resumes and all other services start fine as well. If I forget to run the forceExit command, the NameNode service start times out and the subsequent service starts fail too.
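The manual sequence described above (check the state, try a normal leave, only then consider forceExit) could be wrapped roughly like this. It is only a sketch: it uses the same `hdfs dfsadmin -safemode get` and `-safemode leave` calls shown in this post, while the function names and the `HDFS_CMD` override variable are made up for illustration.

```shell
# HDFS_CMD is a hypothetical override (not an HDFS setting) so the
# functions can be pointed at a different binary or a stub.
HDFS_CMD="${HDFS_CMD:-hdfs}"

# Succeeds (exit 0) only if the NameNode reports that safemode is off.
safemode_is_off() {
  "$HDFS_CMD" dfsadmin -safemode get 2>/dev/null | grep -q 'Safe mode is OFF'
}

# Tries a normal "leave" and reports whether it worked.
# It deliberately does NOT run forceExit on its own.
try_leave_safemode() {
  if safemode_is_off; then
    echo "safemode already off"
    return 0
  fi
  "$HDFS_CMD" dfsadmin -safemode leave
  if safemode_is_off; then
    echo "safemode left normally"
    return 0
  fi
  echo "safemode still on; check the NameNode log before using forceExit" >&2
  return 1
}

# Example (run as the hdfs user):
#   try_leave_safemode || echo "manual intervention needed"
```

Keeping forceExit out of the script is a deliberate choice here: if a normal leave fails, the NameNode usually has a reason for it, and that reason is worth reading first.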

Can someone explain why Ambari / the NameNode can't leave safemode automatically? What could be the reason?

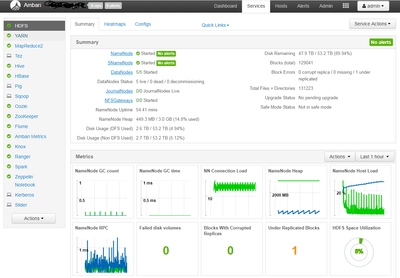

Here a screenshot of my HDFS overview on Ambari, after a successful start of the services (after forceExit):

Any help would be appreciated, thank you!

Created 11-15-2017 03:37 PM

I think your cluster is kerberized. The NameNode switches to safe mode because of a communication timeout with the KDC server. The error should appear in the logs under /var/log/hadoop-hdfs.

You should find a stack trace like the one below.

Caused by: javax.security.auth.login.LoginException: Receive timed out at com.sun.security.auth.module.Krb5LoginModule.attemptAuthentication(Krb5LoginModule.java:808) at com.sun.security.auth.module.Krb5LoginModule.login(Krb5LoginModule.java:617) at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62) at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) at java.lang.reflect.Method.invoke(Method.java:498) at javax.security.auth.login.LoginContext.invoke(LoginContext.java:755) at javax.security.auth.login.LoginContext.access$000(LoginContext.java:195) at

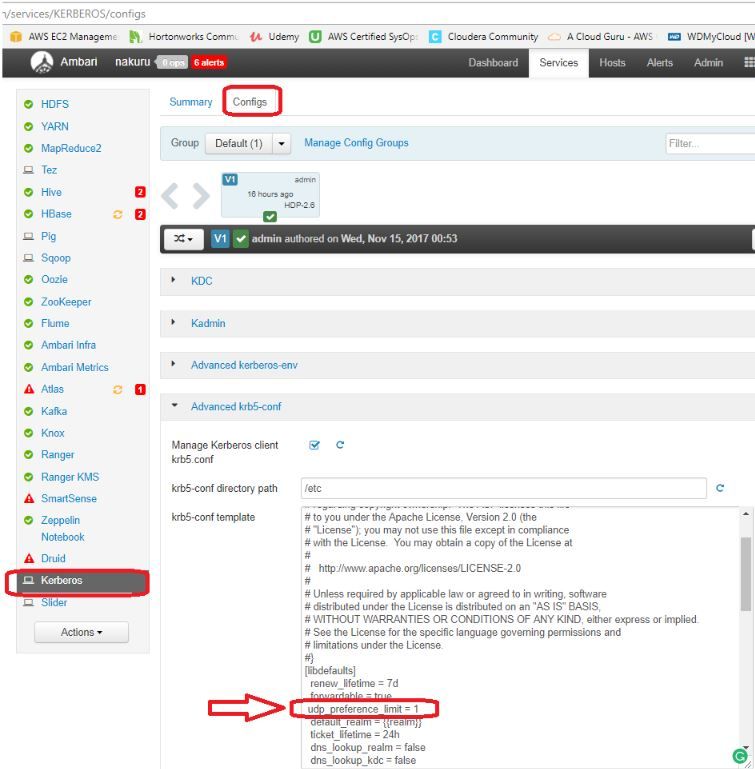

The solution to this problem is to add a line to krb5.conf under the [libdefaults] section: udp_preference_limit = 1

You shouldn't edit the local /etc/krb5.conf directly; instead, use the Ambari UI: go to Ambari > Kerberos > Configs > Advanced krb5-conf to make the change.

This ensures that the new setting is available on all nodes in the cluster. See the attached screenshot, then save and restart all required services.
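Once Ambari has pushed the change, it may be worth confirming on each node that the setting actually landed in the [libdefaults] section of the generated file. A small sketch for that check follows; the function name `krb5_udp_limit` is made up, and it assumes the file uses plain `[section]` headers and `key = value` lines.

```shell
# Prints the value of udp_preference_limit found under [libdefaults]
# in the krb5.conf-style file given as $1.
# Exits non-zero if the key is not present in that section.
krb5_udp_limit() {
  awk '
    # Any section header: remember whether we are inside [libdefaults].
    /^[ \t]*\[/ { in_lib = ($0 ~ /^[ \t]*\[libdefaults\]/); next }
    # Inside [libdefaults]: strip "key =" and print the value.
    in_lib && /^[ \t]*udp_preference_limit[ \t]*=/ {
      sub(/^[^=]*=[ \t]*/, "")
      print
      found = 1
    }
    END { exit (found ? 0 : 1) }
  ' "$1"
}

# Example:
#   krb5_udp_limit /etc/krb5.conf || echo "udp_preference_limit not set"
```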

Please let me know if that helped.

Created 11-16-2017 08:14 AM

Thank you for the fast answer! I added the property to my Kerberos settings in Ambari, but the problem still exists. Also, the /var/log/hadoop-hdfs directory on my NameNode host exists, but it is empty!

Created 08-24-2018 11:33 PM

Try this:

sudo -u hdfs hadoop dfsadmin -safemode leave

Created 08-27-2018 06:14 PM

@Daniel Muller, can you grep for "Safe mode is" in the HDFS NameNode log? That will show directly why the NameNode does not exit safemode.
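A rough sketch of that grep, wrapped so it only shows the most recent matches. The function name `safemode_reasons` is made up, and the log path in the example is an assumption: HDP installs often write the NameNode log under /var/log/hadoop/hdfs rather than /var/log/hadoop-hdfs, but the actual directory should be checked in the Ambari HDFS configs.

```shell
# Prints the last N lines (default 5) containing "Safe mode is"
# from the log file given as $1.
safemode_reasons() {
  grep 'Safe mode is' "$1" | tail -n "${2:-5}"
}

# Example (path is an assumption, adjust to your log directory):
#   safemode_reasons "/var/log/hadoop/hdfs/hadoop-hdfs-namenode-$(hostname).log"
```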