HDFS capacity is 0 but all DataNode are live

Labels: Apache Hadoop

Created on 07-05-2018 05:03 PM - edited 08-18-2019 01:00 AM


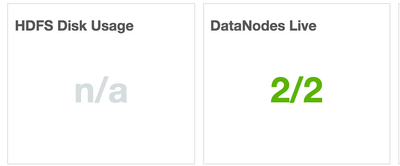

I have 2 DataNodes and both are live. However, the dashboard shows that the HDFS Disk Usage is n/a, and capacity is zero (see the screenshot).

I tried to put a file into HDFS with "hadoop fs -put hello.txt /" and got the following error:

File /hello.txt._COPYING_ could only be replicated to 0 nodes instead of minReplication (=1). There are 2 datanode(s) running and no node(s) are excluded in this operation.

I guess this means the NameNode knows about both DataNodes and that neither of them is excluded from the "-put" operation?

I have checked "dfs.datanode.data.dir", and it points to the correct directory, which has 500 GB of available space.

How can I resolve this issue?

Created 07-06-2018 06:10 AM


Hey @Yun Ding!

Could you check the output of the following commands?

hdfs dfs -du -h /

hdfs dfsadmin -report

lsblk

df -h
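If the "hdfs dfsadmin -report" output is long, a small script can pick out DataNodes that register as live but report no usable capacity. This is a minimal Python sketch, assuming each DataNode section of the report starts with a "Name:" line and contains a "Configured Capacity: <bytes> (...)" line; please compare against the report format of your own Hadoop version. SAMPLE_REPORT and the function names are hypothetical, and the sample text is made up for illustration, not output from this cluster.

```python
import re
from typing import Dict, List

# Synthetic example text, NOT real output from the cluster in this thread.
SAMPLE_REPORT = """\
Configured Capacity: 0 (0 B)
DFS Used: 0 (0 B)
-------------------------------------------------
Live datanodes (2):

Name: 10.0.0.1:50010 (dn1)
Configured Capacity: 0 (0 B)

Name: 10.0.0.2:50010 (dn2)
Configured Capacity: 0 (0 B)
"""


def datanode_capacities(report: str) -> Dict[str, int]:
    """Map each DataNode 'Name:' entry to its configured capacity in bytes."""
    capacities: Dict[str, int] = {}
    current = None  # DataNode section being read; None = cluster-wide summary
    for raw in report.splitlines():
        line = raw.strip()
        if line.startswith("Name:"):
            current = line[len("Name:"):].strip()
        elif current is not None:
            match = re.match(r"Configured Capacity:\s*(\d+)", line)
            if match:
                capacities[current] = int(match.group(1))
    return capacities


def zero_capacity_nodes(report: str) -> List[str]:
    """Names of DataNodes that report a configured capacity of 0 bytes."""
    return sorted(n for n, cap in datanode_capacities(report).items() if cap == 0)


if __name__ == "__main__":
    print(zero_capacity_nodes(SAMPLE_REPORT))
    # prints ['10.0.0.1:50010 (dn1)', '10.0.0.2:50010 (dn2)']
```

The cluster-wide summary at the top is skipped on purpose, so a 0 in the summary is not counted as a DataNode. In practice you would pass the captured text of "hdfs dfsadmin -report" instead of the sample.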

Also check the value of this parameter in Ambari:

dfs.datanode.du.reserved

PS: Just in case, also check the permissions on the dfs.datanode.data.dir directory; it should be owned by hdfs:hadoop.

Hope this helps!

Created 07-06-2018 03:07 PM


It was due to the permissions on the dfs.datanode.data.dir directory. Thanks!

Created 07-06-2018 03:56 PM


Good to know! 🙂

Created 01-13-2022 10:28 PM


Hi there,

Is it dfs_datanode_data_dir_perm?

What was your previous value for it when it couldn't write?