Support Questions

Hadoop daemons not running. (Ambari installation)

Labels: Apache Ambari, Apache Hadoop

Created 02-03-2016 12:20 PM

I have successfully installed the Ambari server manually, and I have installed most of the services listed in Ambari. However, I do not see the Hadoop daemons running. I can't even find a hadoop directory under /bin. I have the following questions:

1) When the Ambari server is set up on a CentOS machine, where does Ambari install Hadoop?

2) Under which folder is Hadoop installed?

3) Why are the Hadoop daemons not started automatically?

4) If Hadoop is not installed, what are the next steps?

Can someone please help? I have not found any documentation that helps me understand this.

Created 02-03-2016 12:21 PM

Look under /usr/hdp/current; you can see the files there.

You have to check the services from the Ambari console.
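A minimal sketch for looking at that layout from a shell, assuming the HDP 2.2+ convention of versioned directories under /usr/hdp/ plus a /usr/hdp/current/ directory of per-component symlinks. `HDP_ROOT` and `show_hdp_layout` are hypothetical names; `hdp-select status hadoop-client` is only attempted if the `hdp-select` tool that ships with HDP is on the PATH.

```shell
#!/usr/bin/env bash
# Minimal sketch: show where an Ambari-managed HDP install keeps its files.
# Assumption: HDP 2.2+ layout (versioned dirs under /usr/hdp/ plus
# /usr/hdp/current/ holding one symlink per component).
HDP_ROOT="${HDP_ROOT:-/usr/hdp}"   # hypothetical override for testing

show_hdp_layout() {
  if [ ! -d "$HDP_ROOT/current" ]; then
    echo "no $HDP_ROOT/current on this host; HDP packages may not be installed here" >&2
    return 1
  fi
  # Each entry should be a symlink into a versioned directory,
  # e.g. something like /usr/hdp/<version>/hadoop for hadoop-client.
  ls -l "$HDP_ROOT/current/"
  # hdp-select reports which stack version a component currently points at.
  if command -v hdp-select >/dev/null 2>&1; then
    hdp-select status hadoop-client
  fi
}

show_hdp_layout || true
```

The point is that Ambari installs Hadoop from packages under /usr/hdp/, not under /bin, so that is where to look for the Hadoop binaries and scripts.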

Created 02-03-2016 12:22 PM

@Pradeep kumar In my case, it's under /usr/hdp/:

    [root@sandbox usersync]# ls -l /usr/hdp/
    total 12
    drwxr-xr-x 39 root root 4096 2015-10-27 13:22 2.3.2.0-2950
    drwxr-xr-x 37 root root 4096 2016-02-01 11:49 2.3.4.0-3485
    drwxr-xr-x 2 root root 4096 2015-10-27 12:30 current
    [root@sandbox usersync]#

Created 02-03-2016 12:52 PM

Hi Naveen.

I cannot find the start-dfs.sh file anywhere under the /usr/hdp/ folder. I have checked the services in the Ambari dashboard, and I can see that the MapReduce service is working. The configuration section didn't help me much either. My biggest issue is that I can't confirm Hadoop is running on this server: the jps command doesn't work, and when I looked through the folders I didn't find start-dfs.sh.
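Even without jps, you can check for the daemons with plain `ps`. A minimal sketch, assuming the daemons run as JVMs whose command line contains the standard Apache Hadoop main class (for example `org.apache.hadoop.hdfs.server.namenode.NameNode`). `HADOOP_DAEMON_RE`, `filter_hadoop_daemons` and `find_hadoop_daemons` are hypothetical names, and the daemon list is illustrative rather than exhaustive.

```shell
#!/usr/bin/env bash
# Minimal sketch: list running Hadoop daemons without relying on jps.
# Assumption: each daemon's command line contains its Apache Hadoop main
# class, e.g. org.apache.hadoop.hdfs.server.namenode.NameNode.
HADOOP_DAEMON_RE='org\.apache\.hadoop\.[a-z0-9.]+\.(NameNode|SecondaryNameNode|DataNode|ResourceManager|NodeManager|JobHistoryServer)( |$)'

# Reads "user pid args" lines on stdin and keeps only Hadoop daemon JVMs.
filter_hadoop_daemons() {
  grep -E "$HADOOP_DAEMON_RE"
}

# The trailing "=" in user=,pid=,args= suppresses the header line.
find_hadoop_daemons() {
  ps -eo user=,pid=,args= | filter_hadoop_daemons
}

find_hadoop_daemons || echo "no Hadoop daemon processes found on this host" >&2
```

Splitting the filter from the `ps` call keeps the matching logic testable on sample lines. Note that each host only runs the daemons Ambari assigned to it, so an empty result on one node is not by itself a failure.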

Created on 02-03-2016 01:06 PM - edited 08-19-2019 03:19 AM

@Pradeep kumar Let's focus on Ambari.

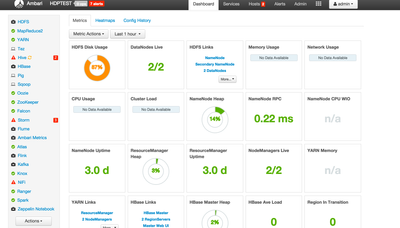

That's my Ambari dashboard. You need to click

Replace ambariserver with your Ambari host, and then you will have full control over starting and stopping the cluster.

The default login is admin/admin.
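The same start/stop control is also exposed through Ambari's REST API. A minimal sketch, assuming Ambari listens on its default port 8080, the default admin/admin login, and a cluster named `mycluster`; the cluster name, the environment variables and the function names are hypothetical placeholders to adjust. In Ambari, a stopped service reports the state `INSTALLED`, so that is the target state for "stop".

```shell
#!/usr/bin/env bash
# Minimal sketch: check and change a service's state via the Ambari REST API.
# Assumptions (hypothetical, adjust to your setup): port 8080,
# admin/admin credentials, cluster named "mycluster".
AMBARI_HOST="${AMBARI_HOST:-ambariserver}"
AMBARI_PORT="${AMBARI_PORT:-8080}"
CLUSTER="${CLUSTER:-mycluster}"
AMBARI_AUTH="${AMBARI_AUTH:-admin:admin}"

# $1 = service name as Ambari knows it, e.g. HDFS, YARN, MAPREDUCE2
service_url() {
  echo "http://${AMBARI_HOST}:${AMBARI_PORT}/api/v1/clusters/${CLUSTER}/services/$1"
}

# JSON body asking Ambari to move service $1 to state $2.
state_body() {
  printf '{"RequestInfo":{"context":"Set %s to %s"},"Body":{"ServiceInfo":{"state":"%s"}}}' "$1" "$2" "$2"
}

# Print the current state (STARTED, or INSTALLED when stopped).
service_state() {
  curl -s -u "$AMBARI_AUTH" "$(service_url "$1")?fields=ServiceInfo/state"
}

# $2 = STARTED to start the service, INSTALLED to stop it.
set_service_state() {
  curl -s -u "$AMBARI_AUTH" -H 'X-Requested-By: ambari' -X PUT \
    -d "$(state_body "$1" "$2")" "$(service_url "$1")"
}
```

For example, `service_state HDFS` shows whether HDFS is up, and `set_service_state HDFS STARTED` asks Ambari to start it. Ambari then runs the start in the background, as it does when you click Start in the dashboard.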

Created 02-03-2016 01:11 PM

Hi Naveen,

I think you have misunderstood my question. I am able to access the Ambari dashboard. I can see all the nodes in my cluster, and all of them are working fine. Even the MapReduce service is shown as working (green). My question is: how do I make sure Hadoop itself is working? How do I run a smoke test to check that Hadoop is working? This is where my problem started: at the command prompt, jps is not recognized as a valid command. So the problem is how to check whether the Hadoop daemons are running and the Hadoop system is in place.
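One way to answer "is HDFS actually working?" from the command line is a small round trip: ask for a cluster report, then write, read and delete a file. A minimal sketch, assuming you run it on a node with the HDFS client installed, as a user allowed to sudo to the HDFS service account (Ambari names it `hdfs` by default). `HDFS_USER`, `as_hdfs`, `hdfs_smoke_test` and the scratch path are hypothetical.

```shell
#!/usr/bin/env bash
# Minimal sketch of a manual HDFS smoke test: cluster report, then a
# write/read/delete round trip. Assumptions: HDFS client installed on this
# node, sudo rights to the "hdfs" account; the scratch path is hypothetical.
HDFS_USER="${HDFS_USER:-hdfs}"

as_hdfs() { sudo -u "$HDFS_USER" "$@"; }

hdfs_smoke_test() {
  local dir="/tmp/smoke_test_$$" local_file rc
  local_file=$(mktemp) || return 1
  echo "hello hdfs" > "$local_file"
  chmod 644 "$local_file"   # mktemp creates mode 600; the hdfs user must read it
  # A healthy cluster reports live DataNodes and non-zero configured capacity.
  as_hdfs hdfs dfsadmin -report &&
    as_hdfs hdfs dfs -mkdir -p "$dir" &&
    as_hdfs hdfs dfs -put "$local_file" "$dir/hello.txt" &&
    as_hdfs hdfs dfs -cat "$dir/hello.txt"
  rc=$?
  # Clean up the HDFS scratch directory and the local temp file either way.
  as_hdfs hdfs dfs -rm -r -skipTrash "$dir" >/dev/null 2>&1
  rm -f "$local_file"
  return "$rc"
}

if command -v hdfs >/dev/null 2>&1; then
  hdfs_smoke_test && echo "HDFS smoke test passed"
else
  echo "hdfs client not on PATH; run this on a cluster node" >&2
fi
```

If `hello hdfs` is printed back, the NameNode and at least one DataNode are serving reads and writes.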

Created 02-03-2016 01:14 PM

@Pradeep kumar Thank you for clarifying.

This will help a lot: http://docs.hortonworks.com/HDPDocuments/HDP2/HDP-2.3.4/bk_installing_manually_book/content/ch_getti...

Every component has its own smoke test.
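For YARN/MapReduce, a common smoke test is running the pi estimator from the examples jar that ships with Hadoop. A minimal sketch, assuming the HDP 2.x symlink path below (verify it on your host) and the `ambari-qa` account that Ambari normally creates for its own service checks; `EXAMPLES_JAR`, `SMOKE_USER` and `mapreduce_smoke_test` are hypothetical names.

```shell
#!/usr/bin/env bash
# Minimal sketch of a YARN/MapReduce smoke test using Hadoop's examples jar.
# Assumptions: HDP 2.x path below (check it on your host) and the ambari-qa
# smoke-test account that Ambari normally creates.
EXAMPLES_JAR="${EXAMPLES_JAR:-/usr/hdp/current/hadoop-mapreduce-client/hadoop-mapreduce-examples.jar}"
SMOKE_USER="${SMOKE_USER:-ambari-qa}"

mapreduce_smoke_test() {
  if [ ! -f "$EXAMPLES_JAR" ]; then
    echo "examples jar not found at $EXAMPLES_JAR" >&2
    return 1
  fi
  # Estimate pi with 2 map tasks of 10 samples each; finishing successfully
  # shows that YARN accepted, scheduled and ran a MapReduce job.
  sudo -u "$SMOKE_USER" yarn jar "$EXAMPLES_JAR" pi 2 10
}

mapreduce_smoke_test || echo "MapReduce smoke test did not complete" >&2
```

The job's accuracy does not matter here; a completed job with an estimated value of pi at the end is the signal that HDFS and YARN work together.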

Created 02-03-2016 01:15 PM

Created 02-03-2016 02:03 PM

Thanks, Neeraj. I have gone through the smoke test link you gave, and a few other documents as well, but they seem to confuse me further. Maybe I am not understanding the documents, and that is completely my mistake. But can you tell me, in one simple sentence, why I cannot find the jps command and why I cannot find the start-dfs.sh script? Please avoid giving any URLs if possible. Where is my understanding wrong?

Created 02-03-2016 02:07 PM

You can configure jps in your OS through the Java configuration; on RHEL that is the alternatives command. Then you can run jps as the hdfs user. start-dfs.sh is not necessary, because starting and stopping the daemons is controlled by Ambari. @Pradeep kumar
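A minimal sketch of the alternatives step, assuming jps comes from a full JDK (a JRE does not include it) found either via `JAVA_HOME` or under /usr/jdk64/, which is where Ambari's setup places the JDK it downloads. `find_jps` is a hypothetical helper; the script only prints the `alternatives` and `sudo` commands rather than running them, since they need root.

```shell
#!/usr/bin/env bash
# Minimal sketch: locate jps and print how to register it with RHEL/CentOS
# alternatives. Assumptions: a full JDK under $JAVA_HOME or /usr/jdk64/.
find_jps() {
  local candidate
  # ${JAVA_HOME:+...} expands to an empty word when JAVA_HOME is unset.
  for candidate in "${JAVA_HOME:+$JAVA_HOME/bin/jps}" /usr/jdk64/*/bin/jps; do
    if [ -n "$candidate" ] && [ -x "$candidate" ]; then
      echo "$candidate"
      return 0
    fi
  done
  return 1
}

if jps_path=$(find_jps); then
  echo "found jps at $jps_path"
  echo "to put it on PATH (as root): alternatives --install /usr/bin/jps jps $jps_path 1"
  echo "then list the HDFS JVMs:      sudo -u hdfs jps"
else
  echo "jps not found; install a JDK or set JAVA_HOME" >&2
fi
```

jps typically only reports JVMs it has permission to inspect, which is why running it as the hdfs user is the reliable way to see the HDFS daemons.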