Support Questions

- Cloudera Community

- Support

- Support Questions

- Re: Halting due to Out Of Memory Error...Exit code...

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Halting due to Out Of Memory Error...Exit code 255 - hive - tez - java heap - GC

- Labels:

-

Apache Hive

Created on 09-12-2021 07:36 AM - edited 09-12-2021 07:45 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello All,

Can some one please help me fix this OOM error||heap issue , while running query from hive

Exception: java.lang.OutOfMemoryError thrown from the UncaughtExceptionHandler in thread "client DomainSocketWatcher"

-----------

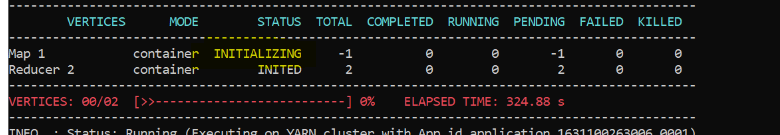

Map1 - stays in initializing state and not moving to running state with OOM error

ERROR:

java.sql.SQLExceptionPyRaisable: java.sql.SQLException: Error while processing statement: FAILED: Execution Error, return code 2 from org.apache.hadoop.hive.ql.exec.tez.TezTask. Application application_1616507270167_1067 failed 2 times due to AM Container for appattempt_1616507270167_1067_000002 exited with exitCode: 255

Failing this attempt.Diagnostics: [2021-09-07 09:49:05.213]Exception from container-launch.

Container exited with a non-zero exit code 255. Error file: prelaunch.err.

Exit code: 255

--------------------------------------------------------------------------

java.sql.SQLExceptionPyRaisable Traceback (most recent call last)

/opt/conda/lib/python3.6/site-packages/jaydebeapi/__init__.py in execute(self, operation, parameters)

500 try:

--> 501 is_rs = self._prep.execute()

502 except:

--------------------------

--------

[Eden: 0.0B(163.0M)->0.0B(163.0M) Survivors: 0.0B->0.0B Heap: 3275.3M(3276.0M)->3275.3M(3276.0M)]

[Times: user=0.01 sys=0.00, real=0.00 secs]

326.881: [Full GC (Allocation Failure)

--------------------

exec /bin/bash -c "$JAVA_HOME/bin/java -Xmx3276m -Djava.io.tmpdir=$PWD/tmp -server

-------

config

-XX:+PrintGCDetails -verbose:gc -XX:+PrintGCTimeStamps -XX:+UseNUMA -XX:+UseG1GC -XX:+ResizeTLAB{{heap_dump_opts}}

Hive - used for querying

Tez - application type

Yarn - scheduler

Created 09-14-2021 04:21 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @manojamr

Step 1: Could you run the following commands to gather column statistics for all the table that is involved in the query.

analyze table <TABLE-NAME> compute statistics;

analyze table <TABLE-NAME> compute statistics for columns;

Reference: https://cwiki.apache.org/confluence/display/Hive/StatsDev

Step 2: set the following property in session level

set hive.tez.container.size=10240 ;

set hive.tez.java.opts=-Xmx8192m;

set tez.runtime.io.sort.mb=4096;

set tez.task.resource.memory.mb=7680;

set tez.am.resource.memory.mb=10240;

set tez.am.launch.cmd-opts=-Xmx8192m;

If Step2 got succes ignore step 3.

Step 3:

Re-run the job at the beeline session-level.

If the job fails again, I would request the below details.

1. Complete query,

2. Beeline console output,

3. QueryId of the job

4. HS2 and HMS logs and

5. Application logs.

Created 09-14-2021 04:21 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @manojamr

Step 1: Could you run the following commands to gather column statistics for all the table that is involved in the query.

analyze table <TABLE-NAME> compute statistics;

analyze table <TABLE-NAME> compute statistics for columns;

Reference: https://cwiki.apache.org/confluence/display/Hive/StatsDev

Step 2: set the following property in session level

set hive.tez.container.size=10240 ;

set hive.tez.java.opts=-Xmx8192m;

set tez.runtime.io.sort.mb=4096;

set tez.task.resource.memory.mb=7680;

set tez.am.resource.memory.mb=10240;

set tez.am.launch.cmd-opts=-Xmx8192m;

If Step2 got succes ignore step 3.

Step 3:

Re-run the job at the beeline session-level.

If the job fails again, I would request the below details.

1. Complete query,

2. Beeline console output,

3. QueryId of the job

4. HS2 and HMS logs and

5. Application logs.

Created 09-15-2021 04:57 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Shifu - Thanks for the inputs

Finally by modifying these parameters job started running

set tez.am.java.opts=-Xmx14745m;

set tez.am.resource.memory.mb=16384;

However this hive-tez job is taking almost 9.5 hours to get the query executed completely .

Total number of records in the table approx (1.2 billion) - 1137722667

Below is the query used . If you have any suggestion which could improve the query execution time

that would be great

-----------------------------------------------------------------

select station_id, parameter_name, unit_name, statistic_name, latitude, longitude, from_unixtime(UNIX_TIMESTAMP(observed_date, "yyyy-MM-dd'T'HH:mm:ss'Z'"),"yyyy-MM-dd") as observed_date, avg(value) as value from today_data.price where parameter_name= 'AIR_TEMPERATURE' and unit_name = 'DEGREES_CELSIUS' and statistic_name='MEAN' and year(from_unixtime(UNIX_TIMESTAMP(observed_date, "yyyy-MM-dd'T'HH:mm:ss'Z'"),"yyyy-MM-dd HH:mm:ss")) = 2021 group by station_id, parameter_name, unit_name, statistic_name, latitude, longitude, from_unixtime(UNIX_TIMESTAMP(observed_date, "yyyy-MM-dd'T'HH:mm:ss'Z'"),"yyyy-MM-dd");

Created 09-17-2021 06:56 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @manojamr

I am glad to know your original issue got resolved.

As per your last comment, your Query took 9.5 hours to get complete.

In this case, we may need to check whether there is a delay or hungriness, or resource crunch or it is normal.

To figure out that we may need beeline console output, QueryId, Application log, all HS2 and HMS logs. It would be great if you create a case with Cloudera so we would be happy to assist you.

If you are happy with the reply, mark it Accept as Solution