Cloudera Community: Support Questions


Hands-on Spark Tutorial: Permission Denied and no such file or directory

Labels: Apache Spark

Created on 12-22-2015 11:21 PM - edited 08-19-2019 05:27 AM

I was able to download and start the sandbox. I also changed the root user's password, and I can navigate to http://127.0.0.1:8088/ and see the Hadoop cluster. Now I am trying to work through the following tutorial:

http://hortonworks.com/blog/hands-on-tour-of-apach...

but I am running into permission issues and messages like "No such file or directory".

I was able to run this command and download the data:

wget http://en.wikipedia.org/wiki/Hortonworks

but when I run the next step:

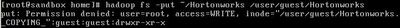

hadoop fs -put ~/Hortonworks /user/guest/Hortonworks

I get the following message:

When I run hadoop fs -ls, I get a message saying that no such file or directory exists:

I then tried to create the /home/root directory, but that failed too:

Any thoughts on what may be happening here?

Thanks

Created 12-23-2015 02:04 AM

Do this:

sudo su - hdfs

hdfs dfs -mkdir /user/root

hdfs dfs -chown root:hdfs /user/root

exit

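Then run your hdfs commands again as root. With no path argument, hdfs dfs -ls lists your HDFS home directory (/user/root when running as root), which is why it reported "No such file or directory" before that directory existed.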
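The same steps can be wrapped in a small reusable shell function. This is a minimal sketch, assuming the HDFS superuser account is named hdfs (as in the answer above) and that the current user can run sudo; the function name create_hdfs_home is hypothetical, not a Hadoop command. It swaps the interactive su - hdfs session for sudo -u hdfs, and uses -mkdir -p so that re-running it on an existing directory does not fail.

```shell
#!/usr/bin/env bash
# Sketch only: create /user/<name> in HDFS and hand it over to that user.
# Assumes the HDFS superuser account is "hdfs" and that sudo is available.
# create_hdfs_home is a hypothetical helper name.

create_hdfs_home() {
  local user="$1"
  local group="${2:-hdfs}"   # default group matches the chown in the answer
  local home="/user/${user}"

  # -mkdir -p succeeds even if the directory already exists
  sudo -u hdfs hdfs dfs -mkdir -p "$home" || return 1
  # Make the user the owner so they can write into their home directory
  sudo -u hdfs hdfs dfs -chown "${user}:${group}" "$home"
}

# Example (run as a user with sudo rights):
#   create_hdfs_home root
```

Running it once per local user (for example create_hdfs_home guest) gives that user a writable HDFS home directory.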

Created 12-23-2015 02:33 AM

Thanks Neeraj. That worked. Appreciate it.

Created 12-31-2015 09:02 PM

Specifically for /user/guest/Hortonworks, do this:

sudo su - hdfs

hadoop fs -chmod -R 777 /user/guest

exit

Created 03-03-2016 02:35 AM

In the Sandbox, /user/guest does not actually exist. You can create /user/guest/ using the following command:

hadoop fs -mkdir /user/guest/

Alternatively, you can use /tmp directory:

hadoop fs -put ~/Hortonworks /tmp

The tutorial has been updated to use the /tmp directory to simplify the flow.
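Both options can be combined into a single upload step. This is a minimal sketch that checks whether the target directory exists with hadoop fs -test -d and otherwise falls back to /tmp; the function name put_with_fallback is hypothetical, and the fallback behavior is an illustrative choice rather than what the tutorial itself does.

```shell
#!/usr/bin/env bash
# Sketch only: upload a local file into an HDFS directory, falling back
# to /tmp when that directory does not exist.
# put_with_fallback is a hypothetical helper name.

put_with_fallback() {
  local src="$1"        # local file, e.g. ~/Hortonworks
  local dest_dir="$2"   # HDFS directory, e.g. /user/guest

  # -test -d exits 0 only if the path exists and is a directory
  if hadoop fs -test -d "$dest_dir"; then
    hadoop fs -put "$src" "$dest_dir/"
  else
    echo "$dest_dir does not exist in HDFS; uploading to /tmp instead" >&2
    hadoop fs -put "$src" /tmp/
  fi
}

# Example:
#   put_with_fallback ~/Hortonworks /user/guest
```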

Created 03-03-2016 04:59 AM

The tutorial needs to be fixed.

Created 03-03-2016 05:02 AM

It is best to use the ambari-qa user. This is a special user with super powers 🙂

su ambari-qa

Overall, please take the time to understand the Hadoop security model, especially user permissions, which work mostly like Unix permissions. The service accounts (hdfs, yarn, etc.) are part of the hadoop group.

Spend some time on the Hadoop HDFS section; it will improve your understanding.
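To see the Unix-like permission model in practice, two standard HDFS shell commands show who owns a path and which groups HDFS resolves for a user. This is a sketch: the function name show_hdfs_access is hypothetical, the user and path in the example are simply the ones from this thread, and the -d flag of -ls may not be available on older Hadoop releases.

```shell
#!/usr/bin/env bash
# Sketch only: show HDFS ownership/mode of a path and the groups HDFS
# resolves for a user. show_hdfs_access is a hypothetical helper name.

show_hdfs_access() {
  local user="$1"   # e.g. root
  local path="$2"   # e.g. /user/root

  # -d lists the directory entry itself (mode bits, owner, group),
  # not its contents
  hdfs dfs -ls -d "$path"
  # Group membership as seen by HDFS, which is what permission checks use
  hdfs groups "$user"
}

# Example:
#   show_hdfs_access root /user/root
```

Comparing the owner and group printed for a path with the groups listed for a user shows which of the owner, group, or other permission bits apply to that user.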