Having Spark 1.6.0 and 2.1 in the same CDH

Labels: Apache Spark

Created on 07-21-2017 01:16 PM - edited 09-16-2022 04:58 AM


Hi Guys,

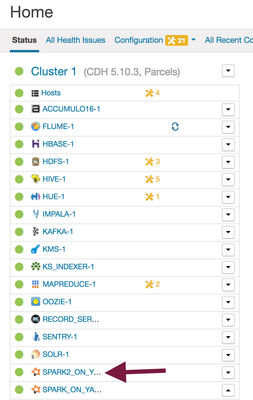

I'm planning to upgrade my CDH version to 5.10.2, and some of our developers need Spark 2.1 for Spark Streaming.

I'm planning to manage the two versions using Cloudera Manager: 1.6 will be the integrated one, and Spark 2.1 will be installed from parcels.

My questions:

1- Should I use Spark 2 as a service? Will I be able to have two Spark services, the regular one and the Spark 2.1 one?

2- Is it preferable to install the Spark 2 roles and gateways on the same servers as the regular ones? I assume the history server and the Spark server can be on different servers, with a different port for the history server. What will it look like when I add two gateways on the same DN?

3- Is it complicated to manage?

4- Is there a way the two versions could conflict and affect the current Spark jobs?


Created 07-25-2017 09:57 AM


Created 07-25-2017 11:40 AM


Yes

Created 07-26-2017 06:05 AM


    [root@aopr-dhc001 bin]# spark2-shell
    Exception in thread "main" java.lang.NoClassDefFoundError: org/apache/hadoop/fs/FSDataInputStream
        at org.apache.spark.deploy.SparkSubmitArguments$$anonfun$mergeDefaultSparkProperties$1.apply(SparkSubmitArguments.scala:118)
        at org.apache.spark.deploy.SparkSubmitArguments$$anonfun$mergeDefaultSparkProperties$1.apply(SparkSubmitArguments.scala:118)
        at scala.Option.getOrElse(Option.scala:121)
        at org.apache.spark.deploy.SparkSubmitArguments.mergeDefaultSparkProperties(SparkSubmitArguments.scala:118)
        at org.apache.spark.deploy.SparkSubmitArguments.<init>(SparkSubmitArguments.scala:104)
        at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:119)
        at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)
    Caused by: java.lang.ClassNotFoundException: org.apache.hadoop.fs.FSDataInputStream
        at java.net.URLClassLoader.findClass(URLClassLoader.java:381)
        at java.lang.ClassLoader.loadClass(ClassLoader.java:424)
        at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:331)
        at java.lang.ClassLoader.loadClass(ClassLoader.java:357)
        ... 7 more

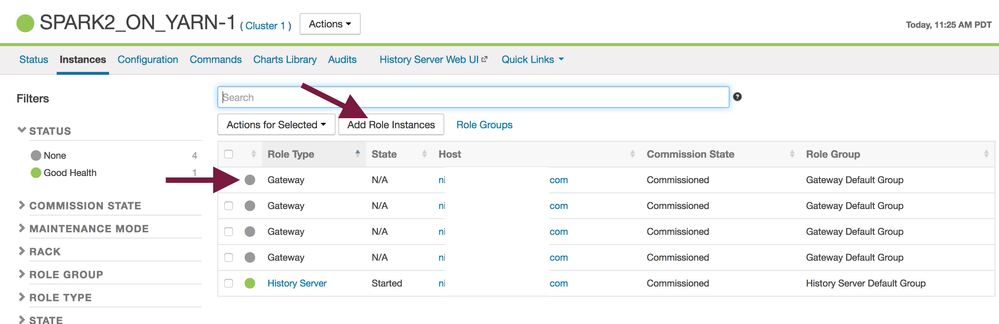

This error is almost always the result of not having the Spark 2 gateway role installed on the host from which you're running spark2-shell (CM > Spark2 > Instances). I'd also double-check that the client configuration is correctly deployed (CM > Cluster Name drop-down menu > Deploy Client Configuration).

Created 07-26-2017 06:49 AM


Created 07-26-2017 07:52 AM


I assume you meant that the Spark2 gateway is deployed on all nodes (?)

Please share the output of the following command from aopr-dhc001 (the gateway node):

alternatives --display spark2-conf

and a screenshot of CM > Hosts > All Hosts > aopr-dhc001 > Expand Roles (showing the Spark2 gateway role).

Created 07-26-2017 11:08 AM


    spark2-conf - status is auto.
     link currently points to /liveperson/hadoop/parcels/SPARK2-2.1.0.cloudera1-1.cdh5.7.0.p0.120904/etc/spark2/conf.dist
    /liveperson/hadoop/parcels/SPARK2-2.1.0.cloudera1-1.cdh5.7.0.p0.120904/etc/spark2/conf.dist - priority 10
    Current `best' version is /liveperson/hadoop/parcels/SPARK2-2.1.0.cloudera1-1.cdh5.7.0.p0.120904/etc/spark2/conf.dist.

=================

aopr-dhc001.lpdomain.com 10.16.144.131 9 Role(s)

HDFS DataNode

HDFS Gateway

Impala Daemon

Kudu Tablet Server

Spark (Standalone) Gateway

Spark (Standalone) Worker

Spark Gateway

YARN (MR2 Included) Gateway

YARN (MR2 Included) NodeManager

Created 07-26-2017 11:49 AM


The roles show only the Spark gateway for aopr-dhc001 (and not the Spark2 gateway)! What you really need is a Spark2 Gateway (to invoke spark2-shell). Assuming you are using Cloudera Manager to manage your environment, please navigate to CM > Spark2 > Instances > Add Role Instances, add "aopr-dhc001" as a gateway, and then deploy the client configuration.

I have attached a few screenshots from my lab to clarify what I mean.

After installing the Spark2 gateway and deploying client configurations, your alternatives will automatically point to /etc/spark2/conf (required for running spark2-shell)

    # alternatives --display spark2-conf
    spark2-conf - status is auto.
     link currently points to /etc/spark2/conf.cloudera.spark2_on_yarn
    /opt/cloudera/parcels/SPARK2-2.2.0.cloudera1-1.cdh5.12.0.p0.142354/etc/spark2/conf.dist - priority 10
    /etc/spark2/conf.cloudera.spark2_on_yarn - priority 51
    Current `best' version is /etc/spark2/conf.cloudera.spark2_on_yarn.

Hope this helps.
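
For anyone who wants to check this from a script, the reading of the `alternatives` output described above could be sketched roughly as follows. The function name `spark2_conf_state` and its three labels are made up for illustration. The only assumption taken from this thread is that a link ending in `conf.dist` is the parcel default (no client configuration deployed), while any other target, such as `/etc/spark2/conf.cloudera.spark2_on_yarn`, is a deployed client configuration.

```shell
# Hypothetical helper: classify the spark2-conf alternative.
# Reads the text of `alternatives --display spark2-conf` on stdin, e.g.
#   alternatives --display spark2-conf | spark2_conf_state
spark2_conf_state() {
  # Take the absolute path that follows "link currently points to".
  spark2_conf_target=$(awk '/link currently points to/ && $NF ~ /^\// { print $NF; exit }')
  case "$spark2_conf_target" in
    "")          echo "unknown" ;;         # no link line found in the input
    */conf.dist) echo "parcel-default" ;;  # gateway / client config likely missing
    *)           echo "client-config" ;;   # points at a deployed configuration
  esac
}
```

A result of `parcel-default` on a host is a hint to look at the gateway role and the client configuration deployment for that host; it is not proof of anything on its own.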

Created 07-26-2017 01:39 PM


Something is going wrong and I may have missed it.

The service I added to the cluster is called Spark (Standalone); I don't see it as Spark2 on YARN.

Also, when I navigate to the history server, it shows the version as Spark 1.6.

Created 07-26-2017 01:52 PM


The parcels are distributed.

What am I missing?

See below:

    [root@aopr-dhc001 parcels]# ll
    total 20
    lrwxrwxrwx 1 cloudera-scm cloudera-scm 27 Jul 25 00:52 CDH -> CDH-5.10.2-1.cdh5.10.2.p0.5
    drwxr-xr-x 11 cloudera-scm cloudera-scm 4096 Jun 27 01:39 CDH-5.10.2-1.cdh5.10.2.p0.5
    lrwxrwxrwx 1 cloudera-scm cloudera-scm 33 Jul 25 00:52 GPLEXTRAS -> GPLEXTRAS-5.10.2-1.cdh5.10.2.p0.5
    drwxr-xr-x 4 cloudera-scm cloudera-scm 4096 Jun 27 01:52 GPLEXTRAS-5.10.2-1.cdh5.10.2.p0.5
    drwxr-xr-x 9 cloudera-scm cloudera-scm 4096 Nov 22 2016 IMPALA_KUDU-2.7.0-3.cdh5.9.0.p0.10
    lrwxrwxrwx 1 cloudera-scm cloudera-scm 28 Jul 25 00:54 KUDU -> KUDU-1.3.0-1.cdh5.11.0.p0.12
    drwxr-xr-x 9 cloudera-scm cloudera-scm 4096 Apr 12 17:37 KUDU-1.3.0-1.cdh5.11.0.p0.12
    lrwxrwxrwx 1 cloudera-scm cloudera-scm 43 Jul 25 01:01 SPARK2 -> SPARK2-2.1.0.cloudera1-1.cdh5.7.0.p0.120904
    drwxr-xr-x 6 cloudera-scm cloudera-scm 4096 Mar 29 02:57 SPARK2-2.1.0.cloudera1-1.cdh5.7.0.p0.120904

=====================================================================

    [root@aovr-cmc101 ~]# cd /opt/cloudera/csd/
    [root@aovr-cmc101 csd]# ll
    total 36
    -rwxrwxrwx 1 fawzea noc 19899 Nov 7 2016 KUDU-1.0.0.jar
    -rw-r--r-- 1 cloudera-scm cloudera-scm 16109 Jul 25 00:43 SPARK2_ON_YARN-2.1.0.cloudera1.jar
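
One way to read a parcel directory listing like the one in this post: as far as I know, the short product-name symlink (for example `SPARK2 -> SPARK2-2.1.0...`) appears when a parcel is activated, so a versioned directory without such a link (`IMPALA_KUDU-...` here) would be distributed but not active. Treat that as an assumption rather than documented behavior. The helper name `activated_parcels` below is hypothetical; it simply prints the symlink names found in `ls -l` output.

```shell
# Hypothetical helper: list product names that have a symlink in `ls -l` output.
# Reads the listing on stdin, one entry per line, e.g.
#   ls -l /opt/cloudera/parcels | activated_parcels
activated_parcels() {
  # Symlink entries start with "l"; the link name is the field before "->".
  awk '$1 ~ /^l/ { for (i = 2; i <= NF; i++) if ($i == "->") print $(i - 1) }'
}
```

The parcels path in the usage comment is only an example; the cluster in this thread keeps its parcels under a different directory.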

Created 07-26-2017 01:58 PM


If not, then Cloudera Manager will not pick up the CSD file and Spark 2 will not be available as a service to install.

If you have, then for the cluster with the parcels distributed and activated, choose 'Add a Service' from the cluster action menu. Is it available in that list of services?
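
As a rough check on the CSD side, and going from memory of Cloudera's Spark 2 installation steps (so treat both points as assumptions): the descriptor jar in `/opt/cloudera/csd` is expected to be owned by `cloudera-scm:cloudera-scm` with mode 644, and the Cloudera Manager Server needs a restart before it reads a newly added CSD. The sketch below covers only the first point. The function name `csd_permission_issues` is hypothetical, and a flagged jar is a deviation from that recommendation, not necessarily the cause of a failure.

```shell
# Hypothetical helper: flag CSD jars whose owner, group, or mode differs from
# cloudera-scm:cloudera-scm and rw-r--r--.
# Reads `ls -l` output on stdin, one entry per line, e.g.
#   ls -l /opt/cloudera/csd | csd_permission_issues
csd_permission_issues() {
  awk '$NF ~ /\.jar$/ &&
       ($1 !~ /^-rw-r--r--/ || $3 != "cloudera-scm" || $4 != "cloudera-scm") {
         print $NF   # file name of the jar that deviates
       }'
}
```

If nothing is printed, ownership and mode match the assumed recommendation, which leaves the server restart as the next thing to confirm.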