Hive query failed with java.io.IOException: Cannot find password option fs.s3a.bucket.sg-cdp.fs.s3a.server-side-encryption-algorithm

Created 05-23-2023 02:48 PM

Hi, I am running CDH 7.1.7p0. I have an external ORC database on an S3 bucket. I did not configure fs.s3a.server-side-encryption-algorithm in Hadoop's core-site.xml. However, I randomly get this error when running Hive queries. Rerunning the same query may succeed or may fail with the same error message.

ERROR : Vertex failed, vertexName=Map 14, vertexId=vertex_1684599244994_0012_7_02, diagnostics=[Vertex vertex_1684599244994_0012_7_02 [Map 14] killed/failed due to:ROOT_INPUT_INIT_FAILURE, Vertex Input: ss1 initializer failed, vertex=vertex_1684599244994_0012_7_02 [Map 14], java.lang.RuntimeException: ORC split generation failed with exception: java.lang.RuntimeException: java.io.IOException: Cannot find password option fs.s3a.bucket.sg-cdp.fs.s3a.server-side-encryption-algorithm

The database and queries are part of the Hive benchmark tool from GitHub:

https://github.com/hortonworks/hive-testbench

Can you help me resolve this problem? Thank you.

Here are the s3a settings in core-site.xml; as you can see, there is no encryption-related setting.

<name>fs.s3a.endpoint</name>

<name>fs.s3a.access.key</name>

<name>fs.s3a.secret.key</name>

<name>fs.s3a.impl</name>

<value>org.apache.hadoop.fs.s3a.S3AFileSystem</value>

<name>fs.s3a.path.style.access</name>

<name>fs.s3a.connection.ssl.enabled</name>

<name>fs.s3a.ssl.channel.mode</name>

<name>fs.s3a.threads.max</name>

<name>fs.s3a.connection.maximum</name>

<name>fs.s3a.retry.limit</name>

<name>fs.s3a.retry.interval</name>

<name>fs.s3a.committer.name</name>

<name>fs.s3a.committer.magic.enabled</name>

<name>fs.s3a.committer.threads</name>

<name>fs.s3a.committer.staging.unique-filenames</name>

<name>fs.s3a.committer.staging.conflict-mode</name>
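The claim that no encryption-related setting is present can be checked mechanically rather than by eye. The following is a minimal sketch, assuming the file follows the usual Hadoop layout of `<property>` elements with `<name>` and `<value>` children. The keyword list, the function name `find_relevant_properties`, and the sample XML are illustrative assumptions, not taken from the poster's cluster. The `credential.provider` keyword is included because the stack trace posted later in this thread passes through Hadoop's credential provider lookup.

```python
import xml.etree.ElementTree as ET

# Substrings that mark a property as relevant to this error. This list is an
# assumption made for the sketch and is not exhaustive.
KEYWORDS = ("encryption", "credential.provider")


def find_relevant_properties(xml_text):
    """Return {name: value} for properties whose name contains a keyword."""
    root = ET.fromstring(xml_text)
    found = {}
    for prop in root.iter("property"):
        name = (prop.findtext("name") or "").strip()
        value = (prop.findtext("value") or "").strip()
        if any(keyword in name for keyword in KEYWORDS):
            found[name] = value
    return found


# Made-up sample input; a real check would read the deployed core-site.xml.
SAMPLE = """
<configuration>
  <property>
    <name>fs.s3a.impl</name>
    <value>org.apache.hadoop.fs.s3a.S3AFileSystem</value>
  </property>
  <property>
    <name>hadoop.security.credential.provider.path</name>
    <value>localjceks://file/tmp/example.jceks</value>
  </property>
</configuration>
"""

if __name__ == "__main__":
    for name, value in find_relevant_properties(SAMPLE).items():
        print(f"{name} = {value}")
```

Running the same function against the configuration that the failing process actually loads (which may differ from the file edited by hand) would show whether a credential provider path is being injected by the service.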

Created on 06-02-2023 06:06 AM - edited 06-02-2023 06:07 AM

Sorry about the typo in the article. It says:

"Uncomment the property "Generate HADOOP_CREDSTORE_PASSWORD" from Hive service and Hive on Tez service. This is the flag to enable or disable the generation of HADOOP_CREDSTORE_PASSWORD (generate_jceks_password)."

The word should be "uncheck", not "uncomment". So please uncheck "Generate HADOOP_CREDSTORE_PASSWORD" in Hive and Hive on Tez, save, and restart Hive and Hive on Tez.

Created 05-23-2023 03:11 PM

@ac-ntap Welcome to the Cloudera Community!

To help you get the best possible solution, I have tagged our CDP experts @venkatsambath and @zzeng, who may be able to assist you further.

Please keep us updated on your post, and we hope you find a satisfactory solution to your query.

Regards,

Diana Torres, Senior Community Moderator

Created 05-23-2023 03:55 PM

Thank you, Diana. I will wait for @venkatsambath and @zzeng to suggest how to resolve this issue.

Created 05-23-2023 04:35 PM

@ac-ntap Can you check the steps in the article https://my.cloudera.com/knowledge/How-to-configure-HDFS-and-Hive-to-use-different-JCEKS-and?id=32605... and let me know if that helps?

Created 05-23-2023 04:55 PM

Hi, since I am running a TPC-DS test rather than a production workload, I am only using these two entries in core-site.xml to specify the access key and secret key.

<name>fs.s3a.access.key</name>

<name>fs.s3a.secret.key</name>

Using the above method for credentials, I am able to use Hive to create databases and tables and to load data.

Currently I can list the databases and tables in the S3 bucket. The databases listed below were created by Hive when I ran tpcds-setup.sh.

[root@ce-n3 sample-queries-tpcds]# hdfs dfs -ls s3a://sg-cdp/

Found 4 items

drwxrwxrwx - hdfs hdfs 0 2023-05-23 19:48 s3a://sg-cdp/external

drwxrwxrwx - hdfs hdfs 0 2023-05-23 19:48 s3a://sg-cdp/managed

drwxrwxrwx - hdfs hdfs 0 2023-05-23 19:48 s3a://sg-cdp/tpcds-generate

drwxrwxrwx - hdfs hdfs 0 2023-05-23 19:48 s3a://sg-cdp/user

[root@ce-n3 sample-queries-tpcds]# hdfs dfs -ls s3a://sg-cdp/external

Found 3 items

drwxrwxrwx - hdfs hdfs 0 2023-05-23 19:48 s3a://sg-cdp/external/m1.db

drwxrwxrwx - hdfs hdfs 0 2023-05-23 19:48 s3a://sg-cdp/external/tpcds_bin_partitioned_external_orc_2.db

drwxrwxrwx - hdfs hdfs 0 2023-05-23 19:48 s3a://sg-cdp/external/tpcds_text_2.db

Is configuring S3 credentials using JCEKS a must?

I run into this problem only occasionally, when running queries that retrieve data:

Cannot find password option fs.s3a.bucket.sg-cdp.fs.s3a.server-side-encryption-algorithm

1) I did not configure any encryption, so why is it expecting a password?

2) If Hive is expecting a password, then all Hive queries that access the S3 bucket should fail. However, it fails only randomly, and rerunning the exact same query a few times will eventually succeed.

Appreciate your help.

Created 05-24-2023 06:20 AM

Since the issue is intermittent, it is not yet clear what is triggering the problem. Using JCEKS is not mandatory.

a. Do you see any pattern in the failures, for example the issue happening only when the failing task runs on a particular node?

b. How are you managing the edited core-site.xml? Is it through Cloudera Manager? If so, which safety valve did you use?

c. Can you attach the complete application log for the failed run and successful run?

Created 05-24-2023 07:06 AM

d. Additionally, please try disabling "Generate HADOOP_CREDSTORE_PASSWORD" in CM > Hive and Hive on Tez > Configuration, just to make sure the JCEKS generated by Hive is not interfering with the credentials you use.

Created 05-24-2023 12:45 PM

There is no pattern to when the problem is triggered. I have a script that runs all 99 TPC-DS queries; 15 ran successfully, while the others failed with this password error.

I modify all settings via the Cloudera Manager GUI. I did not manually edit core-site.xml, hive-site.xml, or tez-site.xml.

I unchecked the 'generate_jceks_password' setting for Hive and Hive on Tez in Cloudera Manager (it was checked by default) and restarted both of them, but I still get this error randomly.

I also tried the procedure in https://my.cloudera.com/knowledge/How-to-configure-HDFS-and-Hive-to-use-different-JCEKS-and?id=32605... but I had to uncheck 'generate_jceks_password' in order to get the Hive Metastore up. If it is checked, the Hive Metastore goes down because the Hive canary test fails. Again, the password error returns randomly.

Here is the log message:

ERROR : Vertex failed, vertexName=Map 1, vertexId=vertex_1684599244994_0003_6_18, diagnostics=[Vertex vertex_1684599244994_0003_6_18 [Map 1] killed/failed due to:ROOT_INPUT_INIT_FAILURE, Vertex Input: reason initializer failed, vertex=vertex_1684599244994_0003_6_18 [Map 1], java.lang.RuntimeException: ORC split generation failed with exception: java.lang.RuntimeException: java.io.IOException: Cannot find password option fs.s3a.bucket.sg-cdp.fs.s3a.server-side-encryption-algorithm

at org.apache.hadoop.hive.ql.io.orc.OrcInputFormat.generateSplitsInfo(OrcInputFormat.java:1943)

at org.apache.hadoop.hive.ql.io.orc.OrcInputFormat.getSplits(OrcInputFormat.java:2030)

at org.apache.hadoop.hive.ql.io.HiveInputFormat.addSplitsForGroup(HiveInputFormat.java:542)

at org.apache.hadoop.hive.ql.io.HiveInputFormat.getSplits(HiveInputFormat.java:850)

at org.apache.hadoop.hive.ql.exec.tez.HiveSplitGenerator.initialize(HiveSplitGenerator.java:250)

at org.apache.tez.dag.app.dag.RootInputInitializerManager.lambda$runInitializer$3(RootInputInitializerManager.java:203)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1898)

at org.apache.tez.dag.app.dag.RootInputInitializerManager.runInitializer(RootInputInitializerManager.java:196)

at org.apache.tez.dag.app.dag.RootInputInitializerManager.runInitializerAndProcessResult(RootInputInitializerManager.java:177)

at org.apache.tez.dag.app.dag.RootInputInitializerManager.lambda$createAndStartInitializing$2(RootInputInitializerManager.java:171)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

at com.google.common.util.concurrent.TrustedListenableFutureTask$TrustedFutureInterruptibleTask.runInterruptibly(TrustedListenableFutureTask.java:125)

at com.google.common.util.concurrent.InterruptibleTask.run(InterruptibleTask.java:69)

at com.google.common.util.concurrent.TrustedListenableFutureTask.run(TrustedListenableFutureTask.java:78)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:750)

Caused by: java.util.concurrent.ExecutionException: java.lang.RuntimeException: java.io.IOException: Cannot find password option fs.s3a.bucket.sg-cdp.fs.s3a.server-side-encryption-algorithm

at java.util.concurrent.FutureTask.report(FutureTask.java:122)

at java.util.concurrent.FutureTask.get(FutureTask.java:192)

at org.apache.hadoop.hive.ql.io.orc.OrcInputFormat.generateSplitsInfo(OrcInputFormat.java:1882)

... 18 more

Caused by: java.lang.RuntimeException: java.io.IOException: Cannot find password option fs.s3a.bucket.sg-cdp.fs.s3a.server-side-encryption-algorithm

at org.apache.hadoop.hive.ql.io.orc.OrcInputFormat.lambda$generateSplitsInfo$0(OrcInputFormat.java:1847)

at org.apache.hadoop.hive.ql.io.orc.OrcInputFormat$FileGenerator.getAcidState(OrcInputFormat.java:1276)

at org.apache.hadoop.hive.ql.io.orc.OrcInputFormat$FileGenerator.callInternal(OrcInputFormat.java:1302)

at org.apache.hadoop.hive.ql.io.orc.OrcInputFormat$FileGenerator.access$1800(OrcInputFormat.java:1226)

at org.apache.hadoop.hive.ql.io.orc.OrcInputFormat$FileGenerator$1.run(OrcInputFormat.java:1263)

at org.apache.hadoop.hive.ql.io.orc.OrcInputFormat$FileGenerator$1.run(OrcInputFormat.java:1260)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1898)

at org.apache.hadoop.hive.ql.io.orc.OrcInputFormat$FileGenerator.call(OrcInputFormat.java:1260)

at org.apache.hadoop.hive.ql.io.orc.OrcInputFormat$FileGenerator.call(OrcInputFormat.java:1226)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

... 3 more

Caused by: java.io.IOException: Cannot find password option fs.s3a.bucket.sg-cdp.fs.s3a.server-side-encryption-algorithm

at org.apache.hadoop.fs.s3a.S3AUtils.lookupPassword(S3AUtils.java:966)

at org.apache.hadoop.fs.s3a.S3AUtils.getPassword(S3AUtils.java:946)

at org.apache.hadoop.fs.s3a.S3AUtils.getPassword(S3AUtils.java:927)

at org.apache.hadoop.fs.s3a.S3AUtils.lookupPassword(S3AUtils.java:905)

at org.apache.hadoop.fs.s3a.S3AUtils.lookupPassword(S3AUtils.java:870)

at org.apache.hadoop.fs.s3a.S3AUtils.getEncryptionAlgorithm(S3AUtils.java:1605)

at org.apache.hadoop.fs.s3a.S3AFileSystem.initialize(S3AFileSystem.java:363)

at org.apache.hadoop.fs.FileSystem.createFileSystem(FileSystem.java:3443)

at org.apache.hadoop.fs.FileSystem.access$200(FileSystem.java:158)

at org.apache.hadoop.fs.FileSystem$Cache.getInternal(FileSystem.java:3503)

at org.apache.hadoop.fs.FileSystem$Cache.get(FileSystem.java:3471)

at org.apache.hadoop.fs.FileSystem.get(FileSystem.java:518)

at org.apache.hadoop.fs.Path.getFileSystem(Path.java:361)

at org.apache.hadoop.hive.ql.io.orc.OrcInputFormat.lambda$generateSplitsInfo$0(OrcInputFormat.java:1845)

Created 05-24-2023 08:29 PM

Is this the complete exception stack trace you have in the logs? The stack is supposed to contain getPasswordFromCredentialProviders frames, which I don't find here, followed by further stack trace. Can you attach the entire application log?
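Checking whether a long log contains the expected frames, and what the innermost exception is, is easy to get wrong by eye. The sketch below pulls the "Caused by:" chain out of Java stack trace text and tests for a named method. The function names `caused_by_chain`, `root_cause`, and `mentions_frame` are hypothetical helpers invented for this illustration. The sample lines are copied from the fuller trace posted later in this thread.

```python
def caused_by_chain(log_text):
    """Return the 'Caused by:' exception headers in the order they appear."""
    prefix = "Caused by: "
    chain = []
    for line in log_text.splitlines():
        stripped = line.strip()
        if stripped.startswith(prefix):
            chain.append(stripped[len(prefix):])
    return chain


def root_cause(log_text):
    """Return the last 'Caused by:' header, or None if there is none."""
    chain = caused_by_chain(log_text)
    return chain[-1] if chain else None


def mentions_frame(log_text, method_name):
    """Return True if any stack frame line mentions the given method name."""
    return any(
        line.strip().startswith("at ") and method_name in line
        for line in log_text.splitlines()
    )


# Lines copied from the fuller trace that appears later in this thread.
SAMPLE = """\
Caused by: java.io.IOException: Cannot find password option fs.s3a.bucket.sg-cdp.fs.s3a.server-side-encryption-algorithm
at org.apache.hadoop.fs.s3a.S3AUtils.lookupPassword(S3AUtils.java:966)
Caused by: java.io.IOException: Configuration problem with provider path.
at org.apache.hadoop.conf.Configuration.getPasswordFromCredentialProviders(Configuration.java:2428)
Caused by: java.nio.file.AccessDeniedException: /var/run/cloudera-scm-agent/process/1546336141-hive_on_tez-HIVESERVER2/creds.localjceks
"""

if __name__ == "__main__":
    print(root_cause(SAMPLE))
    print(mentions_frame(SAMPLE, "getPasswordFromCredentialProviders"))
```

Applied to a complete application log, the last entry of the chain is usually the most specific clue, which is why a truncated trace is hard to diagnose.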

Created 05-29-2023 10:33 AM

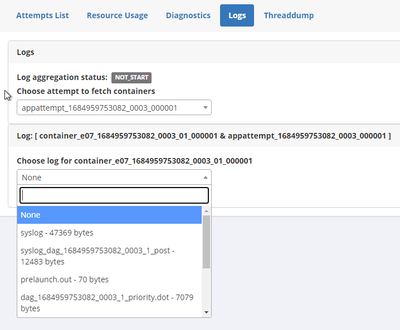

Do you mean the YARN application log? Which log do you want me to attach here?

Currently, when I enter hive to access Hive, it shows:

Connecting to jdbc:hive2://ce-n1.rtp.lab.xyz.com:2181,ce-n2.rtp.lab.xyz.com:2181,ce-n3.rtp.lab.xyz.com:2181/default;password=root;serviceDiscoveryMode=zooKeeper;user=root;zooKeeperNamespace=hiveserver2

I tried using beeline -u 'jdbc:hive2://localhost:10000', but the password problem still comes up randomly. I am not sure whether the main problem is related to this message:

Caused by: java.nio.file.AccessDeniedException: /var/run/cloudera-scm-agent/process/1546336141-hive_on_tez-HIVESERVER2/creds.localjceks
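An AccessDeniedException on that path means the JVM that raised it was not allowed to open the file. Whether this is the whole cause is not established in the thread, but the permission side can be checked directly. This is a minimal sketch, assuming it is run on the node where the failure occurred and as the same OS user as the failing process. The helper name `describe_access` is hypothetical.

```python
import os
import sys


def describe_access(path):
    """Classify a path as seen by the current OS user."""
    if not os.path.exists(path):
        # Also reached when a parent directory cannot be traversed.
        return "missing or unreachable"
    if os.access(path, os.R_OK):
        return "readable"
    return "not readable"


if __name__ == "__main__":
    # Pass one or more paths on the command line, for example the
    # creds.localjceks path reported in the exception.
    for path in sys.argv[1:]:
        print(f"{path}: {describe_access(path)}")
```

Comparing the result across nodes and users may help answer the earlier question about whether failures follow a particular node.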

ERROR : Status: Failed

ERROR : Vertex failed, vertexName=Map 10, vertexId=vertex_1684959753082_0005_1_01, diagnostics=[Vertex vertex_1684959753082_0005_1_01 [Map 10] killed/failed due to:ROOT_INPUT_INIT_FAILURE, Vertex Input: date_dim initializer failed, vertex=vertex_1684959753082_0005_1_01 [Map 10], java.lang.RuntimeException: ORC split generation failed with exception: java.lang.RuntimeException: java.io.IOException: Cannot find password option fs.s3a.bucket.sg-cdp.fs.s3a.server-side-encryption-algorithm

at org.apache.hadoop.hive.ql.io.orc.OrcInputFormat.generateSplitsInfo(OrcInputFormat.java:1943)

at org.apache.hadoop.hive.ql.io.orc.OrcInputFormat.getSplits(OrcInputFormat.java:2030)

at org.apache.hadoop.hive.ql.io.HiveInputFormat.addSplitsForGroup(HiveInputFormat.java:542)

at org.apache.hadoop.hive.ql.io.HiveInputFormat.getSplits(HiveInputFormat.java:850)

at org.apache.hadoop.hive.ql.exec.tez.HiveSplitGenerator.initialize(HiveSplitGenerator.java:250)

at org.apache.tez.dag.app.dag.RootInputInitializerManager.lambda$runInitializer$3(RootInputInitializerManager.java:203)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1898)

at org.apache.tez.dag.app.dag.RootInputInitializerManager.runInitializer(RootInputInitializerManager.java:196)

at org.apache.tez.dag.app.dag.RootInputInitializerManager.runInitializerAndProcessResult(RootInputInitializerManager.java:177)

at org.apache.tez.dag.app.dag.RootInputInitializerManager.lambda$createAndStartInitializing$2(RootInputInitializerManager.java:171)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

at com.google.common.util.concurrent.TrustedListenableFutureTask$TrustedFutureInterruptibleTask.runInterruptibly(TrustedListenableFutureTask.java:125)

at com.google.common.util.concurrent.InterruptibleTask.run(InterruptibleTask.java:69)

at com.google.common.util.concurrent.TrustedListenableFutureTask.run(TrustedListenableFutureTask.java:78)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:750)

Caused by: java.util.concurrent.ExecutionException: java.lang.RuntimeException: java.io.IOException: Cannot find password option fs.s3a.bucket.sg-cdp.fs.s3a.server-side-encryption-algorithm

at java.util.concurrent.FutureTask.report(FutureTask.java:122)

at java.util.concurrent.FutureTask.get(FutureTask.java:192)

at org.apache.hadoop.hive.ql.io.orc.OrcInputFormat.generateSplitsInfo(OrcInputFormat.java:1882)

... 18 more

Caused by: java.lang.RuntimeException: java.io.IOException: Cannot find password option fs.s3a.bucket.sg-cdp.fs.s3a.server-side-encryption-algorithm

at org.apache.hadoop.hive.ql.io.orc.OrcInputFormat.lambda$generateSplitsInfo$0(OrcInputFormat.java:1847)

at org.apache.hadoop.hive.ql.io.orc.OrcInputFormat$FileGenerator.getAcidState(OrcInputFormat.java:1276)

at org.apache.hadoop.hive.ql.io.orc.OrcInputFormat$FileGenerator.callInternal(OrcInputFormat.java:1302)

at org.apache.hadoop.hive.ql.io.orc.OrcInputFormat$FileGenerator.access$1800(OrcInputFormat.java:1226)

at org.apache.hadoop.hive.ql.io.orc.OrcInputFormat$FileGenerator$1.run(OrcInputFormat.java:1263)

at org.apache.hadoop.hive.ql.io.orc.OrcInputFormat$FileGenerator$1.run(OrcInputFormat.java:1260)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1898)

at org.apache.hadoop.hive.ql.io.orc.OrcInputFormat$FileGenerator.call(OrcInputFormat.java:1260)

at org.apache.hadoop.hive.ql.io.orc.OrcInputFormat$FileGenerator.call(OrcInputFormat.java:1226)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

... 3 more

Caused by: java.io.IOException: Cannot find password option fs.s3a.bucket.sg-cdp.fs.s3a.server-side-encryption-algorithm

at org.apache.hadoop.fs.s3a.S3AUtils.lookupPassword(S3AUtils.java:966)

at org.apache.hadoop.fs.s3a.S3AUtils.getPassword(S3AUtils.java:946)

at org.apache.hadoop.fs.s3a.S3AUtils.getPassword(S3AUtils.java:927)

at org.apache.hadoop.fs.s3a.S3AUtils.lookupPassword(S3AUtils.java:905)

at org.apache.hadoop.fs.s3a.S3AUtils.lookupPassword(S3AUtils.java:870)

at org.apache.hadoop.fs.s3a.S3AUtils.getEncryptionAlgorithm(S3AUtils.java:1605)

at org.apache.hadoop.fs.s3a.S3AFileSystem.initialize(S3AFileSystem.java:363)

at org.apache.hadoop.fs.FileSystem.createFileSystem(FileSystem.java:3443)

at org.apache.hadoop.fs.FileSystem.access$200(FileSystem.java:158)

at org.apache.hadoop.fs.FileSystem$Cache.getInternal(FileSystem.java:3503)

at org.apache.hadoop.fs.FileSystem$Cache.get(FileSystem.java:3471)

at org.apache.hadoop.fs.FileSystem.get(FileSystem.java:518)

at org.apache.hadoop.fs.Path.getFileSystem(Path.java:361)

at org.apache.hadoop.hive.ql.io.orc.OrcInputFormat.lambda$generateSplitsInfo$0(OrcInputFormat.java:1845)

... 16 more

Caused by: java.io.IOException: Configuration problem with provider path.

at org.apache.hadoop.conf.Configuration.getPasswordFromCredentialProviders(Configuration.java:2428)

at org.apache.hadoop.conf.Configuration.getPassword(Configuration.java:2347)

at org.apache.hadoop.fs.s3a.S3AUtils.lookupPassword(S3AUtils.java:961)

... 29 more

Caused by: java.nio.file.AccessDeniedException: /var/run/cloudera-scm-agent/process/1546336141-hive_on_tez-HIVESERVER2/creds.localjceks

at sun.nio.fs.UnixException.translateToIOException(UnixException.java:84)

at sun.nio.fs.UnixException.rethrowAsIOException(UnixException.java:102)

at sun.nio.fs.UnixException.rethrowAsIOException(UnixException.java:107)

at sun.nio.fs.UnixFileSystemProvider.newByteChannel(UnixFileSystemProvider.java:214)

at java.nio.file.Files.newByteChannel(Files.java:361)

at java.nio.file.Files.newByteChannel(Files.java:407)

at java.nio.file.spi.FileSystemProvider.newInputStream(FileSystemProvider.java:384)

at java.nio.file.Files.newInputStream(Files.java:152)

at org.apache.hadoop.security.alias.LocalKeyStoreProvider.getInputStreamForFile(LocalKeyStoreProvider.java:76)

at org.apache.hadoop.security.alias.AbstractJavaKeyStoreProvider.locateKeystore(AbstractJavaKeyStoreProvider.java:325)

at org.apache.hadoop.security.alias.AbstractJavaKeyStoreProvider.<init>(AbstractJavaKeyStoreProvider.java:86)

at org.apache.hadoop.security.alias.LocalKeyStoreProvider.<init>(LocalKeyStoreProvider.java:56)