Support Questions

How is the memory allocated in CDP Machine Learning session for Spark?

Created 01-12-2023 05:08 AM

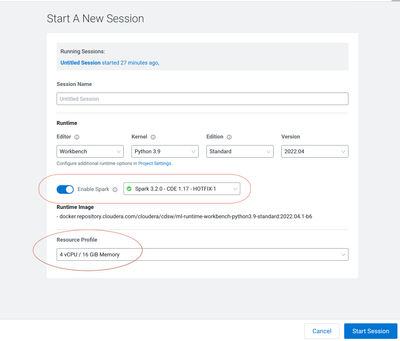

In CDP Public Cloud Machine Learning, we can create a new session with reserved resources, for example 4 vCPU and 16 GiB memory.

We can also create a Spark session inside the Machine Learning workbench with its own memory configuration, for example:

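```python
from pyspark.sql import SparkSession

appName = "my-spark-app"  # placeholder; the original snippet did not define appName

spark = (
    SparkSession.builder.appName(appName)
    .config("spark.driver.memory", "16G")
    .config("spark.executor.instances", "10")
    .config("spark.executor.cores", "4")
    .config("spark.executor.memory", "20G")
    .getOrCreate()
)
```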

My question is: how will memory be allocated to the Spark session in this case? Is the reserved resource of the Machine Learning session (4 vCPU and 16 GiB memory) the upper limit for total Spark memory usage? How many worker nodes and executors can I configure for the Spark session?

Created 01-13-2023 01:35 AM

Hello @Ryan_2002

Thanks for using Cloudera Community. To your question: the driver is capped by the Engine/Resource Profile of the Workbench session, while the executors' resource usage is defined by the SparkSession configuration or the "spark-defaults.conf" file within the Project in which the Workbench session is created.
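To make the split concrete, here is a minimal Python sketch (not a CML API; all helper names are hypothetical) that merges a project's "spark-defaults.conf" text with the options passed to `SparkSession.builder`, where builder options take precedence as in Spark's configuration precedence rules, and reports how much of the engine profile's memory is left after the driver heap. The parser only handles the whitespace-separated "key value" form used in Spark's template file.

```python
import re

# KiB per unit suffix in Spark memory strings such as "16G", "512m", "20gb".
_KIB_PER_UNIT = {"k": 1, "kb": 1, "m": 1024, "mb": 1024,
                 "g": 1024 ** 2, "gb": 1024 ** 2, "t": 1024 ** 3, "tb": 1024 ** 3}


def parse_memory_mib(value: str) -> int:
    """Convert a Spark memory string to whole MiB; a bare number is read as MiB."""
    match = re.fullmatch(r"\s*(\d+)\s*([a-zA-Z]*)\s*", value)
    if match is None:
        raise ValueError(f"not a memory string: {value!r}")
    unit = match.group(2).lower() or "m"
    if unit not in _KIB_PER_UNIT:
        raise ValueError(f"unknown unit in {value!r}")
    return int(match.group(1)) * _KIB_PER_UNIT[unit] // 1024


def parse_spark_defaults(text: str) -> dict:
    """Parse 'key value' lines from spark-defaults.conf, skipping blanks and # comments."""
    conf = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        parts = line.split(None, 1)
        if len(parts) == 2:
            conf[parts[0]] = parts[1].strip()
    return conf


def effective_conf(defaults_text: str, builder_conf: dict) -> dict:
    """Options set on SparkSession.builder override spark-defaults.conf."""
    merged = parse_spark_defaults(defaults_text)
    merged.update(builder_conf)
    return merged


def driver_headroom_mib(conf: dict, engine_memory_gib: int) -> int:
    """Engine-profile memory left after the driver heap (Spark's default driver heap is 1g)."""
    driver_mib = parse_memory_mib(conf.get("spark.driver.memory", "1g"))
    return engine_memory_gib * 1024 - driver_mib
```

With the question's settings (a 16 GiB engine and `spark.driver.memory` of 16G) the headroom comes out as 0 MiB: assuming the driver runs inside the engine pod as described above, the driver heap alone would claim the whole engine limit, leaving nothing for JVM overhead or the Python process in the same pod.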

Your team can verify this by reviewing the Pods in the user's Namespace: when a Workbench session is created, an Engine Pod is started with its "Limits" set to the Engine/Resource Profile settings. After the SparkSession is initialised, additional executor Pods are created in the user's Namespace based on the executor configs passed via the SparkSession or the "spark-defaults.conf" file.
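Each executor Pod requests more memory than `spark.executor.memory` alone, because Spark adds a memory overhead: by default the larger of 10% of the executor memory and 384 MiB, unless `spark.executor.memoryOverhead` is set explicitly. The minimal sketch below assumes those defaults; Spark on Kubernetes documents a 0.40 factor for non-JVM (e.g. PySpark) jobs in some versions, so the factor is a parameter, and `spark.executor.pyspark.memory`, if set, is not modelled. The function name is hypothetical.

```python
def executor_pod_memory_mib(executor_memory_mib: int,
                            overhead_factor: float = 0.10,
                            min_overhead_mib: int = 384) -> int:
    """Approximate memory one executor pod requests: heap plus overhead.

    Overhead is max(overhead_factor * heap, min_overhead_mib), truncated to
    whole MiB; check your runtime's actual overhead settings.
    """
    overhead_mib = max(int(executor_memory_mib * overhead_factor), min_overhead_mib)
    return executor_memory_mib + overhead_mib
```

For the question's `spark.executor.memory` of 20G, `executor_pod_memory_mib(20 * 1024)` gives 22528 MiB per pod, so 10 executors request 225280 MiB (220 GiB) from the cluster's nodes, none of which counts against the 16 GiB engine profile.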

You may configure the executor settings to suit your workload, but they are bounded by the CML Workspace's AutoScale range and InstanceType. For example, if the InstanceType provides 8 vCPU, executors each requesting 8 vCPU won't be schedulable. Similarly, with an AutoScale maximum of 5 nodes, requesting executors that would collectively consume the full resources of all 5 nodes won't work either.
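The AutoScale/InstanceType limit can be estimated with back-of-the-envelope arithmetic. This sketch assumes an executor fits on a node only if both its cores and its pod memory fit in what remains after a per-node reservation for system pods; the reservation defaults (1 vCPU, 4 GiB), the node sizes in the example and the function names are all hypothetical, and the estimate ignores the engine Pod and any other workloads sharing the nodes.

```python
import math


def executors_per_node(node_vcpu: float, node_memory_gib: float,
                       executor_cores: int, executor_pod_mib: int,
                       reserved_vcpu: float = 1.0,
                       reserved_memory_gib: float = 4.0) -> int:
    """How many executor pods of the given shape fit on one node.

    reserved_vcpu / reserved_memory_gib are hypothetical allowances for
    system and daemon pods; real values depend on the instance type.
    """
    cpu_fit = math.floor((node_vcpu - reserved_vcpu) / executor_cores)
    mem_fit = math.floor((node_memory_gib - reserved_memory_gib) * 1024 / executor_pod_mib)
    return max(0, min(cpu_fit, mem_fit))


def max_executors(autoscale_max_nodes: int, **node_shape) -> int:
    """Upper bound on executors across the AutoScale range (ignores the engine pod)."""
    return autoscale_max_nodes * executors_per_node(**node_shape)
```

On a hypothetical 8 vCPU node an 8-core executor never fits (0 per node), matching the example above. On hypothetical 16 vCPU / 64 GiB nodes with an AutoScale maximum of 5, the question's 4-core, 20G executors (22528 MiB pods with the default overhead) fit two per node, so `max_executors(5, node_vcpu=16, node_memory_gib=64, executor_cores=4, executor_pod_mib=22528)` gives at most 10 executors.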

Hope the above answers your queries. If yes, kindly mark the post as Solved. If not, feel free to share your concerns and we shall address them accordingly.

Regards, Smarak

Created 01-13-2023 01:43 AM

Hi Smarak,

Thanks for your answer. That helps me!