Support Questions


How to append HDFS file using putHDFS where NiFi is hosted on clustered mode?

Labels: Apache NiFi

Created 04-17-2018 08:46 AM


Hi,

My NiFi is hosted on a 3-node cluster, and my requirement is to append data to the end of a file. Since NiFi is running in clustered mode, how can we make sure that only one node writes data to the file? There should not be any conflicting write operations.

Thanks,

Created on 04-17-2018 11:31 AM - edited 08-17-2019 07:06 PM


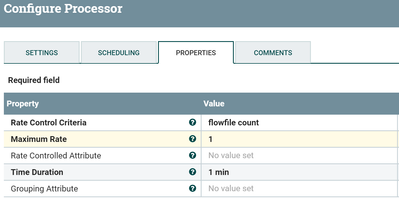

You can use a ControlRate processor before the PutHDFS processor and configure it to release a flowfile once per desired interval, e.g. every 1 minute.

If you need to append data to the file, every flowfile must arrive with the same filename. To get the same filename every time, you can use an UpdateAttribute processor to change the filename attribute, and in the PutHDFS processor configure the following property:

Conflict Resolution Strategy = append (if the processor finds an existing file with the same filename, it appends the data to that file)
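To make the filename + append combination concrete, here is a toy, in-memory Python model. It is not PutHDFS: a dict stands in for HDFS, and `ToyFileSystem` and `set_fixed_filename` are hypothetical names. The strategy values mirror the ones PutHDFS offers (replace, ignore, fail, append), and the helper mimics an UpdateAttribute rule that overwrites `filename` so every flowfile lands on the same path.

```python
# Toy stand-in for PutHDFS "Conflict Resolution Strategy" (not the real processor).
class ToyFileSystem:
    def __init__(self) -> None:
        self.files: dict[str, bytes] = {}  # path -> file contents

    def put(self, path: str, data: bytes, strategy: str = "fail") -> str:
        """Write data to path; if the path already exists, resolve per `strategy`."""
        if path not in self.files:
            self.files[path] = data
            return "created"
        if strategy == "replace":
            self.files[path] = data
            return "replaced"
        if strategy == "ignore":
            return "ignored"
        if strategy == "append":
            self.files[path] += data  # same filename: add to the end of the existing file
            return "appended"
        if strategy == "fail":
            raise FileExistsError(path)
        raise ValueError(f"unknown strategy: {strategy}")


def set_fixed_filename(attributes: dict[str, str], name: str) -> dict[str, str]:
    """Hypothetical helper mimicking an UpdateAttribute rule that overwrites `filename`."""
    updated = dict(attributes)
    updated["filename"] = name
    return updated


fs = ToyFileSystem()
incoming = [({"filename": "part-1.csv"}, b"row1\n"), ({"filename": "part-2.csv"}, b"row2\n")]
results = []
for attrs, content in incoming:
    attrs = set_fixed_filename(attrs, "daily.csv")
    results.append(fs.put("/data/" + attrs["filename"], content, strategy="append"))
print(results)                      # ['created', 'appended']
print(fs.files["/data/daily.csv"])  # b'row1\nrow2\n'
```

Without the fixed filename, each flowfile would create its own file and nothing would ever be appended.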

ControlRate processor configuration:

With these settings we release one flowfile every minute, so at any point in time only one node writes/appends data to the file.
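The actual ControlRate settings were in the screenshot, so the sketch below only assumes the stated intent: at most one flowfile per 60-second window. It is a toy fixed-window gate in Python, not NiFi's ControlRate implementation, and `RateGate` is a hypothetical name. Because each NiFi node runs its own copy of the dataflow (as noted later in this thread), each node would hold its own gate; the last lines show what that means when all three nodes have a flowfile queued at the same moment.

```python
class RateGate:
    """Toy fixed-window limiter: release at most `max_count` items per `window_s` seconds."""

    def __init__(self, max_count: int = 1, window_s: float = 60.0) -> None:
        self.max_count = max_count
        self.window_s = window_s
        self.window_start: float | None = None
        self.released_in_window = 0

    def try_release(self, now: float) -> bool:
        # Start a new window if there is none yet or the current one has expired
        if self.window_start is None or now - self.window_start >= self.window_s:
            self.window_start = now
            self.released_in_window = 0
        if self.released_in_window < self.max_count:
            self.released_in_window += 1
            return True
        return False  # the flowfile stays queued until the next window


gate = RateGate(max_count=1, window_s=60.0)
decisions = [gate.try_release(t) for t in (0.0, 10.0, 59.9, 60.0, 61.0, 125.0)]
print(decisions)  # [True, False, False, True, False, True]

# One gate per node: each node's gate is independent of the others
node_gates = {name: RateGate() for name in ("node1", "node2", "node3")}
released_at_t0 = [name for name, g in node_gates.items() if g.try_release(0.0)]
print(released_at_t0)  # ['node1', 'node2', 'node3']
```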

Flow:

Other processors --> ControlRate --> PutHDFS

Created 04-17-2018 11:57 AM


@Shu, thanks for your answer.

We are currently doing the same thing: we have set up time-driven flags to make sure only one node can write data to HDFS. I was hoping for something other than a workaround, since this approach has performance drawbacks. If there is a recommended way of doing this, please let me know.
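The thread does not describe how the time-driven flags work. One plausible reading, purely an assumption, is round-robin time slots: each node may append only during the slot it owns. The toy sketch below (hypothetical `slot_owner` and `may_write` helpers) shows that scheme and hints at the performance cost mentioned above: each node can write only one third of the time, and the scheme relies on node clocks agreeing and on writes finishing inside the slot.

```python
def slot_owner(epoch_s: float, nodes: list[str], slot_s: float = 60.0) -> str:
    """Round-robin: the node that owns the time slot containing epoch_s."""
    slot_index = int(epoch_s // slot_s)
    return nodes[slot_index % len(nodes)]


def may_write(node: str, epoch_s: float, nodes: list[str], slot_s: float = 60.0) -> bool:
    """A node appends only while it owns the current slot; otherwise it holds its data."""
    return slot_owner(epoch_s, nodes, slot_s) == node


cluster = ["node1", "node2", "node3"]
print([slot_owner(t, cluster) for t in (0, 59, 60, 125, 180)])
# ['node1', 'node1', 'node2', 'node3', 'node1']
```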

Created 04-17-2018 01:07 PM


Unfortunately, only one client can write/append to a given HDFS file at a time. This single-writer append model in HDFS does not mesh well with NiFi's architecture of concurrent, parallel operations across multiple nodes. Each NiFi node runs its own copy of the dataflow and works on its own unique set of FlowFiles. While NiFi nodes do send health and status heartbeats to the elected cluster coordinator, dataflow-specific information, such as which node is currently appending to a particular filename in the target HDFS cluster, is not shared. From a performance design standpoint, it makes sense not to share it.
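HDFS enforces the single-writer rule with a write lease that one client holds per file. The toy Python model below (hypothetical `ToyLeaseManager`, not the HDFS API) shows the effect: while one node holds the lease on a path, a second node's attempt to append is refused. It also shows what a NiFi cluster lacks here: a shared record of who is currently writing each path.

```python
import threading


class ToyLeaseManager:
    """Toy model of single-writer semantics: at most one lease holder per path."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._holders: dict[str, str] = {}  # path -> client currently writing it

    def acquire(self, path: str, client: str) -> bool:
        with self._lock:
            holder = self._holders.get(path)
            if holder is None or holder == client:
                self._holders[path] = client
                return True
            return False  # another client is already writing/appending this path

    def release(self, path: str, client: str) -> None:
        with self._lock:
            if self._holders.get(path) == client:
                del self._holders[path]


leases = ToyLeaseManager()
print(leases.acquire("/data/out.csv", "node1"))  # True
print(leases.acquire("/data/out.csv", "node2"))  # False: node1 holds the lease
leases.release("/data/out.csv", "node1")
print(leases.acquire("/data/out.csv", "node2"))  # True
```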

So, aside from the above workaround, which reduces the likelihood of conflict, you can also:

1. After whatever preprocessing you perform in NiFi before pushing to HDFS, route all data to a dedicated node in your cluster (with a failover node; think PostHTTP with its failure relationship feeding another PostHTTP) for the final step of appending to your target HDFS.

2. Install a standalone edge instance of NiFi that simply receives the processed data from your NiFi cluster and writes/appends it to HDFS.
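Both options funnel all appends through one writer. The toy Python sketch below models option 1's routing: every node sends to a primary writer, and only when that send fails does the data go to the failover writer, so normally a single writer appends. `DedicatedWriter` and `send_with_failover` are hypothetical names; the list stands in for the target HDFS file and the exception stands in for PostHTTP routing to failure.

```python
class DedicatedWriter:
    """Hypothetical single-writer endpoint (the dedicated node or the edge NiFi instance)."""

    def __init__(self, name: str, healthy: bool = True) -> None:
        self.name = name
        self.healthy = healthy
        self.appended: list[bytes] = []  # stands in for the target HDFS file

    def receive(self, data: bytes) -> None:
        if not self.healthy:
            raise ConnectionError(f"{self.name} unavailable")
        self.appended.append(data)  # only this writer appends, so no write conflict


def send_with_failover(data: bytes, primary: DedicatedWriter, failover: DedicatedWriter) -> str:
    """Like PostHTTP whose failure relationship feeds a second PostHTTP."""
    try:
        primary.receive(data)
        return primary.name
    except ConnectionError:
        failover.receive(data)
        return failover.name


primary = DedicatedWriter("writer-a")
backup = DedicatedWriter("writer-b")
routes = [send_with_failover(b"x", primary, backup) for _ in range(2)]
primary.healthy = False  # simulate the dedicated node going down
routes.append(send_with_failover(b"y", primary, backup))
print(routes)  # ['writer-a', 'writer-a', 'writer-b']
```

The trade-off is throughput: the cluster still preprocesses in parallel, but the final append step runs on one node at a time.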


Thanks,

Matt

Created 04-17-2018 01:34 PM


@Matt Clarke, that seems like quite a refined approach. Happy to see your response.