Support Questions (Cloudera Community)

How to copy files from a remote Windows system to HDFS

Labels: Apache Flume, HDFS

Created on 06-21-2016 11:11 PM - edited 09-16-2022 03:26 AM

Currently I am using spooldir (source) to copy files from the local file system to HDFS, but I want to copy files from a remote Windows system.

Can someone suggest which Flume source I can use to copy files from a remote Windows system to HDFS, where I can specify the username and password?

Created 07-06-2016 08:19 AM

It may be a bit of a long shot, but you could mount the directories of your remote server on your local server using Samba and then copy the files to HDFS from the command line.
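A minimal shell sketch of the Samba approach, assuming a Linux edge node with `cifs-utils` and the Hadoop client installed. The share name, mount point, credentials file and HDFS directory are hypothetical placeholders. The credentials file (lines `username=...` and `password=...`) is where the Windows username and password go, so they stay off the command line. Setting `DRY_RUN=1` prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# Sketch only: mount a Windows share over SMB/CIFS, copy it into HDFS, unmount.
# The share, mount point, credentials file and HDFS path are hypothetical inputs.

# Print the command instead of running it when DRY_RUN=1 (handy for review).
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Usage: copy_share_to_hdfs <//host/share> <mount_point> <credentials_file> <hdfs_dir>
copy_share_to_hdfs() {
  local share="$1" mnt="$2" creds="$3" dest="$4" rc
  run mkdir -p "$mnt" || return 1
  # Read-only mount; the credentials file holds username= and password=
  # so the Windows password is not passed on the command line.
  run mount -t cifs "$share" "$mnt" -o "ro,credentials=$creds" || return 1
  run hdfs dfs -mkdir -p "$dest" || return 1
  # Copies the mounted directory into <hdfs_dir> as <hdfs_dir>/<basename of mount_point>;
  # -f asks HDFS to overwrite destination files that already exist.
  run hdfs dfs -put -f "$mnt" "$dest"
  rc=$?
  run umount "$mnt"
  return "$rc"
}
```

For example, `DRY_RUN=1 copy_share_to_hdfs //winhost/share /mnt/winshare /root/.smbcredentials /user/etl/incoming` prints the five commands; running it without `DRY_RUN` (as root) executes them. Unlike the Flume spooldir source, this does not track which files were already copied.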

Created 08-09-2016 01:00 AM

The HDFS service includes an "NFS Gateway" role that lets you mount HDFS as an NFS file system.

That is one way: you can copy files directly into the mount (check the performance, though).
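A sketch of the NFS gateway route, assuming the gateway role is running and the client host is allowed by the gateway's export settings. The host name and paths are hypothetical; the mount options follow those recommended in the Apache Hadoop HDFS NFS Gateway documentation (NFSv3 over TCP, `nolock` because the gateway does not implement NLM).

```shell
#!/usr/bin/env bash
# Sketch only: mount HDFS through the NFS gateway and copy a local file into it.
# Gateway host, mount point and HDFS paths are hypothetical inputs.

# Print the command instead of running it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Usage: mount_hdfs_nfs <nfs_gateway_host> <mount_point>
mount_hdfs_nfs() {
  local gw="$1" mnt="$2"
  run mkdir -p "$mnt" || return 1
  # The gateway serves NFSv3 over TCP; nolock because it has no NLM (lock manager) support.
  run mount -t nfs -o vers=3,proto=tcp,nolock,noacl,sync "$gw:/" "$mnt"
}

# Usage: copy_via_nfs <local_file> <mount_point> <hdfs_dir>
# The HDFS namespace appears under the mount point, so a plain cp writes into HDFS.
copy_via_nfs() {
  local src="$1" mnt="$2" hdfs_dir="$3"
  run cp "$src" "$mnt$hdfs_dir/"
}
```

For example, `mount_hdfs_nfs nfsgw.example.com /mnt/hdfs` followed by `copy_via_nfs abc.csv /mnt/hdfs /user/rakesh` places `abc.csv` in `/user/rakesh` on HDFS. It is worth measuring throughput on large files before relying on this path.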

Hue (the web UI) also lets you upload files into HDFS (a more manual approach).

In our enterprise, for an automated process, we use a custom Java application that writes into Hive tables through the HCatWriter API.

But you can also use HttpFS or WebHDFS.
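With WebHDFS, an upload is a two-step exchange: the first `PUT ...?op=CREATE` goes to the NameNode, which answers with a `307` redirect whose `Location` header points at a DataNode, and the file body is then sent to that URL. A minimal sketch, assuming a non-Kerberized cluster with simple authentication, the Hadoop 2 NameNode HTTP port `50070`, and DataNode host names that resolve from the client; the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch only: two-step WebHDFS upload (NameNode redirect, then DataNode write).
# Assumes simple (non-Kerberos) auth; host:port, paths and user are hypothetical.

# Usage: webhdfs_create_url <namenode_host:port> <hdfs_path> <user>
webhdfs_create_url() {
  local host_port="$1" hdfs_path="$2" user="$3"
  printf 'http://%s/webhdfs/v1%s?op=CREATE&overwrite=true&user.name=%s\n' \
    "$host_port" "$hdfs_path" "$user"
}

# Usage: webhdfs_put <namenode_host:port> <local_file> <hdfs_path> <user>
webhdfs_put() {
  local host_port="$1" local_file="$2" hdfs_path="$3" user="$4" location
  # Step 1: empty PUT to the NameNode; the reply is a 307 whose Location header
  # names the DataNode URL to write to.
  location="$(curl -s -i -X PUT "$(webhdfs_create_url "$host_port" "$hdfs_path" "$user")" \
    | awk 'tolower($1) == "location:" { print $2 }' | tr -d '\r')"
  if [ -z "$location" ]; then
    echo "no redirect received from the NameNode" >&2
    return 1
  fi
  # Step 2: stream the file body to the DataNode; 201 Created means success.
  curl -s -i -X PUT -T "$local_file" "$location"
}
```

HttpFS (port 14000) does not redirect clients to DataNodes; it streams the data itself, which is why the HttpFS command later in this thread sends the file in a single request with `data=true` and `Content-Type: application/octet-stream`.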

Created 05-28-2019 03:22 AM

http://httpfs.server.com:14000/webhdfs/v1/user/rakesh/abc.csv?op=CREATE&user.name=hdfs

I am only able to create small files.

How do I increase the buffer size to upload files that are several GB?

Created 05-28-2019 07:45 AM

Could you provide some more information?

Created 06-05-2019 03:07 AM

HTTPFS:

Working with both the private and the public IP, irrespective of file size:

curl -X PUT -L -b cookie.jar "http://192.168.1.3:14000/webhdfs/v1/user/abc.csv?op=CREATE&data=true&user.name=hdfs" --header "Content-Type:application/octet-stream" --header "Transfer-Encoding:chunked" -T "abc.csv"

The above command is for a non-Kerberized cluster. I have now enabled Kerberos; what parameters should I pass to put a file into HDFS?

Created 06-05-2019 06:24 PM

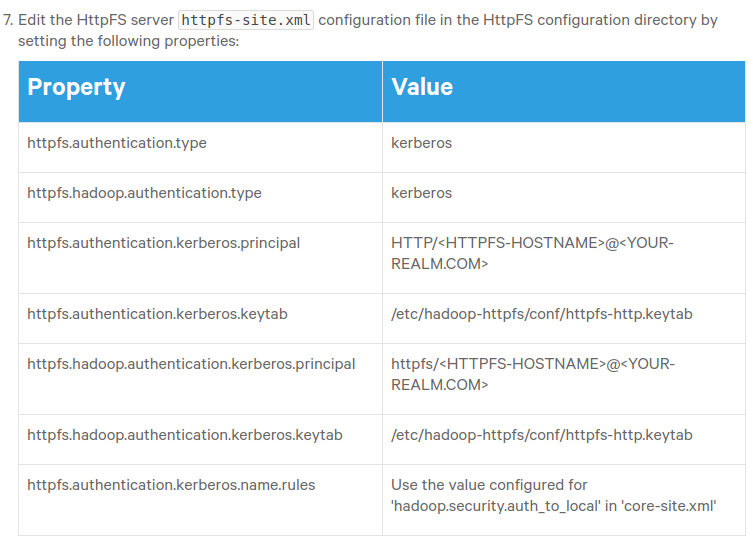

HttpFS with Kerberos requires SPNEGO authentication. Per https://www.cloudera.com/documentation/enterprise/latest/topics/cdh_sg_httpfs_security.html, for curl (after kinit) this is done by passing the two parameters below:

"""

The '--negotiate' option enables SPNEGO in curl.

The '-u :' option is required but the username is ignored (the principal that has been specified for kinit is used).

"""

Created 06-06-2019 10:31 PM

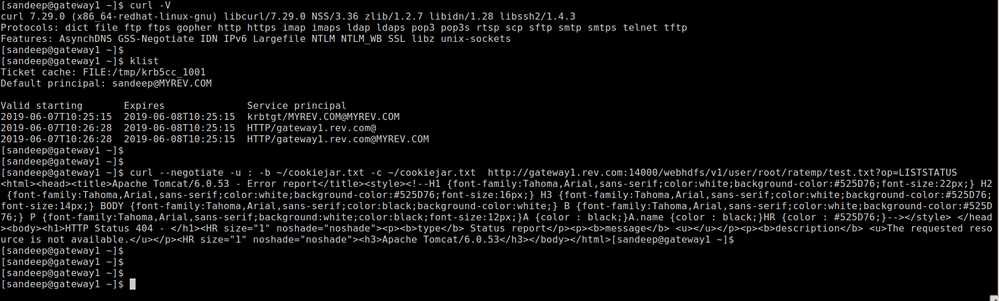

I am getting a 404 error when I try to get the file status using httpfs_ip:14000.

With the WebHDFS port 50070, however, I get the result. Below is the successful command; the same request against the HttpFS port 14000 does not work.

Working:

WEBHDFS(50070):

curl -i --negotiate -u : "http://gateway1.rev.com:50070/webhdfs/v1/user/root/ratemp/?op=LISTSTATUS"

Not working:

HTTPFS(14000):

curl --negotiate -u : -b ~/cookiejar.txt -c ~/cookiejar.txt http://gateway1.rev.com:14000/webhdfs/v1/user/root/ratemp/test.txt?op=LISTSTATUS
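Both services expose the same `/webhdfs/v1` REST paths, so one way to narrow down a 404 is to send the identical request to each port and compare status codes. Note that the two commands above do not list the same path: the WebHDFS one lists the `ratemp/` directory, while the HttpFS one targets `ratemp/test.txt`, and a path that does not exist also yields a 404. A sketch assuming a valid Kerberos ticket; the ports are the ones used in this thread and the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch only: send the same LISTSTATUS request to WebHDFS and HttpFS.

# Usage: liststatus_url <host:port> <hdfs_path>
# The URL is identical for WebHDFS and HttpFS apart from host:port.
liststatus_url() {
  local host_port="$1" hdfs_path="$2"
  printf 'http://%s/webhdfs/v1%s?op=LISTSTATUS\n' "$host_port" "$hdfs_path"
}

# Usage: compare_endpoints <host> <hdfs_path>
# Prints the HTTP status returned by WebHDFS (50070) and HttpFS (14000) for the same path.
compare_endpoints() {
  local host="$1" hdfs_path="$2" port code
  for port in 50070 14000; do
    # -o /dev/null discards the body; -w prints only the status code (000 if no connection).
    code="$(curl -s -o /dev/null -w '%{http_code}' --negotiate -u : \
      "$(liststatus_url "$host:$port" "$hdfs_path")")"
    echo "port $port -> HTTP $code"
  done
}
```

For example, `compare_endpoints gateway1.rev.com /user/root/ratemp`. If WebHDFS answers 200 and HttpFS still answers 404 for the same path, the problem lies with the HttpFS service rather than the path.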

I am using Cloudera Manager. Is it required to change

Created on 06-06-2019 11:09 PM - edited 06-07-2019 12:00 AM

I had added the above values, and they were causing HttpFS to shut down. After deleting those values, it started and is working fine now.

Thanks @Harsh J for your reply.