Cloudera Community: Support Questions

How to define HDFS storage tiers and storage policies in CDH 5.4.x

Created on 06-24-2015 05:18 AM - edited 09-16-2022 02:32 AM

To use Hadoop 2.6 storage policies, you must prefix each mount point in dfs.datanode.data.dir with its storage type (DISK, ARCHIVE, RAM_DISK, or SSD). If editing the hdfs-site.xml file directly, I would use:

<property>

<name>dfs.datanode.data.dir</name>

<value>[ARCHIVE]file:///mnt/archive/dfs/dn,[SSD]file:///mnt/flash/dfs/dn,[DISK]file:///mnt/disk/dfs/dn</value>

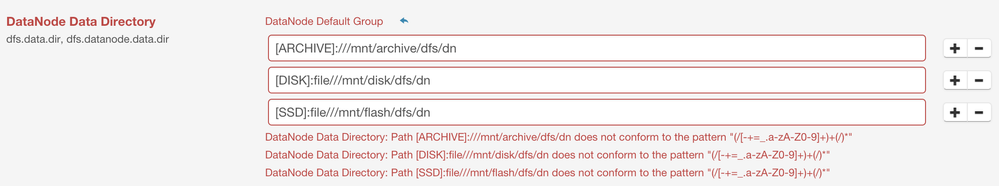

</property>However, if I try and use this format in the CM GUI I get the following error -

- DataNode Data Directory: Path [ARCHIVE]:///mnt/archive/dfs/dn does not conform to the pattern "(/[-+=_.a-zA-Z0-9]+)+(/)*"

- DataNode Data Directory: Path [DISK]:file///mnt/disk/dfs/dn does not conform to the pattern "(/[-+=_.a-zA-Z0-9]+)+(/)*"

- DataNode Data Directory: Path [SSD]:file///mnt/flash/dfs/dn does not conform to the pattern "(/[-+=_.a-zA-Z0-9]+)+(/)*"
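The errors are consistent with CM checking each entry against the quoted pattern as a plain absolute path. A minimal sketch, assuming CM applies that regular expression as a full-string match (an assumption; CM's actual validator is not shown in this thread): a plain path passes, while any `[TYPE]` prefix or `file://` scheme fails, because the pattern only allows `/`-separated segments made of letters, digits and `-+=_.`.

```python
import re

# Pattern copied from the CM error messages; full-string matching is an assumption.
CM_PATH_PATTERN = re.compile(r"(/[-+=_.a-zA-Z0-9]+)+(/)*")


def conforms(path: str) -> bool:
    """Return True if the whole path matches the CM DataNode directory pattern."""
    return CM_PATH_PATTERN.fullmatch(path) is not None


candidates = [
    "/mnt/disk/dfs/dn",               # plain local path
    "[DISK]file:///mnt/disk/dfs/dn",  # tagged URI, as written in hdfs-site.xml
    "[SSD]/mnt/flash/dfs/dn",         # tagged path without a URI scheme
]

for path in candidates:
    print(f"{path!r}: {'ok' if conforms(path) else 'rejected'}")
```

Because `[` is not in the allowed character class, no storage-type prefix can get past this check, whatever URI form follows it.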

Does anyone know the correct format for specifying storage tiers in the GUI, or how to bypass the GUI and configure this manually?

Thank you

Daniel

Created 06-24-2015 10:27 AM

Created 06-25-2015 01:14 PM

Thanks - that worked.

I assume I should still leave the plain mount points in the dfs.datanode.data.dir setting so that CM knows to monitor them.
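A minimal sketch of that split, assuming (as an assumption about the CM setup, not a confirmed procedure) that the tagged list goes into the DataNode role's "Advanced Configuration Snippet (Safety Valve) for hdfs-site.xml" while the plain paths stay in the DataNode Data Directory field. The helpers `tagged_data_dirs` and `property_snippet` are hypothetical; they only build the tagged value from a mapping of storage types to mount points (the ones from the original question) and render the `<property>` element to paste into the snippet.

```python
from __future__ import annotations

import xml.etree.ElementTree as ET

# Storage types that Hadoop 2.6 accepts as prefixes in dfs.datanode.data.dir.
VALID_TYPES = {"DISK", "SSD", "ARCHIVE", "RAM_DISK"}


def tagged_data_dirs(mounts: dict[str, list[str]]) -> str:
    """Build a comma-separated [TYPE]file:// list (hypothetical helper)."""
    entries = []
    for storage_type, dirs in mounts.items():
        if storage_type not in VALID_TYPES:
            raise ValueError(f"unknown storage type: {storage_type}")
        for d in dirs:
            # d is an absolute path, so "file://" + "/mnt/..." gives file:///mnt/...
            entries.append(f"[{storage_type}]file://{d}")
    return ",".join(entries)


def property_snippet(name: str, value: str) -> str:
    """Render a single hdfs-site.xml <property> element as text."""
    prop = ET.Element("property")
    ET.SubElement(prop, "name").text = name
    ET.SubElement(prop, "value").text = value
    return ET.tostring(prop, encoding="unicode")


value = tagged_data_dirs({
    "ARCHIVE": ["/mnt/archive/dfs/dn"],
    "SSD": ["/mnt/flash/dfs/dn"],
    "DISK": ["/mnt/disk/dfs/dn"],
})
print(property_snippet("dfs.datanode.data.dir", value))
```

This relies on the snippet's definition taking precedence over the CM-generated one in the final hdfs-site.xml (Hadoop's Configuration normally lets a later, non-final definition of the same property win); it is worth confirming in the DataNode's effective configuration after a restart.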

Created 06-25-2015 01:24 PM

Glad to hear it worked! Feel free to also mark the discussion as solved so others looking at similar issues may find this thread faster.

Created on 04-18-2016 09:58 AM - edited 04-18-2016 09:59 AM

Some more questions based on this thread:

Once the storage configuration is defined and HDFS has identified the SSDs and disks,

- Are all drives (SSDs + disks) used as a single virtual storage pool?

- If yes, does that mean that while running jobs/queries, some data blocks would be fetched from disks and others from SSDs?

- Or are there two different virtual storage pools, hot and cold?

- If yes, when copying/generating data in HDFS, will there be 3 copies of the data across disks and SSDs, or 3 copies on disks and 3 copies on SSDs, for a total of 6 copies?

- How do I force data to be read from SSDs only or disks only when submitting jobs/queries with various tools (Hive, Impala, Spark, etc.)?
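On the replica questions, my reading of the Apache Hadoop 2.6 Archival Storage documentation is that a storage policy does not add copies: it decides which storage type each of a file's normal replicas is placed on. The policy is set per file or directory (in Hadoop 2.6, with `hdfs dfsadmin -setStoragePolicy <path> <policyName>`), not per job or query, and HOT (all DISK) is the default. The sketch below is a simplified model, not HDFS code, of how the built-in policies spread a replication factor of 3 across storage types; fallback storage types used when the preferred type is full are ignored.

```python
from collections import Counter

# Simplified model of the Hadoop 2.6 built-in storage policies:
# (storage type of the first replica, storage type of the remaining replicas).
# Fallback behavior when a storage type has no space is deliberately ignored.
POLICIES = {
    "HOT": ("DISK", "DISK"),
    "WARM": ("DISK", "ARCHIVE"),
    "COLD": ("ARCHIVE", "ARCHIVE"),
    "ONE_SSD": ("SSD", "DISK"),
    "ALL_SSD": ("SSD", "SSD"),
    "LAZY_PERSIST": ("RAM_DISK", "DISK"),
}


def placement(policy: str, replication: int = 3) -> Counter:
    """Count how many replicas of one block land on each storage type."""
    first, rest = POLICIES[policy]
    return Counter([first] + [rest] * (replication - 1))


for name in POLICIES:
    counts = placement(name)
    print(f"{name:13} {dict(counts)} total={sum(counts.values())}")
```

In this model the total is always the replication factor, never 6. To keep a dataset entirely on SSD you would set ALL_SSD on its directory; blocks written before the policy change are only migrated when the `hdfs mover` tool is run. As far as I know, readers in Hadoop 2.6 simply get whichever replica HDFS returns (ordered by network proximity), so Hive, Impala or Spark do not pick SSD or disk per query.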