Support Questions

- Cloudera Community

- Support

- Support Questions

- How to read json from S3 then edit json using Nifi

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

How to read json from S3 then edit json using Nifi

- Labels:

-

Apache NiFi

Created on 06-22-2022 06:14 AM - edited 06-22-2022 05:48 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I am using Nifi 1.6.0. I am trying to copy an S3 file into redshift. The json file on S3 looks like this:

[

{"a":1,"b":2},

{"a":3,"b":4}

]

However this gives an error because of '[' and ']' in the file (https://stackoverflow.com/a/45348425).

I need to convert the json from above format to a format which looks like this:

{"a":1,"b":2}

{"a":3,"b":4}

So basically what I am trying to do is to remove '[' and ']' and replace '},' with '}'.

The file has over 14 million rows (30 MB in size)

How can I achieve this using Nifi?

Created on 06-22-2022 07:31 PM - edited 06-22-2022 07:32 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

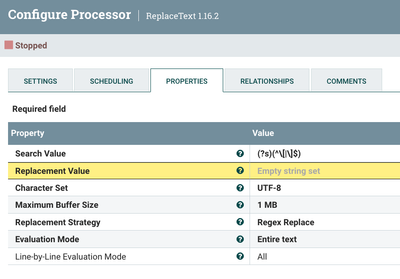

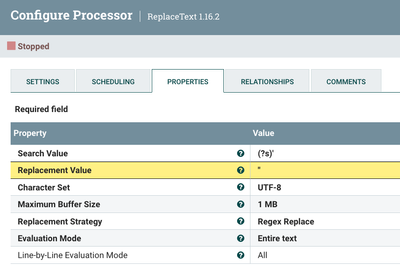

Try this:

This is the regex:

(?s)(^\[|\]$)You may have to increase the buffer size to accommodate your file.

Cheers,

André

Was your question answered? Please take some time to click on "Accept as Solution" below this post.

If you find a reply useful, say thanks by clicking on the thumbs up button.

Created 06-22-2022 04:46 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The problem with your file is not the square brackets ([ ]). The issue is that single quotes are not a valid JSON quote. The correct JSON would be:

[

{"a":1,"b":2},

{"a":3,"b":4}

]

The content above is processed correctly by NiFi if you use record-based processors (like, for example QueryRecord).

Ideally your source data should be fixed to provide a valid JSON. For example, if those files are being generated by a Python script like this:

my_object = [{'a': 1, 'b': 2}, {'a': 3, 'b': 4}]

print(my_object)The Python script should be updated to do this instead:

import json

my_object = [{'a': 1, 'b': 2}, {'a': 3, 'b': 4}]

print(json.dumps(my_object))

If you cannot have the source data fixed, you can try to replace the single quotes with double quotes using a ReplaceText processor with the following configuration.

This is a simplistic approach, though, and can cause problems in some complex JSON objects if you have strings with unexpected sequences of characters in them.

Cheers,

André

Was your question answered? Please take some time to click on "Accept as Solution" below this post.

If you find a reply useful, say thanks by clicking on the thumbs up button.

Created 06-22-2022 05:52 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@araujo

Sorry, my bad. I made a mistake in the json representation mentioned in the question. The records actually have double quotes ("). I have updated the question.

Created 06-22-2022 05:51 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Could you share a screenshot of the part of you flow that reads and processes the JSON content?

André

Was your question answered? Please take some time to click on "Accept as Solution" below this post.

If you find a reply useful, say thanks by clicking on the thumbs up button.

Created 06-22-2022 05:57 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@araujo

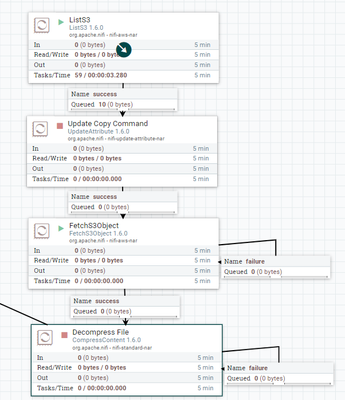

The jsons files are compressed and are placed in an S3 bucket. Here's the flow I have created to fetch s3 file and decompress it.

Created 06-22-2022 06:01 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Could you also share the screenshot of the part of the flow where the error is happening? I think that's after the Decompress File step, right?

André

Was your question answered? Please take some time to click on "Accept as Solution" below this post.

If you find a reply useful, say thanks by clicking on the thumbs up button.

Created 06-22-2022 06:16 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@araujo , Right now there is no error in Nifi flow. But when I tried to execute a copy command (from an SQL query editor) on the file, it gave me an error "Invalid JSONPath format: Member is not an object. ". When I searched about this error, I found this - https://stackoverflow.com/a/45348425

This is why I wanted to convert the file.

Created 06-22-2022 06:18 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Ok. Can you share a screenshot of the part of the flow that insert the data into your database?

Cheers,

André

Was your question answered? Please take some time to click on "Accept as Solution" below this post.

If you find a reply useful, say thanks by clicking on the thumbs up button.

Created 06-22-2022 06:45 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

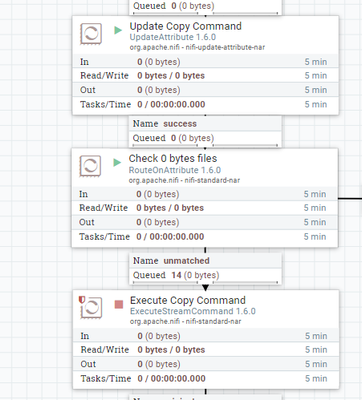

I have not created that part of the flow yet, but that will be an ExecuteStreamCommand processor which will run a psql command with the copy sql query:

It will look something like this:

Created 06-22-2022 06:49 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

You should look into using PutDatabaseRecord instead of ExecuteStreamCommand. This would be more efficient and it would eliminate the need to manually remove those brackets.

Cheers,

André

Was your question answered? Please take some time to click on "Accept as Solution" below this post.

If you find a reply useful, say thanks by clicking on the thumbs up button.