Cloudera Community: Support Questions

Hue - Sentry tables - Tables disappear when Sentry is enabled in Hive

- Labels: Apache Hive, Apache Sentry, Cloudera Hue

Created on 05-12-2016 12:36 AM - edited 09-16-2022 03:19 AM


Hello!

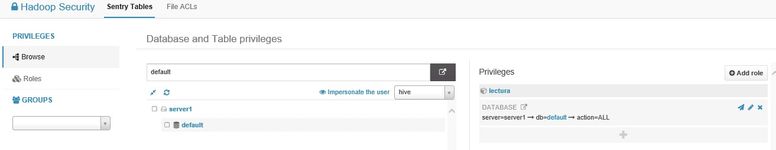

I have a six-node Kerberized Cloudera 5.7 cluster, and I'm trying to manage Sentry roles with Hue. When I try to manage them, I can only see the databases, not the tables.

I enabled Sentry with Cloudera Manager as described in this documentation: http://www.cloudera.com/documentation/enterprise/latest/topics/cm_sg_sentry_service.html

If I try to run a query, I get the following error:

Your query has the following error(s): Error while compiling statement: FAILED: SemanticException No valid privileges User hive does not have privileges for SWITCHDATABASE The required privileges: Server=server1->Db=*->Table=+->Column=*->action=insert;Server=server1->Db=*->Table=+->Column=*->action=select;
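
The required-privileges part of this message packs several privileges onto one line, each written as a path from the server level down to an action. As a reading aid only, a small Bash function can print one privilege per line. It assumes nothing beyond the `;` and `->` separators visible in the message, does not interpret symbols such as `+`, and the function name is illustrative:

```bash
#!/usr/bin/env bash
# Reading aid (sketch): print each privilege from a Sentry "required
# privileges" list on its own line, with the hierarchy levels joined by " > ".
# Assumes ';' separates privileges and '->' separates levels, as in the
# error message above; symbols such as '+' are passed through untouched.
split_required_privileges() {
  local list="$1" priv
  local -a privs
  # Split the list on ';' into an array of privilege strings.
  IFS=';' read -r -a privs <<< "$list"
  for priv in "${privs[@]}"; do
    [[ -z "$priv" ]] && continue    # ignore empty fields, e.g. from ';;'
    printf '%s\n' "${priv//->/ > }" # replace every '->' with ' > '
  done
}

split_required_privileges 'Server=server1->Db=*->Table=+->Column=*->action=insert;Server=server1->Db=*->Table=+->Column=*->action=select;'
```

Applied to the message above, it prints two lines that differ only in the final `action=insert` / `action=select` component.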

I tried granting SELECT and ALL privileges on the database to the user's group, and the error persists. I'm also still unable to grant privileges on tables.

Any idea what may be happening?

Thank you in advance!

Here is my cluster's configuration:

- sentry-site.xml

    <?xml version="1.0" encoding="UTF-8"?>
    <!--Autogenerated by Cloudera Manager-->
    <configuration>
      <property><name>sentry.service.server.rpc-address</name><value>node01.test.com</value></property>
      <property><name>sentry.service.server.rpc-port</name><value>8038</value></property>
      <property><name>sentry.service.server.principal</name><value>sentry/_HOST@test.COM</value></property>
      <property><name>sentry.service.security.mode</name><value>kerberos</value></property>
      <property><name>sentry.service.admin.group</name><value>hive,impala,hue</value></property>
      <property><name>sentry.service.allow.connect</name><value>hive,impala,hue,hdfs</value></property>
      <property><name>sentry.store.group.mapping</name><value>org.apache.sentry.provider.common.HadoopGroupMappingService</value></property>
      <property><name>sentry.service.server.keytab</name><value>sentry.keytab</value></property>
      <property><name>sentry.store.jdbc.url</name><value>jdbc:mysql://node01.test.com:3306/sentry?useUnicode=true&characterEncoding=UTF-8</value></property>
      <property><name>sentry.store.jdbc.driver</name><value>com.mysql.jdbc.Driver</value></property>
      <property><name>sentry.store.jdbc.user</name><value>sentry</value></property>
      <property><name>sentry.store.jdbc.password</name><value>********</value></property>
      <property><name>cloudera.navigator.client.config</name><value>{{CMF_CONF_DIR}}/navigator.client.properties</value></property>
      <property><name>hadoop.security.credential.provider.path</name><value>localjceks://file/{{CMF_CONF_DIR}}/creds.localjceks</value></property>
    </configuration>

- hive-site.xml

    <?xml version="1.0" encoding="UTF-8"?>
    <!--Autogenerated by Cloudera Manager-->
    <configuration>
      <property><name>hive.metastore.uris</name><value>thrift://node01.test.com:9083</value></property>
      <property><name>hive.metastore.client.socket.timeout</name><value>300</value></property>
      <property><name>hive.metastore.warehouse.dir</name><value>/user/hive/warehouse</value></property>
      <property><name>hive.warehouse.subdir.inherit.perms</name><value>true</value></property>
      <property><name>hive.log.explain.output</name><value>false</value></property>
      <property><name>hive.auto.convert.join</name><value>true</value></property>
      <property><name>hive.auto.convert.join.noconditionaltask.size</name><value>20971520</value></property>
      <property><name>hive.optimize.bucketmapjoin.sortedmerge</name><value>false</value></property>
      <property><name>hive.smbjoin.cache.rows</name><value>10000</value></property>
      <property><name>mapred.reduce.tasks</name><value>-1</value></property>
      <property><name>hive.exec.reducers.bytes.per.reducer</name><value>67108864</value></property>
      <property><name>hive.exec.copyfile.maxsize</name><value>33554432</value></property>
      <property><name>hive.exec.reducers.max</name><value>1099</value></property>
      <property><name>hive.vectorized.groupby.checkinterval</name><value>4096</value></property>
      <property><name>hive.vectorized.groupby.flush.percent</name><value>0.1</value></property>
      <property><name>hive.compute.query.using.stats</name><value>false</value></property>
      <property><name>hive.vectorized.execution.enabled</name><value>true</value></property>
      <property><name>hive.vectorized.execution.reduce.enabled</name><value>false</value></property>
      <property><name>hive.merge.mapfiles</name><value>true</value></property>
      <property><name>hive.merge.mapredfiles</name><value>false</value></property>
      <property><name>hive.cbo.enable</name><value>false</value></property>
      <property><name>hive.fetch.task.conversion</name><value>minimal</value></property>
      <property><name>hive.fetch.task.conversion.threshold</name><value>268435456</value></property>
      <property><name>hive.limit.pushdown.memory.usage</name><value>0.1</value></property>
      <property><name>hive.merge.sparkfiles</name><value>true</value></property>
      <property><name>hive.merge.smallfiles.avgsize</name><value>16777216</value></property>
      <property><name>hive.merge.size.per.task</name><value>268435456</value></property>
      <property><name>hive.optimize.reducededuplication</name><value>true</value></property>
      <property><name>hive.optimize.reducededuplication.min.reducer</name><value>4</value></property>
      <property><name>hive.map.aggr</name><value>true</value></property>
      <property><name>hive.map.aggr.hash.percentmemory</name><value>0.5</value></property>
      <property><name>hive.optimize.sort.dynamic.partition</name><value>false</value></property>
      <property><name>hive.execution.engine</name><value>mr</value></property>
      <property><name>spark.executor.memory</name><value>912680550</value></property>
      <property><name>spark.driver.memory</name><value>966367641</value></property>
      <property><name>spark.executor.cores</name><value>1</value></property>
      <property><name>spark.yarn.driver.memoryOverhead</name><value>102</value></property>
      <property><name>spark.yarn.executor.memoryOverhead</name><value>153</value></property>
      <property><name>spark.dynamicAllocation.enabled</name><value>true</value></property>
      <property><name>spark.dynamicAllocation.initialExecutors</name><value>1</value></property>
      <property><name>spark.dynamicAllocation.minExecutors</name><value>1</value></property>
      <property><name>spark.dynamicAllocation.maxExecutors</name><value>2147483647</value></property>
      <property><name>hive.metastore.execute.setugi</name><value>true</value></property>
      <property><name>hive.support.concurrency</name><value>true</value></property>
      <property><name>hive.zookeeper.quorum</name><value>node01.test.com,node02.test.com,node03.test.com</value></property>
      <property><name>hive.zookeeper.client.port</name><value>2181</value></property>
      <property><name>hbase.zookeeper.quorum</name><value>node01.test.com,node02.test.com,node03.test.com</value></property>
      <property><name>hbase.zookeeper.property.clientPort</name><value>2181</value></property>
      <property><name>hive.zookeeper.namespace</name><value>hive_zookeeper_namespace_hive</value></property>
      <property><name>hive.cluster.delegation.token.store.class</name><value>org.apache.hadoop.hive.thrift.MemoryTokenStore</value></property>
      <property><name>hive.server2.thrift.min.worker.threads</name><value>5</value></property>
      <property><name>hive.server2.thrift.max.worker.threads</name><value>100</value></property>
      <property><name>hive.server2.thrift.port</name><value>10000</value></property>
      <property><name>hive.entity.capture.input.URI</name><value>true</value></property>
      <property><name>hive.server2.enable.doAs</name><value>false</value></property>
      <property><name>hive.server2.session.check.interval</name><value>900000</value></property>
      <property><name>hive.server2.idle.session.timeout</name><value>43200000</value></property>
      <property><name>hive.server2.idle.session.timeout_check_operation</name><value>true</value></property>
      <property><name>hive.server2.idle.operation.timeout</name><value>21600000</value></property>
      <property><name>hive.server2.webui.host</name><value>0.0.0.0</value></property>
      <property><name>hive.server2.webui.port</name><value>10002</value></property>
      <property><name>hive.server2.webui.max.threads</name><value>50</value></property>
      <property><name>hive.server2.webui.use.ssl</name><value>false</value></property>
      <property><name>hive.aux.jars.path</name><value>{{HIVE_HBASE_JAR}}</value></property>
      <property><name>hive.metastore.sasl.enabled</name><value>true</value></property>
      <property><name>hive.server2.authentication</name><value>kerberos</value></property>
      <property><name>hive.metastore.kerberos.principal</name><value>hive/_HOST@TEST.COM</value></property>
      <property><name>hive.server2.authentication.kerberos.principal</name><value>hive/_HOST@TEST.COM</value></property>
      <property><name>hive.server2.authentication.kerberos.keytab</name><value>hive.keytab</value></property>
      <property><name>hive.server2.webui.use.spnego</name><value>true</value></property>
      <property><name>hive.server2.webui.spnego.keytab</name><value>hive.keytab</value></property>
      <property><name>hive.server2.webui.spnego.principal</name><value>HTTP/node01.test.com@TEST.COM</value></property>
      <property><name>cloudera.navigator.client.config</name><value>{{CMF_CONF_DIR}}/navigator.client.properties</value></property>
      <property><name>hive.metastore.event.listeners</name><value>com.cloudera.navigator.audit.hive.HiveMetaStoreEventListener</value></property>
      <property><name>hive.server2.session.hook</name><value>org.apache.sentry.binding.hive.HiveAuthzBindingSessionHook</value></property>
      <property><name>hive.sentry.conf.url</name><value>file:///{{CMF_CONF_DIR}}/sentry-site.xml</value></property>
      <property><name>hive.metastore.filter.hook</name><value>org.apache.sentry.binding.metastore.SentryMetaStoreFilterHook</value></property>
      <property><name>hive.exec.post.hooks</name><value>com.cloudera.navigator.audit.hive.HiveExecHookContext,org.apache.hadoop.hive.ql.hooks.LineageLogger</value></property>
      <property><name>hive.security.authorization.task.factory</name><value>org.apache.sentry.binding.hive.SentryHiveAuthorizationTaskFactoryImpl</value></property>
      <property><name>spark.shuffle.service.enabled</name><value>true</value></property>
      <property><name>hive.service.metrics.file.location</name><value>/var/log/hive/metrics-hiveserver2/metrics.log</value></property>
      <property><name>hive.server2.metrics.enabled</name><value>true</value></property>
      <property><name>hive.service.metrics.file.frequency</name><value>30000</value></property>
    </configuration>
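
The settings in these two files that bear most directly on this problem are `sentry.service.admin.group` in sentry-site.xml (the groups whose members Sentry treats as administrators, able to create roles and grant privileges; here hive, impala and hue) and, in hive-site.xml, `hive.server2.enable.doAs` together with the Sentry session hook and authorization task factory. Cloudera's Sentry setup documentation expects HiveServer2 impersonation to be off, which matches the `false` value above. A rough sketch for pulling those values out of the generated files follows; it uses grep and sed rather than an XML parser, so it assumes each `<value>` contains no `<`. The helper names are illustrative, and the directory argument is an assumption: pass whichever directory holds both files.

```bash
#!/usr/bin/env bash
# Sketch: show the Sentry-related settings from Cloudera Manager-generated
# hive-site.xml and sentry-site.xml files. Uses grep/sed, not an XML parser,
# so it assumes simple <name>/<value> pairs with no '<' inside values.

# get_prop FILE NAME: print the <value> of property NAME in FILE (empty if absent).
# Dots in NAME act as regex wildcards, which is harmless for these names.
get_prop() {
  local file="$1" name="$2"
  # Join all lines first so properties split across lines still match.
  tr -d '\n' < "$file" |
    grep -o "<name>${name}</name>[[:space:]]*<value>[^<]*</value>" |
    sed -e 's/.*<value>//' -e 's#</value>##' |
    head -n 1
}

# check_sentry_conf DIR: print the settings discussed above from DIR,
# which is assumed to contain both hive-site.xml and sentry-site.xml.
check_sentry_conf() {
  local dir="$1" p
  printf 'sentry.service.admin.group = %s\n' \
    "$(get_prop "$dir/sentry-site.xml" sentry.service.admin.group)"
  for p in hive.server2.enable.doAs hive.server2.session.hook \
           hive.security.authorization.task.factory; do
    printf '%s = %s\n' "$p" "$(get_prop "$dir/hive-site.xml" "$p")"
  done
}

# Only run when a directory is given, e.g. ./check_sentry_conf.sh /path/to/conf
if [[ $# -gt 0 ]]; then
  check_sentry_conf "$1"
fi
```

An empty value in the output means the property was not found in that file.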

Created 05-12-2016 06:06 AM


I finally solved it.

The problem was that I had no roles at all, and the roles created through Hue didn't work for some reason.

So I created an admin role via Beeline:

- Obtained a Kerberos ticket for the hive principal:

    kinit -k -t run/cloudera-scm-agent/process/218-hive-HIVESERVER2/hive.keytab hive/node01.test.com@TEST.COM

- Connected to Hive with Beeline, authenticated through the hive keytab:

    beeline> !connect jdbc:hive2://node01.test.com:10000/default;principal=hive/node01.test.com@TEST.COM

- Created the admin role:

    beeline> CREATE ROLE admin;

- Granted privileges to the admin role:

    GRANT ALL ON SERVER server1 TO ROLE admin WITH GRANT OPTION;

- Assigned the role to a group:

    GRANT ROLE admin TO GROUP administrators;
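
The Beeline steps above can be collected into a single script. This is a minimal sketch that has not been run against a live cluster: every value (keytab path, principals, host, port, `server1`, `admin`, `administrators`) is copied from this thread and is an assumption for any other cluster. The numbered Cloudera Manager process directory in the keytab path (218 here) is specific to that cluster and typically changes when the role is restarted. The `principal=` part of the JDBC URL names the HiveServer2 service principal, which here happens to equal the client principal. The script only prints the commands unless `--run` is given, and the helper names are illustrative.

```bash
#!/usr/bin/env bash
# Sketch of the steps above as one script. By default it only prints the
# commands (dry run); pass --run to execute them. All values come from
# this thread and are assumptions for any other cluster.
set -euo pipefail

KEYTAB="run/cloudera-scm-agent/process/218-hive-HIVESERVER2/hive.keytab"
PRINCIPAL="hive/node01.test.com@TEST.COM"      # client principal for kinit
HS2_HOST="node01.test.com"
HS2_PORT=10000
HS2_PRINCIPAL="hive/node01.test.com@TEST.COM"  # HiveServer2 service principal

# hs2_url HOST PORT SERVICE_PRINCIPAL: JDBC URL for a Kerberized HiveServer2.
hs2_url() {
  printf 'jdbc:hive2://%s:%s/default;principal=%s' "$1" "$2" "$3"
}

# bootstrap_sql ROLE GROUP SERVER: the CREATE ROLE and GRANT statements above.
bootstrap_sql() {
  local role="$1" group="$2" server="$3"
  printf 'CREATE ROLE %s; GRANT ALL ON SERVER %s TO ROLE %s WITH GRANT OPTION; GRANT ROLE %s TO GROUP %s;' \
    "$role" "$server" "$role" "$role" "$group"
}

main() {
  local url sql
  url="$(hs2_url "$HS2_HOST" "$HS2_PORT" "$HS2_PRINCIPAL")"
  sql="$(bootstrap_sql admin administrators server1)"
  if [[ "${1:-}" == "--run" ]]; then
    kinit -k -t "$KEYTAB" "$PRINCIPAL"   # obtain the hive ticket
    beeline -u "$url" -e "$sql"          # run all three statements
  else
    # Dry run: show what would be executed.
    printf 'kinit -k -t %s %s\n' "$KEYTAB" "$PRINCIPAL"
    printf 'beeline -u "%s" -e "%s"\n' "$url" "$sql"
  fi
}

main "$@"
```

Defaulting to a dry run is a deliberate choice here, since the script grants server-wide privileges with GRANT OPTION.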

After these steps, all users in the administrators group can manage Hive privileges.
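
To confirm the result, Sentry's SHOW statements (`SHOW ROLES`, `SHOW ROLE GRANT GROUP`, `SHOW GRANT ROLE`) can be run through Beeline in the same way. A minimal sketch, assuming the JDBC URL from this thread and a valid Kerberos ticket such as the hive ticket obtained with kinit; it prints the statements by default and only calls Beeline with `--run`. The function name is illustrative.

```bash
#!/usr/bin/env bash
# Sketch: list roles, the roles granted to a group, and the privileges of a
# role. Prints the statements by default; pass --run to execute them.
# The URL, role and group are the values from this thread (assumptions).
set -euo pipefail

HS2_URL="jdbc:hive2://node01.test.com:10000/default;principal=hive/node01.test.com@TEST.COM"

# verify_sql ROLE GROUP: Sentry SHOW statements for the given role and group.
verify_sql() {
  local role="$1" group="$2"
  printf 'SHOW ROLES; SHOW ROLE GRANT GROUP %s; SHOW GRANT ROLE %s;' "$group" "$role"
}

sql="$(verify_sql admin administrators)"
if [[ "${1:-}" == "--run" ]]; then
  # Assumes a valid Kerberos ticket already exists (e.g. from kinit).
  beeline -u "$HS2_URL" -e "$sql"
else
  printf '%s\n' "$sql"
fi
```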

Created 05-12-2016 06:12 AM


I'm happy to see you solved the issue. I'm even happier that you shared the steps and marked it as solved so it can help others. Thanks!!

Cy Jervis, Manager, Community Program
