Increase size of HDFS.

Labels: Apache Ambari, Apache Hadoop, HDFS

Created on 07-20-2020 01:05 PM - edited 07-20-2020 08:27 PM

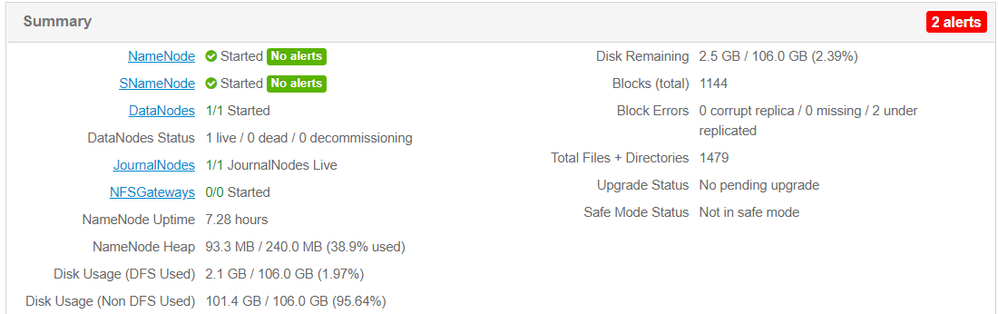

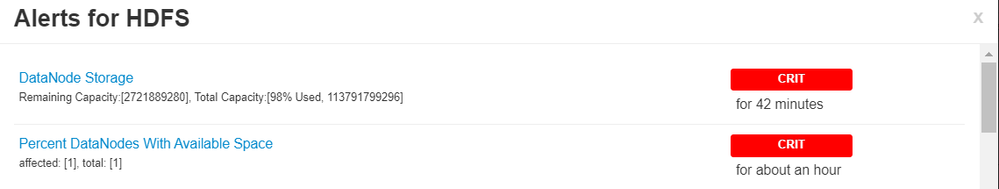

I am running Hortonworks Sandbox HDP 2.6.5 on VirtualBox. I have increased the size of my virtual hard disk (.vdi) to 500 GB. However, when I login to Ambari and view the size of my disk, it shows 106 GB only. What should I do to increase the HDFS capacity from 106 GB to 500 GB?

Created on 07-23-2020 06:18 AM - edited 07-23-2020 06:19 AM

Since the solution is scattered across many posts, I'm posting a short summary of what I did.

I am running the HDP 2.6.5 image on VirtualBox.

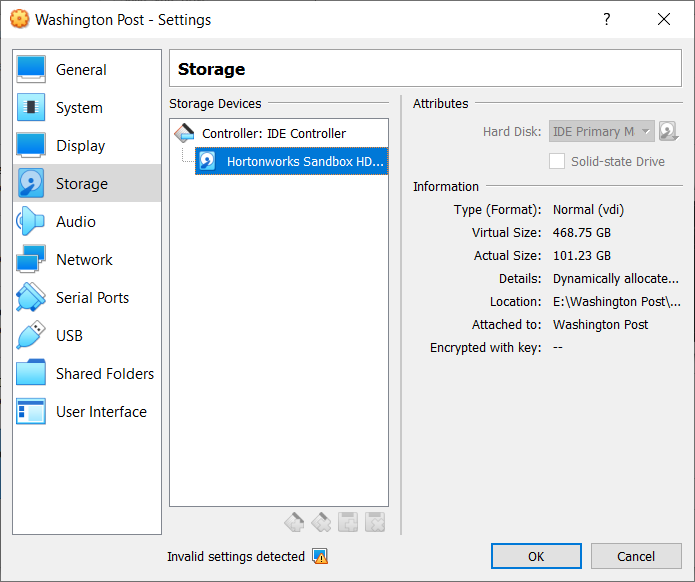

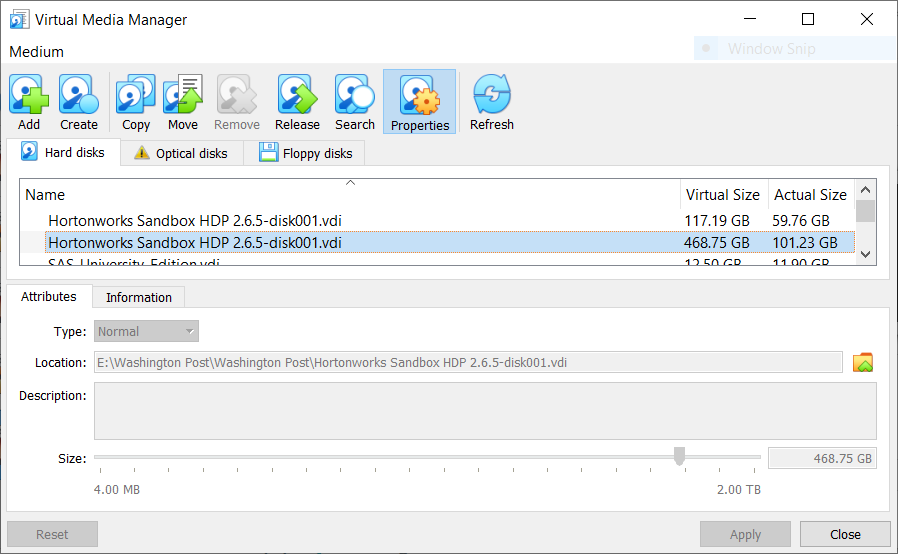

- Increased the size of my virtual hard disk through Virtual Media Manager

- In the guest OS:

  - Partitioned the unused space

  - Formatted the new partition as an ext4 file system

  - Mounted the file system

  - Update /etc/fstab (I could not do this, as I did not find that file)

- In Ambari, under the DataNode directories config, added the newly mounted file system as a comma-separated value

- Restarted HDFS. My cluster did not have any files, so I did not run the balancer command below.

sudo -u hdfs hdfs balancer

Thanks to @Shelton for his guidance.
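The guest OS steps in the summary can be sketched roughly as follows. This is a dry run that only prints the commands rather than executing them, since partitioning and formatting are destructive. The device names (/dev/sda, /dev/sda4), the mount point (/mnt/hdfs2), and the hdfs:hadoop ownership are assumptions for illustration and do not come from the thread; check `lsblk` for the real layout before running anything.

```shell
#!/usr/bin/env bash
# Dry-run sketch: prints the guest-OS commands instead of executing them.
# All device names and paths passed in are hypothetical examples.

plan_new_datanode_disk() {
  local disk="$1" part="$2" mount_point="$3"
  # fdisk is interactive: create the new partition in the unused space there.
  # The fstab line makes the mount survive a reboot.
  cat <<EOF
lsblk ${disk}
fdisk ${disk}
mkfs.ext4 ${part}
mkdir -p ${mount_point}
mount ${part} ${mount_point}
echo '${part} ${mount_point} ext4 defaults 0 2' >> /etc/fstab
mkdir -p ${mount_point}/hdfs/data
chown -R hdfs:hadoop ${mount_point}/hdfs/data
EOF
}

plan_new_datanode_disk /dev/sda /dev/sda4 /mnt/hdfs2
```

Review the printed commands and run them one by one as root once the device names match your VM. The last two lines create a directory on the new file system that the DataNode can own; that directory is what goes into the DataNode directories setting in Ambari.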

Created 07-20-2020 02:39 PM

AFAIK these sandboxes use dynamically allocated storage. You can test that by generating and loading TPC-DS data.

General usage is:

tpcds-setup.sh scale_factor [directory]

For example, the command below will generate 200 GB of TPC-DS data in /user/data [HDFS]:

./tpcds-setup.sh 200 /user/data

This should prove that the disk allocation is dynamic. See the links below for the build and setup scripts:

https://github.com/hortonworks/hive-testbench/blob/hive14/tpch-build.sh and https://github.com/hortonworks/hive-testbench/blob/hive14/tpch-setup.sh

Hope that helps

Created 07-20-2020 04:04 PM

I'll look into it. I'll have to install gcc and then later Maven to run those shell scripts. Thanks for your input.

Created 07-20-2020 08:15 PM

I don't think it is dynamically allocated, or at least it doesn't seem to be working. I've run out of space trying to load a ~70 GB file. How can I increase the capacity?

Created 07-21-2020 01:56 AM

Can you share the method you used to extend your VM disk? What is the VM disk file extension, vmdk or vdi? Note that VirtualBox does not allow resizing of vmdk images.

Does your disk show Dynamically allocated storage as shown below?

Please reply.

Created on 07-21-2020 06:42 AM - edited 07-21-2020 06:51 AM

It is a VDI. I have used Virtual Media Manager to increase the size of my disk. How can I get HDFS to expand and make use of the unallocated space?

I'm assuming this is how one would do it:

1. Create a new partition in the Guest OS and assign a mount point to it.

2. Add that path to the DataNode directories

(or)

Extend the current partition to fill the unused disk space so that DataNode automatically increases the HDFS size?

Created 07-22-2020 09:06 AM

So, I've been able to

- create a new partition

- format it as an ext4 filesystem

- mount it

How do I add this new partition to my DataNode? Is it as simple as putting the drive path in the Ambari DataNode config?

Created 07-22-2020 09:37 AM

To increase the HDFS capacity, give dfs.datanode.data.dir more mount points or directories. The new disk needs to be formatted and mounted before you add the mount point in Ambari.

In HDP using Ambari, add the new mount point to the list of directories in the dfs.datanode.data.dir property. Depending on the version of Ambari, the property may be in the advanced section; it belongs to hdfs-site.xml. The more disks you provide through the comma-separated list, the more capacity you will have. Preferably, every machine should have the same disk and mount point structure.
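As a small illustration of the comma-separated format, here is a sketch of a helper that builds the new dfs.datanode.data.dir value from the current one. The function name append_data_dir and both example paths are hypothetical; /hadoop/hdfs/data is assumed to be the existing directory and /mnt/hdfs2/hdfs/data the one on the new mount, so substitute the values your cluster actually uses.

```shell
#!/usr/bin/env bash
# Append a directory to a comma-separated dfs.datanode.data.dir value,
# leaving the value unchanged if the directory is already listed.
# Function name and example paths are hypothetical.

append_data_dir() {
  local current="$1" new_dir="$2"
  case ",${current}," in
    *",${new_dir},"*)
      # Already present: return the list as-is.
      printf '%s\n' "${current}"
      ;;
    *)
      if [ -z "${current}" ]; then
        printf '%s\n' "${new_dir}"
      else
        printf '%s\n' "${current},${new_dir}"
      fi
      ;;
  esac
}

append_data_dir "/hadoop/hdfs/data" "/mnt/hdfs2/hdfs/data"

# On a cluster node, the value currently in effect can be read with:
#   hdfs getconf -confKey dfs.datanode.data.dir
# After restarting HDFS, the new capacity should appear in:
#   sudo -u hdfs hdfs dfsadmin -report
```

Paste the resulting string into the DataNode directories field in Ambari, save the configuration, and restart HDFS.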

You will then need to run the HDFS balancer, which rebalances data across the DataNodes by moving blocks from overutilized to underutilized nodes.

Running the balancer without parameters:

sudo -u hdfs hdfs balancer

This runs the balancer with the default threshold of 10%, meaning that it will ensure that disk usage on each DataNode differs from the overall usage in the cluster by no more than 10%.

You can use a different threshold:

sudo -u hdfs hdfs balancer -threshold 5

This specifies that each DataNode's disk usage must be (or will be adjusted to be) within 5% of the cluster's overall usage.

This process can take a long time, depending on the amount of data in your cluster.
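To make the threshold rule concrete, here is a minimal sketch of the comparison it describes. It is not the balancer's own code, only the arithmetic: a node counts as balanced when its utilization is within the threshold of the cluster-wide utilization. The function name within_threshold and the percentages are made up for illustration.

```shell
#!/usr/bin/env bash
# Succeeds (exit status 0) when a DataNode's utilization is within
# `threshold` percentage points of the cluster-wide utilization.
# Illustrative only; the numbers below are made up.

within_threshold() {
  local node_pct="$1" cluster_pct="$2" threshold="$3"
  awk -v n="$node_pct" -v c="$cluster_pct" -v t="$threshold" \
    'BEGIN { d = n - c; if (d < 0) d = -d; exit !(d <= t) }'
}

# A node at 62% in a cluster at 55% is 7 points away from the average.
if within_threshold 62 55 10; then echo "balanced at 10%"; else echo "needs balancing at 10%"; fi
if within_threshold 62 55 5; then echo "balanced at 5%"; else echo "needs balancing at 5%"; fi
```

With the default threshold of 10 the example node is left alone, while with -threshold 5 the balancer would move blocks off it.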

Hope that helps

Created 07-22-2020 07:48 PM

Thank you for your inputs. I have been able to expand the size of my HDFS finally.

Created 07-23-2020 12:43 AM

Hi @focal_fossa, I'm happy to see you resolved your issue. Can you please mark the appropriate reply as the solution? It will make it easier for others to find the answer in the future.

Regards,

Vidya Sargur, Community Manager
