- How to increase the size of the base Docker for Ma...

Created on 12-07-2016 01:26 AM - edited 08-17-2019 08:12 AM

Objective

If you are managing multiple copies of the HDP sandbox for Docker (see my article here: How to manage multiple copies of the HDP Docker Sandbox), you may find yourself running out of storage within your Docker VM image on your laptop. You can increase the available storage space of the Docker VM image, which lets you keep more copies of sandbox containers in addition to other images and containers.

This tutorial will guide you through the process of increasing the size of the base Docker for Mac VM image. We will increase the size from 64GB to 120GB. This tutorial is the second in a two-part series. The first tutorial in the series is: How to move Docker for Mac vm image from internal to external hard drive.

For a tutorial on increasing the base Docker VM image on CentOS 7, read my tutorial here: How to modify the default Docker configuration on CentOS 7 to import HDP sandbox

Prerequisites

- You should have already completed the following tutorial: Installing Docker Version of Sandbox on Mac

- You should have already completed the following tutorial: How to move Docker for Mac vm image from internal to external hard drive

- You should have already installed Homebrew

- You should have an external hard drive available.

Scope

- Mac OS X 10.11.6 (El Capitan)

- Docker for Mac 1.12.1

- HDP 2.5 Docker Sandbox

- Homebrew 1.1.0

Steps

Install qemu

The Docker virtual machine image is in qcow2 format, which requires qemu to manage. Before we can manipulate our image file, we need to install qemu.

brew install qemu
==> Installing dependencies for qemu: jpeg, libpng, libtasn1, gmp, nettle, gnutls, gettext, libffi, pcre, glib, pixman
==> Installing qemu dependency: jpeg
==> Downloading https://homebrew.bintray.com/bottles/jpeg-8d.el_capitan.bottle.2.tar.gz
######################################################################## 100.0%
==> Pouring jpeg-8d.el_capitan.bottle.2.tar.gz
/usr/local/Cellar/jpeg/8d: 19 files, 713.8K
==> Installing qemu dependency: libpng
==> Downloading https://homebrew.bintray.com/bottles/libpng-1.6.26.el_capitan.bottle.tar.gz
######################################################################## 100.0%
==> Pouring libpng-1.6.26.el_capitan.bottle.tar.gz
/usr/local/Cellar/libpng/1.6.26: 26 files, 1.2M
==> Installing qemu dependency: libtasn1
==> Downloading https://homebrew.bintray.com/bottles/libtasn1-4.9.el_capitan.bottle.tar.gz
######################################################################## 100.0%
==> Pouring libtasn1-4.9.el_capitan.bottle.tar.gz
/usr/local/Cellar/libtasn1/4.9: 58 files, 437K
==> Installing qemu dependency: gmp
==> Downloading https://homebrew.bintray.com/bottles/gmp-6.1.1.el_capitan.bottle.tar.gz
######################################################################## 100.0%
==> Pouring gmp-6.1.1.el_capitan.bottle.tar.gz
/usr/local/Cellar/gmp/6.1.1: 17 files, 3.2M
==> Installing qemu dependency: nettle
==> Downloading https://homebrew.bintray.com/bottles/nettle-3.3.el_capitan.bottle.tar.gz
######################################################################## 100.0%
==> Pouring nettle-3.3.el_capitan.bottle.tar.gz
/usr/local/Cellar/nettle/3.3: 81 files, 2.0M
==> Installing qemu dependency: gnutls
==> Downloading https://homebrew.bintray.com/bottles/gnutls-3.4.16.el_capitan.bottle.tar.gz
######################################################################## 100.0%
==> Pouring gnutls-3.4.16.el_capitan.bottle.tar.gz
==> Using the sandbox
/usr/local/Cellar/gnutls/3.4.16: 1,115 files, 6.9M
==> Installing qemu dependency: gettext
==> Downloading https://homebrew.bintray.com/bottles/gettext-0.19.8.1.el_capitan.bottle.tar.gz
######################################################################## 100.0%
==> Pouring gettext-0.19.8.1.el_capitan.bottle.tar.gz
==> Caveats
This formula is keg-only, which means it was not symlinked into /usr/local.
macOS provides the BSD gettext library and some software gets confused if both are in the library path.
Generally there are no consequences of this for you. If you build your own software and it requires this formula, you'll need to add to your build variables:
LDFLAGS: -L/usr/local/opt/gettext/lib
CPPFLAGS: -I/usr/local/opt/gettext/include
==> Summary
/usr/local/Cellar/gettext/0.19.8.1: 1,934 files, 16.9M
==> Installing qemu dependency: libffi
==> Downloading https://homebrew.bintray.com/bottles/libffi-3.0.13.el_capitan.bottle.tar.gz
######################################################################## 100.0%
==> Pouring libffi-3.0.13.el_capitan.bottle.tar.gz
==> Caveats
This formula is keg-only, which means it was not symlinked into /usr/local.
Some formulae require a newer version of libffi.
Generally there are no consequences of this for you. If you build your own software and it requires this formula, you'll need to add to your build variables:
LDFLAGS: -L/usr/local/opt/libffi/lib
==> Summary
/usr/local/Cellar/libffi/3.0.13: 15 files, 374.7K
==> Installing qemu dependency: pcre
==> Downloading https://homebrew.bintray.com/bottles/pcre-8.39.el_capitan.bottle.tar.gz
######################################################################## 100.0%
==> Pouring pcre-8.39.el_capitan.bottle.tar.gz
/usr/local/Cellar/pcre/8.39: 203 files, 5.4M
==> Installing qemu dependency: glib
==> Downloading https://homebrew.bintray.com/bottles/glib-2.50.1.el_capitan.bottle.tar.gz
######################################################################## 100.0%
==> Pouring glib-2.50.1.el_capitan.bottle.tar.gz
/usr/local/Cellar/glib/2.50.1: 427 files, 22.3M
==> Installing qemu dependency: pixman
==> Downloading https://homebrew.bintray.com/bottles/pixman-0.34.0.el_capitan.bottle.tar.gz
######################################################################## 100.0%
==> Pouring pixman-0.34.0.el_capitan.bottle.tar.gz
/usr/local/Cellar/pixman/0.34.0: 12 files, 1.2M
==> Installing qemu
==> Downloading https://homebrew.bintray.com/bottles/qemu-2.7.0.el_capitan.bottle.tar.gz
######################################################################## 100.0%
==> Pouring qemu-2.7.0.el_capitan.bottle.tar.gz
/usr/local/Cellar/qemu/2.7.0: 126 files, 139.8M

NOTE: This may take several minutes.
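Once the install finishes, it can be worth confirming that the qemu-img tool used later in this tutorial is actually on your PATH. The following is a minimal sketch; require_tool is a hypothetical helper name chosen for illustration, not something provided by Homebrew or qemu.

```shell
#!/bin/sh
# require_tool is a hypothetical helper: it succeeds only if the named
# command can be found on the PATH.
require_tool() {
  if command -v "$1" >/dev/null 2>&1; then
    echo "found: $1"
  else
    echo "missing: $1" >&2
    return 1
  fi
}

# Usage (run manually after the install completes):
#   require_tool qemu-img && qemu-img --version
```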

Export Docker containers

Before we make any changes to our Docker VM image, we should back up any containers we want to save. This is not a typical Docker use case, as most Docker containers are ephemeral. However, we want to save any configuration changes we've made to our sandbox containers.

To get a list of containers use the docker ps -a command. This will show all containers, running or not. You should see something similar to this:

docker ps -a
CONTAINER ID   IMAGE          COMMAND               CREATED       STATUS                    PORTS   NAMES
15411dc968ad   fc813bdc4bdd   "/usr/sbin/sshd -D"   4 weeks ago   Exited (0) 23 hours ago           hdp25-atlas-demo

As you can see I have a single container which I use for Atlas demos. If you followed my article above for managing multiple copies of the sandbox, you will note the name of the sandbox is based on my project directory. If you have not followed that tutorial, then the NAMES column will display sandbox which is the default container name. I want to save that container to avoid having to redo any configuration and setup tasks that I've already completed.

Using the docker export command, we can create a saved image of our container. This image can be imported into Docker later. To read more about the docker export command look here: docker export. You should give the --output parameter a filename that makes sense. You should see something similar to this:

cd ~
docker export --output="hdp25-atlas-demo.tar" hdp25-atlas-demo

NOTE: This may take several minutes.

You can check the size of the container export. You should see something like this:

ls -lah hdp25-atlas-demo.tar
-rw------- 1 myoung staff 14G Nov 9 11:57 hdp25-atlas-demo.tar

You shouldn't need to save your Docker images as they can easily be imported again and contain no custom configurations.
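If you have several containers to save, the export step can be repeated in a loop. The sketch below assumes every container listed by docker ps -a should be exported; export_all_containers is a hypothetical helper name, and the target directory argument is an illustration rather than part of the original steps.

```shell
#!/bin/sh
# export_all_containers is a hypothetical helper. It writes one
# <container-name>.tar file per container into the target directory
# (default: your home directory).
export_all_containers() {
  target_dir=${1:-$HOME}
  # --format '{{.Names}}' prints only the container names, one per line.
  # Container names cannot contain whitespace, so word splitting is safe.
  for name in $(docker ps -a --format '{{.Names}}'); do
    echo "Exporting $name to $target_dir/$name.tar"
    docker export --output="$target_dir/$name.tar" "$name" || return 1
  done
}

# Usage:
#   export_all_containers ~
```

Each export can take several minutes and produce a file of many gigabytes, so check that the target directory has enough free space first.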

Stop Docker for Mac

Let's check the storage size available with our current virtual machine image.

docker run --rm alpine df -h
Filesystem                Size      Used Available Use% Mounted on
none                     59.0G     35.7G     20.3G  64% /
tmpfs                     5.9G         0      5.9G   0% /dev
tmpfs                     5.9G         0      5.9G   0% /sys/fs/cgroup
/dev/vda2                59.0G     35.7G     20.3G  64% /etc/resolv.conf
/dev/vda2                59.0G     35.7G     20.3G  64% /etc/hostname
/dev/vda2                59.0G     35.7G     20.3G  64% /etc/hosts
shm                      64.0M         0     64.0M   0% /dev/shm
tmpfs                     5.9G         0      5.9G   0% /proc/kcore
tmpfs                     5.9G         0      5.9G   0% /proc/timer_list
tmpfs                     5.9G         0      5.9G   0% /proc/sched_debug

Notice that our / partition is 59GB in size. Another 5.9GB is used for the other partitions. That brings us up to our 64GB file size.

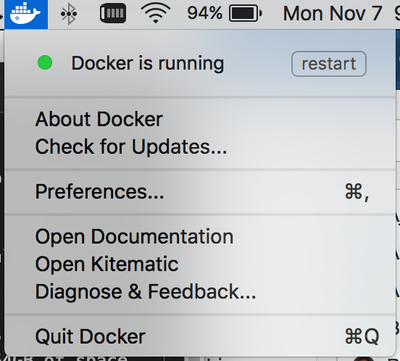

Before we can make any changes to the Docker virtual machine image, we need to stop Docker for Mac. There should be a Docker for Mac icon in the menu bar. You should see something similar to this:

You can also check via the command line via the ps -ef | grep -i com.docker. You should see something similar to this:

ps -ef | grep -i com.docker

0 123 1 0 8:45AM ?? 0:00.01 /Library/PrivilegedHelperTools/com.docker.vmnetd

502 967 876 0 8:46AM ?? 0:00.08 /Applications/Docker.app/Contents/MacOS/com.docker.osx.hyperkit.linux -watchdog fd:0

502 969 967 0 8:46AM ?? 0:00.04 /Applications/Docker.app/Contents/MacOS/com.docker.osx.hyperkit.linux -watchdog fd:0

502 971 967 0 8:46AM ?? 0:07.96 com.docker.db --url fd:3 --git /Users/myoung/Library/Containers/com.docker.docker/Data/database

502 975 967 0 8:46AM ?? 0:03.40 com.docker.osx.hyperkit.linux

502 977 975 0 8:46AM ?? 0:00.03 /Applications/Docker.app/Contents/MacOS/com.docker.osx.hyperkit.linux

502 12807 967 0 9:17PM ?? 0:00.08 com.docker.osxfs --address fd:3 --connect /Users/myoung/Library/Containers/com.docker.docker/Data/@connect --control fd:4 --volume-control fd:5 --database /Users/myoung/Library/Containers/com.docker.docker/Data/s40

502 12810 967 0 9:17PM ?? 0:00.12 com.docker.slirp --db /Users/myoung/Library/Containers/com.docker.docker/Data/s40 --ethernet fd:3 --port fd:4 --vsock-path /Users/myoung/Library/Containers/com.docker.docker/Data/@connect --max-connections 900

502 12811 967 0 9:17PM ?? 0:00.19 com.docker.driver.amd64-linux -db /Users/myoung/Library/Containers/com.docker.docker/Data/s40 -osxfs-volume /Users/myoung/Library/Containers/com.docker.docker/Data/s30 -slirp /Users/myoung/Library/Containers/com.docker.docker/Data/s50 -vmnet /var/tmp/com.docker.vmnetd.socket -port /Users/myoung/Library/Containers/com.docker.docker/Data/s51 -vsock /Users/myoung/Library/Containers/com.docker.docker/Data -docker /Users/myoung/Library/Containers/com.docker.docker/Data/s60 -addr fd:3 -debug

502 12812 12811 0 9:17PM ?? 0:00.02 /Applications/Docker.app/Contents/MacOS/com.docker.driver.amd64-linux -db /Users/myoung/Library/Containers/com.docker.docker/Data/s40 -osxfs-volume /Users/myoung/Library/Containers/com.docker.docker/Data/s30 -slirp /Users/myoung/Library/Containers/com.docker.docker/Data/s50 -vmnet /var/tmp/com.docker.vmnetd.socket -port /Users/myoung/Library/Containers/com.docker.docker/Data/s51 -vsock /Users/myoung/Library/Containers/com.docker.docker/Data -docker /Users/myoung/Library/Containers/com.docker.docker/Data/s60 -addr fd:3 -debug

502 12814 12811 0 9:17PM ?? 0:16.48 /Applications/Docker.app/Contents/MacOS/com.docker.hyperkit -A -m 12G -c 6 -u -s 0:0,hostbridge -s 31,lpc -s 2:0,virtio-vpnkit,uuid=1f629fed-1ef6-4f34-8fce-753347e3b941,path=/Users/myoung/Library/Containers/com.docker.docker/Data/s50,macfile=/Users/myoung/Library/Containers/com.docker.docker/Data/com.docker.driver.amd64-linux/mac.0 -s 3,virtio-blk,file:///Users/myoung/Library/Containers/com.docker.docker/Data/com.docker.driver.amd64-linux/Docker.qcow2,format=qcow -s 4,virtio-9p,path=/Users/myoung/Library/Containers/com.docker.docker/Data/s40,tag=db -s 5,virtio-rnd -s 6,virtio-9p,path=/Users/myoung/Library/Containers/com.docker.docker/Data/s51,tag=port -s 7,virtio-sock,guest_cid=3,path=/Users/myoung/Library/Containers/com.docker.docker/Data,guest_forwards=2376;1525 -l com1,autopty=/Users/myoung/Library/Containers/com.docker.docker/Data/com.docker.driver.amd64-linux/tty,log=/Users/myoung/Library/Containers/com.docker.docker/Data/com.docker.driver.amd64-linux/console-ring -f kexec,/Applications/Docker.app/Contents/Resources/moby/vmlinuz64,/Applications/Docker.app/Contents/Resources/moby/initrd.img,earlyprintk=serial console=ttyS0 com.docker.driver="com.docker.driver.amd64-linux", com.docker.database="com.docker.driver.amd64-linux" ntp=gateway mobyplatform=mac -F /Users/myoung/Library/Containers/com.docker.docker/Data/com.docker.driver.amd64-linux/hypervisor.pid

502 13790 876 0 9:52PM ?? 0:00.01 /Applications/Docker.app/Contents/MacOS/com.docker.frontend {"action":"vmstateevent","args":{"vmstate":"running"}}

502 13791 13790 0 9:52PM ?? 0:00.01 /Applications/Docker.app/Contents/MacOS/com.docker.frontend {"action":"vmstateevent","args":{"vmstate":"running"}}

502 13793 13146 0 9:52PM ttys000 0:00.00 grep -i com.docker

Now we will stop Docker for Mac. Using the menu shown above, click on the Quit Docker menu option. This will stop Docker for Mac. You should notice the Docker for Mac icon is no longer visible. Now let's confirm the Docker processes we saw before are no longer running.

ps -ef | grep -i com.docker
0 123 1 0 8:45AM ?? 0:00.01 /Library/PrivilegedHelperTools/com.docker.vmnetd
502 13815 13146 0 9:54PM ttys000 0:00.00 grep -i com.docker

NOTE: It may take a few seconds before Docker for Mac is completely stopped. It is OK for com.docker.vmnetd to still be running.
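Because shutdown can take a few seconds, you may prefer to poll rather than re-run the ps command by hand. This is a rough sketch, assuming the same process names shown in the output above; count_docker_procs and wait_for_docker_stop are hypothetical helper names.

```shell
#!/bin/sh
# count_docker_procs is a hypothetical helper. It reads `ps -ef` output on
# stdin and counts com.docker processes, ignoring the vmnetd helper (which
# may keep running) and any grep command line.
count_docker_procs() {
  grep -i 'com\.docker' | grep -v 'com\.docker\.vmnetd' | grep -v 'grep -i' | wc -l | tr -d ' '
}

# wait_for_docker_stop polls once per second until no such processes
# remain, giving up after the given number of tries (default 30).
wait_for_docker_stop() {
  tries=${1:-30}
  while [ "$tries" -gt 0 ]; do
    if [ "$(ps -ef | count_docker_procs)" = "0" ]; then
      return 0
    fi
    tries=$((tries - 1))
    sleep 1
  done
  return 1
}

# Usage:
#   wait_for_docker_stop 60 && echo "Docker for Mac has stopped"
```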

Create new Docker template image

Docker uses a template image file. That file is copied from /Applications/Docker.app/Contents/Resources/moby/data.qcow2 to ~/Library/Containers/com.docker.docker/Data/com.docker.driver.amd64-linux/Docker.qcow2 when Docker sees you are missing the Docker.qcow2 file. We are going to create a new template image file. In my case I want to create a file that is 120GB in size. You should see something similar to this:

cd ~
qemu-img create -f qcow2 ~/data.qcow2 120G
Formatting '/Users/myoung/data.qcow2', fmt=qcow2 size=128849018880 encryption=off cluster_size=65536 lazy_refcounts=off refcount_bits=16
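The size=128849018880 value in the qemu-img output is 120G expressed in bytes, with the G suffix treated as 1024 x 1024 x 1024. A small sketch of that arithmetic follows; gib_to_bytes is a hypothetical helper name used only for illustration.

```shell
#!/bin/sh
# gib_to_bytes is a hypothetical helper: it converts a size in GiB to bytes.
gib_to_bytes() {
  echo $(( $1 * 1024 * 1024 * 1024 ))
}

gib_to_bytes 120   # prints 128849018880, the new template image size
gib_to_bytes 64    # prints 68719476736, the original image size
```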

Now let's see how big the file is. You should see something similar to this:

ls -lah data.qcow2
-rw-r--r-- 1 myoung staff 194K Nov 9 19:30 data.qcow2

That is interesting; the file is only 194KB in size. Why? Because a qcow2 image behaves much like a sparse file: space is allocated only as you add content to it, such as images and containers. You can read more here: Sparse Files. The file will continue to grow as you use Docker, up to a maximum of 120GB.
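To compare the small file on disk with the 120GB capacity recorded inside the image, you can ask qemu-img to describe it. This is a minimal sketch; show_image_sizes is a hypothetical helper name, and the qemu-img info call is skipped when the tool is not installed.

```shell
#!/bin/sh
# show_image_sizes is a hypothetical helper. It prints the size of the
# image file in bytes and, when qemu-img is installed, the details
# recorded in the image header (including its virtual size).
show_image_sizes() {
  image=$1
  if [ ! -f "$image" ]; then
    echo "no such file: $image" >&2
    return 1
  fi
  echo "file size in bytes: $(wc -c < "$image" | tr -d ' ')"
  if command -v qemu-img >/dev/null 2>&1; then
    qemu-img info "$image"
  fi
}

# Usage:
#   show_image_sizes ~/data.qcow2
```

In the qemu-img info output, the "virtual size" line should report the capacity requested at creation time, while "disk size" reports the space currently used.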

NOTE: On Linux systems, this process is more involved than it is for the Mac. You have to change the Docker configuration to define a new setting --storage-opt=dm.basesize=30G, or whatever size is appropriate for your environment. There is a link to my Linux article on this topic at the top of the page.

Back up default template image file

We should back up our default template image file just to be safe.

mv /Applications/Docker.app/Contents/Resources/moby/data.qcow2 /Applications/Docker.app/Contents/Resources/moby/data.qcow2.backup

Let's make sure the file exists.

ls -lah /Applications/Docker.app/Contents/Resources/moby/data.qcow2.backup
-rw-r--r-- 1 myoung admin 320K Nov 8 12:36 /Applications/Docker.app/Contents/Resources/moby/data.qcow2.backup

Now we can cp our new template file into place.

cp data.qcow2 /Applications/Docker.app/Contents/Resources/moby/data.qcow2

Again, let's make sure the file exists.

ls -lah /Applications/Docker.app/Contents/Resources/moby/data.qcow2*
-rw-r--r-- 1 myoung admin 194K Nov 9 19:38 /Applications/Docker.app/Contents/Resources/moby/data.qcow2
-rw-r--r-- 1 myoung admin 320K Nov 8 12:36 /Applications/Docker.app/Contents/Resources/moby/data.qcow2.backup

Delete current Docker vm image file

Now we need to delete the current Docker VM image file located at ~/Library/Containers/com.docker.docker/Data/com.docker.driver.amd64-linux/Docker.qcow2. We need to do this so that Docker creates a new image file from the template baseline image we just created. We backed up our containers in previous steps, so we shouldn't have to worry about losing anything. You can decide to back up this file instead of deleting it, just to be safe.

mv ~/Library/Containers/com.docker.docker/Data/com.docker.driver.amd64-linux/Docker.qcow2 ~/Library/Containers/com.docker.docker/Data/com.docker.driver.amd64-linux/Docker.qcow2.backup

NOTE: You must have sufficient storage space to hold two copies of the Docker.qcow2 file.
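A rough way to check this before proceeding is to compare the size of the existing image with the free space on the same filesystem. The sketch below is only a heuristic, since the new image may eventually grow larger than the old one; free_kib and has_room_for are hypothetical helper names.

```shell
#!/bin/sh
# free_kib prints the free space, in KiB, of the filesystem holding a path.
# -P asks df for the portable format with one line per filesystem.
free_kib() {
  df -Pk "$1" | awk 'NR == 2 { print $4 }'
}

# has_room_for succeeds when the filesystem holding dir has at least as
# much free space as file currently occupies. Both names are hypothetical.
has_room_for() {
  file=$1
  dir=$2
  needed=$(du -k "$file" | awk '{ print $1 }')
  [ "$(free_kib "$dir")" -ge "$needed" ]
}

# Usage:
#   d=~/Library/Containers/com.docker.docker/Data/com.docker.driver.amd64-linux
#   has_room_for "$d/Docker.qcow2.backup" "$d" || echo "Not enough free space"
```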

Now let's make sure the file has been moved.

ls -lah ~/Library/Containers/com.docker.docker/Data/com.docker.driver.amd64-linux/Docker.qcow2*
-rw-r--r-- 1 myoung staff 64G Nov 9 19:30 /Users/myoung/Library/Containers/com.docker.docker/Data/com.docker.driver.amd64-linux/Docker.qcow2.backup

Now restart Docker for Mac

Now we can restart Docker for Mac. This is done by running the application from the Applications folder in the Finder. You should see something similar to this:

Double-click on the Docker application to start it. You should notice the Docker for Mac icon is now back in the menu bar. You can also check via ps -ef | grep -i com.docker. You should see something similar to this:

ps -ef | grep -i com.docker

0 123 1 0 8:45AM ?? 0:00.01 /Library/PrivilegedHelperTools/com.docker.vmnetd

502 14476 14465 0 10:42PM ?? 0:00.03 /Applications/Docker.app/Contents/MacOS/com.docker.osx.hyperkit.linux -watchdog fd:0

502 14479 14476 0 10:42PM ?? 0:00.01 /Applications/Docker.app/Contents/MacOS/com.docker.osx.hyperkit.linux -watchdog fd:0

502 14480 14476 0 10:42PM ?? 0:00.29 com.docker.db --url fd:3 --git /Users/myoung/Library/Containers/com.docker.docker/Data/database

502 14481 14476 0 10:42PM ?? 0:00.08 com.docker.osxfs --address fd:3 --connect /Users/myoung/Library/Containers/com.docker.docker/Data/@connect --control fd:4 --volume-control fd:5 --database /Users/myoung/Library/Containers/com.docker.docker/Data/s40

502 14482 14476 0 10:42PM ?? 0:00.04 com.docker.slirp --db /Users/myoung/Library/Containers/com.docker.docker/Data/s40 --ethernet fd:3 --port fd:4 --vsock-path /Users/myoung/Library/Containers/com.docker.docker/Data/@connect --max-connections 900

502 14483 14476 0 10:42PM ?? 0:00.05 com.docker.osx.hyperkit.linux

502 14484 14476 0 10:42PM ?? 0:00.08 com.docker.driver.amd64-linux -db /Users/myoung/Library/Containers/com.docker.docker/Data/s40 -osxfs-volume /Users/myoung/Library/Containers/com.docker.docker/Data/s30 -slirp /Users/myoung/Library/Containers/com.docker.docker/Data/s50 -vmnet /var/tmp/com.docker.vmnetd.socket -port /Users/myoung/Library/Containers/com.docker.docker/Data/s51 -vsock /Users/myoung/Library/Containers/com.docker.docker/Data -docker /Users/myoung/Library/Containers/com.docker.docker/Data/s60 -addr fd:3 -debug

502 14485 14483 0 10:42PM ?? 0:00.01 /Applications/Docker.app/Contents/MacOS/com.docker.osx.hyperkit.linux

502 14486 14484 0 10:42PM ?? 0:00.01 /Applications/Docker.app/Contents/MacOS/com.docker.driver.amd64-linux -db /Users/myoung/Library/Containers/com.docker.docker/Data/s40 -osxfs-volume /Users/myoung/Library/Containers/com.docker.docker/Data/s30 -slirp /Users/myoung/Library/Containers/com.docker.docker/Data/s50 -vmnet /var/tmp/com.docker.vmnetd.socket -port /Users/myoung/Library/Containers/com.docker.docker/Data/s51 -vsock /Users/myoung/Library/Containers/com.docker.docker/Data -docker /Users/myoung/Library/Containers/com.docker.docker/Data/s60 -addr fd:3 -debug

502 14488 14484 0 10:42PM ?? 0:07.90 /Applications/Docker.app/Contents/MacOS/com.docker.hyperkit -A -m 12G -c 6 -u -s 0:0,hostbridge -s 31,lpc -s 2:0,virtio-vpnkit,uuid=1f629fed-1ef6-4f34-8fce-753347e3b941,path=/Users/myoung/Library/Containers/com.docker.docker/Data/s50,macfile=/Users/myoung/Library/Containers/com.docker.docker/Data/com.docker.driver.amd64-linux/mac.0 -s 3,virtio-blk,file:///Users/myoung/Library/Containers/com.docker.docker/Data/com.docker.driver.amd64-linux/Docker.qcow2,format=qcow -s 4,virtio-9p,path=/Users/myoung/Library/Containers/com.docker.docker/Data/s40,tag=db -s 5,virtio-rnd -s 6,virtio-9p,path=/Users/myoung/Library/Containers/com.docker.docker/Data/s51,tag=port -s 7,virtio-sock,guest_cid=3,path=/Users/myoung/Library/Containers/com.docker.docker/Data,guest_forwards=2376;1525 -l com1,autopty=/Users/myoung/Library/Containers/com.docker.docker/Data/com.docker.driver.amd64-linux/tty,log=/Users/myoung/Library/Containers/com.docker.docker/Data/com.docker.driver.amd64-linux/console-ring -f kexec,/Applications/Docker.app/Contents/Resources/moby/vmlinuz64,/Applications/Docker.app/Contents/Resources/moby/initrd.img,earlyprintk=serial console=ttyS0 com.docker.driver="com.docker.driver.amd64-linux", com.docker.database="com.docker.driver.amd64-linux" ntp=gateway mobyplatform=mac -F /Users/myoung/Library/Containers/com.docker.docker/Data/com.docker.driver.amd64-linux/hypervisor.pid

502 14559 14465 0 10:46PM ?? 0:00.01 /Applications/Docker.app/Contents/MacOS/com.docker.frontend {"action":"vmstateevent","args":{"vmstate":"running"}}

502 14560 14559 0 10:46PM ?? 0:00.01 /Applications/Docker.app/Contents/MacOS/com.docker.frontend {"action":"vmstateevent","args":{"vmstate":"running"}}

502 14562 13146 0 10:46PM ttys000 0:00.00 grep -i com.docker

You should notice the Docker processes are running again. You can also check the timestamp of files in the Docker image directory:

ls -lah ~/Library/Containers/com.docker.docker/Data/com.docker.driver.amd64-linux/Docker.qcow2*
-rw-r--r-- 1 myoung staff 179M Nov 9 19:46 /Users/myoung/Library/Containers/com.docker.docker/Data/com.docker.driver.amd64-linux/Docker.qcow2
-rw-r--r-- 1 myoung staff 64G Nov 9 19:45 /Users/myoung/Library/Containers/com.docker.docker/Data/com.docker.driver.amd64-linux/Docker.qcow2.backup

You should notice our backup file exists and is 64GB. The new file was created and is 179MB. As mentioned above, the new file will continue to grow as you use Docker.

Let's check our available disk space in our Docker vm.

docker run --rm alpine df -h
Unable to find image 'alpine:latest' locally
latest: Pulling from library/alpine
3690ec4760f9: Pull complete
Digest: sha256:1354db23ff5478120c980eca1611a51c9f2b88b61f24283ee8200bf9a54f2e5c
Status: Downloaded newer image for alpine:latest
Filesystem                Size      Used Available Use% Mounted on
none                    114.1G     66.7M    108.2G   0% /
tmpfs                     5.9G         0      5.9G   0% /dev
tmpfs                     5.9G         0      5.9G   0% /sys/fs/cgroup
/dev/vda2               114.1G     66.7M    108.2G   0% /etc/resolv.conf
/dev/vda2               114.1G     66.7M    108.2G   0% /etc/hostname
/dev/vda2               114.1G     66.7M    108.2G   0% /etc/hosts
shm                      64.0M         0     64.0M   0% /dev/shm
tmpfs                     5.9G         0      5.9G   0% /proc/kcore
tmpfs                     5.9G         0      5.9G   0% /proc/timer_list
tmpfs                     5.9G         0      5.9G   0% /proc/sched_debug

Notice the alpine:latest image didn't exist locally and was downloaded. That is because we are using a new VM image. Also notice that our / partition is now 114.1GB in size, with another 5.9GB in use for the other partitions. That brings our total space up to 120GB.
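If you only care about the size of the / partition, the df output can be filtered down to that one value. This is a small sketch; root_size is a hypothetical helper name.

```shell
#!/bin/sh
# root_size is a hypothetical helper: it reads `df -h` output on stdin and
# prints the Size column for the filesystem mounted on /.
root_size() {
  awk '$NF == "/" { print $2 }'
}

# Usage against the running Docker VM:
#   docker run --rm alpine df -h | root_size
```

With the output shown above, this would print 114.1G.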

Import Docker container

Now that Docker for Mac is running, we need to import the saved container images we created earlier. We will use the docker import command to do this. To read more about the docker import command look here: docker import.

cd ~
docker import hdp25-atlas-demo.tar atlas-demo:latest
sha256:c793528e25bd95564c8cbf4b1487c47107d0cc300b1929b8a0fdeff93ed84eae

NOTE: This may take up to an hour for this first import.

Let's look at our list of images now.

docker images
REPOSITORY   TAG      IMAGE ID       CREATED         SIZE
atlas-demo   latest   ad47bef4e526   2 minutes ago   13.93 GB
alpine       latest   baa5d63471ea   3 weeks ago     4.803 MB

You should notice the repository and tag line up with what we provided to the import command.
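If you exported several containers, the import can be scripted in the same way. The sketch below assumes each export is a .tar file whose name should become the repository name with a latest tag; image_ref_for and import_all are hypothetical helper names, and this naming scheme is an illustration rather than what the steps above use.

```shell
#!/bin/sh
# image_ref_for is a hypothetical helper: it derives a repository:tag
# reference from an export file name, so hdp25-atlas-demo.tar becomes
# hdp25-atlas-demo:latest.
image_ref_for() {
  echo "$(basename "$1" .tar):latest"
}

# import_all is also hypothetical: it imports every .tar file found in
# the given directory (default: your home directory).
import_all() {
  dir=${1:-$HOME}
  for tarball in "$dir"/*.tar; do
    [ -f "$tarball" ] || continue
    echo "Importing $tarball as $(image_ref_for "$tarball")"
    docker import "$tarball" "$(image_ref_for "$tarball")" || return 1
  done
}

# Usage:
#   import_all ~
```

Note that this imports every .tar file in the directory, so keep unrelated archives elsewhere. Docker repository names must be lowercase, so rename any export file that contains uppercase letters first.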

Create new sandbox container

Our saved container was imported as an image. If your container was created using project directories based on this tutorial How to manage multiple copies of the HDP Docker Sandbox, then you will need to make a change before you can start the container.

You need to modify the create-container.sh script. That script is creating a container based on an image called sandbox. You need to modify that script to instead create a container based on an image called atlas-demo. This assumes you called your repository atlas-demo when you imported the image. Here is a copy of my modified create-container.sh script:

docker run -v `pwd`:/mount -v ${PROJ_DIR}:/hadoop --name ${PROJ_DIR} --hostname "sandbox.hortonworks.com" --privileged -d \
-p 6080:6080 -p 9090:9090 -p 9000:9000 -p 8000:8000 -p 8020:8020 -p 42111:42111 \
-p 10500:10500 -p 16030:16030 -p 8042:8042 -p 8040:8040 -p 2100:2100 -p 4200:4200 \
-p 4040:4040 -p 8050:8050 -p 9996:9996 -p 9995:9995 -p 8080:8080 -p 8088:8088 \
-p 8886:8886 -p 8889:8889 -p 8443:8443 -p 8744:8744 -p 8888:8888 -p 8188:8188 \
-p 8983:8983 -p 1000:1000 -p 1100:1100 -p 11000:11000 -p 10001:10001 -p 15000:15000 \
-p 10000:10000 -p 8993:8993 -p 1988:1988 -p 5007:5007 -p 50070:50070 -p 19888:19888 \
-p 16010:16010 -p 50111:50111 -p 50075:50075 -p 50095:50095 -p 18080:18080 -p 60000:60000 \
-p 8090:8090 -p 8091:8091 -p 8005:8005 -p 8086:8086 -p 8082:8082 -p 60080:60080 \
-p 8765:8765 -p 5011:5011 -p 6001:6001 -p 6003:6003 -p 6008:6008 -p 1220:1220 \
-p 21000:21000 -p 6188:6188 -p 61888:61888 -p 2181:2181 -p 2222:22 \
atlas-demo /usr/sbin/sshd -D

Notice the last line uses atlas-demo instead of sandbox. Once you run this command, your container will be created and running. You do not need to modify the start-container.sh, ssh-container.sh or stop-container.sh scripts. They all use ${PROJ_DIR}, which is one of the reasons these scripts are so handy.
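Rather than editing the script by hand, the image name can be swapped with sed. This is a sketch under the assumption that your script ends with the image name followed by /usr/sbin/sshd -D, as in the command above; swap_image is a hypothetical helper name. It writes the result to standard output instead of editing in place, because the in-place flag of sed behaves differently on macOS and Linux.

```shell
#!/bin/sh
# swap_image is a hypothetical helper. It prints a copy of a script with
# the image name in front of "/usr/sbin/sshd -D" replaced, leaving other
# occurrences of the word (such as the hostname) untouched.
swap_image() {
  script=$1
  old=$2
  new=$3
  sed "s|$old /usr/sbin/sshd -D|$new /usr/sbin/sshd -D|" "$script"
}

# Usage:
#   swap_image create-container.sh sandbox atlas-demo > create-container.new
#   mv create-container.new create-container.sh && chmod +x create-container.sh
```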

Connect to the sandbox

Now that the container is started, we can connect to it. We can use our helper script ssh-container.sh to make it easy:

./ssh-container.sh

When you created a new sandbox container before, you were prompted to reset the root password. You were not prompted this time, because our existing configuration is part of the image now.

Start the sandbox processes

When the container starts up, it doesn't automatically start the sandbox processes. You can do that by running /etc/init.d/startup_script with the start argument. You should see something similar to this:

[root@sandbox ~]# /etc/init.d/startup_script start
Starting tutorials... [ Ok ]
Starting startup_script...
Starting HDP ...
Starting mysql [ OK ]
Starting Flume [ OK ]
Starting Postgre SQL [ OK ]
Starting Ranger-admin [WARNINGS]
find: failed to restore initial working directory: Permission denied
Starting data node [ OK ]
Starting name node [ OK ]
Safe mode is OFF
Starting Oozie [ OK ]
Starting Ranger-usersync [ OK ]
Starting Zookeeper nodes [ OK ]
Starting NFS portmap [ OK ]
Starting Hdfs nfs [ OK ]
Starting Hive server [ OK ]
Starting Hiveserver2 [ OK ]
Starting Ambari server [ OK ]
Starting Ambari agent [ OK ]
Starting Node manager [ OK ]
Starting Yarn history server [ OK ]
Starting Webhcat server [ OK ]
Starting Spark [ OK ]
Starting Mapred history server [ OK ]
Starting Zeppelin [ OK ]
Starting Resource manager [ OK ]
Safe mode is OFF
Starting sandbox...
/etc/init.d/startup_script: line 97: /proc/sys/kernel/hung_task_timeout_secs: No such file or directory
Starting shellinaboxd: [ OK ]

NOTE: You can ignore any warnings or errors that are displayed.

Now the sandbox processes are running and you can access the Ambari interface at http://localhost:8080. Log in with the raj_ops username and password. You should notice all of the configurations you previously had are still there. In my case I had turned maintenance mode on for Zeppelin and off for some other services, and they were as I expected them.
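Ambari may not answer immediately after the startup script returns. A simple way to wait for it is to poll the URL with curl until it responds. This is a sketch only; wait_for_url is a hypothetical helper name, and the retry count and delay are arbitrary defaults.

```shell
#!/bin/sh
# wait_for_url is a hypothetical helper. It polls a URL until it returns
# a 2xx or 3xx HTTP status, or gives up after the given number of tries.
wait_for_url() {
  url=$1
  tries=${2:-30}
  delay=${3:-10}
  i=0
  while [ "$i" -lt "$tries" ]; do
    # -w '%{http_code}' prints only the status code; curl reports 000
    # when it cannot connect at all.
    code=$(curl -s -o /dev/null -w '%{http_code}' "$url" || true)
    case "$code" in
      2??|3??) return 0 ;;
    esac
    i=$((i + 1))
    sleep "$delay"
  done
  return 1
}

# Usage:
#   wait_for_url http://localhost:8080 && echo "Ambari is responding"
```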

Create new sandbox containers

If you want to create new sandbox containers using the baseline image, make sure you have imported the default sandbox image. Then create those containers using sandbox instead of the atlas-demo label.

Review

If you successfully followed along with this tutorial, we were able to back up our existing containers by exporting them. We created a new baseline Docker VM image, increasing our storage space from 64GB to 120GB. We removed our existing Docker VM image and let Docker recreate it from the new baseline image. We imported our saved containers as new images. And finally we were able to create new containers from the saved container image, which contained all of our previous settings and configurations.