Initial job has not accepted any resources, whereas resources are available

- Labels: Apache Spark, Apache YARN, Cloudera Manager

Created on 11-29-2016 03:42 PM - edited 08-19-2019 02:52 AM

Hi All,

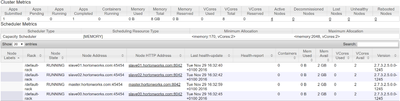

I am not able to submit a Spark job. The error is: YarnScheduler: Initial job has not accepted any resources; check your cluster UI to ensure that workers are registered and have sufficient resources

I submit the application with the following command: spark-submit --class util.Main --master yarn-client --executor-memory 512m --executor-cores 2 my.jar my_config

I installed Apache Ambari version 2.4.1.0 and ResourceManager version 2.7.3.2.5.0.0 on Ubuntu 14.04.

What could be the cause of the issue?

Thanks

Created 11-29-2016 06:12 PM

Your cluster has allocated the resources that you requested for Spark. It looks like you have a backlog of jobs that are already running. Maybe a job is stuck in the queue. Kill all the previous applications and see if the job runs. You can also kill all the YARN applications and resubmit the jobs.

$ yarn application -list

$ yarn application -kill $application_id
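If there are many stuck applications, the two commands above can be chained. The following is only a minimal sketch, assuming the `yarn` CLI is on the PATH and that application IDs look like `application_<digits>_<digits>` (as in the log lines in this thread). The function names `extract_app_ids` and `kill_all_listed` are hypothetical helpers, not part of any Hadoop tooling.

```python
import re
import subprocess

# Assumption: YARN application IDs follow the form application_<timestamp>_<sequence>.
APP_ID_PATTERN = re.compile(r"application_\d+_\d+")


def extract_app_ids(listing):
    """Return the unique application IDs found in the text, in first-seen order."""
    seen = []
    for app_id in APP_ID_PATTERN.findall(listing):
        # The same ID can appear more than once per row (e.g. in a tracking URL).
        if app_id not in seen:
            seen.append(app_id)
    return seen


def kill_all_listed():
    """Run `yarn application -list`, then `yarn application -kill` for each ID."""
    listing = subprocess.run(
        ["yarn", "application", "-list"],
        capture_output=True, text=True, check=True,
    ).stdout
    for app_id in extract_app_ids(listing):
        subprocess.run(["yarn", "application", "-kill", app_id], check=True)
```

Calling `kill_all_listed()` on a node with the YARN client installed would kill every application that `-list` reports, so it is only appropriate on a test cluster where nothing else needs to keep running.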

Created 11-30-2016 09:57 AM

Hi Vedant,

Thanks for the reply. I already executed the two commands above, and YARN lists 0 applications. I then ran the job again, and I see one of two different outcomes:

1. The job hangs on:

INFO Client: Application report for application_1480498999425_0002 (state: ACCEPTED)

2. The job starts (RUNNING status), but when it executes the first Spark job it stops with the following error:

YarnScheduler: Initial job has not accepted any resources; check your cluster UI to ensure that workers are registered and have sufficient resources

Thanks Alessandro

Created 11-30-2016 11:25 PM

I've seen this issue quite often when folks are first setting up their cluster. Make sure you have NodeManagers on all of your data nodes. In addition, check the YARN configuration for the maximum container size. If it is less than what you are requesting, resources will never be allocated. Finally, check the default number of executors. Try specifying --num-executors 2. If you request more resources than your cluster has (more vcores or RAM), you will get this issue.
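To make the container-size check concrete, here is a rough sketch of the arithmetic. It assumes Spark's default YARN memory overhead of max(384 MB, 10% of executor memory) and that YARN rounds each request up to a multiple of `yarn.scheduler.minimum-allocation-mb` (1024 MB is used as an illustrative default). Both can differ by version and scheduler configuration, so treat the numbers as an estimate. The helper names are hypothetical.

```python
import math


def yarn_container_mb(executor_memory_mb, min_allocation_mb=1024,
                      overhead_fraction=0.10, overhead_floor_mb=384):
    """Estimate the container size YARN must grant for one Spark executor."""
    # Assumed Spark default: overhead is 10% of executor memory, at least 384 MB.
    overhead_mb = max(overhead_floor_mb, int(executor_memory_mb * overhead_fraction))
    requested_mb = executor_memory_mb + overhead_mb
    # Assumed YARN behaviour: round up to a multiple of the minimum allocation.
    return math.ceil(requested_mb / min_allocation_mb) * min_allocation_mb


def fits(executor_memory_mb, max_allocation_mb, **kwargs):
    """True if the estimated container does not exceed the maximum container size."""
    return yarn_container_mb(executor_memory_mb, **kwargs) <= max_allocation_mb
```

Under these assumptions, `--executor-memory 512m` from the original command works out to an estimated 1024 MB container, so `fits(512, 1024)` is true, while a 4096 MB executor would not fit under a 4096 MB maximum because of the overhead.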

Created 12-01-2016 11:18 AM

Hi All,

Fixing the following issue also fixed this one:

https://community.hortonworks.com/questions/68989/datanodes-status-not-consistent.html#answer-69461

Regards

Alessandro