Cloudera Community: Support Questions

Installing HDFS Google Cloud Connector

Labels: Apache YARN, Cloudera Manager, HDFS, MapReduce

Created on 06-16-2017 06:57 PM - edited 09-16-2022 04:46 AM

Hi,

I'm looking to ingest a large amount of data from a public Google Cloud Storage (GCS) bucket, but our cluster is currently missing the Google Cloud Storage connector. I thought copying the connector jar to `/opt/cloudera/parcels/CDH/lib/hadoop` would be sufficient, but after running the command below I receive the following error: `No FileSystem for scheme: gs`.

```shell
hdfs dfs -cp gs://gnomad-public/release-170228/gnomad.genomes.r2.0.1.sites.vds /my/local/hdfs/filesystem
```

Are any additional steps beyond this necessary?
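The `No FileSystem for scheme: gs` error means Hadoop could not map the `gs:` scheme to a FileSystem class. Copying the jar is usually only part of the setup: the jar has to be on the classpath of the client that runs `hdfs dfs`, and the connector's installation guide also covers the `core-site.xml` properties (such as `fs.gs.impl`) and credentials it needs. A quick first check is whether the jar appears in the output of `hadoop classpath`. The sketch below assumes the jar's file name contains `gcs-connector`; the helper name `classpath_has` is hypothetical, not part of Hadoop.

```shell
#!/bin/sh
# Hypothetical helper (not part of Hadoop): succeed if any entry of a
# colon-separated classpath string contains the given substring.
classpath_has() {
  printf '%s\n' "$1" | tr ':' '\n' | grep -F -q -- "$2"
}

# On a cluster node with the hadoop CLI on PATH, for example:
#   if classpath_has "$(hadoop classpath)" gcs-connector; then
#     echo "gcs-connector jar is on the client classpath"
#   else
#     echo "gcs-connector jar not found on the client classpath" >&2
#   fi
```

Entries such as `.../lib/*` are wildcards, so a jar picked up through a wildcard will not match by name; on Hadoop releases that support it, `hadoop classpath --glob` expands the wildcards first. For client commands, the `HADOOP_CLASSPATH` environment variable is one way to append a jar, e.g. `export HADOOP_CLASSPATH=/path/to/gcs-connector.jar:$HADOOP_CLASSPATH` (the path is a placeholder).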

Created 06-16-2017 08:08 PM

https://github.com/GoogleCloudPlatform/bigdata-interop/blob/master/gcs/INSTALL.md

Created 06-16-2017 09:13 PM

Appreciate the quick response! I was actually following the guide you posted from the start. In particular, I modified `core-site.xml` in `/etc/hadoop/conf` as shown below. However, when running `hdfs dfs -ls gs://gnomad-public` (a public GCS bucket), I get the following ClassNotFoundException: `java.lang.RuntimeException: java.lang.ClassNotFoundException: Class com.google.cloud.hadoop.fs.gcs.GoogleHadoopFileSystem`

This appears to be caused by the connector jar not being on the classpath, which is odd because I created a symbolic link to it in both `/opt/cloudera/parcels/CDH/lib/hadoop` and `/opt/cloudera/parcels/CDH/lib/hadoop-mapreduce`. (The file itself is in `/opt/cloudera/parcels/CDH/jars`, which is where all of the default connector jars were placed initially; I figured following the default placement would be best practice.)
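Since the jar is reached through symbolic links, it is worth confirming that each link actually resolves to a readable file; a dangling or wrongly relative link looks fine in a directory listing but contributes nothing to the classpath. A minimal sketch, assuming GNU coreutils `readlink` as found on typical Linux hosts; `check_link` and the link names in the usage comment are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: print where a symlink ultimately points and
# succeed only if that target is an existing, readable regular file.
# Relies on GNU `readlink -f` to follow every level of links.
check_link() {
  target=$(readlink -f -- "$1") || return 1
  printf '%s -> %s\n' "$1" "$target"
  [ -f "$target" ] && [ -r "$target" ]
}

# Example (link names are assumptions; adjust to the real jar name):
#   for link in /opt/cloudera/parcels/CDH/lib/hadoop/gcs-connector.jar \
#               /opt/cloudera/parcels/CDH/lib/hadoop-mapreduce/gcs-connector.jar; do
#     check_link "$link" || echo "broken link: $link" >&2
#   done
```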

```xml
<!--GCloud connection modification here!-->
<property>
  <name>fs.gs.impl</name>
  <value>com.google.cloud.hadoop.fs.gcs.GoogleHadoopFileSystem</value>
  <description>The FileSystem for gs: (GCS) URIs.</description>
</property>
<property>
  <name>fs.AbstractFileSystem.gs.impl</name>
  <value>com.google.cloud.hadoop.fs.gcs.GoogleHadoopFS</value>
  <description>
    The AbstractFileSystem for gs: (GCS) URIs. Only necessary for use with Hadoop 2.
  </description>
</property>
<property>
  <name>fs.gs.project.id</name>
  <value>PROJECTNAME</value>
  <description>
    Required. Google Cloud Project ID with access to configured GCS buckets.
  </description>
</property>
<property>
  <name>google.cloud.auth.service.account.enable</name>
  <value>true</value>
  <description>
    Whether to use a service account for GCS authorization. If an email and
    keyfile are provided (see google.cloud.auth.service.account.email and
    google.cloud.auth.service.account.keyfile), then that service account
    will be used. Otherwise the connector will look to see if it is running on
    a GCE VM with some level of GCS access in its service account scope, and
    use that service account.
  </description>
</property>
<property>
  <name>google.cloud.auth.service.account.json.keyfile</name>
  <!-- Hadoop does not strip quotes from values, so the path is unquoted. -->
  <value>/etc/hadoop/conf/KEYFILENAME.json</value>
  <description>
    The JSON key file of the service account used for GCS
    access when google.cloud.auth.service.account.enable is true.
  </description>
</property>
```
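To confirm which values the client actually sees after editing `core-site.xml`, `hdfs getconf -confKey <key>` prints the effective value of a key. Values are used literally, so a path wrapped in quotes (for example `'/etc/hadoop/conf/KEYFILENAME.json'`) is treated as a file name that includes the quote characters. The sketch below wraps that command in a hypothetical `check_conf` helper that also flags empty or quoted values; the getter is passed in as a parameter so the logic can be exercised without a cluster.

```shell
#!/bin/sh
# Hypothetical helper: print KEY=VALUE for each key using a getter command,
# warn on stderr about empty or quoted values, and fail if any were found.
# On a cluster node the getter would be the string "hdfs getconf -confKey";
# $getter is deliberately unquoted so that string splits into a command.
check_conf() {
  getter=$1
  shift
  status=0
  for key in "$@"; do
    value=$($getter "$key" 2>/dev/null)
    printf '%s=%s\n' "$key" "$value"
    case $value in
      "") echo "WARN: $key is not set" >&2; status=1 ;;
      \'*|\"*) echo "WARN: $key is wrapped in quotes" >&2; status=1 ;;
    esac
  done
  return "$status"
}

# Example on a cluster node:
#   check_conf "hdfs getconf -confKey" fs.gs.impl fs.AbstractFileSystem.gs.impl \
#     fs.gs.project.id google.cloud.auth.service.account.json.keyfile
```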

Created 06-16-2017 09:48 PM

Created on 06-18-2017 07:25 AM - edited 06-18-2017 07:29 AM

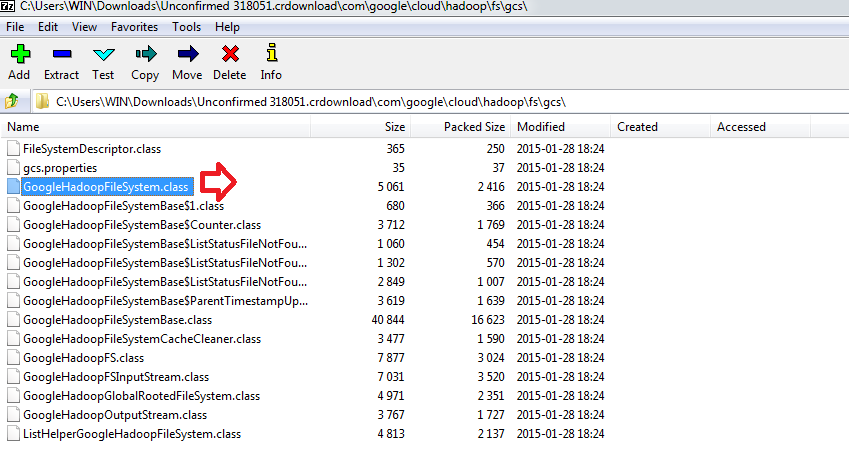

Based on the error you are getting, the jar file is clearly missing a .class file (GoogleHadoopFileSystem).

You can extract the jar using 7-Zip or any similar tool to inspect it.

Fully qualified name of the class that is missing:

`com.google.cloud.hadoop.fs.gcs.GoogleHadoopFileSystem`

The solution is to download a fresh copy of the required jar, extract it to check that it contains all of the .class files, and add it to the classpath. This should resolve the error.
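Instead of extracting the whole jar, its table of contents can be searched for the class file: a class named `com.google.cloud.hadoop.fs.gcs.GoogleHadoopFileSystem` is stored in the jar as `com/google/cloud/hadoop/fs/gcs/GoogleHadoopFileSystem.class`. The sketch below assumes the Info-ZIP `unzip` command is installed (`jar tf` from a JDK lists the same entries); `class_entry` and `jar_has_class` are hypothetical helper names, and the jar path in the usage comment is a placeholder.

```shell
#!/bin/sh
# Hypothetical helper: convert a fully qualified class name into the
# path under which the compiled class is stored inside a jar.
class_entry() {
  printf '%s.class\n' "$(printf '%s' "$1" | tr '.' '/')"
}

# Hypothetical helper: succeed if the jar's listing contains that entry.
# `unzip -l` lists entries without extracting; a truncated or corrupt
# jar makes the listing fail, so the check fails as well.
jar_has_class() {
  unzip -l "$1" 2>/dev/null | grep -F -q -- "$(class_entry "$2")"
}

# Example (jar path is a placeholder):
#   jar=/opt/cloudera/parcels/CDH/jars/gcs-connector.jar
#   cls=com.google.cloud.hadoop.fs.gcs.GoogleHadoopFileSystem
#   if jar_has_class "$jar" "$cls"; then
#     echo "found $(class_entry "$cls") in $jar"
#   else
#     echo "missing $(class_entry "$cls") in $jar" >&2
#   fi
```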

Created 06-18-2017 07:36 AM

Created 06-19-2017 09:38 AM

Went ahead, downloaded a fresh .jar, and followed the steps in the guide posted above: got it working! Appreciate the help.