Issue with NiFi MergeContent: files stay in the queue indefinitely!

Labels: Apache NiFi

Created on 03-10-2017 02:05 PM - edited 08-18-2019 05:51 AM

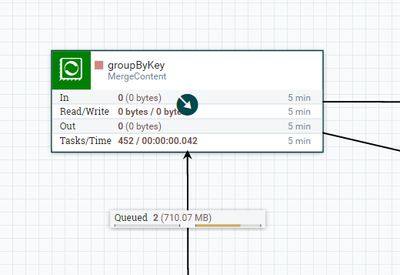

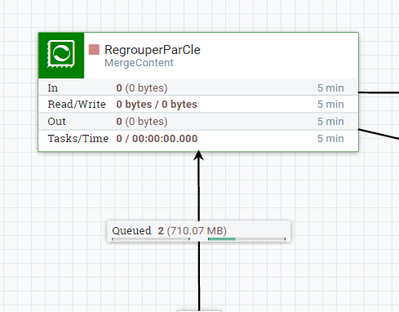

I have a flow that uses the MergeContent processor. Lately I noticed that some FlowFiles stay indefinitely in the queue just before MergeContent. I can't figure out the issue, so I am asking for your help!

This is the part of the flow I am talking about:

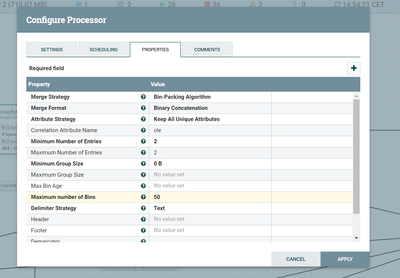

Here is the configuration of the MergeContent processor. It merges on the correlation attribute "cle", and that attribute has the same value on the two FlowFiles in the queue, yet they still don't merge:

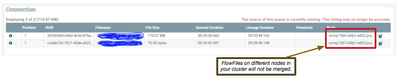

Finally, here is the content of the queue:

Is this due to the size of the first FlowFile (710 MB)? Is there a maximum size for a bin? If so, why isn't the bin merged once it reaches that size?

Thank you for your help!

Created on 03-14-2017 05:48 PM - edited 08-18-2019 05:50 AM

Each node in a NiFi cluster runs its own copy of the dataflow and works on its own set of FlowFiles.

Looking at the screenshot of your queue listing above, you can see that the two FlowFiles are not on the same node. Each node is running its own MergeContent processor, and each one is waiting for another FlowFile to complete its bin. You will need to look earlier in your dataflow at how your data is ingested by your nodes, to make sure that matching sets of files end up on the same node for merging.
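To make the per-node behaviour concrete, here is a minimal Python sketch, not NiFi code. It assumes a simplified model in which each node bins only its own FlowFiles, so the effective bin key is (node, correlation value) rather than the correlation value alone, and a bin merges once it reaches the Minimum Number of Entries (2, as configured in this thread). The function `merge_per_node` and the node and file names are hypothetical, and the model ignores other completion rules such as Max Bin Age.

```python
from collections import defaultdict

# Hypothetical settings mirroring this thread: Minimum and Maximum
# Number of Entries are both 2, binned on the correlation attribute "cle".
MIN_ENTRIES = 2

def merge_per_node(flowfiles):
    """flowfiles: iterable of (node, cle, name) tuples.

    Each node bins only its own FlowFiles, so the bin key is (node, cle),
    not just cle. Returns (merged, stuck) as sorted lists of (key, names).
    """
    bins = defaultdict(list)
    for node, cle, name in flowfiles:
        bins[(node, cle)].append(name)

    merged, stuck = [], []
    for key, names in sorted(bins.items()):
        # A bin is only merged once it holds enough entries.
        (merged if len(names) >= MIN_ENTRIES else stuck).append((key, names))
    return merged, stuck

# Hypothetical example: the large CSV and its partner share cle="abc"
# but were ingested by different nodes.
merged, stuck = merge_per_node([
    ("node1", "abc", "big.csv"),
    ("node2", "abc", "header.csv"),
    ("node1", "xyz", "a.csv"),
    ("node1", "xyz", "b.csv"),
])
print(merged)  # [(('node1', 'xyz'), ['a.csv', 'b.csv'])]
print(stuck)   # [(('node1', 'abc'), ['big.csv']), (('node2', 'abc'), ['header.csv'])]
```

The two "abc" FlowFiles each sit alone in a bin on their own node, which matches the symptom in the queue listing, while a pair that lands on the same node merges normally.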

Thanks,

Matt

Created on 03-10-2017 03:05 PM - edited 08-18-2019 05:51 AM

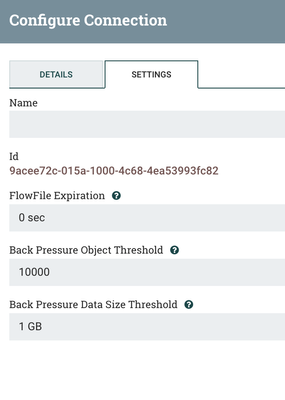

By default, a queue has back pressure thresholds of 1 GB of data or 10,000 FlowFiles.

To change these settings, open the queue's "Configure" dialog and go to the Settings tab. See the attached screenshot.
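As a rough illustration of how the two default thresholds interact, here is a minimal Python sketch of the check. It is a simplified model, not NiFi's implementation: it assumes back pressure engages as soon as either the object count or the data size reaches its threshold, and it treats "1 GB" as 1024³ bytes. The function name `back_pressure_applied` is hypothetical.

```python
MB = 1024 ** 2
GB = 1024 ** 3  # assumption: "1 GB" treated as 1024**3 bytes

# Default thresholds quoted above; each node checks its own copy of the queue.
DEFAULT_OBJECT_THRESHOLD = 10_000
DEFAULT_SIZE_THRESHOLD = 1 * GB

def back_pressure_applied(queued_count, queued_bytes,
                          object_threshold=DEFAULT_OBJECT_THRESHOLD,
                          size_threshold=DEFAULT_SIZE_THRESHOLD):
    """Return True once EITHER threshold is reached; in this model the
    upstream component then stops being scheduled until the queue drains."""
    return queued_count >= object_threshold or queued_bytes >= size_threshold

print(back_pressure_applied(1, 710 * MB))                          # False
print(back_pressure_applied(2, 1400 * MB))                         # True
print(back_pressure_applied(2, 1400 * MB, size_threshold=2 * GB))  # False
```

A single 710 MB FlowFile stays under the default size threshold, so in this model back pressure alone would not explain two FlowFiles sitting unmerged.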

If this helps, please vote for or accept this response.

It is also possible that a queue or processor further downstream is stuck because of these default limits. You would have to raise the thresholds there and let that processor work through its queue before your queue can start to drain. Think of the whole flow as a river with all kinds of streams and obstructions...

Created on 03-13-2017 07:46 AM - edited 08-18-2019 05:51 AM

Hi @Constantin Stanca, I changed the back pressure data size threshold to 2 GB, but the two FlowFiles still don't merge...

Created 03-14-2017 06:10 PM

@Mohammed El Moumni

Queue thresholds are per node. Reaching one causes the queue to stop accepting additional FlowFiles; it does not prevent the downstream processor from processing FlowFiles that are already in that queue.

Had two 700 MB CSV files arrived on one node, the 1 GB threshold would have been exceeded, preventing any additional FlowFiles (including the corresponding 70-byte header files) from entering that queue. In that case you would be stuck, since MergeContent would never have, even on a single node, the files it needs to complete a bin.
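The scenario above can be sketched as a small single-node simulation in Python. This is a simplified model under stated assumptions, not NiFi internals: FlowFiles that MergeContent has binned are assumed to keep counting against the queue's size until their bin merges, a bin merges once it holds 2 entries (as configured in this thread), and FlowFiles that hit back pressure are held upstream rather than dropped. The function `simulate_node`, the correlation values, and the file names are hypothetical.

```python
from collections import defaultdict

MB = 1024 ** 2
GB = 1024 ** 3
ENTRIES_PER_BIN = 2  # Minimum = Maximum Number of Entries = 2, as in this thread

def simulate_node(arrivals, size_threshold=1 * GB):
    """Hypothetical single-node model. arrivals is an ordered list of
    (cle, name, size_bytes). Returns (merged, stuck, held_upstream)."""
    bins = defaultdict(list)  # cle -> [(name, size)] still counted in the queue
    queued_bytes = 0
    merged, held_upstream = [], []
    for cle, name, size in arrivals:
        # Back pressure: once the per-node size threshold is reached,
        # the queue stops accepting new FlowFiles (they wait upstream).
        if queued_bytes >= size_threshold:
            held_upstream.append(name)
            continue
        bins[cle].append((name, size))
        queued_bytes += size
        # Assumption: binned FlowFiles count against the queue until merged.
        if len(bins[cle]) == ENTRIES_PER_BIN:
            entries = bins.pop(cle)
            merged.append([n for n, _ in entries])
            queued_bytes -= sum(s for _, s in entries)
    stuck = [n for entries in bins.values() for n, _ in entries]
    return merged, stuck, held_upstream

arrivals = [
    ("A", "a.csv", 700 * MB),
    ("B", "b.csv", 700 * MB),
    ("A", "a_header.csv", 70),
    ("B", "b_header.csv", 70),
]
print(simulate_node(arrivals))
# ([], ['a.csv', 'b.csv'], ['a_header.csv', 'b_header.csv'])
print(simulate_node(arrivals, size_threshold=2 * GB))
# ([['a.csv', 'a_header.csv'], ['b.csv', 'b_header.csv']], [], [])
```

With the default 1 GB threshold both CSVs wait for headers that can never enter the queue; raising the threshold lets this particular single-node case complete, although it would not help when the matching files are on different nodes.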

Thanks,

Matt

Created 03-10-2017 08:36 PM

If you look at the details of the FlowFiles in the input queue for MergeContent, do you see the correlation attribute on both FlowFiles? Is it possible that, elsewhere in the flow, a FlowFile with the same correlation ID as one of the two FlowFiles in the incoming queue was sent to a failure relationship and dropped from the flow? In the past, I have processed files coming out of one of the Split* processors and hit errors processing one of the fragments. Because of the way I had designed the flow, the fragment with the error was routed via a failure relationship to another processor that terminated that FlowFile, so not all the fragments from the split reached MergeContent. As a result, all the other fragments sat in MergeContent's incoming queue indefinitely.
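One way to check for the situation described above is to compare how many fragments of each split actually reached MergeContent against how many were produced. The Python sketch below assumes the FlowFiles carry the `fragment.identifier`, `fragment.index`, and `fragment.count` attributes that Split* processors typically write; the function `incomplete_fragment_groups` and the sample attribute values are hypothetical, for example something you might run over attributes copied from a queue listing.

```python
from collections import defaultdict

def incomplete_fragment_groups(attribute_maps):
    """attribute_maps: one dict of FlowFile attributes per FlowFile that reached
    MergeContent. Returns {fragment.identifier: (received, expected)} for every
    split whose fragments did not all arrive."""
    received = defaultdict(set)
    expected = {}
    for attrs in attribute_maps:
        frag_id = attrs["fragment.identifier"]
        received[frag_id].add(int(attrs["fragment.index"]))
        expected[frag_id] = int(attrs["fragment.count"])
    return {
        frag_id: (len(indexes), expected[frag_id])
        for frag_id, indexes in received.items()
        if len(indexes) < expected[frag_id]
    }

# Hypothetical example: fragment 1 of "split-1" went to a failure
# relationship and was terminated, so only 2 of 3 fragments arrive.
queue = [
    {"fragment.identifier": "split-1", "fragment.index": "0", "fragment.count": "3"},
    {"fragment.identifier": "split-1", "fragment.index": "2", "fragment.count": "3"},
    {"fragment.identifier": "split-2", "fragment.index": "0", "fragment.count": "1"},
]
print(incomplete_fragment_groups(queue))  # {'split-1': (2, 3)}
```

Any group reported here would wait in the incoming queue indefinitely under a bin that requires every fragment.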

Created 03-13-2017 07:42 AM

Hi @Jeff Storck, the correlation attribute is present on both FlowFiles and its value is the same. I am also sure that only two FlowFiles will ever have a given correlation attribute value. So with my settings (Minimum Number of Entries = 2, Maximum Number of Entries = 2), only those two FlowFiles should merge together. Still, the two FlowFiles in the screenshot stay in the queue indefinitely... I am pretty sure it's a size problem, but I can't figure it out.

Created 03-13-2017 03:06 PM

@Mohammed El Moumni Are other, smaller files merging? I notice in both of your screenshots that the MergeContent processor is stopped, which will prevent files from being merged. Was the processor stopped just to take the screenshots?

Created 03-13-2017 03:19 PM

@Jeff Storck Yes, the processor was stopped just to take the screenshots (I left it running for a day and the two files didn't merge). And yes, smaller files do merge (15 MB files, for example).

Created 03-14-2017 06:17 PM

good eyes @Matt Clarke 🙂