JA017, Long running shell action Oozie workflow failed

Labels: Apache Oozie

Created on 09-04-2022 08:28 PM - edited 09-04-2022 11:23 PM

A shell action in an Oozie workflow that runs for more than 7 days fails with a JA017 error on exit, even when it only runs a sleep command.

We first saw this problem in a shell script that submits a Spark job.

The Spark job succeeded, but the shell action that submitted it failed.

We then tested with a shell action that does nothing but sleep, and we see the same failure.

https://blog.cloudera.com/hadoop-delegation-tokens-explained/

After reading this blog post, I increased dfs.namenode.delegation.token.max-lifetime (hdfs-site.xml) and restarted the NameNode, Oozie, and the YARN ResourceManager, but the workflow still fails in the same way.
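As a rough illustration of the change described above, the sketch below reads dfs.namenode.delegation.token.max-lifetime from an hdfs-site.xml fragment and converts it to days. The property value is in milliseconds. The XML fragment, the 8-day value, and the helper name read_property are assumptions made for illustration, not taken from the cluster in question.

```python
import xml.etree.ElementTree as ET

# Hypothetical hdfs-site.xml fragment; the 8-day value is an assumed example.
HDFS_SITE_XML = """<configuration>
  <property>
    <name>dfs.namenode.delegation.token.max-lifetime</name>
    <value>691200000</value>
  </property>
</configuration>"""

MS_PER_DAY = 24 * 60 * 60 * 1000


def read_property(xml_text, name):
    """Return the value of a Hadoop-style <property> as a string, or None if absent."""
    root = ET.fromstring(xml_text)
    for prop in root.iter("property"):
        if prop.findtext("name") == name:
            return prop.findtext("value")
    return None


max_lifetime_ms = int(
    read_property(HDFS_SITE_XML, "dfs.namenode.delegation.token.max-lifetime")
)
max_lifetime_days = max_lifetime_ms / MS_PER_DAY
print(f"max-lifetime: {max_lifetime_ms} ms = {max_lifetime_days} days")
```

A check like this only confirms what is written in the file that was parsed. Whether the running NameNode has picked the value up is better judged from the maxDate of a freshly issued token.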

When I run the Oozie workflow and look at the logs, I see that it obtains 3 delegation tokens:

RM_DELEGATION_TOKEN, MR_DELEGATION_TOKEN, HDFS_DELEGATION_TOKEN

After I increased dfs.namenode.delegation.token.max-lifetime, the maxDate of HDFS_DELEGATION_TOKEN increased.

The maxDate of the RM_DELEGATION_TOKEN and MR_DELEGATION_TOKEN tokens did not increase.

Why does an authentication problem caused by delegation token expiration occur when the Oozie shell action terminates?

Is increasing dfs.namenode.delegation.token.max-lifetime alone not enough to solve this problem?

How can shell actions be run for long periods of time in Oozie?

Created 09-05-2022 04:49 AM

Hi,

> When you say the workflow runs for more than 7 days, does it run continuously for the entire 7 days and then fail? Can you provide the script you are running?

> Does the shell script work outside of Oozie without issues?

> Please provide the complete error stack trace you are seeing.

Regards,

Chethan YM

Created 09-11-2022 10:52 PM

@coco, has the reply helped resolve your issue? If so, please mark the appropriate reply as the solution, as it will make it easier for others to find the answer in the future. If you are still experiencing the issue, can you provide the information @ChethanYM has requested?

Regards,

Vidya Sargur, Community Manager

Created on 09-13-2022 11:47 PM - edited 09-13-2022 11:52 PM

@VidyaSargur @ChethanYM

The script only executes a sleep command.

# 24 * 7 + 16
for i in {1..184}
do
    sleep 3600 # 1h
done

I changed dfs.namenode.delegation.token.max-lifetime from 7 days to 8 days.

(issueDate=1661416856453, maxDate=1662108056453)

I restarted the NameNode, Oozie, the YARN ResourceManager, and the YARN JobHistory Server.

The workflow still fails.
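The numbers quoted above can be checked with a little arithmetic. The sketch below takes the issueDate and maxDate values from this post (epoch milliseconds) and the loop count from the script. The variable names are mine. It only looks at the HDFS token's maxDate and says nothing about the RM and MR tokens, whose maxDate did not increase.

```python
MS_PER_HOUR = 60 * 60 * 1000
MS_PER_DAY = 24 * MS_PER_HOUR

# Values copied from the token details quoted in this post (epoch milliseconds).
issue_date_ms = 1661416856453
max_date_ms = 1662108056453

# Lifetime of the HDFS delegation token implied by issueDate and maxDate.
token_lifetime_days = (max_date_ms - issue_date_ms) / MS_PER_DAY

# The test script sleeps for one hour, 24 * 7 + 16 times.
script_hours = 24 * 7 + 16
script_runtime_days = script_hours * MS_PER_HOUR / MS_PER_DAY

print(f"token lifetime : {token_lifetime_days} days")
print(f"script runtime : {script_hours} hours = {script_runtime_days:.2f} days")
print(f"script ends before token maxDate: {script_runtime_days < token_lifetime_days}")
```

By this arithmetic the 8-day setting did take effect for the HDFS token, and the 184-hour script finishes before that token's maxDate.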

Oozie log

WARN org.apache.oozie.action.hadoop.ShellActionExecutor: SERVER[*****] USER[develop] GROUP[-] TOKEN[] APP[sleep7d] JOB[0000003-220825173452344-oozie-oozi-W] ACTION[0000003-220825173452344-oozie-oozi-W@shell-eb25] Exception in check(). Message[JA017: Could not lookup launched hadoop Job ID [job_1661416633783_0003] which was associated with action [0000003-220825173452344-oozie-oozi-W@shell-eb25]. Failing this action!]

org.apache.oozie.action.ActionExecutorException: JA017: Could not lookup launched hadoop Job ID [job_1661416633783_0003] which was associated with action [0000003-220825173452344-oozie-oozi-W@shell-eb25]. Failing this action!

at org.apache.oozie.action.hadoop.JavaActionExecutor.check(JavaActionExecutor.java:1497)

at org.apache.oozie.command.wf.ActionCheckXCommand.execute(ActionCheckXCommand.java:182)

at org.apache.oozie.command.wf.ActionCheckXCommand.execute(ActionCheckXCommand.java:56)

at org.apache.oozie.command.XCommand.call(XCommand.java:286)

at org.apache.oozie.service.CallableQueueService$CompositeCallable.call(CallableQueueService.java:332)

at org.apache.oozie.service.CallableQueueService$CompositeCallable.call(CallableQueueService.java:261)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at org.apache.oozie.service.CallableQueueService$CallableWrapper.run(CallableQueueService.java:179)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

YARN log

ERROR LogAggregationService

Failed to setup application log directory for application_......

org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.security.token.SecretManager$InvalidToken): token (token for develop: HDFS_DELEGATION_TOKEN owner=develop, renewer=yarn, realUser=oozie/*****, issueDate=1661416856453, maxDate=1662108056453, sequenceNumber=166609, masterKeyId=1547) can't be found in cache

at org.apache.hadoop.ipc.Client.call(Client.java:1504)

at org.apache.hadoop.ipc.Client.call(Client.java:1441)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:230)

at com.sun.proxy.$Proxy27.getFileInfo(Unknown Source)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.getFileInfo(ClientNamenodeProtocolTranslatorPB.java:786)

at sun.reflect.GeneratedMethodAccessor2.invoke(Unknown Source)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:258)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:104)

at com.sun.proxy.$Proxy28.getFileInfo(Unknown Source)

at org.apache.hadoop.hdfs.DFSClient.getFileInfo(DFSClient.java:2167)

at org.apache.hadoop.hdfs.DistributedFileSystem$20.doCall(DistributedFileSystem.java:1265)

at org.apache.hadoop.hdfs.DistributedFileSystem$20.doCall(DistributedFileSystem.java:1261)

at org.apache.hadoop.fs.FileSystemLinkResolver.resolve(FileSystemLinkResolver.java:81)

at org.apache.hadoop.hdfs.DistributedFileSystem.getFileStatus(DistributedFileSystem.java:1277)

at org.apache.hadoop.yarn.server.nodemanager.containermanager.logaggregation.LogAggregationService.checkExists(LogAggregationService.java:265)

at org.apache.hadoop.yarn.server.nodemanager.containermanager.logaggregation.LogAggregationService.access$100(LogAggregationService.java:68)

at org.apache.hadoop.yarn.server.nodemanager.containermanager.logaggregation.LogAggregationService$1.run(LogAggregationService.java:293)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1920)

at org.apache.hadoop.yarn.server.nodemanager.containermanager.logaggregation.LogAggregationService.createAppDir(LogAggregationService.java:278)

at org.apache.hadoop.yarn.server.nodemanager.containermanager.logaggregation.LogAggregationService.initAppAggregator(LogAggregationService.java:384)

at org.apache.hadoop.yarn.server.nodemanager.containermanager.logaggregation.LogAggregationService.initApp(LogAggregationService.java:337)

at org.apache.hadoop.yarn.server.nodemanager.containermanager.logaggregation.LogAggregationService.handle(LogAggregationService.java:463)

at org.apache.hadoop.yarn.server.nodemanager.containermanager.logaggregation.LogAggregationService.handle(LogAggregationService.java:68)

at org.apache.hadoop.yarn.event.AsyncDispatcher.dispatch(AsyncDispatcher.java:182)

at org.apache.hadoop.yarn.event.AsyncDispatcher$1.run(AsyncDispatcher.java:109)

at java.lang.Thread.run(Thread.java:745)
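To make the token details in the InvalidToken message easier to read, the fields can be pulled out with a small parser. This is a sketch only: the regular expressions are my own guess at the message layout, based on the single line quoted above, and parse_token and to_utc are hypothetical helper names.

```python
import re
from datetime import datetime, timezone

# The InvalidToken message as quoted in the YARN log above.
LOG_LINE = (
    "token (token for develop: HDFS_DELEGATION_TOKEN owner=develop, renewer=yarn, "
    "realUser=oozie/*****, issueDate=1661416856453, maxDate=1662108056453, "
    "sequenceNumber=166609, masterKeyId=1547) can't be found in cache"
)


def parse_token(line):
    """Extract the token kind and its key=value fields from an InvalidToken message."""
    kind = re.search(r"token for \S+: (\w+)", line)
    fields = dict(re.findall(r"(\w+)=([^,)\s]+)", line))
    fields["kind"] = kind.group(1) if kind else None
    return fields


def to_utc(epoch_ms):
    """Convert epoch milliseconds to a timezone-aware UTC datetime."""
    return datetime.fromtimestamp(int(epoch_ms) / 1000, tz=timezone.utc)


token = parse_token(LOG_LINE)
issued = to_utc(token["issueDate"])
expires = to_utc(token["maxDate"])

print(token["kind"], "renewer:", token["renewer"], "realUser:", token["realUser"])
print("issued :", issued.isoformat())
print("maxDate:", expires.isoformat())
```

Comparing the printed maxDate with the timestamp of the log entry shows whether the token had already passed its maximum lifetime when the NodeManager tried to use it.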

Created on 09-14-2022 07:30 PM - edited 09-14-2022 07:54 PM

I tried increasing the max lifetime of the HDFS delegation token.

I also found another approach in the CDP documentation.

https://docs.cloudera.com/runtime/7.2.9/yarn-security/topics/yarn-long-running-applications.html

This feature was added in YARN-2704 and released in Hadoop 2.6.0.

https://issues.apache.org/jira/browse/YARN-2704

Our cluster runs CDH 5.14.2, which uses Hadoop 2.6.0.

Our cluster has the following settings:

YARN:

yarn.resourcemanager.proxy-user-privileges.enabled: checked (true)

HDFS NameNode:

hadoop.proxyuser.yarn.hosts: *

hadoop.proxyuser.yarn.groups: *

With this feature, the workflow should succeed, because a new token is created when the delegation token expires.

So why does the workflow still fail once dfs.namenode.delegation.token.max-lifetime (7 days) has elapsed?

Created 09-15-2022 02:53 AM

Hi @coco

If you are running the job from Hue, can you follow the steps below?

1. Log in to Hue.

2. Go to Workflows -> Editors -> Workflows.

3. Open the workflow to edit it.

4. In the left-hand pane, click 'Properties'.

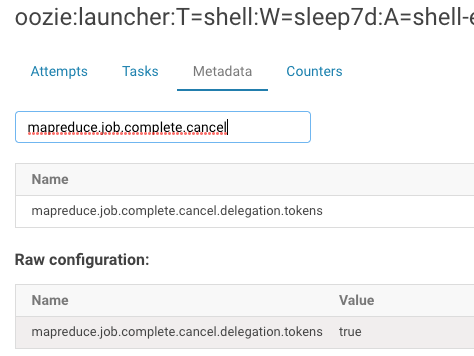

5. Under the 'Hadoop Job Properties' section, enter 'mapreduce.job.complete.cancel.delegation.tokens' in the Name box and 'true' in the Value box.

6. Save the workflow and submit it.

If you are running the job from a terminal, add the above property to the configuration section of the workflow, then rerun it and see if it helps.

If this works please accept it as a solution.

Regards,

Chethan YM

Created on 09-15-2022 08:11 PM - edited 09-15-2022 08:23 PM

@ChethanYM

Please explain why we need to set 'mapreduce.job.complete.cancel.delegation.tokens' to 'TRUE' in our case.

We checked the workflow we tested previously.

We did not set this property, but it already appears as 'TRUE' in the job's metadata in Hue.

It seems to be the default value. Do I still have to set it as a property in the workflow as you said?

Created 09-16-2022 03:16 AM

Hi,

Below are the suspected causes of this issue:

https://issues.apache.org/jira/browse/YARN-3055

https://issues.apache.org/jira/browse/YARN-2964

Yes, you can set that parameter at the workflow level and test.

Regards,

Chethan YM

Created 09-19-2022 05:39 PM

I'm looking at the YARN-2964 and YARN-3055 patches. They appear to fix a bug where the token is canceled when one app in the workflow completes, and the other apps do not renew the token.

I still don't understand how this bug relates to setting mapreduce.job.complete.cancel.delegation.tokens to true.

Could you please explain the behavior you expect when mapreduce.job.complete.cancel.delegation.tokens is set to true?

Shouldn't the setting be false to prevent the token from being canceled?

Created 09-29-2022 07:45 AM

I set dfs.namenode.delegation.token.max-lifetime in YARN (yarn-site.xml, mapred-site.xml, core-site.xml) and in HDFS (hdfs-site.xml), and increased it to 8 days.

Test #1: the workflow ran for 7 days without any additional settings and ended successfully.

Test #2: the workflow ran for 8 days with 'mapreduce.job.complete.cancel.delegation.tokens' set to 'TRUE', but ended in failure.