Support Questions

- Cloudera Community

- Support

- Support Questions

- JobHistory Server Fail to start: Command aborted b...

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

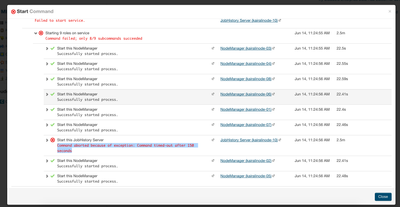

JobHistory Server Fail to start: Command aborted because of exception: Command timed-out after 150 s

- Labels: Apache YARN, Cloudera Manager, MapReduce

Created on 06-14-2017 04:04 AM - edited 09-16-2022 04:45 AM

Hello everyone,

I am using Cloudera Express 5.5.1 to manage an 8-node cluster.

I was trying to run a new Hadoop job when it failed because of a space issue. I got the following error:

"No space available in any of the local directories."

After that, I decided to restart the YARN/MapReduce service to check whether the problem had gone away. However, I can restart every role except the JobHistory Server, which fails with a timeout exception. I cannot find any other clue about the issue, as nothing is shown in any log.

Any clue? What should I do?

Many thanks in advance.

Created on 06-14-2017 05:12 AM - edited 06-14-2017 05:13 AM

Well, from the error you have shared, it seems that the disks YARN uses for its local working directories are full.

Make some room before restarting the services.

Created 06-14-2017 05:19 AM

Hi Mathieu,

Thank you for your response.

The JobHistory service is running on the Headnode, which seems to have plenty of space available according to df -Th output:

df -Th

| Filesystem | Type | Size | Used | Avail | Use% | Mounted on |
|---|---|---|---|---|---|---|
| /dev/sda1 | ext4 | 164G | 66G | 90G | 43% | / |
| none | tmpfs | 4.0K | 0 | 4.0K | 0% | /sys/fs/cgroup |
| udev | devtmpfs | 6.9G | 4.0K | 6.9G | 1% | /dev |
| tmpfs | tmpfs | 1.4G | 776K | 1.4G | 1% | /run |
| none | tmpfs | 5.0M | 0 | 5.0M | 0% | /run/lock |
| none | tmpfs | 6.9G | 0 | 6.9G | 0% | /run/shm |
| none | tmpfs | 100M | 0 | 100M | 0% | /run/user |
| cm_processes | tmpfs | 6.9G | 16M | 6.9G | 1% | /run/cloudera-scm-agent/process |

Also, I am having the same issue with other services (Oozie and YARN/ResourceManager).

Created on 06-14-2017 05:36 AM - edited 06-14-2017 05:37 AM

The space error you shared comes from the worker nodes, not from the head node.

Check that first, before investigating the JobHistory Server issue.

Only then look into why the JobHistory Server failed to restart.

Open the role log file and find the real error.

If several roles on the same host show the same problem, you might want to restart the Cloudera Manager agent on that node. I remember seeing commands time out when the agent was not working properly.
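To make the "find the real error" step concrete, here is a minimal sketch that pulls the most recent ERROR and FATAL records, together with their stack traces, out of a role log. It assumes the usual log4j-style line prefix (`yyyy-MM-dd HH:mm:ss,SSS LEVEL ...`); the function name and the pattern are illustrative, not part of Cloudera Manager, and the log path depends on your installation.

```python
import re

# Assumption: each log record starts with a log4j-style timestamp,
# e.g. "2017-06-14 05:00:01,002 ERROR some.Class: message".
RECORD_START = re.compile(r"^\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2},\d{3} ")
SEVERE_START = re.compile(
    r"^\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2},\d{3} (ERROR|FATAL) "
)


def last_severe_entries(lines, limit=5):
    """Return the last `limit` ERROR/FATAL records, stack traces included."""
    if limit <= 0:
        return []
    entries = []
    current = None  # lines of the severe record being collected, if any
    for line in lines:
        line = line.rstrip("\n")
        if RECORD_START.match(line):
            # A new record begins; keep it only if it is ERROR or FATAL.
            if SEVERE_START.match(line):
                current = [line]
                entries.append(current)
            else:
                current = None
        elif current is not None:
            # Continuation line (typically a stack trace) of a severe record.
            current.append(line)
    return ["\n".join(entry) for entry in entries[-limit:]]
```

It could be used by opening the JobHistory Server role log (the path varies by setup) and passing the file object as `lines`, then printing each returned entry.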

Created 06-15-2017 02:12 PM

The issue with the jobs is that, on the worker nodes, the yarn.nodemanager.local-dirs directories (there can be more than one) do not have enough space. Check your config, and then check the free space in those directories on each worker node.
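As a rough sketch of that check, the following reads the yarn.nodemanager.local-dirs value from the text of a yarn-site.xml file and reports how full each listed directory's filesystem is. The function names and the 90% threshold are assumptions chosen for illustration; the NodeManager has its own disk health settings, and the location of the effective yarn-site.xml depends on how the cluster is deployed. The percentage is computed as used/total, so it can differ slightly from the Use% column of df.

```python
import shutil
import xml.etree.ElementTree as ET

LOCAL_DIRS_KEY = "yarn.nodemanager.local-dirs"


def local_dirs_from_yarn_site(xml_text):
    """Return the directories listed under yarn.nodemanager.local-dirs."""
    root = ET.fromstring(xml_text)
    for prop in root.iter("property"):
        if (prop.findtext("name") or "").strip() == LOCAL_DIRS_KEY:
            value = prop.findtext("value") or ""
            # The value is a comma-separated list of paths.
            return [d.strip() for d in value.split(",") if d.strip()]
    return []


def used_percent(path):
    """Percentage of the filesystem holding `path` that is in use."""
    usage = shutil.disk_usage(path)
    if usage.total == 0:
        return 0.0
    return 100.0 * usage.used / usage.total


def report(dirs, threshold=90.0):
    """Return (dir, used_percent or None, status) for each directory.

    `threshold` is an illustrative cut-off, not a value read from YARN.
    """
    rows = []
    for d in dirs:
        try:
            pct = used_percent(d)
        except OSError as exc:
            rows.append((d, None, f"unreadable: {exc}"))
            continue
        rows.append((d, pct, "FULL" if pct >= threshold else "ok"))
    return rows
```

Run on each worker node, one would read the node's yarn-site.xml into a string, pass it to `local_dirs_from_yarn_site`, and print the rows returned by `report`. Any directory flagged FULL or unreadable is a candidate for the "No space available in any of the local directories" error.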